Sbchinn 1.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Craters on (101955) Bennu's Boulders

EPSC Abstracts Vol. 14, EPSC2020-502, 2020, updated on 30 Sep 2021 https://doi.org/10.5194/epsc2020-502 Europlanet Science Congress 2020 © Author(s) 2021. This work is distributed under the Creative Commons Attribution 4.0 License.

Craters on (101955) Bennu’s boulders

Ronald-Louis Ballouz1, Kevin Walsh2, William Bottke2, Daniella DellaGiustina1, Manar Al Asad3, Patrick Michel4, Chrysa Avdellidou4, Marco Delbo4, Erica Jawin5, Erik Asphaug1, Olivier Barnouin6, Carina Bennett1, Edward Bierhaus7, Harold Connolly1,8, Michael Daly9, Terik Daly6, Dathon Golish1, Jamie Molaro10, Maurizio Pajola11, Bashar Rizk1, and the OSIRIS-REx mini-crater team*

1Lunar and Planetary Laboratory, University of Arizona, Tucson, AZ, USA 2Southwest Research Institute, Boulder, CO, USA 3University of British Columbia, Vancouver, Canada 4Laboratoire Lagrange, Université Côte d’Azur, Observatoire de la Côte d’Azur, CNRS, Nice, France 5Smithsonian Institution, Washington, DC, USA 6The Johns Hopkins University Applied Physics Laboratory, Laurel, MD, USA 7Lockheed Martin Space, Littleton, CO, USA 8Dept. of Geology, Rowan University, Glassboro, NJ, USA 9York University, Toronto, Canada 10Planetary Science Institute, Tucson, AZ, USA 11INAF-Astronomical Observatory of Padova, Padova, Italy *A full list of authors appears at the end of the abstract

Introduction: The OSIRIS-REx mission’s observation campaigns [1] using the PolyCam instrument, part of the OSIRIS-REx Camera Suite (OCAMS) [2, 3], have returned images of the surface of near-Earth asteroid (NEA) (101955) Bennu. These unprecedented-resolution images resolved cavities on Bennu’s boulders (Fig. 1) that are near-circular in shape and have diameters ranging from 5 cm to 5 m.

SPACE RESEARCH in POLAND Report to COMMITTEE

SPACE RESEARCH IN POLAND Report to COMMITTEE ON SPACE RESEARCH (COSPAR) 2020 Space Research Centre Polish Academy of Sciences and The Committee on Space and Satellite Research PAS ISBN 978-83-89439-04-8 First edition © Copyright by Space Research Centre Polish Academy of Sciences and The Committee on Space and Satellite Research PAS Warsaw, 2020 Editors: Iwona Stanisławska, Aneta Popowska

1. SATELLITE GEODESY Compiled by Mariusz Figurski, Grzegorz Nykiel, Paweł Wielgosz, and Anna Krypiak-Gregorczyk

Introduction This part of the Polish National Report concerns research on Satellite Geodesy performed in Poland from 2018 to 2020. The activities of Polish institutions in the field of satellite geodesy and navigation focus on several main fields:
• global and regional GPS and SLR measurements in the frame of International GNSS Service (IGS), International Laser Ranging Service (ILRS), International Earth Rotation and Reference Systems Service (IERS), European Reference Frame Permanent Network (EPN),
• Polish geodetic permanent network – ASG-EUPOS,
• modeling of ionosphere and troposphere,
• practical utilization of satellite methods in local geodetic applications,
• geodynamic study,
• metrological control of Global Navigation Satellite System (GNSS) equipment,
• use of gravimetric satellite missions,
• application of GNSS in overland, maritime and air navigation,
• multi-GNSS application in geodetic studies.

Bennu: Implications for Aqueous Alteration History

RESEARCH ARTICLES Cite as: H. H. Kaplan et al., Science 10.1126/science.abc3557 (2020).

Bright carbonate veins on asteroid (101955) Bennu: Implications for aqueous alteration history

H. H. Kaplan1,2*, D. S. Lauretta3, A. A. Simon1, V. E. Hamilton2, D. N. DellaGiustina3, D. R. Golish3, D. C. Reuter1, C. A. Bennett3, K. N. Burke3, H. Campins4, H. C. Connolly Jr.5,3, J. P. Dworkin1, J. P. Emery6, D. P. Glavin1, T. D. Glotch7, R. Hanna8, K. Ishimaru3, E. R. Jawin9, T. J. McCoy9, N. Porter3, S. A. Sandford10, S. Ferrone11, B. E. Clark11, J.-Y. Li12, X.-D. Zou12, M. G. Daly13, O. S. Barnouin14, J. A. Seabrook13, H. L. Enos3

1NASA Goddard Space Flight Center, Greenbelt, MD, USA. 2Southwest Research Institute, Boulder, CO, USA. 3Lunar and Planetary Laboratory, University of Arizona, Tucson, AZ, USA. 4Department of Physics, University of Central Florida, Orlando, FL, USA. 5Department of Geology, School of Earth and Environment, Rowan University, Glassboro, NJ, USA. 6Department of Astronomy and Planetary Sciences, Northern Arizona University, Flagstaff, AZ, USA. 7Department of Geosciences, Stony Brook University, Stony Brook, NY, USA. 8Jackson School of Geosciences, University of Texas, Austin, TX, USA. 9Smithsonian Institution National Museum of Natural History, Washington, DC, USA. 10NASA Ames Research Center, Mountain View, CA, USA. 11Department of Physics and Astronomy, Ithaca College, Ithaca, NY, USA. 12Planetary Science Institute, Tucson, AZ, USA. 13Centre for Research in Earth and Space Science, York University, Toronto, Ontario, Canada. 14Johns Hopkins University Applied Physics Laboratory, Laurel, MD, USA. *Corresponding author. Email: [email protected]

The composition of asteroids and their connection to meteorites provide insight into geologic processes that occurred in the early Solar System.

Roles for Socially-Engaged Philosophy of Science in Environmental Policy

Roles for Socially-Engaged Philosophy of Science in Environmental Policy Kevin C. Elliott Lyman Briggs College, Department of Fisheries and Wildlife, and Department of Philosophy, Michigan State University Introduction The philosophy of science has much to contribute to the formulation of public policy. Contemporary policy making draws heavily on scientific information, whether it be about the safety and effectiveness of medical treatments, the pros and cons of different economic policies, the severity of environmental problems, or the best strategies for alleviating inequality and other social problems. When science becomes relevant to public policy, however, it often becomes highly politicized, and figures on opposing sides of the political spectrum draw on opposing bodies of scientific information to support their preferred conclusions.1 One has only to look at contemporary debates over climate change, vaccines, and genetically modified foods to see how these debates over science can complicate policy making.2 When science becomes embroiled in policy debates, questions arise about who to trust and how to evaluate the quality of the available scientific evidence. For example, historians have identified a number of cases where special interest groups sought to influence policy by amplifying highly questionable scientific claims about public-health and environmental issues like tobacco smoking, climate change, and industrial pollution.3 Determining how best to respond to these efforts is a very important question that cuts across multiple -

Breaking the Siege: Guidelines for Struggle in Science Brian Martin Faculty of Arts, University of Wollongong, NSW 2522 [email protected]

Breaking the siege: guidelines for struggle in science Brian Martin Faculty of Arts, University of Wollongong, NSW 2522 http://www.bmartin.cc/ [email protected]

ABSTRACT When scientists come under attack, it is predictable that the attackers will use methods to minimise public outrage over the attack, including covering up the action, devaluing the target, reinterpreting what is happening, using official processes to give an appearance of justice, and intimidating people involved. To be effective in countering attacks, it is valuable to challenge each of these methods, namely by exposing actions, validating targets, interpreting actions as unfair, mobilising support and not relying on official channels, and standing up to intimidation. On a wider scale, science is constantly under siege from vested interests, especially governments and corporations wanting to use scientists and their findings to serve their agendas at the expense of the public interest. To challenge this system of institutionalised bias, the same sorts of methods can be used.

Key words: science; dissent; methods of attack; methods of resistance; vested interests

Scientists and science under siege

In 1969, Clyde Manwell was appointed to the second chair of zoology at the University of Adelaide. By present-day terminology he was an environmentalist, but at the time this term was little known and taking an environmental stand was uncommon for a scientist. Many senior figures in government, business and universities saw such stands as highly threatening.

... environmental issues?” (Wilson and Barnes 1995), and less than one in five said no. Numerous environmental scientists have come under attack because of their research or speaking out about it (Kuehn 2004). On some topics, such as nuclear power and fluoridation, it can be very risky for scientists to take a view contrary ...

The Controversy Over Climate Change in the Public Sphere

THE CONTROVERSY OVER CLIMATE CHANGE IN THE PUBLIC SPHERE by WILLIAM MOSLEY-JENSEN (Under the Direction of Edward Panetta)

ABSTRACT The scientific consensus on climate change is not recognized by the public. This is due to many related factors, including the Bush administration’s science policy, the reporting of the controversy by the media, the public’s understanding of science as dissent, and the differing standards of argumentation in science and the public sphere. Al Gore’s An Inconvenient Truth was produced in part as a response to the acceptance of climate dissent by the Bush administration and achieved a rupture of the public sphere by bringing the technical issue forward for public deliberation. The rupture has been sustained by dissenters through the use of argument strategies designed to foster controversy at the expense of deliberation. This makes it incumbent upon rhetorical scholars to theorize the closure of controversy and policymakers to recognize that science will not always have the answers.

INDEX WORDS: Al Gore, Argument fields, Argumentation, An Inconvenient Truth, Climate change, Climategate, Controversy, Public sphere, Technical sphere

B.A., The University of Wyoming, 2008. A Thesis Submitted to the Graduate Faculty of The University of Georgia in Partial Fulfillment of the Requirements for the Degree MASTER OF ARTS, ATHENS, GEORGIA, 2010. © 2010 William Mosley-Jensen. All Rights Reserved.

Major Professor: Edward Panetta. Committee: Thomas Lessl, Roger Stahl. Electronic Version Approved: Maureen Grasso, Dean of the Graduate School, The University of Georgia, May 2010.

ACKNOWLEDGEMENTS There are many people that made this project possible through their unwavering support and love.

Dissent and Heresy in Medicine: Models, Methods, and Strategies Brian Martin*

Social Science & Medicine 58 (2004) 713–725

Dissent and heresy in medicine: models, methods, and strategies

Brian Martin* Science, Technology and Society, University of Wollongong, Wollongong NSW 2522, Australia

Abstract Understanding the dynamics of dissent and heresy in medicine can be aided by the use of suitable frameworks. The dynamics of the search for truth vary considerably depending on whether the search is competitive or cooperative and on whether truth is assumed to be unitary or plural. Insights about dissent and heresy in medicine can be gained by making comparisons to politics and religion. To explain adherence to either orthodoxy or a challenging view, partisans use a standard set of explanations; social scientists use these plus others, especially symmetrical analyses. There is a wide array of methods by which orthodoxy maintains its domination and marginalises challengers. Finally, challengers can adopt various strategies in order to gain a hearing. © 2003 Elsevier Science Ltd. All rights reserved.

Keywords: Dissent; Heresy; Orthodoxy; Medical knowledge; Medical research; Strategies

Introduction The conventional view is that the human immunodeficiency virus, HIV, is responsible for AIDS. But for many years, a few scientists have espoused the incompatible view that HIV is harmless and is not responsible for AIDS (Duesberg, 1996; Maggiore, 1999). The issue came to world attention in 2001 when South African President Thabo Mbeki invited a number of the so-called HIV/AIDS dissidents to join an advisory panel.

... challenges to it, there is a tremendous variation in ideas, support, visibility and outcome. What is the best term for referring to a challenge to orthodoxy? Wolpe (1994) offers an illuminating typology of internal challenges. One type of challenge is to “knowledge products” such as disease prognoses that question current knowledge (namely, what are considered to be facts) while operating within conventional assumptions about scientific meth-

Biography Authored Books Edited Books Journal Articles

Kirstie Fryirs Faculty of Science and Engineering Email: [email protected] Phone: +61 2 9850 8367 Biography Kirstie's work focuses on fluvial geomorphology and river management. Her research focusses on how rivers work, how they have evolved, how they have been impacted by anthropogenic disturbance, how catchment sediment budgets and (dis)connectivity work, and how to best use geomorphology in river management practice. She is probably best known as the co-developer of the River Styles Framework and portfolio of professional development short courses (see www.riverstyles.com). The River Styles Framework is a geomorphic approach for the analysis of rivers that includes assessment of river type and behaviour, physical condition and recovery potential. These analyses are used to develop prioritisation and decision support systems in river management practice. Uptake of the River Styles Framework has now occurred in many places on six continents. Kirstie has strong domestic and international collaborations in both academia and industry. She has worked for many years on various river science and management projects as part of multi-disciplinary, collaborative teams that include ecologists, hydrologists, social scientists, practitioners and citizens. Kirstie has also been lucky enough to work in Antarctica for two summer seasons, undertaking research on heavy metal contamination at Casey and Wilkes stations. Kirstie has co-written and co-edited three books titled "Geomorphology and River Management" (Blackwell, 2005), "River Futures" (Island Press, 2008) and "Geomorphic Analysis of River Systems: An Approach to Reading the Landscape" (Wiley, 2013). She holds several research, teaching and postgraduate supervision awards including the international Gordon Warwick medal for excellence in research. -

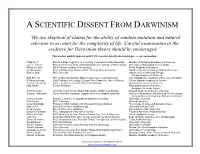

Scientists Dissent List

A SCIENTIFIC DISSENT FROM DARWINISM “We are skeptical of claims for the ability of random mutation and natural selection to account for the complexity of life. Careful examination of the evidence for Darwinian theory should be encouraged.” This was last publicly updated April 2020. Scientists listed by doctoral degree or current position.

Philip Skell*, Emeritus, Evan Pugh Prof. of Chemistry, Pennsylvania State University; Member of the National Academy of Sciences
Lyle H. Jensen*, Professor Emeritus, Dept. of Biological Structure & Dept. of Biochemistry, University of Washington; Fellow AAAS
Maciej Giertych, Full Professor, Institute of Dendrology, Polish Academy of Sciences
Lev Beloussov, Prof. of Embryology, Honorary Prof., Moscow State University; Member, Russian Academy of Natural Sciences
Eugene Buff, Ph.D. Genetics, Institute of Developmental Biology, Russian Academy of Sciences
Emil Palecek, Prof. of Molecular Biology, Masaryk University; Leading Scientist, Inst. of Biophysics, Academy of Sci., Czech Republic
K. Mosto Onuoha, Shell Professor of Geology & Deputy Vice-Chancellor, Univ. of Nigeria; Fellow, Nigerian Academy of Science
Ferenc Jeszenszky, Former Head of the Center of Research Groups, Hungarian Academy of Sciences
M.M. Ninan, Former President, Hindustan Academy of Science, Bangalore University (India)
Denis Fesenko, Junior Research Fellow, Engelhardt Institute of Molecular Biology, Russian Academy of Sciences (Russia)
Sergey I. Vdovenko, Senior Research Assistant, Department of Fine Organic Synthesis, Institute of Bioorganic Chemistry and Petrochemistry, Ukrainian National Academy of Sciences (Ukraine)
Henry Schaefer, Director, Center for Computational Quantum Chemistry, University of Georgia
Paul Ashby, Ph.D. Chemistry, Harvard University
Israel Hanukoglu, Professor of Biochemistry and Molecular Biology, Chairman, The College of Judea and Samaria (Israel)
Alan Linton, Emeritus Professor of Bacteriology, University of Bristol (UK)
Dean Kenyon, Emeritus Professor of Biology, San Francisco State University
David W.

New Jersey Student Learning Assessment–Science (NJSLA–S)

New Jersey Student Learning Assessment–Science (NJSLA–S), Grade 11: Parent, Student, and Teacher Information Guide. Spring 2020. Copyright © 2020 by the New Jersey Department of Education. All rights reserved.

Table of Contents

Parent Information
Description of the NJ Student Learning Assessment–Science (NJSLA–S)
The NJSLA–S Experience
1. Who will be tested?
2. What types of questions are on the NJSLA–S?
3. How can a child prepare for the NJSLA–S?
4. How long is the 2020 test?
5. How fair is the NJSLA–S?
6. How can I receive more information about the NJSLA–S?
Student Information

Oreskes Testimony 29April2015

Excerpt from Chapter 4 of Oreskes, Naomi and Erik M. Conway, 2010. Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming. (New York: Bloomsbury Press.), reproduced with permission.

Constructing a Counter-narrative

It took time to work out the complex science of ozone depletion, but scientists, with support from the U.S. government and international scientific organizations, did it. Regulations were put in place based on science, and adjusted in response to advances in it. But running in parallel to this were persistent efforts to challenge the science. Industry representatives and other skeptics doubted that ozone depletion was real, or argued that if it was real, it was inconsequential, or caused by volcanoes. During the early 1980s, anti-environmentalism had taken root in a network of conservative and libertarian “think tanks” in Washington. These think tanks, which included the Cato Institute, the American Enterprise Institute, Heritage Foundation, the Competitive Enterprise Institute, and, of course, the Marshall Institute, variously promoted business interests and “free market” economic policies, and the rollback of environmental, health, safety, and labor protections. They were supported by donations from businessmen, corporations, and conservative foundations. One aspect of the effort to cast doubt on ozone depletion was the construction of a counter-narrative that depicted ozone depletion as natural variation that was being cynically exploited by a corrupt, self-interested, and extremist scientific community to get more money for their research. One of the first people to make this argument was a man who had been a Fellow at the Heritage Foundation in the early 1980s, and whom we have already met: S.

Dissertation Final

UNIVERSITY OF CALIFORNIA SANTA CRUZ

EPISTEMIC INJUSTICE, RESPONSIBILITY AND THE RISE OF PSEUDOSCIENCE: CONTEXTUALLY SENSITIVE DUTIES TO PRACTICE SCIENTIFIC LITERACY

A dissertation submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in PHILOSOPHY by Abraham J. Joyal, June 2020

The Dissertation of Abraham J. Joyal is approved: Professor Daniel Guevara, chair; Professor Abraham Stone; Professor Jonathan Ellis; Quentin Williams, Acting Vice Provost and Dean of Graduate Studies

Table Of Contents

List of Figures
Abstract
Chapter One: That Pseudoscience Follows From Bad Epistemic Luck and Epistemic Injustice
Chapter Two: The Critical Engagement Required to Overcome Epistemic Obstacles
Chapter Three: The Myriad Forms of Epistemic Luck
Chapter Four: Our Reflective and Preparatory Responsibilities
Chapter Five: Medina, Epistemic Friction and The Social Connection Model Revisited
Chapter Six: Fricker’s Virtue Epistemological Account Revisited
Chapter Seven: Skeptical Concerns
Conclusion: A New Pro-Science Media
Bibliography

List of Figures

Chainsawsuit Webcomic
Epistemological Figure