Roles for Socially-Engaged Philosophy of Science in Environmental Policy

Recommended publications

Breaking the Siege: Guidelines for Struggle in Science Brian Martin Faculty of Arts, University of Wollongong, NSW 2522

Breaking the siege: guidelines for struggle in science Brian Martin Faculty of Arts, University of Wollongong, NSW 2522 http://www.bmartin.cc/ ABSTRACT When scientists come under attack, it is predictable that the attackers will use methods to minimise public outrage over the attack, including covering up the action, devaluing the target, reinterpreting what is happening, using official processes to give an appearance of justice, and intimidating people involved. To be effective in countering attacks, it is valuable to challenge each of these methods, namely by exposing actions, validating targets, interpreting actions as unfair, mobilising support and not relying on official channels, and standing up to intimidation. On a wider scale, science is constantly under siege from vested interests, especially governments and corporations wanting to use scientists and their findings to serve their agendas at the expense of the public interest. To challenge this system of institutionalised bias, the same sorts of methods can be used. Key words: science; dissent; methods of attack; methods of resistance; vested interests Scientists and science under siege In 1969, Clyde Manwell was appointed to the second chair of zoology at the University of Adelaide. By present-day terminology he was an environmentalist, but at the time this term was little known and taking an environmental stand was uncommon for a scientist. Many senior figures in government, business and universities saw such stands as highly threatening. [...] environmental issues?” (Wilson and Barnes 1995), and less than one in five said no. Numerous environmental scientists have come under attack because of their research or speaking out about it (Kuehn 2004). On some topics, such as nuclear power and fluoridation, it can be very risky for scientists to take a view contrary

The Controversy Over Climate Change in the Public Sphere

THE CONTROVERSY OVER CLIMATE CHANGE IN THE PUBLIC SPHERE by WILLIAM MOSLEY-JENSEN (Under the Direction of Edward Panetta) ABSTRACT The scientific consensus on climate change is not recognized by the public. This is due to many related factors, including the Bush administration’s science policy, the reporting of the controversy by the media, the public’s understanding of science as dissent, and the differing standards of argumentation in science and the public sphere. Al Gore’s An Inconvenient Truth was produced in part as a response to the acceptance of climate dissent by the Bush administration and achieved a rupture of the public sphere by bringing the technical issue forward for public deliberation. The rupture has been sustained by dissenters through the use of argument strategies designed to foster controversy at the expense of deliberation. This makes it incumbent upon rhetorical scholars to theorize the closure of controversy and policymakers to recognize that science will not always have the answers. INDEX WORDS: Al Gore, Argument fields, Argumentation, An Inconvenient Truth, Climate change, Climategate, Controversy, Public sphere, Technical sphere THE CONTROVERSY OVER CLIMATE CHANGE IN THE PUBLIC SPHERE by WILLIAM MOSLEY-JENSEN B.A., The University of Wyoming, 2008 A Thesis Submitted to the Graduate Faculty of The University of Georgia in Partial Fulfillment of the Requirements for the Degree MASTER OF ARTS ATHENS, GEORGIA 2010 © 2010 William Mosley-Jensen All Rights Reserved THE CONTROVERSY OVER CLIMATE CHANGE IN THE PUBLIC SPHERE by WILLIAM MOSLEY-JENSEN Major Professor: Edward Panetta Committee: Thomas Lessl Roger Stahl Electronic Version Approved: Maureen Grasso Dean of the Graduate School The University of Georgia May 2010 iv ACKNOWLEDGEMENTS There are many people that made this project possible through their unwavering support and love. -

Dissent and Heresy in Medicine: Models, Methods, and Strategies Brian Martin*

ARTICLE IN PRESS Social Science & Medicine 58 (2004) 713–725 Dissent and heresy in medicine: models, methods, and strategies Brian Martin* Science, Technology and Society, University of Wollongong, Wollongong NSW 2522, Australia Abstract Understanding the dynamics of dissent and heresy in medicine can be aided by the use of suitable frameworks. The dynamics of the search for truth vary considerably depending on whether the search is competitive or cooperative and on whether truth is assumed to be unitary or plural. Insights about dissent and heresy in medicine can be gained by making comparisons to politics and religion. To explain adherence to either orthodoxy or a challenging view, partisans use a standard set of explanations; social scientists use these plus others, especially symmetrical analyses. There is a wide array of methods by which orthodoxy maintains its domination and marginalises challengers. Finally, challengers can adopt various strategies in order to gain a hearing. © 2003 Elsevier Science Ltd. All rights reserved. Keywords: Dissent; Heresy; Orthodoxy; Medical knowledge; Medical research; Strategies Introduction The conventional view is that the human immunodeficiency virus, HIV, is responsible for AIDS. But for many years, a few scientists have espoused the incompatible view that HIV is harmless and is not responsible for AIDS (Duesberg, 1996; Maggiore, 1999). The issue came to world attention in 2001 when South African President Thabo Mbeki invited a number of the so-called HIV/AIDS dissidents to join an advisory panel. [...] challenges to it, there is a tremendous variation in ideas, support, visibility and outcome. What is the best term for referring to a challenge to orthodoxy? Wolpe (1994) offers an illuminating typology of internal challenges. One type of challenge is to “knowledge products” such as disease prognoses that question current knowledge (namely, what are considered to be facts) while operating within conventional assumptions about scientific meth-

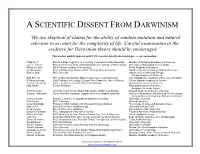

Scientists Dissent List

A SCIENTIFIC DISSENT FROM DARWINISM “We are skeptical of claims for the ability of random mutation and natural selection to account for the complexity of life. Careful examination of the evidence for Darwinian theory should be encouraged.” This was last publicly updated April 2020. Scientists listed by doctoral degree or current position. Philip Skell* Emeritus, Evan Pugh Prof. of Chemistry, Pennsylvania State University Member of the National Academy of Sciences Lyle H. Jensen* Professor Emeritus, Dept. of Biological Structure & Dept. of Biochemistry University of Washington, Fellow AAAS Maciej Giertych Full Professor, Institute of Dendrology Polish Academy of Sciences Lev Beloussov Prof. of Embryology, Honorary Prof., Moscow State University Member, Russian Academy of Natural Sciences Eugene Buff Ph.D. Genetics Institute of Developmental Biology, Russian Academy of Sciences Emil Palecek Prof. of Molecular Biology, Masaryk University; Leading Scientist Inst. of Biophysics, Academy of Sci., Czech Republic K. Mosto Onuoha Shell Professor of Geology & Deputy Vice-Chancellor, Univ. of Nigeria Fellow, Nigerian Academy of Science Ferenc Jeszenszky Former Head of the Center of Research Groups Hungarian Academy of Sciences M.M. Ninan Former President Hindustan Academy of Science, Bangalore University (India) Denis Fesenko Junior Research Fellow, Engelhardt Institute of Molecular Biology Russian Academy of Sciences (Russia) Sergey I. Vdovenko Senior Research Assistant, Department of Fine Organic Synthesis Institute of Bioorganic Chemistry and Petrochemistry Ukrainian National Academy of Sciences (Ukraine) Henry Schaefer Director, Center for Computational Quantum Chemistry University of Georgia Paul Ashby Ph.D. Chemistry Harvard University Israel Hanukoglu Professor of Biochemistry and Molecular Biology Chairman The College of Judea and Samaria (Israel) Alan Linton Emeritus Professor of Bacteriology University of Bristol (UK) Dean Kenyon Emeritus Professor of Biology San Francisco State University David W.

Sbchinn 1.Pdf

The Prevalence and Effects of Scientific Agreement and Disagreement in Media by Sedona Chinn A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Communication) in The University of Michigan 2020 Doctoral Committee: Associate Professor P. Sol Hart, Chair Professor Stuart Soroka Professor Nicholas Valentino Professor Jan Van den Bulck Sedona Chinn ORCID iD: 0000-0002-6135-6743 DEDICATION This dissertation is dedicated to the memory of my grandma, Pamela Boult. Thank you for your unending confidence in my every ambition and unconditional love. ACKNOWLEDGEMENTS I am grateful for the support of the Dow Sustainability Fellows Program, which provided much appreciated spaces for creative research and interdisciplinary connections. In particular, I would like to thank the 2017 cohort of doctoral fellows for their support, feedback, and friendship. I would also like to thank the Department of Communication and Media, the Center for Political Studies at the Institute for Social Research, and Rackham Graduate School for supporting this work. I truly appreciate the guidance and support of excellent advisors throughout this endeavor. Thanks to my advisors, Sol Hart and Stuart Soroka, for all of their long hours of teaching, critique, and encouragement. Know that your mentorship has shaped both how I think and has served as a model for how I would like to act going forward in my professional life. Thanks to Nick Valentino and Jan Van den Bulck for your invaluable comments, insistence that I simplify experimental designs, and for your confidence in my work. I truly appreciate all the ways in which your support and guidance have both improved the work presented here and my thinking as a researcher.

Oreskes Testimony 29April2015

Excerpt from Chapter 4 of Oreskes, Naomi and Erik M. Conway, 2010. Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming. (New York: Bloomsbury Press.), reproduced with permission. Constructing a Counter-narrative It took time to work out the complex science of ozone depletion, but scientists, with support from the U.S. government and international scientific organizations, did it. Regulations were put in place based on science, and adjusted in response to advances in it. But running in parallel to this were persistent efforts to challenge the science. Industry representatives and other skeptics doubted that ozone depletion was real, or argued that if it was real, it was inconsequential, or caused by volcanoes. During the early 1980s, anti-environmentalism had taken root in a network of conservative and libertarian “think tanks” in Washington. These think tanks, which included the Cato Institute, the American Enterprise Institute, Heritage Foundation, the Competitive Enterprise Institute, and, of course, the Marshall Institute, variously promoted business interests and “free market” economic policies, the rollback of environmental, health, safety, and labor protections. They were supported by donations from businessmen, corporations, and conservative foundations. One aspect of the effort to cast doubt on ozone depletion was the construction of a counter-narrative that depicted ozone depletion as natural variation that was being cynically exploited by a corrupt, self-interested, and extremist scientific community to get more money for their research. One of the first people to make this argument was a man who had been a Fellow at the Heritage Foundation in the early 1980s, and whom we have already met: S.

Dissertation Final

UNIVERSITY OF CALIFORNIA SANTA CRUZ EPISTEMIC INJUSTICE, RESPONSIBILITY AND THE RISE OF PSEUDOSCIENCE: CONTEXTUALLY SENSITIVE DUTIES TO PRACTICE SCIENTIFIC LITERACY A dissertation submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in PHILOSOPHY by Abraham J. Joyal June 2020 The Dissertation of Abraham J. Joyal is approved: Professor Daniel Guevara, chair; Professor Abraham Stone; Professor Jonathan Ellis; Quentin Williams, Acting Vice Provost and Dean of Graduate Studies

Table Of Contents

List of Figures
Abstract
Chapter One: That Pseudoscience Follows From Bad Epistemic Luck and Epistemic Injustice
Chapter Two: The Critical Engagement Required to Overcome Epistemic Obstacles
Chapter Three: The Myriad Forms of Epistemic Luck
Chapter Four: Our Reflective and Preparatory Responsibilities
Chapter Five: Medina, Epistemic Friction and The Social Connection Model Revisited
Chapter Six: Fricker’s Virtue Epistemological Account Revisited
Chapter Seven: Skeptical Concerns
Conclusion: A New Pro-Science Media
Bibliography

List of Figures

Chainsawsuit Webcomic
Epistemological Figure

Responding to Climate Change Skepticism and the Ideological Divide

SUSTAINABILITY.UMICH.EDU/MJS Responding to Climate Change Skepticism and the Ideological Divide Jessica M. Santos School of Natural Resources and Environment, University of Michigan, Ann Arbor, MI 48109 & Climate Central, Princeton, NJ Irina Feygina Climate Central, Princeton, NJ Michigan Journal of Sustainability, Volume 5, Issue 1 http://dx.doi.org/10.3998/mjs.12333712.0005.102 Abstract Climate change is an increasingly politicized issue in the United States, with many members of the American public, especially those who identify as politically conservative, skeptical about this dangerous phenomenon. A host of social and psychological processes have been investigated in an attempt to understand skepticism and resistance to responding to the threat of climate change, including motivated reasoning, system justification theory, social dominance orientation, belief in a just world, the cultural cognition thesis, and solution aversion. In this article, we review recent research into these processes and their implications for understanding the political divide in responding to climate change. We also highlight efforts to test communications interventions aimed at ameliorating these processes underlying climate change skepticism, including framing and scientific consensus. This literature review may be informative for climate change focused communicators, policymakers, practitioners, and academics who directly or indirectly interact with the public, or who design communication campaigns, policy, initiatives, or programs for the public. Introduction Climate change is a pressing challenge, with the potential to cause dramatic sea level rise, a higher frequency of extreme weather events, worldwide temperature increases, and climatic instability (IPCC 2013). These changes may disrupt societal functioning through increasing global migration, conflict, and disease, while endangering worldwide food systems (Burrows and Kinney 2016; FAO 2008; Haines et al.

The Difference Between Societal Response to the Harm

THE DIFFERENCE BETWEEN SOCIETAL RESPONSE TO THE HARM OF TOBACCO VERSUS THE HARM OF CLIMATE CHANGE: THE ROLE OF PARTY DISCOURSE ON THE POLARIZATION OF PUBLIC OPINION A Thesis Presented to The faculty of College of Arts and Sciences Ohio University In Partial Fulfillment of the Requirements for Graduation with Honors in Political Science By Maya Schneiderman May 2018

Table of Contents

Abstract
Literature Review and Argument
Section 1: Tobacco
Section 2: Climate Change
  2.1: Environmental Protection: An American Value
  2.1 (a): Climate Science: A Changing Discourse
  2.2: Inherent Political Party Ideological Differences
  2.2 (a): Competing Economic Views
  2.2 (b): Problems Arise Between Parties
  2.3: Manipulation of Public Opinion Through Denial Campaign
  2.3 (a): Denial Campaign
  2.3 (b): Amplification of Denial by Role of Media
Section 3: Discussion and Conclusion
References

ABSTRACT This paper examines the actors involved in effectively altering public perception of scientific evidence through a comparison of the climate change denial campaign versus the tobacco denial campaign. It finds that the denial campaign conducted by the Tobacco Industry was not successful in creating uncertainty about tobacco science among the public; the climate change denial campaign, however, has been successful in influencing public perception of the accuracy of climate science. By understanding the role of public perception of the risk of personal harm, this paper identifies how particular denial campaigns succeed in polarizing public opinion on scientific evidence.

Rationalism and Empiricism in Modern Medicine

RATIONALISM AND EMPIRICISM IN MODERN MEDICINE WARREN NEWTON* I INTRODUCTION About ten years ago, after fellowships and clinical experience in a community setting, I had my first experience as a ward attending in a university hospital. We were working with cardiac patients, and I was struck by the common treatment each patient received. No matter what the symptoms, patients received an exercise treadmill, an echocardiogram, and were put on a calcium channel blocker. This was remarkable at the time because there were in excess of thirty randomized controlled trials showing the benefit of beta-blockers, a different class of medicines, to treat patients following a heart attack. Indeed, by 1990, there was initial evidence that calcium channel blockers not only failed to improve outcomes, but actually made them worse. The point is not to criticize the medical culture at that hospital (similar examples can be found at every medical center) but rather to explore why there was so much fondness for calcium channel blockers. One factor was the substantial drug company support of faculty research on silent myocardial ischemia. Another factor was what might be called medical fashion. The most likely explanation, however, was more fundamental. For my cardiology colleagues, it was biologically plausible that calcium channel blockers were better than beta-blockers. Like beta-blockers, calcium channel blockers reduce heart rate and myocardial wall stress, but they lack the side effects of beta-blockers. In other words, what was important to my colleagues was not the outcome of the critical trials, but our understanding of the mechanisms of disease.

The Consensus Handbook Why the Scientific Consensus on Climate Change Is Important

The Consensus Handbook Why the scientific consensus on climate change is important John Cook Sander van der Linden Edward Maibach Stephan Lewandowsky Written by: John Cook, Center for Climate Change Communication, George Mason University Sander van der Linden, Department of Psychology, University of Cambridge Edward Maibach, Center for Climate Change Communication, George Mason University Stephan Lewandowsky, School of Experimental Psychology, University of Bristol, and CSIRO Oceans and Atmosphere, Hobart, Tasmania, Australia First published in March, 2018. For more information, visit http://www.climatechangecommunication.org/all/consensus-handbook/ Graphic design: Wendy Cook Page 21 image credit: John Garrett Cite as: Cook, J., van der Linden, S., Maibach, E., & Lewandowsky, S. (2018). The Consensus Handbook. DOI:10.13021/G8MM6P. Available at http://www.climatechangecommunication.org/all/consensus-handbook/ Introduction Based on the evidence, 97% of climate scientists have concluded that human-caused climate change is happening. This scientific consensus has been a hot topic in recent years. It’s been referenced by presidents, prime ministers, senators, congressmen, and in numerous television shows and newspaper articles. However, the story of consensus goes back decades. It’s been an underlying theme in climate discussions since the 1990s. Fossil fuel groups, conservative think-tanks, and political strategists were casting doubt on the consensus for over a decade before social scientists began studying the issue. From the 1990s to this day, most of the discussion has been about whether there is a scientific consensus that humans are causing global warming. As the issue has grown in prominence, a second discussion has arisen. Should we even be talking about scientific consensus? Is it productive? Does it distract from other important issues? This handbook provides a brief history of the consensus on climate change.

Who's Afraid of Dissent? Addressing Concerns About Undermining

Who’s Afraid of Dissent? Addressing Concerns about Undermining Scientific Consensus in Public Policy Developments Inmaculada de Melo-Martín Weill Cornell Medical College Kristen Intemann Montana State University Many argue that encouraging critical dissent is necessary for promoting scientific objectivity and progress. Yet despite its importance, some dissent can have negative consequences, including undermining confidence in existing scientific consensus, confusing the public, and preventing sound policy. For example, private industries and think tanks have funded dissenting research to create doubt and stall regulations. To protect scientifically sound policies, some have responded by attempting to minimize or discourage dissent perceived to be problematic. We argue that targeting dissent as an obstacle to public policy is both misguided and dangerous. We are grateful for the thoughtful comments and criticisms we received from two anonymous reviewers, as well as from Sharon Crasnow and Matthew Brown. This paper was substantially improved because of their efforts, though the responsibility for any remaining problems is ours. 1. Introduction Many have argued that allowing and encouraging public avenues for dissent and critical evaluation of scientific research is a necessary condition for promoting the objectivity of scientific communities and advancing scientific knowledge (Rescher 1993; Longino 1990, 2002; Solomon 2001). The history of science reveals many cases where an existing scientific consensus was later shown to be wrong (Kuhn 1962; Solomon 2001). Dissent plays a crucial role in uncovering potential problems and limitations of consensus views. Thus, many have argued that scientific communities