Intel's X86 Architecture


Recommended publications


The Central Processing Unit (CPU): The Brain of Any Computer System Is the CPU

Computer Fundamentals, 1st stage, Lec. (8). College of Computer Technology, Dept. Information Networks. The central processing unit (CPU). The brain of any computer system is the CPU. It controls the functioning of the other units and processes the data. The CPU is sometimes called the processor or, in the personal computer field, the "microprocessor". It is a single integrated circuit that contains all the electronics needed to execute a program. The processor calculates (adds, multiplies, and so on), performs logical operations (compares numbers and makes decisions), and controls the transfer of data among devices. The processor acts as the controller of all actions or services provided by the system. Processor actions are synchronized to its clock input. A clock signal consists of clock cycles. The time to complete a clock cycle is called the clock period. Normally, we use the clock frequency, which is the inverse of the clock period, to specify the clock. The clock frequency is measured in Hertz (abbreviated Hz), where one Hertz is one cycle per second. Usually, we use megahertz (MHz) and gigahertz (GHz), as in a 1.8 GHz Pentium. The processor can be thought of as executing the following cycle forever: 1. Fetch an instruction from the memory, 2. Decode the instruction (i.e., determine the instruction type), 3. Execute the instruction (i.e., perform the action specified by the instruction). Execution of an instruction involves fetching any required operands, performing the specified operation, and writing the results back. This process is often referred to as the fetch-execute cycle, or simply the execution cycle.
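The fetch, decode, and execute steps described in this excerpt can be sketched as a small loop. This is a minimal illustration only: the four-instruction accumulator machine, its opcode names (`LOAD`, `ADD`, `STORE`, `HALT`), and the helper `clock_period_ns` are hypothetical and are not taken from the lecture.

```python
def run(program, memory):
    """Run a toy accumulator machine until HALT (hypothetical instruction set)."""
    pc = 0   # program counter: index of the next instruction
    acc = 0  # single accumulator register
    while True:
        opcode, operand = program[pc]      # 1. fetch the instruction
        pc += 1
        if opcode == "LOAD":               # 2. decode, then 3. execute
            acc = memory[operand]          # fetch the operand from memory
        elif opcode == "ADD":
            acc += memory[operand]         # perform the operation
        elif opcode == "STORE":
            memory[operand] = acc          # write the result back
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")


def clock_period_ns(frequency_hz):
    """Clock period in nanoseconds: the inverse of the clock frequency."""
    return 1e9 / frequency_hz


if __name__ == "__main__":
    mem = [2, 3, 0]
    prog = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
    print(run(prog, mem))            # memory after adding cells 0 and 1 into cell 2
    print(clock_period_ns(1.8e9))    # period of a 1.8 GHz clock, in ns
```

A real processor overlaps these steps across instructions; the loop only shows the order in which they occur for a single instruction.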

45-Year CPU Evolution: One Law and Two Equations

45-year CPU evolution: one law and two equations. Daniel Etiemble, LRI-CNRS, University Paris Sud, Orsay, France. [email protected]

Abstract: Moore's law and two equations allow to explain the main trends of CPU evolution since MOS technologies have been used to implement microprocessors.

Keywords: Moore's law, execution time, CMOS power dissipation.

I. INTRODUCTION

A new era started when MOS technologies were used to build microprocessors. After pMOS (Intel 4004 in 1971) and nMOS (Intel 8080 in 1974), CMOS became quickly the leading technology, used by Intel since 1985 with 80386 CPU. MOS technologies obey an empirical law, stated in 1965 and known as Moore's law: the number of transistors integrated on a chip doubles every N months. Fig. 1 presents the evolution for DRAM memories, processors (MPU) and three types of read-only memories [1]. The growth rate decreases with years, from

a) IC is the instruction count. b) CPI is the clock cycles per instruction and IPC = 1/CPI is the instruction count per clock cycle. c) Tc is the clock cycle time and F = 1/Tc is the clock frequency.

The power dissipation of CMOS circuits is the second equation (2). CMOS power dissipation is decomposed into static and dynamic powers. For dynamic power, Vdd is the power supply, F is the clock frequency, ΣCi is the sum of gate and interconnection capacitances and α is the average percentage of switching capacitances: α is the activity factor of the overall circuit.

$P_d = P_{d,\text{static}} + \alpha \times \sum C_i \times V_{dd}^2 \times F$ (2)

II. CONSEQUENCES OF MOORE LAW

A.
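Equation (2) and the definitions IPC = 1/CPI and F = 1/Tc from this excerpt can be checked numerically with a short sketch. The function names and every numeric value below are illustrative assumptions, not figures from the paper.

```python
def dynamic_power(alpha, total_capacitance, vdd, frequency):
    """Dynamic term of equation (2): alpha * sum(Ci) * Vdd^2 * F, in watts."""
    return alpha * total_capacitance * vdd ** 2 * frequency


def total_power(static_power, alpha, total_capacitance, vdd, frequency):
    """Equation (2): static power plus dynamic power."""
    return static_power + dynamic_power(alpha, total_capacitance, vdd, frequency)


def ipc(cpi):
    """Instructions per clock cycle, the inverse of CPI."""
    return 1.0 / cpi


def clock_frequency(tc_seconds):
    """Clock frequency F, the inverse of the clock cycle time Tc."""
    return 1.0 / tc_seconds


if __name__ == "__main__":
    # Hypothetical operating point: activity factor 0.1, 1 nF of switched
    # capacitance, 1.0 V supply, 2 GHz clock, 0.05 W of static power.
    base = dynamic_power(0.1, 1e-9, 1.0, 2e9)
    half_vdd = dynamic_power(0.1, 1e-9, 0.5, 2e9)
    print(base, half_vdd, base / half_vdd)
    print(total_power(0.05, 0.1, 1e-9, 1.0, 2e9))
```

Because Vdd enters squared, halving the supply voltage at a fixed frequency cuts the dynamic term to a quarter, while halving the frequency alone only halves it.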

The Microarchitecture of a Low Power Register File

The Microarchitecture of a Low Power Register File. Nam Sung Kim and Trevor Mudge, Advanced Computer Architecture Lab, The University of Michigan, 1301 Beal Ave., Ann Arbor, MI 48109-2122. {kimns, tnm}@eecs.umich.edu

ABSTRACT

The access time, energy and area of the register file are often critical to overall performance in wide-issue microprocessors, because these terms grow superlinearly with the number of read and write ports that are required to support wide-issue. This paper presents two techniques to reduce the number of ports of a register file intended for a wide-issue microprocessor with hardly any impact on IPC. Our results show that it is possible to replace a register file with 16 read and 8 write ports, intended for an eight-issue processor, with a register file with just 8 read and 8 write ports so that the impact on IPC is a few percent.

Alpha 21464, the 512-entry 16-read and 8-write (16-r/8-w) ports register file consumed more power and was larger than the 64 KB primary caches. To reduce the cycle time impact, it was implemented as two 8-r/8-w split register files [9], see Figure 1. Figure 1-(a) shows the 16-r/8-w file implemented directly as a monolithic structure. Figure 1-(b) shows it implemented as the two 8-r/8-w register files. The monolithic register file design is slow because each memory cell in the register file has to drive a large number of bit-lines. In contrast, the split register file is fast, but duplicates the contents of the register file in two memory arrays, resulting in

1.1.2. Register File

National Chiao Tung University, Institute of Computer Science and Engineering. Master's Thesis. Design of a Flexibly Splittable and Stretchable Register File for SMT Architectures. Graduate student: Li-Jie Jhing (鐘立傑). Advisor: Prof. Jean Jyh-Jiun Shann (單智君). August 2007 (Republic of China year 96).

Abstract (translated from the Chinese): How to support simultaneous multithreading with the fewest hardware resources is an important research issue. The register file occupies a significant proportion of the microprocessor chip area. Moreover, to support simultaneous multithreading, each thread has its own copy of the register file, and such a design increases the chip area. In this thesis, we propose a flexibly splittable and stretchable register file design in which: 1. we can flexibly split one register file between two threads for simultaneous use when needed; 2. we can suitably extend the size of the register file to increase the chance of it being shared by two threads. The benefits of our design are: 1. increased utilization of hardware resources, 2. fewer memory accesses, and 3. improved system performance. In addition, our design concept can be adapted to the needs of different applications.

Design of a Flexibly Splittable and Stretchable Register File for SMT Architectures. Student: Li-Jie Jhing. Advisor: Dr. Jean Jyh-Jiun Shann. Institute of Computer Science and Engineering, National Chiao-Tung University.

Abstract: How to support simultaneous multithreading (SMT) with minimum resources is a critical research issue. The register file in a microprocessor typically occupies a significant portion of the chip area, and in order to support SMT, each thread will have a copy of the register file. That will increase the area overhead. In this thesis, we propose register file design techniques that can 1. Split a copy of the physical register file flexibly into two independent register sets when required, simultaneously operable for two independent threads. 2. Stretch the size of the physical register file arbitrarily, to increase the probability of sharing by two threads. Benefits of these designs are: 1. Increased hardware resource utilization. 2. Reduced memory

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel's SCC to State-of-the-Art CPUs and GPUs. Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas. Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA. E-mail: ftotoni2, bbehza2, ghike2, [email protected]

Abstract: Power dissipation and energy consumption are becoming increasingly important architectural design constraints in different types of computers, from embedded systems to large-scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the best use of exponentially increasing numbers of transistors within the power and energy budgets. Intel SCC is an appealing option for future many-core architectures. In this paper, we use various scalable applications to quantitatively compare and analyze the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. These platforms include the Intel Single-Chip Cloud Computer (SCC) many-core, the Intel Core i7 general-purpose multi-core, the Intel Atom low-power processor, and the Nvidia ION2

A key architectural challenge now is how to support increasing parallelism and scale performance, while being power and energy efficient. There are multiple options on the table, namely "heavy-weight" multi-cores (such as general purpose processors), "light-weight" many-cores (such as Intel's Single-Chip Cloud Computer (SCC) [1]), low-power processors (such as embedded processors), and SIMD-like highly-parallel architectures (such as General-Purpose Graphics Processing Units (GPGPUs)). The Intel SCC [1] is a research chip made by Intel Labs to explore future many-core architectures. It has 48 Pentium (P54C) cores in 24 tiles of two cores each.

Technology & Energy

CIS 501: Computer Architecture. Unit 3: Technology & Energy. Slides developed by Milo Martin & Amir Roth at the University of Pennsylvania, with sources that included University of Wisconsin slides by Mark Hill, Guri Sohi, Jim Smith, and David Wood.

CIS 501: Comp. Arch. | Prof. Milo Martin | Technology & Energy

This Unit: Technology & Energy
• Technology basis
• Fabrication (manufacturing) & cost
• Transistors & wires
• Implications of transistor scaling (Moore's Law)
• Energy & power

Readings
• MA:FSPTCM
  • Section 1.1 (technology)
  • Section 9.1 (power & energy)
• Paper
  • G. Moore, "Cramming More Components onto Integrated Circuits"

Review: Simple Datapath
[Figure: simple datapath with PC, +4 increment, Insn Mem, Register File (s1, s2, d), and Data Mem]
• How are instructions executed?
  • Fetch instruction (program counter into instruction memory)
  • Read registers
  • Calculate values (adds, subtracts, address generation, etc.)
  • Access memory (optional)
  • Calculate next program counter (PC)
  • Repeat
• Clock period = longest delay through datapath

Recall: Processor Performance
• Programs consist of simple operations (instructions)
  • Add two numbers, fetch data value from memory, etc.
• Program runtime = "seconds per program" = (instructions/program) * (cycles/instruction) * (seconds/cycle)
• Instructions per program: "dynamic instruction count"
  • Runtime count of instructions executed by the program
  • Determined by program, compiler, instruction set architecture (ISA)
• Cycles per instruction: "CPI" (typical range: 2 to 0.5)
  • On average, how many cycles does an instruction take to execute?
  • Determined by program, compiler, ISA, micro-architecture
• Seconds per cycle: clock period, length of each cycle
  • Inverse metric: cycles per second (Hertz) or cycles per ns (GHz)
  • Determined by micro-architecture, technology parameters
• This unit: transistors & semiconductor technology

Technology & Fabrication
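The runtime relation on the "Recall: Processor Performance" slide, (instructions/program) * (cycles/instruction) * (seconds/cycle), can be worked through in a few lines. The function name and the workload numbers below are assumptions chosen for illustration; only the formula comes from the slide.

```python
def runtime_seconds(instruction_count, cpi, clock_hz):
    """Program runtime = instructions * cycles/instruction * seconds/cycle."""
    seconds_per_cycle = 1.0 / clock_hz   # clock period
    return instruction_count * cpi * seconds_per_cycle


if __name__ == "__main__":
    # Hypothetical workload: one billion dynamic instructions on a 2 GHz clock.
    slow = runtime_seconds(1_000_000_000, cpi=2.0, clock_hz=2e9)
    fast = runtime_seconds(1_000_000_000, cpi=0.5, clock_hz=2e9)
    print(slow, fast, slow / fast)
```

The two CPI values are the ends of the "typical range" quoted on the slide; with instruction count and clock held fixed, runtime scales linearly with CPI.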

Reverse Engineering X86 Processor Microcode

Reverse Engineering x86 Processor Microcode. Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz, Ruhr-Universität Bochum. https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/koppe This paper is included in the Proceedings of the 26th USENIX Security Symposium, August 16–18, 2017, Vancouver, BC, Canada. ISBN 978-1-931971-40-9. Open access to the Proceedings of the 26th USENIX Security Symposium is sponsored by USENIX.

Abstract

Microcode is an abstraction layer on top of the physical components of a CPU and present in most general-purpose CPUs today. In addition to facilitating complex and vast instruction sets, it also provides an update mechanism that allows CPUs to be patched in-place without requiring any special hardware. While it is well-known that CPUs are regularly updated with this mechanism, very little is known about its inner workings given that microcode and the update mechanism are proprietary and have not been thoroughly analyzed yet.

hardware modifications [48]. Dedicated hardware units to counter bugs are imperfect [36, 49] and involve non-negligible hardware costs [8]. The infamous Pentium fdiv bug [62] illustrated a clear economic need for field updates after deployment in order to turn off defective parts and patch erroneous behavior. Note that the implementation of a modern processor involves millions of lines of HDL code [55] and verification of functional correctness for such processors is still an unsolved problem [4, 29]. Since the 1970s, x86 processor manufacturers have

Memory Hierarchy

Carnegie Mellon. Introduction to Cloud Computing: Systems I. 15-319, Spring 2010. 4th Lecture, Jan 26th. Majd F. Sakr.

15-319 Introduction to Cloud Computing, Spring 2010 © Carnegie Mellon

Lecture Motivation
• Overview of a Cloud architecture
• Systems review
  • ISA
  • Memory hierarchy
  • OS
  • Networks
• Next time
  • Impact on cloud performance

Intel Open Cirrus Cloud - Pittsburgh

Blade Performance
• Consider bandwidth and latency between these layers
[Figure: quad-core processors (cores, L1, L2, L3), memory, and disk]

Cloud Performance
• Consider bandwidth and latency of all layers
• How could these different bandwidths impact the Cloud's performance?
• To understand this, we need to go through a summary of the machine's components.

Single-CPU Machine's Components
• ISA (Processor)
• Main Memory
• Disk
• Operating System
• Network

A Single-CPU Computer: Components
[Figure: CPU (datapath with PC, IR, register file, and ALU; control unit), I/O bridge, main memory, I/O bus, disk, and network]

The Von Neumann machine - Completed 1952
• Main memory storing programs and data

Introduction to Cpu

INTRODUCTION TO CPU. Mohammad Sadegh Sadri. Session 2, Microprocessor Course, Isfahan University of Technology, Sep., Oct., 2010.

microprocessors and microcontrollers - sadri

Agenda
• Review of the first session
• A tour of silicon world!
• Basic definition of CPU
• Von Neumann Architecture
  • Example: Basic ARM7 Architecture
  • A brief detailed explanation of ARM7 Architecture
• Harvard Architecture
  • Example: TMS320C25 DSP

Agenda (2)
• History of CPUs
  • 4004
  • TMS1000
  • 8080
  • Z80
  • Am2901
  • 8051
  • PIC16

Von Neumann Architecture
• Same Memory
  • Program
  • Data
• Single Bus

Sample: ARM7T CPU

Harvard Architecture
• Separate memories for program and data

TMS320C25 DSP

Silicon Market

| Rank 2009 | Rank 2008 | Company | Country of origin | Revenue (million USD) | 2009/2008 change | Market share |
|---|---|---|---|---|---|---|
| 1 | 1 | Intel Corporation | USA | 32 410 | -4.0% | 14.1% |
| 2 | 2 | Samsung Electronics | South Korea | 17 496 | +3.5% | 7.6% |
| 3 | 3 | Toshiba Semiconductors | Japan | 10 319 | -6.9% | 4.5% |
| 4 | 4 | Texas Instruments | USA | 9 617 | -12.6% | 4.2% |
| 5 | 5 | STMicroelectronics | France/Italy | 8 510 | -17.6% | 3.7% |
| 6 | 8 | Qualcomm | USA | 6 409 | -1.1% | 2.8% |
| 7 | 9 | Hynix | South Korea | 6 246 | +3.7% | 2.7% |
| 8 | 12 | AMD | USA | 5 207 | -4.6% | 2.3% |
| 9 | 6 | Renesas Technology | Japan | 5 153 | -26.6% | 2.2% |
| 10 | 7 | Sony | Japan | 4 468 | -35.7% | 1.9% |

Introduction to Microcoded Implementation of a CPU Architecture

Introduction to Microcoded Implementation of a CPU Architecture. N.S. Matloff, revised by D. Franklin. January 30, 1999, revised March 2004.

1 Microcoding

Throughout the years, microcoding has changed dramatically. The debate over simple computers vs. complex computers once raged within the architecture community. In the end, the most popular microcoded computers survived for three reasons: market share, technological improvements, and the embracing of the principles used in simple computers. So the two eventually merged into one. To truly understand microcoding, one must understand why they were built, what they are, why they survived, and, finally, what they look like today.

1.1 Motivation

Strictly speaking, the term architecture for a CPU refers only to "what the assembly language programmer sees": the instruction set, addressing modes, and register set. For a given target architecture, i.e. the architecture we wish to build, various implementations are possible. We could have many different internal designs of the CPU chip, all of which produced the same effect, namely the same instruction set, addressing modes, etc. The different internal designs could then all be produced for the different models of that CPU, as in the familiar Intel case. The different models would have different speed capabilities, and probably different prices to the consumer. But the same machine language program, say a .EXE file in the Intel/DOS case, would run on any CPU in the family. When designing an instruction set architecture, there is a tradeoff between software and hardware. If you provide very few instructions, it takes more instructions to perform the same task, but the hardware can be very simple.

Microcode Processor Monday, Feb

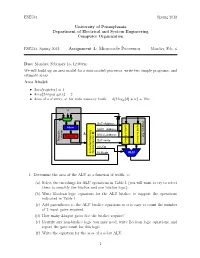

ESE534 Spring 2012. University of Pennsylvania, Department of Electrical and System Engineering. Computer Organization, ESE534, Spring 2012. Assignment 4: Microcode Processor. Monday, Feb. 6. Due: Monday, February 13, 12:00pm.

We will build up an area model for a microcoded processor, write two simple programs, and estimate areas.

Area Model:
• Area(register) = 4
• Area(2-input gate) = 2
• Area of a d-entry, w-bit wide memory bank = $d\,(2\log_2 d + w) + 10w + 1$

[Figure: processor datapath with the Program Counter register and adder, the Instruction Memory (memory bank), the Register File (memory banks), and the ALU; labeled signals are DST-Address, SRC1-Address, SRC2-Address, DST-write, ALUOp, and PCMode]

1. Determine the area of the ALU as a function of width, w.
(a) Select the encodings for ALU operations in Table 1 (you will want to try to select them to simplify the bitslice and non-bitslice logic).
(b) Write Boolean logic equations for the ALU bitslice to support the operations indicated in Table 1.
(c) Add parentheses to the ALU bitslice equations so it is easy to count the number of 2-input gates required.
(d) How many 2-input gates does the bitslice require?
(e) Identify any non-bitslice logic you may need, write Boolean logic equations, and report the gate count for this logic.
(f) Write the equation for the area of a w-bit ALU.

2. Determine the total area of the memory banks serving as the "register file" as a function of datapath width, w, and number of data items, r, held in each bank (i.e. the number of registers).
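The area model stated in this assignment excerpt is simple enough to evaluate directly. The sketch below encodes the three given costs; the function and constant names, and the 16-entry, 8-bit example bank, are illustrative assumptions rather than part of the assignment.

```python
import math

AREA_REGISTER = 4        # Area(register), from the assignment's area model
AREA_2INPUT_GATE = 2     # Area(2-input gate), from the assignment's area model


def memory_bank_area(d, w):
    """Area of a d-entry, w-bit wide memory bank: d(2 log2(d) + w) + 10w + 1."""
    return d * (2 * math.log2(d) + w) + 10 * w + 1


def gate_logic_area(num_2input_gates):
    """Area of combinational logic counted in 2-input gates."""
    return num_2input_gates * AREA_2INPUT_GATE


if __name__ == "__main__":
    # Hypothetical bank: 16 entries of 8 bits each.
    print(memory_bank_area(16, 8))
    # Doubling the depth more than doubles the d-dependent term,
    # because log2(d) also grows.
    print(memory_bank_area(32, 8))
```

For d = 16 and w = 8 the model gives 16(2·4 + 8) + 80 + 1 = 337 area units; this does not answer the assignment's questions, which ask for symbolic expressions in w and r.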

Computer Architectures an Overview

Computer Architectures: An Overview. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC.

Contents
• Articles: Microarchitecture, x86, PowerPC, IBM POWER, MIPS architecture, SPARC, ARM architecture, DEC Alpha, AlphaStation, AlphaServer, Very long instruction word, Instruction-level parallelism, Explicitly parallel instruction computing
• References: Article Sources and Contributors; Image Sources, Licenses and Contributors
• Article Licenses: License

Microarchitecture

In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design.

Relation to instruction set architecture

[Figure: Intel Core microarchitecture]

The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements.