Optimization and Analytics for Air Traffic Management: A Dissertation Submitted to the Department of Management Science and Engin

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Giambattista Valli for Impulse Only at Macy’s

Giambattista Valli for Impulse only at macy’s Launch Issue April 2012 Cover Stories Kalyn Hemphill An interview with Project Runway model winner 75 The Fall Collection New York Fashion Week Sneak Preview 23 AD Author Lindsey Isham On Relationships and Her Book “No Sex In The City” 65 Adam Burt Chaplain to Linemen: From Professional Hockey Player to a Family Man 85 Beaded Jump Rope Burn Up to 1000 Colaries Per Hour 102 Dress By Luminaa LLC By Luminaa LLC 5 Graceful Chic / Launch Issue 2012 The Lasting Sheer® Collection Ultra Sheer The NewsBoys In Central Park 81 Fashion Reflection Everyday Women 103 Fun Fience Fabulous 13 Faith and fashion, 29 Nicola Curry Fashion Styles Chrisianity and Catwalk: Do 43 Raychael Arianna these two Meet? 20 The Legend Lives on: 53 Lindy Leiser Alexander MacQueen 59 Sara Presley Resort Collection Beauty 73 Caprice Taylor 31 Coye Nokes’s Queen of the 55 Makeup 101: 93 Andromedo Turre Nile Shoe Collection 5 Tips to keep your face in 99 Melissa Peraldo place all day long 33 Ode to the Little Black Dress 56 Lip Gloss: Winter TLC NUTRITION Soft, sensual and luxuriously beautiful. 37 95 Filling up with Fiber: New York, New York: Exceptional sheerness Easy Casual Styles for 63 Inner Beauty: Four Simple Swaps everyday in the big city Mirror Mirror on the Wall 97 Spice Spotlight: Ginger with run resistant confidence. “I feel ugly today” 43 Fashion Bargain It’s Ultra Sheer perfected! Shopping Tips 119 Home is where the story 51 Relationships begins: The festive, easy Fashion Jewelry for Freedom brunch with friends by WAR -

Download Resume

TANISHA HARPER SAG/AFTRA FILM Fernando Bang Bangs Supporting Dir. Nathan Sapsford Forgetting Sarah Marshall Supporting Dir. Nicholas Stoller Something New Supporting Dir. Sanaa Hamri 7/10 Split Featured Dir. Tommy Reid TELEVISION Coming to Hollywood Guest Star Dir. Phyllis Thinkii Dear White People Guest Star Netflix In The Flow Affion Crocket Co-Star FOX Nick Swardson’s Pretend Co-Star Comedy Central TANISHA HARPER The Wanda Sykes Show Co-Star FOX Supreme Courtships Co-Star FOX email [email protected] Desire Series Regular FOX/My Network TV cell 310.985.1380 The Bold & The Beautiful Guest Star CBS web www.tanishaharper.com Models of the Runway Self Lifetime instagram @tanishaharper Project Runway Self Lifetime CONTACT COMMERCIALS *Complete list available on request Manager Sketchers Principal Tim Kendell Marcus Niska Juvéderm Hero Giovanni Messner [email protected] Optimum Amla Hero Unknown Lexus IS Hero Anthony Mandler NTA Talent Agency Bud Light Principal Unknown [email protected] Lexus RX Principal Ace Norton 323.969.0113 American Laser Skin Care Principal Unknown J.C. Penney Principal Saatchi & Saatchi MEASUREMENTS HOSTING Height 5’9” / 175cm Weight 125lbs / 57kg Posh Beauty Host poshbeauty.com Hair brown Loreal Style Studio Host Lifetime Network Eyes brown The Look TV Host The Look TV Electric Catwalk Host Cinema Electric SPECIAL SKILLS MUSIC VIDEOS SPORTS Runner (sprint), surfing, tennis, horseback Big Bang "Made" Featured Dir. Dikayl Rimmasch riding, mountain climbing, bicycling, ice 50 Cent "Get Up" Lead Dir. Brothers Strausse skating, rollerblading, skateboarding, Chris Brown "Yeah 3x" Featured Dir. Jamar Hawkins swimming, snowboarding, skiing, boxing, Snoop Dog "Peaches & Cream" Lead Dir. -

Thursday 22.12. Saturday 24.12. Friday 23.12. Sunday 25.12

HELSINKI TIMES TV GUIDE 22 DECEMBER 2011 – 4 JANUARY 2012 23 Helsinki Times TV Guide offers a selection of English and other language broadcasting on Finnish television. thursday 22.12. friday 23.12. TV1 MTV3 NELONEN TV1 MTV3 NELONEN 09:30 Heartbeat 10:05 The Young and the 09:30 Heartbeat 10:05 The Young and the 11:05 YLE News in English Restless 11:05 YLE News in English Restless 11:10 Holby City 11:00 Emmerdale 11:10 Holby City 11:00 Emmerdale 12:10 Coronation Street 13:00 Design Inc. 12:10 Coronation Street 13:00 ER 17:10 Heartbeat 14:05 Kath & Kim 17:10 Heartbeat 14:05 Rita Rocks 19:00 As the Time Goes By 17:00 The Bold and the Beautiful 23:05 Charles at 60- The 14:35 Freaks and Geeks 22:45 Les Enfants FILM 18:00 Emmerdale Passionate Prince DOC 17:00 The Bold and the Beautiful Directed by: Christian 21:00 Mentalist (K13) Prince Charles at home, 18:00 Emmerdale Vincent. Starring: Gérard 22:35 Modern Family abroad, at work and on duty. 22:45 Slap Shot (K15) FILM Blue Bloods Spy Hard Lanvin. France/2005. 23:05 Death Wish 2 (K18) FILM The revealing film goes behind 01:00 Sensing Murder Nelonen 22:00 Nelonen 21:00 In French. the closed doors of his world. SUB 09:00 James Martin: Sweet SUB 09:00 James Martin: Sweet TV2 09:30 A Baby Story TV2 09:30 A Baby Story 07:10 Capri 10:00 Bulging Brides 15:30 Marienhof 10:00 Bulging Brides 06:50 Children’s Programming Three episodes. -

Fourth Global Meet on Rhetoric to Begin Today

MEMBERS OF MOCK FIRE OVER 60 INDIAN DRILLS AT TAKE PART IN FORUM ASHGHAL ASTER BLOOD PLEDGE TO TOWERS, DONATION DONATE MOVENPICK DRIVE IN ORGANS AL RAYYAN PAGE 14 | DATELINE DOHA PAGE 15 | METRO MUSINGS PAGE 15 | METRO MUSINGS Weather Today PRAYER TIMING Fajr: 4:59 am Dhuhr: 11:42 am DUSTY & CLOUDY Asr: 2:43 pm Maghrib: 5:03 pm Sunrise 6:21 am Isha: 6:33 pm Sunset 5:03 pm High 20ºC Low 16ºC Wind 40 kts Visibility moderate Pressure 1013 mb Rel. humidity 40% Friday, January 11, 2013 RECOGNITION OF EXCELLENCE Deputy PM inaugurates HMC patient workshop on Darfur peace care initiative QNA DOHA gets a boost DEPUTY Prime Minister and Minister of State for Cabinet TRIBUNE NEWS NETWORK Director of Medical Affairs HE Ahmed bin DOHA Education and program Abdullah al Mahmoud on leader Dr Abdullatif al Khal Thursday opened a four-day PATIENT care improve- expressed his appreciation workshop on Darfur peace in ment activities led by health- for the support given to the Doha. care teams at Hamad programme by senior corpo- The workshop is being Medical Corporation (HMC) rate leaders, hospital med- organised by the Mediation have received a boost with ical directors, chairpersons Support Team of the United the Clinical Care and heads of clinical depart- Nations-African Union Improvement Training ments. “Quality is a never- Mission in Darfur (UNAMID) Programme (CCITP), which ending journey and we are for Sudan’s Justice and ca help HMC clinicians to grateful for the corporation- Equality Movement (JEM). execute clinical care wide support to our efforts to The delegation of the Minister of Culture, Arts and Heritage HE Dr Hamad bin Abdulaziz al Kuwari presents an award to Qatari artiste Hassan improvement projects. -

The Cable Network in an Era of Digital Media: Bravo and the Constraints of Consumer Citizenship

University of Massachusetts Amherst ScholarWorks@UMass Amherst Doctoral Dissertations Dissertations and Theses Fall August 2014 The Cable Network in an Era of Digital Media: Bravo and the Constraints of Consumer Citizenship Alison D. Brzenchek University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/dissertations_2 Part of the Communication Technology and New Media Commons, Critical and Cultural Studies Commons, Cultural History Commons, Feminist, Gender, and Sexuality Studies Commons, Film and Media Studies Commons, History of Science, Technology, and Medicine Commons, and the Political Economy Commons Recommended Citation Brzenchek, Alison D., "The Cable Network in an Era of Digital Media: Bravo and the Constraints of Consumer Citizenship" (2014). Doctoral Dissertations. 55. https://doi.org/10.7275/bjgn-vg94 https://scholarworks.umass.edu/dissertations_2/55 This Open Access Dissertation is brought to you for free and open access by the Dissertations and Theses at ScholarWorks@UMass Amherst. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. THE CABLE NETWORK IN AN ERA OF DIGITAL MEDIA: BRAVO AND THE CONSTRAINTS OF CONSUMER CITIZENSHIP A Dissertation Presented by ALISON D. BRZENCHEK Submitted to the Graduate School of the University of Massachusetts Amherst in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2014 Department -

Groups Split on Prop 8 Repeal Time

THE VOICE OF CHICAGO’S GAY, LESBIAN, BI AND TRANS COMMUNITY SINCE 1985 Aug. 19, 2009 • vol 24 no 46 www.WindyCityMediaGroup.com Groups split on Prop 8 repeal time BY REX WOCKNER “There’s no question that the community is, EQCA Executive Director Geoff Kors said that Clinton Rues you know, not unified behind one position and “if (other) people want to move forward with DOMA, DADT page 4 Equality California said Aug. 12 that it does not we really feel that we ... owe the LGBT commu- 2010, they’re welcome to it.” support returning to the ballot to try to repeal nity and our allies our best analysis,” Solomon “It’s a democracy and a free country,” Kors Proposition 8 until 2012. said. “We’d be leading people down a path that I said. “If something qualifies, we will support it Other groups are preparing for a 2010 ballot don’t feel comfortable leading them down (if we (but) we think we have one shot over these next fight. They include the Courage Campaign, Love supported 2010). It’s our job to say, ‘We think elections. ... We’ve come to a different conclu- Honor Cherish, Los Angeles’ Stonewall Demo- this 38-month path is the right path.’” sion than other organizations. ... We’re going to cratic Club and at least 40 other organizations. Solomon said the next ballot fight will cost do this right and smart and strategically.” “Donors want to make sure their investments “$40 million to $60 million.” Meanwhile, the Courage Campaign announced to win back marriage are wisely invested,” EQCA “Californians have been static on the issue of Aug. -

'Project Runway's' New Catwalk

Tuesday, August 18, 2009. 'Project Runway's' new catwalk: Hit reality show moves over to Lifetime, L.A. for its sixth season. Mekeisha Madden Toby / Detroit News Television Writer. For far too long, Lifetime has been viewed as the victimized women's network. That should change Thursday when Lifetime fights back with its biggest acquisition ever -- "Project Runway" -- which will strut out with a highly buzzed-about sixth season packed with location and production changes. Lifetime bought the rights more than a year ago, but NBC, which owns Bravo, fought the purchase in court until a resolution was reached this past spring. While some fans are grumbling about the network switch, critics are hailing it as a long-overdue move. "I think it's a perfect fit," says Elayne Rapping, a pop culture expert, author and professor who teaches television and society courses at the University at Buffalo-SUNY. "Lifetime is known as a women's channel -- Bravo still seems arty. Women watch these kinds of shows, and Lifetime has a brand name connotation, especially for older women who have watched Lifetime for years." A new basic-cable home isn't the only change of note. The sixth season will take place in Los Angeles instead of New York for the first time in the show's history, a move made to accommodate the familial needs of co-host and supermodel Heidi Klum. Like the switch from Bravo to Lifetime, fans have bemoaned the relocation in countless blogs all over the Internet. -

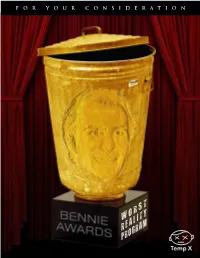

Worst Reality Program

FOR YOUR CONSIDERATION: WORST REALITY PROGRAM

| Program Title | Logline | Network |
| --- | --- | --- |
| Billy the Exterminator | The Vexcon pest removal team helps to rid clients of their extreme pest cases. | A&E |
| Criss Angel: Mindfreak | Performance series featuring Criss Angel, a master illusionist known for his spontaneous street performances and public shows. | A&E |
| Dog the Bounty Hunter | Reality series which focuses on bounty hunter Duane "Dog" Chapman, his professional life as well as the home life he shares with his wife and their 12 children. | A&E |
| Gene Simmons Family Jewels | A docusoap following the family life of Gene Simmons, which includes his longtime girlfriend, Shannon Tweed, a former Playmate of the Year, and their two children. | A&E |
| Growing Up Twisted | Reality show following rock star Dee Snider along with his wife and three children. | A&E |
| Hoarders | Reality show that follows individuals whose lives have been affected by their need to hoard things. | A&E |
| Intervention | Reality series in which the friends and relatives of an individual dealing with serious problems or addictions come together to set up an intervention for the person in the hopes of getting his or her life back on track. | A&E |
| Kirstie Alley's Big Life | Reality show that follows actress Kirstie Alley as she struggles with weight loss and raises her two daughters as a single mom. | A&E |
| Manhunters: Fugitive Task Force | Documentary reality series that follows a Manhattan-based federal task force that tracks down fugitives in the Tri-state area. | A&E |
| Obsessed | Individuals with severe anxiety, compulsions, panic attacks and phobias are given the chance to work with a therapist in order to help work through their disorder. | A&E |

D7.2.1 / Annotated Corpus – Initial Version

D7.2.1 / Annotated Corpus – Initial Version. FP7-ICT Strategic Targeted Research Project PHEME (No. 611233): Computing Veracity Across Media, Languages, and Social Networks. Anna Kolliakou, King’s College London; Michael Ball, King’s College London; Rob Stewart, King’s College London. Abstract: FP7-ICT Strategic Targeted Research Project PHEME (No. 611233), Deliverable D7.2.1 (WP 7). This deliverable describes the creation and annotation of two corpora of tweets on legal highs and prescribed medication. It provides a short summary of the aims and objectives of WP7, a demonstration work package for the PHEME project, and its demonstration studies. The development of the corpora is presented for each of the two case studies together with a detailed methodology of their annotation. Lastly, both corpora with their corresponding manually-annotated codes are provided. The aim of this deliverable is to describe the initial version of the development and annotation of two corpora of tweets corresponding to the first two demonstration studies of WP7. Keyword list: tweets, corpora, annotation, mephedrone, paliperidone, pregabalin, clozapine. Nature: Report. Dissemination: RE. Contractual date of delivery: 28.02.15. Actual date of delivery: Reviewed By: Web links: PHEME Consortium: This document is part of the PHEME research project (No. 611233), partially funded by the FP7-ICT Programme. University of Sheffield Universitaet -

Shooter Has Violent History Cally Always a Falling One

ISSUE 27 (157) • 8 – 14 JULY 2010 • €3 • WWW.HELSINKITIMES.FI SUMMER GUIDE BUSINESS LIFESTYLE EAT & DRINK Special Kiviniemi Summer New summer focuses snow Persian section on economy adventure cuisine pages 11-13 page 8 page 14 page 16 LEHTIKUVA / JUSSI NUKARI Did you Finland says know this yes to nuclear about Finnish? power reactors ALLAN BAIN ALLAN BAIN HELSINKI TIMES HELSINKI TIMES ON WHICH syllable/s words are em- THE FINNISH parliament has voted phasised and how sentences are in- to accept the Government’s propos- toned are fundamental elements of al for the building of two new nucle- any language. Yet, when Finnish is ar power reactors. discussed such issues as the seem- On the table were two proposed ingly infinite number of noun and permits, Teollisuuden Voima’s (TVO) adjective groups or the differenc- and Fennovoima’s. The first was ac- es between the spoken and written cepted with a 121-72 majority while language come up at the expense of the second was only marginally less emphasis and intonation. popular: it was supported 120-72. This is notable because the stress The vote was a highly conten- and intonation of the Finnish lan- tious one, splitting different polit- guage are relatively unique. Emphasis ical parties, members of individual is placed on the first syllable of rough- parties themselves, and Finland’s ly 99.999 per cent of every Finnish citizenry. The vote, however, was word spoken, something one would a rough approximation of senti- Police moves a victim’s body at the crime scene in Porvoo after a shooting on early Tuesday morning. -

Bridging the Gap: Intergenerational Relations in P.E.O.; P.E.O.s Making a Difference

January—february 10 Bridging the Gap: Intergenerational P.E.O.s Making Relations in P.E.O. a Difference in Afghanistan, the Arctic and at home The P.E.O. Record JANUARY–FEBRUARYPhilanthropic Educational 2010 Organization1 officers ofINTERNATIONAL CHAPTER President Elizabeth E. Garrels Finance Committee 2257 235th St., Mount Pleasant, IA 52641-8582 Chairman, Kathie Herkelmann, 5572 N Adams Way, Bloomfield Hills, MI 48302 First Vice President Susan Reese Sellers 12014 Flintstone Dr., Houston, TX 77070-2715 Nancy Martin, 1111 Army Navy Dr. #801, Arlington, VA 22202-2032 Alix Smith, 9055 E Kalil Dr., Scottsdale, AZ 85260-6835 Second Vice President Maria T. Baseggio Audit Committee 173 Canterbury Ln., Blue Bell, PA 19422-1278 Chairman, Kathie Herkelmann, 5572 N Adams Way, Bloomfield Organizer Beth Ledbetter Hills, MI 48302 910 Tucker Hollow Rd. W, Fall Branch, TN 37656-3622 Nancy Martin, 1111 Army Navy Dr. #801, Arlington, VA 22202-2032 Alix Smith, 9055 E Kalil Dr., Scottsdale, AZ 85260-6835 Recording Secretary Sue Baker 1961 Howland-Wilson Rd. NE, Warren, OH 44484-3918 Study and Research Committee Chairman, Kay Duffield, 1919 Syringa Dr., Missoula, MT 59803 Standing Appointments Vice Chairman, Mary Stroh, 4721 Woodwind Way, Virginia Beach, Administrative Staff VA 23455-4770 Chief Executive Officer Anne Pettygrove Barbara Rosi, 39W600 Oak Shadows Ln., Saint Charles, IL 60175-6983 [email protected] Elizabeth McFarland, 3924 Los Robles Dr., Plano, TX 75074-3831 Director of Finance/Treasurer Kathy A. Soppe Libby Stucky, 7121 Eastridge Dr., Apex, NC 27539-9745 [email protected] Leann Drullinger, 314 S Jeffers, North Platte, NE 69101-5349 Director of Communications/Historian Joyce C. -

Brighten Up

s s FASHION: CFDA and QVC MEN’S: s EYE: team up for Fashion Targets The link Partying Breast Cancer, page 3. between for Calvin rising Klein and underwear Charlotte s sales and BEAUTY: Chanel opens Ronson, the economy, new space at Selfridges page 4. page 12. in London, page 12. Women’s Wear Daily • The Retailers’ Daily Newspaper • August 24, 2009 • $3.00 WwDMONAccessories/Innerwear/LegweardAY Brighten Up Legwear manufacturers crank up the wattage this spring, WILLIAMS t offering plenty of splashy florals, tie-dyes and kaleidoscopic UR o patterns. Here, Me Moi’s nylon and spandex tights with C by Tigerlily’s swimsuit. For more, see pages 6 and 7. LED ty S; S t ER ob : ELISSA R t AN t N ASSIS Adapting for Survival: o I h RICK; FAS t A Vendors Redoing Model p : SUSANNA t For Era of ‘New Normal’ AN t ASSIS By WWD Staff Get ready for the Post- photo ICS/ Recession Era. t SME Even as vendors and retailers continue o to struggle with nonshopping consumers, R MAC C declining sales and a near-term outlook that o F to remains uncertain, the outlines of the world beyond the economic downturn are coming DE FAC t A into focus. And the result can be summed up in phy one phrase: Less will be more. The NPD Group estimates that 12 percent of vendors will not survive the recession and WILLIAM MUR by nearly 20 percent will abandon expansion p strategies and retrench, focusing on their core ; MAKEU products and markets.