The Impact of Design and Code Reviews on Software Quality: an Empirical Study Based on PSP Data

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Writing Quality Software

About this white paper: This white paper was written by David C. Young, an employee of General Dynamics Information Technology (GDIT). Dr. Young is part of a team of GDIT employees who maintain and support high performance computing systems at the Alabama Supercomputer Center (ASC). This was written in 2020. This paper is written for people who want to write good software, but don’t have a master’s degree in software architecture (or someone managing the project who does). Much of what is here would be covered in a software development practices class, often taught at the master’s degree level. Writing quality software is not only about the satisfaction of a job well done. It also reflects on you and your professional reputation amongst your peers. In some cases writing quality software can be a factor in getting a job, losing a job, or even life or death. Furthermore, writing quality software should be considered an implicit requirement in every software development project. If the intended useful life of the software is many years, that is yet another reason to do a good job writing it. Introduction: Consider this situation, which is all too common. You have written a really neat piece of software. You put it out on GitHub, then tell your colleagues about it. Soon you are bombarded with a series of complaints from people who tried to install and use your software. Some of those complaints might be: • It won’t install on their version of Linux. • They did the same thing you reported, but got a different answer.

Studying the Feasibility and Importance of Software Testing: an Analysis

Dr. S.S.Riaz Ahamed / International Journal of Engineering Science and Technology Vol.1(3), 2009, 119-128. Dr. S.S.Riaz Ahamed, Principal, Sathak Institute of Technology, Ramanathapuram, India. Email: [email protected], [email protected] ABSTRACT Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. Software testing is the process of testing the functionality and correctness of software by running it. Software testing is usually performed for one of two reasons: defect detection and reliability estimation. The problem of applying software testing to defect detection is that testing can only suggest the presence of flaws, not their absence (unless the testing is exhaustive). The problem of applying software testing to reliability estimation is that the input distribution used for selecting test cases may be flawed. The key to software testing is trying to find the modes of failure, something that requires exhaustively testing the code on all possible inputs. Software testing, depending on the testing method employed, can be implemented at any time in the development process. Keywords: verification and validation (V & V) 1 INTRODUCTION Testing is a set of activities that can be planned ahead and conducted systematically. The main objective of testing is to find an error by executing a program. A further objective is to check whether the designed software meets the customer specification. Testing should fulfill the following criteria: • Tests should begin at the module level and work “outward” toward the integration of the entire computer-based system.

Design Review Procedure

IDM UID: 2832CF. Version created on / version / status: 30 Nov 2012 / 3.1 / Approved. External reference: MQP Procedure. Design Review Procedure: This document describes how to conduct IO Design Reviews on ITER Systems. It is applicable to all the Conceptual, Preliminary and Final Design Reviews performed by IO on the ITER Project. Approval process (name, action, affiliation). Author: Guigon A., 30-Nov-2012: signed, IO/DG/DIP/CIE/TI/SYSA. Reviewers: Alejaldre C., 19-Dec-2012: recommended, IO/DG/SQS; Bora D., 04-Dec-2012: recommended; Chiocchio S., 03-Dec-2012: recommended, IO/DG/DIP/CIE/TI; Haange R., 19-Dec-2012: recommended, IO/DG/DIP; Hawryluk R., 04-Dec-2012: recommended, IO/DG/ADM; Kondoh M., 12-Dec-2012: recommended, IO/DG/DIP/CIE; Sands D., 19-Dec-2012: recommended, IO/DG/SQS/QA; Shirao T., 19-Dec-2012: recommended, IO/DG/ODG; Bak J.-S., 18-Dec-2012: recommended, IO/DG/DIP; Alekseev A., 03-Dec-2012: recommended, IO/DG/DIP/TKM; Merola M., 03-Dec-2012: recommended, IO/DG/DIP/TKM/INC. Approver: Motojima O., 04-Jan-2013: approved, IO/DG. Document Security: level 1 (IO unclassified). RO: Croset Jean-Philippe. Read Access: LG: CODAC team, LG: Blanket add right persons, LG: IO Cryogenic Section All, GG: In-kind Management Administration, GG: TBM Committee, LG: IN DA cryogenic collaborator, LG: PBS32 JA, LG: [DOC] Baseline Managers, LG: PBS48 EXT, LG: Cryogenic IN DA PT, LG: Code & Standards external experts, LG: JADA_RH, LG: CDR reviewers, LG: Kraftanlagen, LG: Design Office, LG: SES DR Team, GG: TBM_IM_Teams, LG: FST/TBM staff, LG: IO PA project team, LG: DA PA project team, LG: IO TRO, LG: heatings,

Optimization of Software Quality Using Management and Technical Review Techniques

International Journal of Computer Trends and Technology (IJCTT) – volume 17 Number 6 Nov 2014. Inibehe Emmanuel Akpannah, Post Graduate Student (MSc. Information Technology), SRM University, Chennai, India. Abstract: Optimizing the quality of software is a function of the degree of reviews made during the early life of a software development process. Reviews detect errors and potential errors early in the software development process. The errors detected during review early in the software life cycle are the least expensive to correct. Efficient involvement in software inspections and technical reviews helps developers improve their own skills, thereby mitigating the occurrence of errors in the later stages of the software development process. The ideas gathered in this paper indicate that a properly implemented program of technical and management reviews drastically reduces the time as well as the cost required for testing, debugging, and reworking, and dramatically improves the quality of the resulting product. From the introduction: compromising the security of the entire system. Improving the quality of our software process leads to minimal rework, cost effectiveness and also meeting up with schedules, which all lead to improvement in capability measurement. Software review is a chain process; it is dynamic and needs to be performed at every phase of the development process until there is a transition to the testing phase. Software design and code review is a phase in the software development process in which programmers (authors of codes), the quality assurance (QA) team, and peer reviewers get together to review design and code [9]. One way of optimizing the quality of software is finding and correcting errors at the early stage of a software development

Reliability: Software Software Vs

SENG 521 Software Reliability & Software Quality. Chapter 5: Overview of Software Reliability Engineering. Department of Electrical & Computer Engineering, University of Calgary. B.H. Far ([email protected]) http://www.enel.ucalgary.ca/People/far/Lectures/SENG521/ Reliability Theory: Reliability theory developed apart from the mainstream of probability and statistics, and was used primarily as a tool to help nineteenth century maritime and life insurance companies compute profitable rates to charge their customers. Even today, the terms “failure rate” and “hazard rate” are often used interchangeably. Probability of survival of merchandise after one MTTF is $R = e^{-1} = 0.37$. From Engineering Statistics Handbook. Reliability: Natural System: Natural system life cycle. Aging effect: life span of a natural system is limited by the maximum reproduction rate of the cells. Figure from Pressman’s book. Reliability: Hardware: Hardware life cycle. Useful life span of a hardware system is limited by the age (wear out) of the system. Figure from Pressman’s book. Reliability: Software: Software life cycle. Software systems are changed (updated) many times during their life cycle. Each update adds to the structural deterioration of the software system. Figure from Pressman’s book. Software vs. Hardware: Software reliability doesn’t decrease with time, i.e., software doesn’t wear out. Hardware faults are mostly physical faults, e.g., fatigue. Software faults are mostly design faults, which are harder to measure, model, detect and correct.
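The survival figure quoted in the excerpt above (about 0.37 after one MTTF) can be checked with a few lines of Python. This is a minimal sketch that assumes a constant failure rate, i.e. the exponential model R(t) = exp(-t / MTTF); the excerpt does not name the model, and the function name `reliability` is purely illustrative.

```python
import math


def reliability(t: float, mttf: float) -> float:
    """Survival probability at time t, assuming a constant failure rate.

    Assumption (not stated in the excerpt): exponential model,
    R(t) = exp(-t / MTTF), where 1 / MTTF is the failure rate.
    """
    if mttf <= 0:
        raise ValueError("mttf must be positive")
    return math.exp(-t / mttf)


# After exactly one MTTF the exponent is -1, whatever the time unit.
r_at_one_mttf = reliability(t=100.0, mttf=100.0)
print(round(r_at_one_mttf, 2))  # 0.37
```

Under this assumption, only about 37% of units are expected to survive to the mean time to failure, which is why MTTF should not be read as a typical lifetime.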

Parasoft dotTEST: Reduce the Risk of .NET Development

Parasoft dotTEST: REDUCE THE RISK OF .NET DEVELOPMENT. TRY IT: https://software.parasoft.com/dottest Complement your existing Visual Studio tools with deep static analysis and advanced coverage. INCREASE PROGRAMMING EFFICIENCY: • Identify runtime bugs without executing your software • Automate unit and component testing for instant verification and regression testing • Automate code analysis for compliance. An automated, non-invasive solution that scans the application codebase to identify issues before they become production problems, Parasoft dotTEST integrates into the Parasoft portfolio, helping you achieve compliance in safety-critical industries. Parasoft dotTEST automates a broad range of software quality practices, including static code analysis, unit testing, code review, and coverage analysis, enabling organizations to reduce risks and boost efficiency. Tests can be run directly from Visual Studio or as part of an automated process. To promote rapid remediation, each problem detected is prioritized based on configurable severity assignments, automatically assigned to the developer who wrote the related code, and distributed to his or her IDE with direct links to the problematic code and a description of how to fix it. When you send the results of dotTEST’s static analysis, coverage, and test traceability into Parasoft’s reporting and analytics platform (DTP), they integrate with results from Parasoft Jtest and Parasoft C/C++test, allowing you to test your entire codebase and mitigate risks. It snaps right into Visual Studio as though it were part of the product and it greatly reduces errors by enforcing all your favorite rules. We have stuck to the MS Guidelines and we had to do almost no work at all to have dotTEST automate our code analysis and generate the grunt work part of the unit tests so that we could focus our attention on real test-driven development.

Design Review Principles and Practice

Design Review: Principles and Practice. Landscape Institute: Inspiring great places. Published in 2013 by the Design Council. Cover photo: Granary Square at King’s Cross, © John Sturrock. All photos by Design Council unless otherwise stated. Although every care has been taken in preparing this report, no responsibility or liability will be accepted by Design Council, its employees, agents or advisors for its accuracy or completeness. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, copied or transmitted without the prior written consent of the publisher except that the material may be photocopied for non-commercial purposes without permission from the publisher. Contents: Foreword. The purpose of this guidance document. Part One: Principles of Design Review. Chapter 1: The essentials of Design Review (What is Design Review?; Ten principles of Design Review). Chapter 2: The role of Design Review in the planning system (Design Review and national planning policy; How Design Review adds value). Chapter 3: Who benefits from Design Review? (Local authorities; Developers; Project design teams; Community groups). Chapter 4: Local and National Design Review (Local Design Review arrangements; National Design Review; Who reviews what?). Part Two: Design Review in practice. Chapter 5: Delivering the principles. Chapter 6: A robust Design Review process (Preparation; Review; Observers; Advice; Other practical matters). Useful contacts. Photo: William Gates Building, University of Cambridge, © RMJM. Foreword: Our standards of design can be so much higher.

Parasoft Static Application Security Testing (SAST) for .Net - C/C++ - Java Platform

Parasoft® dotTEST™ / Jtest (for Java) / C/C++test is an integrated Development Testing solution for automating a broad range of testing best practices proven to improve development team productivity and software quality. dotTEST / Jtest / C/C++test also integrates with Parasoft SOAtest as an option, which enables end-to-end functional and load testing for complex distributed applications and transactions. Capabilities Overview. STATIC ANALYSIS: ● Broad support for languages and standards: Security | C/C++ | Java | .NET | FDA | Safety-critical ● Static analysis tool industry leader since 1994 ● Simple out-of-the-box integration into your SDLC ● Prevent and expose defects via multiple analysis techniques ● Find and fix issues rapidly, with minimal disruption ● Integrated with Parasoft's suite of development testing capabilities, including unit testing, code coverage analysis, and code review. CODE COVERAGE ANALYSIS: ● Track coverage during unit test execution and merge the data with coverage captured during functional and manual testing in Parasoft Development Testing Platform to measure true test coverage ● Integrate coverage data with static analysis violations, unit testing results, and other testing practices in Parasoft Development Testing Platform for a complete view of the risk associated with your application ● Achieve test traceability to understand the impact of change, focus testing activities based on risk, and meet compliance

Rome Laboratory Reliability Engineer's Toolkit

Rome Laboratory Reliability Engineer's Toolkit, April 1993. An Application Oriented Guide for the Practicing Reliability Engineer. Systems Reliability Division, Rome Laboratory, Air Force Materiel Command (AFMC), 525 Brooks Rd., Griffiss AFB, NY 13441-4505. Quick Reference Application Index: How Do I...? • Understand the Principles of TQM • Understand Basic DoD R&M Policy and Procedures • Develop Quantitative Requirements: Reliability (R), Maintainability (M), Testability (T) • Tailor R&M Task Requirements • R&M Task Application/Priorities • Develop a Contract Data Requirements List • Specify Information To Be Included in Proposals • Evaluate Contractor Proposals • Specify Part Stress Derating • Determine the Limitations of Common Cooling Techniques • Understand Basic Parts Control • Identify Key R&M&T Topics for Evaluation at Design Reviews • Evaluate Contractor's Method of Managing Critical Items • Understand Design Concerns Associated with Dormant Conditions • Understand Basic SMT Design Issues • Evaluate Power Supply Reliability • Determine Part Failure Modes and Mechanisms • Evaluate Fiber Optic Reliability • Understand R&M&T Analysis Types and Purposes • Understand Reliability Prediction Methods • Understand Maintainability Prediction Methods • Understand Testability Analysis Methods • Evaluate a Reliability Prediction Report • Evaluate Existing Reliability Data • Evaluate a Maintainability/Testability Analysis Report • Evaluate a Failure

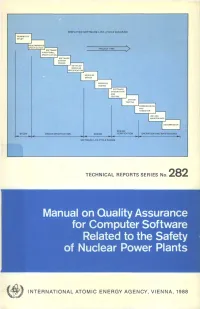

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

[Figure: Simplified software life-cycle diagram. Software life-cycle phases plotted against project time: feasibility study; software functional specification; software system design; detailed modules specification; modules design; software integration and testing; system testing; commissioning and handover; operation and maintenance; decommission. Also labelled: design specification, design verification.] TECHNICAL REPORTS SERIES No. 282. Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants. INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988. The following States are Members of the International Atomic Energy Agency: AFGHANISTAN, ALBANIA, ALGERIA, ARGENTINA, AUSTRALIA, AUSTRIA, BANGLADESH, BELGIUM, BOLIVIA, BRAZIL, BULGARIA, BURMA, BYELORUSSIAN SOVIET SOCIALIST REPUBLIC, CAMEROON, CANADA, CHILE, CHINA, COLOMBIA, COSTA RICA, COTE D'IVOIRE, CUBA, CYPRUS, CZECHOSLOVAKIA, DEMOCRATIC KAMPUCHEA, DEMOCRATIC PEOPLE'S REPUBLIC OF KOREA; GUATEMALA, HAITI, HOLY SEE, HUNGARY, ICELAND, INDIA, INDONESIA, IRAN, ISLAMIC REPUBLIC OF, IRAQ, IRELAND, ISRAEL, ITALY, JAMAICA, JAPAN, JORDAN, KENYA, KOREA, REPUBLIC OF, KUWAIT, LEBANON, LIBERIA, LIBYAN ARAB JAMAHIRIYA, LIECHTENSTEIN, LUXEMBOURG, MADAGASCAR, MALAYSIA, MALI, MAURITIUS; PARAGUAY, PERU, PHILIPPINES, POLAND, PORTUGAL, QATAR, ROMANIA, SAUDI ARABIA, SENEGAL, SIERRA LEONE, SINGAPORE, SOUTH AFRICA, SPAIN, SRI LANKA, SUDAN, SWEDEN, SWITZERLAND, SYRIAN ARAB REPUBLIC, THAILAND, TUNISIA, TURKEY, UGANDA, UKRAINIAN SOVIET SOCIALIST REPUBLIC, UNION OF SOVIET SOCIALIST REPUBLICS, UNITED ARAB

Devnet Module 3

Module 3: Software Development and Design (DEVASCv1). Module Objectives. Module Title: Software Development and Design. Module Objective: Use software development and design best practices. It comprises the following sections (topic title: topic objective): 3.1 Software Development: Compare software development methodologies. 3.2 Software Design Patterns: Describe the benefits of various software design patterns. 3.3 Version Control Systems: Implement software version control using Git. 3.4 Coding Basics: Explain coding best practices. 3.5 Code Review and Testing: Use Python Unit Test to evaluate code. 3.6 Understanding Data Formats: Use Python to parse different messaging and data formats. 3.1 Software Development. Introduction: The software development process is also known as the software development life cycle (SDLC). SDLC is more than just coding and also includes gathering requirements, creating a proof of concept, testing, and fixing bugs. Software Development Life Cycle (SDLC): SDLC is the process of developing software, starting from an idea and ending with delivery. This process consists of six phases. Each phase takes input from the results of the previous phase. Although the waterfall method is still widely used today, it's gradually being superseded by more adaptive, flexible methods that produce better software, faster, with less pain. These methods are collectively known as “Agile development.” Requirements and Analysis Phase: The requirements and analysis phase involves the product owner and qualified team members exploring the stakeholders' current situation, needs and constraints, present infrastructure, and so on, and determining the problem to be solved by the software.

Metrics for Gerrit Code Reviews

SPLST'15. Metrics for Gerrit code reviews. Samuel Lehtonen and Timo Poranen, University of Tampere, School of Information Sciences, Tampere, Finland. [email protected],[email protected] Abstract. Code reviews are a widely accepted best practice in modern software development. To enable easier and more agile code reviews, tools like Gerrit have been developed. Gerrit provides a framework for conducting reviews online, with no need for meetings or mailing lists. However, even with the help of tools like Gerrit, following and monitoring the review process becomes increasingly hard when tens or even hundreds of code changes are uploaded daily. To make monitoring the review process easier, we propose a set of metrics to be used with Gerrit code review. The focus is on providing insight into the velocity and quality of code reviews by measuring different review activities based on data automatically extracted from Gerrit. When automated, the measurements enable easy monitoring of code reviews, which helps in establishing new best practices and an improved review process. Keywords: Code quality; Code reviews; Gerrit; Metrics. 1 Introduction. Code reviews are a widely used quality assurance practice in software engineering, where developers read and assess each other's code before it is integrated into the codebase or deployed into production. The main motivations for reviews are to detect software defects and to improve code quality while sharing knowledge among developers. Reviews were originally introduced by Fagan [4] in the 1970s. The original, formal type of code inspection is still used in many companies, but has often been replaced with more modern types of reviews, where the review is not tied to place or time.
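As a rough illustration of what measuring review velocity from extracted data might look like, the sketch below computes three simple figures from a handful of change records. Everything here is an assumption made for illustration: the excerpt does not list the proposed metrics, the record fields (`uploaded`, `first_review`, `merged`, `patch_sets`) are hypothetical and are not Gerrit's actual schema, and the sample data is invented.

```python
from datetime import datetime
from statistics import mean, median

# Hypothetical change records; the field names are illustrative only
# and do not correspond to Gerrit's real data model.
changes = [
    {"id": "c1", "uploaded": datetime(2015, 3, 2, 9, 0),
     "first_review": datetime(2015, 3, 2, 13, 0),
     "merged": datetime(2015, 3, 3, 9, 0), "patch_sets": 2},
    {"id": "c2", "uploaded": datetime(2015, 3, 2, 10, 0),
     "first_review": datetime(2015, 3, 2, 12, 0),
     "merged": datetime(2015, 3, 2, 18, 0), "patch_sets": 1},
    {"id": "c3", "uploaded": datetime(2015, 3, 3, 8, 0),
     "first_review": datetime(2015, 3, 3, 14, 0),
     "merged": datetime(2015, 3, 5, 8, 0), "patch_sets": 3},
]


def hours_between(start: datetime, end: datetime) -> float:
    """Elapsed time between two timestamps, in hours."""
    return (end - start).total_seconds() / 3600.0


def review_metrics(records: list) -> dict:
    """Summarise review velocity and rework for a list of change records."""
    waits = [hours_between(r["uploaded"], r["first_review"]) for r in records]
    durations = [hours_between(r["uploaded"], r["merged"]) for r in records]
    return {
        "median_hours_to_first_review": median(waits),
        "median_hours_to_merge": median(durations),
        "mean_patch_sets": mean(r["patch_sets"] for r in records),
    }


metrics = review_metrics(changes)
print(metrics)
```

Medians are used for the time-based figures because a few long-running changes can otherwise dominate an average; that choice is also an assumption rather than something taken from the paper.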