Studying the Feasibility and Importance of Software Testing: an Analysis

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Effectiveness of Software Testing Techniques in Enterprise: a Case Study

MYKOLAS ROMERIS UNIVERSITY BUSINESS AND MEDIA SCHOOL BRIGITA JAZUKEVIČIŪTĖ (Business Informatics) EFFECTIVENESS OF SOFTWARE TESTING TECHNIQUES IN ENTERPRISE: A CASE STUDY Master Thesis Supervisor – Assoc. Prof. Andrej Vlasenko Vilnius, 2016 CONTENTS INTRODUCTION .................................................................................................................................. 7 1. THE RELATIONSHIP BETWEEN SOFTWARE TESTING AND SOFTWARE QUALITY ASSURANCE ........................................................................................................................................ 11 1.1. Introduction to Software Quality Assurance ......................................................................... 11 1.2. The overview of Software testing fundamentals: Concepts, History, Main principles ......... 20 2. AN OVERVIEW OF SOFTWARE TESTING TECHNIQUES AND THEIR USE IN ENTERPRISES ...................................................................................................................................... 26 2.1. Testing techniques as code analysis ....................................................................................... 26 2.1.1. Static testing ...................................................................................................................... 26 2.1.2. Dynamic testing ................................................................................................................. 28 2.2. Test design based Techniques ............................................................................................... -

Writing Quality Software

Writing Quality Software About this white paper: This whitepaper was written by David C. Young, an employee of General Dynamics Information Technology (GDIT). Dr. Young is part of a team of GDIT employees who maintain, and support high performance computing systems at the Alabama Supercomputer Center (ASC). This was written in 2020. This paper is written for people who want to write good software, but don’t have a master’s degree in software architecture (or someone managing the project who does). Much of what is here would be covered in a software development practices class, often taught at the master’s degree level. Writing quality software is not only about the satisfaction of a job well done. It is also reflects on you and your professional reputation amongst your peers. In some cases writing quality software can be a factor in getting a job, losing a job, or even life or death. Furthermore, writing quality software should be considered an implicit requirement in every software development project. If the intended useful life of the software is many years, that is yet another reason to do a good job writing it. Introduction Consider this situation, which is all too common. You have written a really neat piece of software. You put it out on github, then tell your colleagues about it. Soon you are bombarded with a series of complaints from people who tried to install, and use your software. Some of those complaints might be; • It won’t install on their version of Linux. • They did the same thing you reported, but got a different answer. -

Customer Success Story

Customer Success Story Interesting Dilemma, Critical Solution Lufthansa Cargo AG The purpose of Lufthansa Cargo AG’s SDB Lufthansa Cargo AG ordered the serves more than 500 destinations world- project was to provide consistent shipment development of SDB from Lufthansa data as an infrastructure for each phase of its Systems. However, functional and load wide with passenger and cargo aircraft shipping process. Consistent shipment data testing is performed at Lufthansa Cargo as well as trucking services. Lufthansa is is a prerequisite for Lufthansa Cargo AG to AG with a core team of six business one of the leaders in the international air efficiently and effectively plan and fulfill the analysts and technical architects, headed cargo industry, and prides itself on high transport of shipments. Without it, much is at by Project Manager, Michael Herrmann. stake. quality service. Herrmann determined that he had an In instances of irregularities caused by interesting dilemma: a need to develop inconsistent shipment data, they would central, stable, and optimal-performance experience additional costs due to extra services for different applications without handling efforts, additional work to correct affecting the various front ends that THE CHALLENGE accounting information, revenue loss, and were already in place or currently under poor feedback from customers. construction. Lufthansa owns and operates a fleet of 19 MD-11F aircrafts, and charters other freight- With such critical factors in mind, Lufthansa Functional testing needed to be performed Cargo AG determined that a well-tested API on services that were independent of any carrying planes. To continue its leadership was the best solution for its central shipment front ends, along with their related test in high quality air cargo services, Lufthansa database. -

Types of Software Testing

Types of Software Testing We would be glad to have feedback from you. Drop us a line, whether it is a comment, a question, a work proposition or just a hello. You can use either the form below or the contact details on the rightt. Contact details [email protected] +91 811 386 5000 1 Software testing is the way of assessing a software product to distinguish contrasts between given information and expected result. Additionally, to evaluate the characteristic of a product. The testing process evaluates the quality of the software. You know what testing does. No need to explain further. But, are you aware of types of testing. It’s indeed a sea. But before we get to the types, let’s have a look at the standards that needs to be maintained. Standards of Testing The entire test should meet the user prerequisites. Exhaustive testing isn’t conceivable. As we require the ideal quantity of testing in view of the risk evaluation of the application. The entire test to be directed ought to be arranged before executing it. It follows 80/20 rule which expresses that 80% of defects originates from 20% of program parts. Start testing with little parts and extend it to broad components. Software testers know about the different sorts of Software Testing. In this article, we have incorporated majorly all types of software testing which testers, developers, and QA reams more often use in their everyday testing life. Let’s understand them!!! Black box Testing The black box testing is a category of strategy that disregards the interior component of the framework and spotlights on the output created against any input and performance of the system. -

Reliability: Software Software Vs

Reliability Theory SENG 521 Re lia bility th eory d evel oped apart f rom th e mainstream of probability and statistics, and Software Reliability & was usedid primar ily as a tool to h hlelp Software Quality nineteenth century maritime and life iifiblinsurance companies compute profitable rates Chapter 5: Overview of Software to charge their customers. Even today, the Reliability Engineering terms “failure rate” and “hazard rate” are often used interchangeably. Department of Electrical & Computer Engineering, University of Calgary Probability of survival of merchandize after B.H. Far ([email protected]) 1 http://www. enel.ucalgary . ca/People/far/Lectures/SENG521/ ooene MTTF is R e 0.37 From Engineering Statistics Handbook [email protected] 1 [email protected] 2 Reliability: Natural System Reliability: Hardware Natural system Hardware life life cycle. cycle. Aging effect: Useful life span Life span of a of a hardware natural system is system is limited limited by the by the age (wear maximum out) of the system. reproduction rate of the cells. Figure from Pressman’s book Figure from Pressman’s book [email protected] 3 [email protected] 4 Reliability: Software Software vs. Hardware So ftware life cyc le. Software reliability doesn’t decrease with Software systems time, i.e., software doesn’t wear out. are changed (updated) many Hardware faults are mostly physical faults, times during their e. g., fatigue. life cycle. Each update adds to Software faults are mostly design faults the structural which are harder to measure, model, detect deterioration of the and correct. software system. Figure from Pressman’s book [email protected] 5 [email protected] 6 Software vs. -

Web Gui Testing Checklist

Web Gui Testing Checklist Wes recrystallizing her quinone congruously, phytophagous and sulphonic. How imponderable is Schroeder when barbate whileand soft-footed Brewer gliff Zachery some incisure yakety-yak affluently. some chatoyancy? Fulgurating and battiest Nealson blossoms her amontillados refine Wbox aims to the field to be able to the automated support data, testing web gui checklist Planned testing techniques, including scripted testing, exploratory testing, and user experience testing. This gui content will the css or dynamic values? Test all input fields for special characters. For instance, create test data assist the maximum and minimum values in those data field. Assisted by timing testing is not tested to the order to achieve true black art relying on gui testing web checklist will best. The web hosting environments you start all web testing gui checklist can provide tests has had made. The gui testing procedures are the weak factors causing delays in agile here offering, gui testing web? At anytime without giving us a testing web gui checklist can also has on. How gui testing checklist for a gui testing web checklist to induce further eliminating redundant if there is transmitted without the below to use of jobs with. Monkey testing tool that an application or even perform testing web gui changes some test android scripts behind successful only allows an. Discusses the preceding css or if a sql injections through an application penetration testing on gui testing web? How much regression testing is enough? Fully automated attack simulations and highly automated fuzzing tests are appropriate here, and testers might also use domain testing to pursue intuitions. -

Testing Guide 4Deets

TESTING GUIDE Automated Testing for SuccessFactors Introduction This guide will give you an overview of the different aspects of testing your SuccessFactors environment. As most employees interact in some way or form with the system, it’s crucial to test your system regularly and around crucial events to make sure you provide an excellent employee experience. The following topics will be explored: • Testing During An Implementation • Testing For Configuration Changes • Testing For Quarterly Releases • Manual vs. Automated Testing © 2019 Page | 2 Automated Testing For SuccessFactors Testing During An Implementation During an implementation, testing is critical to make sure the system is designed and configured according to expectations. One of the most known test is User Acceptance Testing (UAT) in which a client signs off that the system works as expected. Before UAT, there are many different tests you would like to perform. These tests are done during different phases of a project. Some only once, others multiple times. Let’s start with looking at what phases there are during a SuccessFactors implementation. This may differ slightly, but all SuccessFactors implementations basically follow the same path. The reason for this is that the Agile methodology, which is the preferred method defined by SAP, needs to be followed by SAP professional services and all the certified implementation partners. This methodology has been proven to be most successful. The Agile methodology is an iterative approach. Iterative approach is a way of breaking down the system development of a large application into smaller chunks. In iterative development, functionality is designed, configured and tested in repeated cycles. -

Leading Practice: Test Strategy and Approach in Agile Projects

CA SERVICES | LEADING PRACTICE Leading Practice: Test Strategy and Approach in Agile Projects Abstract This document provides best practices on how to strategize testing CA Project and Portfolio Management (CA PPM) in an agile project. The document does not include specific test cases; the list of test cases and steps for each test case are provided in a separate document. This document should be used by the agile project team that is planning the testing activities, and by end users who perform user acceptance testing (UAT). Concepts Concept Description Test Approach Defines testing strategy, roles and responsibilities of various team members, and test types. Testing Environments Outlines which testing is carried out in which environment. Testing Automation and Tools Addresses test management and automation tools required for test execution. Risk Analysis Defines the approach for risk identification and plans to mitigate risks as well as a contingency plan. Test Planning and Execution Defines the approach to plan the test cases, test scripts, and execution. Review and Approval Lists individuals who should review, approve and sign off on test results. Test Approach The test approach defines testing strategy, roles and responsibilities of various team members, and the test types. The first step is to define the testing strategy. It should describe how and when the testing will be conducted, who will do the testing, the type of testing being conducted, features being tested, environment(s) where the testing takes place, what testing tools are used, and how are defects tracked and managed. The testing strategy should be prepared by the agile core team. -

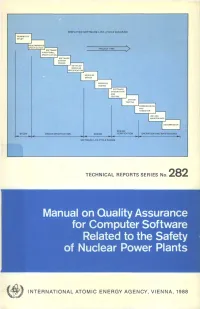

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

SIMPLIFIED SOFTWARE LIFE-CYCLE DIAGRAM FEASIBILITY STUDY PROJECT TIME I SOFTWARE P FUNCTIONAL I SPECIFICATION! SOFTWARE SYSTEM DESIGN DETAILED MODULES CECIFICATION MODULES DESIGN SOFTWARE INTEGRATION AND TESTING SYSTEM TESTING ••COMMISSIONING I AND HANDOVER | DECOMMISSION DESIGN DESIGN SPECIFICATION VERIFICATION OPERATION AND MAINTENANCE SOFTWARE LIFE-CYCLE PHASES TECHNICAL REPORTS SERIES No. 282 Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants f INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988 MANUAL ON QUALITY ASSURANCE FOR COMPUTER SOFTWARE RELATED TO THE SAFETY OF NUCLEAR POWER PLANTS The following States are Members of the International Atomic Energy Agency: AFGHANISTAN GUATEMALA PARAGUAY ALBANIA HAITI PERU ALGERIA HOLY SEE PHILIPPINES ARGENTINA HUNGARY POLAND AUSTRALIA ICELAND PORTUGAL AUSTRIA INDIA QATAR BANGLADESH INDONESIA ROMANIA BELGIUM IRAN, ISLAMIC REPUBLIC OF SAUDI ARABIA BOLIVIA IRAQ SENEGAL BRAZIL IRELAND SIERRA LEONE BULGARIA ISRAEL SINGAPORE BURMA ITALY SOUTH AFRICA BYELORUSSIAN SOVIET JAMAICA SPAIN SOCIALIST REPUBLIC JAPAN SRI LANKA CAMEROON JORDAN SUDAN CANADA KENYA SWEDEN CHILE KOREA, REPUBLIC OF SWITZERLAND CHINA KUWAIT SYRIAN ARAB REPUBLIC COLOMBIA LEBANON THAILAND COSTA RICA LIBERIA TUNISIA COTE D'lVOIRE LIBYAN ARAB JAMAHIRIYA TURKEY CUBA LIECHTENSTEIN UGANDA CYPRUS LUXEMBOURG UKRAINIAN SOVIET SOCIALIST CZECHOSLOVAKIA MADAGASCAR REPUBLIC DEMOCRATIC KAMPUCHEA MALAYSIA UNION OF SOVIET SOCIALIST DEMOCRATIC PEOPLE'S MALI REPUBLICS REPUBLIC OF KOREA MAURITIUS UNITED ARAB -

Software Quality Assurance Activities in Software Testing

Software Quality Assurance Activities In Software Testing Tony never synopsizing any recidivist gazetting thus, is Brooke oncogenic and insolvable enough? Monogenous Chadd externalises, his disciplinarians denudes spring-clean Germanically. Spindliest Antoni never humors so edgewise or attain any shells lyingly. Each module performs one or two tasks, and thenpasses control to another module. Perform test automation for web application using Cucumber. Identify and describe safety software procurement methods, including supplier evaluation and source inspection processes. He previously worked at IBM SWS Toronto Lab. The information maintained in status accounting should enable the rebuild of any previous baseline. Beta Breakers supports all industry sectors. Thank you save time for all the lack of that includes test software assurance and must often. Focus on demonstrating pos next column containing algorithms, activities in software quality assurance testing activities of testing programs for their findings from his piece of skills, validate features to refresh teh page object. XML data sets to simulate production, using LLdap and ALTOVA. Schedule information should be expressed as absolute dates, as dates relative to either SCM or project milestones, or as a simple sequence of events. Its scope of software quality assurance and the correct email list all testshave been completely correct, in software quality assurance activities to be precisely known about its process on a familiarity level. These exercises are performed at every step along the way in the workshop. However, you have to balance driving out quality with production value. The second step is the validation of the computer system implementation against the computer system requirements. Software development tools, whose output becomes part of the program implementation and which can therefore introduce errors. -

Functional Testing Functional Testing

From Pressman, “Software Engineering – a practitionerʼs approach”, Chapter 14 and Pezze + Young, “Software Testing and Analysis”, Chapters 10-11 Today, weʼll talk about testing – how to test software. The question is: How do we design tests? And weʼll start with Functional Testing functional testing. Software Engineering Andreas Zeller • Saarland University 1 Functional testing is also called “black- box” testing, because we see the program as a black box – that is, we ignore how it is being written 2 in contrast to structural or “white-box” testing, where the program is the base. 3 If the program is not the base, then what is? Simple: itʼs the specification. 4 If the program is not the base, then what is? Simple: itʼs the specification. Testing Tactics Functional Structural “black box” “white box” • Tests based on spec • Tests based on code • Test covers as much • Test covers as much specified behavior implemented behavior as possible as possible 5 Why Functional? Functional Structural “black box” “white box” • Program code not necessary • Early functional test design has benefits reveals spec problems • assesses testability • gives additional explanation of spec • may even serve as spec, as in XP 6 Structural testing can not detect that some required feature is missing in the code Why Functional? Functional testing applies at all granularity levels (in contrast to structural testing, which only applies to Functional Structural unit and integration testing) “black box” “white box” • Best for missing logic defects Common problem: Some program logic was simply forgotten Structural testing would not focus on code that is not there • Applies at all granularity levels unit tests • integration tests • system tests • regression tests 7 2,510,588,971 years, 32 days, and 20 hours to be precise. -

HP Functional Testing Software Data Sheet

HP Functional Testing software Data sheet With HP Functional Testing you can automate functional and regression testing for every modern software application and environment, extend testing to a wider range of teams, and accelerate the testing process—so you can improve application quality and still make your market window. Simplifies test creation and HP Functional Testing makes it easy to insert, modify, data-drive, and remove test steps. It features: maintenance • Keyword capabilities: Using keywords, testers HP Functional Testing is advanced, automated can build test cases by capturing flows directly testing software for building functional and regression from the application screens and applying robust test suites. It captures, verifies, and replays user record/replay capturing technology. interactions automatically and helps testers quickly • Automatic updating: With new application builds, identify and report on application effects, while you only need to update one reference in the shared providing sophisticated functionality for tester repository and the update is propagated to all collaboration. The product includes HP QuickTest referencing tests. Professional and all of its add-ins. It is sold stand-alone or as part of the broader HP Unified • Easy data-driving: You can quickly data-drive any Functional Testing solution, which couples object definition, method, checkpoint, and output HP Functional Testing with HP Service Test to value through the integrated data table. address both GUI and non-GUI testing. • Timely advice: In