International Control of Powerful Technology: Lessons from the Baruch Plan for Nuclear Weapons

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A New Effort to Achieve World

Marshall and the Atomic Bomb, by Frank Settle. General George C. Marshall and the Atomic Bomb (Praeger, 2016) provides the first full narrative describing General Marshall’s crucial role in the first decade of nuclear weapons that included the Manhattan Project, the use of the atomic bomb on Japan, and their management during the early years of the Cold War. Marshall is best known today as the architect of the plan for Europe’s recovery in the aftermath of World War II: the Marshall Plan. He also earned acclaim as the master strategist of the Allied victory in World War II. Marshall mobilized and equipped the Army and Air Force under a single command, serving as the primary conduit for information between the Army and the Air Force, as well as the president and secretary of war. As Army Chief of Staff during World War II, he developed a close working relationship with Admiral Ernest King, Chief of Naval Operations; worked with Congress and leaders of industry on funding and producing resources for the war; and developed and implemented the successful strategy the Allies pursued in fighting the war. Last but not least of his responsibilities was the production of the atomic bomb. The Beginnings: An early morning phone call to General Marshall and a letter to President Franklin Roosevelt led to Marshall’s little known, nonetheless critical, role in the development and use of the atomic bomb. The call, received at 3:00 a.m. on September 1, 1939, informed Marshall that German dive bombers had attacked Warsaw.

Revisiting One World Or None

Revisiting One World or None. Sixty years ago, atomic energy was new and the world was still reverberating from the shocks of the atomic bombings of Hiroshima and Nagasaki. Immediately after the end of the Second World War, the first instinct of the administration and Congress was to protect the “secret” of the atomic bomb. The atomic scientists (what today would be called nuclear physicists) who had worked on the Manhattan Project to develop atomic bombs recognized that there was no secret, that any technically advanced nation could, with adequate resources, reproduce what they had done. They further believed that when a single aircraft, eventually a single missile, armed with a single bomb could destroy an entire city, no defense system would ever be adequate. Given this combination, the world seemed headed for a period of unprecedented danger. Many of the scientists who had worked on the Manhattan Project felt a special responsibility to encourage a public debate about these new and terrifying products of modern science. They formed an organization, the Federation of Atomic Scientists, dedicated to avoiding the threat of nuclear weapons and nuclear proliferation. Convinced that neither guarding the nuclear “secret” nor any defense could keep the world safe, they encouraged international openness in nuclear physics research and in the expected nuclear power industry as the only way to avoid a disastrous arms race in nuclear weapons. William Higinbotham, the first Chairman of the Association of Los Alamos Scientists and the first Chairman of the Federation of Atomic Scientists, wanted to expand the organization beyond those who had worked on the Manhattan Project, for two reasons.

The Development of Military Nuclear Strategy And

The Development of Military Nuclear Strategy and Anglo-American Relations, 1939 – 1958. Submitted by Geoffrey Charles Mallett Skinner to the University of Exeter as a thesis for the degree of Doctor of Philosophy in History, July 2018. This thesis is available for Library use on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. I certify that all material in this thesis which is not my own work has been identified and that no material has previously been submitted and approved for the award of a degree by this or any other University. Abstract: There was no special governmental partnership between Britain and America during the Second World War in atomic affairs. A recalibration is required that updates and amends the existing historiography in this respect. The wartime atomic relations of those countries were cooperative at the level of science and resources, but rarely that of the state. As soon as it became apparent that fission weaponry would be the main basis of future military power, America decided to gain exclusive control over the weapon. Britain could not replicate American resources and no assistance was offered to it by its conventional ally. America then created its own, closed, nuclear system and well before the 1946 Atomic Energy Act, the event which is typically seen by historians as the explanation of the fracturing of wartime atomic relations. Immediately after 1945 there was insufficient systemic force to create change in the consistent American policy of atomic monopoly. As fusion bombs introduced a new magnitude of risk, and as the nuclear world expanded and deepened, the systemic pressures grew.

A Singing, Dancing Universe Jon Butterworth Enjoys a Celebration of Mathematics-Led Theoretical Physics

SPRING BOOKS COMMENT under physicist and Nobel laureate William Henry Bragg, studying small molecules such as tartaric acid. Moving to the University of Leeds, UK, in 1928, Astbury probed the structure of biological fibres such as hair. His colleague Florence Bell took the first X-ray diffraction photographs of DNA, leading to the “pile of pennies” model (W. T. Astbury and F. O. Bell Nature 141, 747–748; 1938). Her photos, plagued by technical limitations, were fuzzy. But in 1951, Astbury’s lab produced a gem, by the rarely mentioned Elwyn Beighton. […] to the intensely scrutinized narrative on the double helix itself, he clarifies key issues. He points out that the infamous conflict between Wilkins and chemist Rosalind Franklin arose from actions of John Randall, head of the biophysics unit at King’s College London. He implied to Franklin that she would take over Wilkins’ work on DNA, yet gave Wilkins the impression she would be his assistant. Wilkins conceded the DNA work to Franklin, and PhD student Raymond Gosling became her assistant. It was Gosling who, under Franklin’s […] discovery “may turn out to be the greatest development in the field of molecular genetics in recent years”. And, on occasion, the scope is too broad. The tragic figure of Nikolai Vavilov, the great Soviet plant geneticist of the early twentieth century who perished in the Gulag, features prominently, but I am not sure how relevant his research is here. Yet pulling such figures into the limelight is partly what distinguishes Williams’s book from others. What of those others? Franklin Portugal and Jack Cohen covered much the same


I. I. Rabi Papers [Finding Aid]. Library of Congress. [PDF Rendered Tue Apr

I. I. Rabi Papers: A Finding Aid to the Collection in the Library of Congress. Manuscript Division, Library of Congress, Washington, D.C. 1992. Revised 2010 March. Contact information: http://hdl.loc.gov/loc.mss/mss.contact Additional search options available at: http://hdl.loc.gov/loc.mss/eadmss.ms998009 LC Online Catalog record: http://lccn.loc.gov/mm89076467 Prepared by Joseph Sullivan with the assistance of Kathleen A. Kelly and John R. Monagle. Collection Summary. Title: I. I. Rabi Papers. Span Dates: 1899-1989. Bulk Dates: (bulk 1945-1968). ID No.: MSS76467. Creator: Rabi, I. I. (Isador Isaac), 1898-. Extent: 41,500 items; 105 cartons plus 1 oversize plus 4 classified; 42 linear feet. Language: Collection material in English. Location: Manuscript Division, Library of Congress, Washington, D.C. Summary: Physicist and educator. The collection documents Rabi's research in physics, particularly in the fields of radar and nuclear energy, leading to the development of lasers, atomic clocks, and magnetic resonance imaging (MRI) and to his 1944 Nobel Prize in physics; his work as a consultant to the atomic bomb project at Los Alamos Scientific Laboratory and as an advisor on science policy to the United States government, the United Nations, and the North Atlantic Treaty Organization during and after World War II; and his studies, research, and professorships in physics chiefly at Columbia University and also at Massachusetts Institute of Technology. Selected Search Terms: The following terms have been used to index the description of this collection in the Library's online catalog. They are grouped by name of person or organization, by subject or location, and by occupation and listed alphabetically therein.

Rethinking Atomic Diplomacy and the Origins of the Cold War

Journal of Political Science, Volume 29, Number 1, Article 5, November 2001. Rethinking Atomic Diplomacy and the Origins of the Cold War. Gregory Paul Domin. Follow this and additional works at: https://digitalcommons.coastal.edu/jops Part of the Political Science Commons. Recommended Citation: Domin, Gregory Paul (2001) "Rethinking Atomic Diplomacy and the Origins of the Cold War," Journal of Political Science: Vol. 29: No. 1, Article 5. Available at: https://digitalcommons.coastal.edu/jops/vol29/iss1/5 This Article is brought to you for free and open access by the Politics at CCU Digital Commons. It has been accepted for inclusion in Journal of Political Science by an authorized editor of CCU Digital Commons. For more information, please contact [email protected]. Rethinking Atomic Diplomacy and the Origins of the Cold War. Gregory Paul Domin, Mercer University. This paper argues that the conflict between nuclear nationalist and nuclear internationalist discourses over atomic weapons policy was critical in the articulation of a new system of American power. The creation of the atomic bomb destroyed Roosevelt's vision of a postwar world order while confronting United States (and Soviet) policymakers with alternatives that, before the bomb's use, only a handful of individuals had contemplated. The bomb remade the world. My argument is that the atomic bomb did not cause the Cold War, but without it, the Cold War could not have occurred. INTRODUCTION: The creation of the atomic bomb destroyed the Rooseveltian vision of a postwar world order while confronting United States (and Soviet) policymakers with a new set of alternatives that, prior to the bomb's use, only a bare handful of individuals among those privy to the secret of the Manhattan Project had even contemplated.

IAS Institute for Advanced Study

IAS Institute for Advanced Study. Faculty and Members 2012–2013. Contents: Mission and History; School of Historical Studies; School of Mathematics; School of Natural Sciences; School of Social Science; Program in Interdisciplinary Studies; Director’s Visitors; Artist-in-Residence Program; Trustees and Officers of the Board and of the Corporation; Administration; Past Directors and Faculty; Index. Information contained herein is current as of September 24, 2012. Mission and History: The Institute for Advanced Study is one of the world’s leading centers for theoretical research and intellectual inquiry. The Institute exists to encourage and support fundamental research in the sciences and humanities: the original, often speculative thinking that produces advances in knowledge that change the way we understand the world. It provides for the mentoring of scholars by Faculty, and it offers all who work there the freedom to undertake research that will make significant contributions in any of the broad range of fields in the sciences and humanities studied at the Institute. Founded in 1930 by Louis Bamberger and his sister Caroline Bamberger Fuld, the Institute was established through the vision of founding Director Abraham Flexner. Past Faculty have included Albert Einstein, who arrived in 1933 and remained at the Institute until his death in 1955, and other distinguished scientists and scholars such as Kurt Gödel, George F. Kennan, Erwin Panofsky, Homer A. Thompson, John von Neumann, and Hermann Weyl. Abraham Flexner was succeeded as Director in 1939 by Frank Aydelotte, followed by J.

UC San Diego UC San Diego Electronic Theses and Dissertations

UC San Diego, UC San Diego Electronic Theses and Dissertations. Title: The new prophet : Harold C. Urey, scientist, atheist, and defender of religion. Permalink: https://escholarship.org/uc/item/3j80v92j Author: Shindell, Matthew Benjamin. Publication Date: 2011. Peer reviewed | Thesis/dissertation. eScholarship.org, Powered by the California Digital Library, University of California. UNIVERSITY OF CALIFORNIA, SAN DIEGO. The New Prophet: Harold C. Urey, Scientist, Atheist, and Defender of Religion. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in History (Science Studies) by Matthew Benjamin Shindell. Committee in charge: Professor Naomi Oreskes, Chair; Professor Robert Edelman; Professor Martha Lampland; Professor Charles Thorpe; Professor Robert Westman. 2011. Copyright Matthew Benjamin Shindell, 2011. All rights reserved. The Dissertation of Matthew Benjamin Shindell is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, San Diego, 2011. TABLE OF CONTENTS: Signature Page; Table of Contents; Acknowledgements

16. A New Kind of First Lady: Eleanor Roosevelt in the White House

fdr4freedoms. 16. A New Kind of First Lady: Eleanor Roosevelt in the White House. [Photo caption:] Franklin D. Roosevelt and Eleanor Roosevelt at the dedication of a high school in their hometown of Hyde Park, New York, October 5, 1940. Having overcome the shyness that plagued her in youth, ER as First Lady displayed a great appetite for meeting people from all walks of life and learning about their lives and troubles. FDRL. Eleanor Roosevelt revolutionized the role of First Lady. No presidential wife had been so outspoken about the nation’s affairs or more hardworking in its causes. ER conveyed to the American people a deep concern for their welfare. In all their diversity, Americans loved her in return. When she could have remained aloof or adopted the ceremonial role traditional for First Ladies, ER chose to immerse herself in all the challenges of the 1930s and ’40s. She dedicated her tireless spirit to bolstering Americans against the fear and despair inflicted by the Great Depression and World War II. […] writers; corresponded with thousands of citizens; and served as the administration’s racial conscience. Although polls at the time of her death in 1962 revealed ER to be one of the most admired women in the world, not everyone liked her, especially during her husband’s presidency, when those who differed with her strongly held progressive beliefs reviled ER as too forward for a First Lady. She did not shy away from controversy. Her support of a West Virginia Subsistence Homestead community spurred debate over the value of communal housing and rural development. By

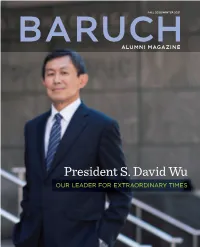

President S. David Wu

FALL 2020/WINTER 2021 BARUCH ALUMNI MAGAZINE. President S. David Wu: OUR LEADER FOR EXTRAORDINARY TIMES. MESSAGE FROM THE PRESIDENT: Dear Baruch Alumni, I feel incredibly honored and privileged to join the Baruch community at a pivotal moment in the College’s history. As the nation grapples with the pandemic, the resulting economic damage, and the reckoning to end systemic racism, the entire Baruch community unified and overcame unimaginable challenges to continue core College operations and deliver distance learning while supporting our students to keep pace with their education. I have been impressed not only with Baruch’s remarkable, resilient students, faculty, and staff but with the alumni community. Your welcome has been genuine and heartfelt, your connection to your alma mater strong, and your appreciation for the value and impact of your Baruch education has been an inspiration for me. My first 100 days were illuminating and productive. IN THIS ISSUE: CAMPUS WELCOME. A Q&A with President S. David Wu: His Vision for Baruch College and Public Higher Education in the U.S. Baruch’s new president, S. David Wu, PhD, talks personal history, first impressions, and bold initiatives, which include reimagining college education, Baruch style, in the new normal. Says President Wu, “Baruch shows what is possible at a time when our country desperately needs a more robust and more inclusive system of public higher education.” Baruch Alumni Magazine: Cheryl de Jong–Lambert, Director of Communications. EDITOR IN CHIEF: Diane Harrigan. SENIOR EDITOR: Gregory M. Leporati. GRAPHIC DESIGN: Vanguard. OFFICE OF ALUMNI RELATIONS AND VOLUNTEER ENGAGEMENT

President Harry S Truman's Office Files, 1945–1953

A Guide to the Microfilm Edition of RESEARCH COLLECTIONS IN AMERICAN POLITICS: Microforms from Major Archival and Manuscript Collections. General Editor: William E. Leuchtenburg. PRESIDENT HARRY S TRUMAN’S OFFICE FILES, 1945–1953, Part 2: Correspondence File. Project Coordinators: Gary Hoag, Paul Kesaris, Robert E. Lester. Guide compiled by David W. Loving. A microfilm project of UNIVERSITY PUBLICATIONS OF AMERICA, An Imprint of CIS, 4520 East-West Highway, Bethesda, Maryland 20814-3389. LCCN: 90-956100. Copyright © 1989 by University Publications of America. All rights reserved. ISBN 1-55655-151-7. TABLE OF CONTENTS: Introduction; Scope and Content Note; Source and Editorial Note; Reel Index: Reel 1, A–Atomic Energy Control Commission, United Nations; Reel 2, Attlee, Clement R.–Benton, William; Reel 3, Bowles, Chester–Chronological

Eleanor Roosevelt and the Arthurdale Subsistence Housing Project

Volume 4, Issue No. 1. Turning Coal to Diamond: Eleanor Roosevelt and the Arthurdale Subsistence Housing Project. Renée Trepagnier, Tulane University, New Orleans, Louisiana, USA. Abstract: In 1933, Eleanor Roosevelt (ER), the First Lady of the United States, initiated a subsistence housing project, Arthurdale, funded by the federal government to help a poor coal mining town in West Virginia rise above poverty. ER spotlighted subsistence housing as a promising venture for poor American workers to develop economic stability and community unification. She encountered harsh pushback from the federal government, the American public, and private industries. Deemed communistic and excessively expensive, Arthurdale pushed the boundaries of federal government involvement in community organization. Bureaucratic and financial issues impacted the community’s employment rates, income, and community cooperation. ER persisted, perhaps too blindly, until the federal government declared the project a failure and pulled out to avoid further financial losses. ER’s involvement in Arthurdale’s administration and bureaucracy radically shifted the role of the First Lady, a position with no named responsibilities or regulations. Before ER, First Ladies never exercised authority in federally regulated projects and rarely publicly presented their opinions. Did ER’s involvement in Arthurdale hinder or promote the project’s success? Should a First Lady involve herself in federal policy? If so, how much authority should she possess? “Nothing we do in this world is ever wasted and I have come to the conclusion that practically nothing we ever do stands by itself. If it is good, it will serve some good purpose in the future. If it is evil, it may haunt us and handicap our efforts in unimagined ways” –Eleanor Roosevelt, 1961. Crushing Poverty in West Virginia: In the mid to late 19th century, industrialization developed in the United States, necessitating a demand for coal.