Kernel Methods for Virtual Screening

Recommended publications

Abstract DRAYTON, JOSEPHINE BENTLEY

Abstract DRAYTON, JOSEPHINE BENTLEY. Methylated Medium- and Long-Chain Fatty Acids as Novel Sources of Anaplerotic Carbon for Exercising Mice. (Under the direction of Dr. Jack Odle and Dr. Lin Xi.) We hypothesized that methylated fatty acids (e.g. 2-methylpentanoic acid (2MeP), phytanic acid or pristanic acid) would provide a novel source of anaplerotic carbon and thereby enhance fatty acid oxidation, especially under stressed conditions when tricarboxylic acid (TCA) cycle intermediates are depleted. The optimal dose of 2MeP, hexanoic acid (C6) and pristanic acid for increasing in vitro [1-14C]-oleic acid oxidation in liver or skeletal muscle homogenates from fasted mice was determined using incremental doses of 0, 0.25, 0.5, 0.75, or 1.0 mM. The 0.25 mM amount maximally stimulated liver tissue oxidation of [1-14C]-oleate to 14CO2 and [14C]-acid soluble products (ASP; P < 0.05). Similar incubations of 0.25 mM 2MeP, C6, palmitate, phytanic acid, or pristanic acid or 0.1 mM malate or propionyl-CoA were conducted with liver or skeletal muscle homogenates from exercised or sedentary mice. In vitro oxidation of [1-14C]-oleic acid in liver homogenates with 2MeP increased mitochondrial 14CO2 accumulation (P < 0.05), but no change in [14C]-ASP accumulation as compared to C6 (P > 0.05). Phytanic acid treatment increased [14C]-ASP accumulation in liver tissue as compared to palmitate (P < 0.05). Exercise increased [14C] accumulations (P < 0.05). Results were consistent with our hypothesis that methyl-branched fatty acids (2-MeP, phytanic and pristanic acids) provide a novel source of anaplerotic carbon and thereby stimulate in vitro fatty acid oxidation in liver and skeletal muscle tissues.

Design Novel Dual Agonists for Treating Type-2 Diabetes by Targeting Peroxisome Proliferator-Activated Receptors with Core Hopping Approach

Design Novel Dual Agonists for Treating Type-2 Diabetes by Targeting Peroxisome Proliferator-Activated Receptors with Core Hopping Approach Ying Ma1., Shu-Qing Wang1,3*., Wei-Ren Xu2, Run-Ling Wang1*, Kuo-Chen Chou3 1 Tianjin Key Laboratory on Technologies Enabling Development of Clinical Therapeutics and Diagnostics (Theranostics), School of Pharmacy, Tianjin Medical University, Tianjin, China, 2 Tianjin Institute of Pharmaceutical Research (TIPR), Tianjin, China, 3 Gordon Life Science Institute, San Diego, California, United States of America Abstract Owing to their unique functions in regulating glucose, lipid and cholesterol metabolism, PPARs (peroxisome proliferator-activated receptors) have drawn special attention for developing drugs to treat type-2 diabetes. By combining the lipid benefit of PPAR-alpha agonists (such as fibrates) with the glycemic advantages of the PPAR-gamma agonists (such as thiazolidinediones), the dual PPAR agonists approach can both improve the metabolic effects and minimize the side effects caused by either agent alone, and hence has become a promising strategy for designing effective drugs against type-2 diabetes. In this study, by means of the powerful “core hopping” and “glide docking” techniques, a novel class of PPAR dual agonists was discovered based on the compound GW409544, a well-known dual agonist for both PPAR-alpha and PPAR-gamma modified from the farglitazar structure. It was observed by molecular dynamics simulations that these novel agonists not only possessed the same function as GW409544 did in activating PPAR-alpha and PPAR-gamma, but also had more favorable conformation for binding to the two receptors. It was further validated by the outcomes of their ADME (absorption, distribution, metabolism, and excretion) predictions that the new agonists hold high potential to become drug candidates.

Punicic Acid Triggers Ferroptotic Cell Death in Carcinoma Cells

nutrients Article Punicic Acid Triggers Ferroptotic Cell Death in Carcinoma Cells Perrine Vermonden 1, Matthias Vancoppenolle 1, Emeline Dierge 1,2, Eric Mignolet 1, Géraldine Cuvelier 1, Bernard Knoops 1, Melissa Page 1, Cathy Debier 1, Olivier Feron 2,† and Yvan Larondelle 1,*,† 1 Louvain Institute of Biomolecular Science and Technology (LIBST), UCLouvain, Croix du Sud 4-5/L7.07.03, B-1348 Louvain-la-Neuve, Belgium; [email protected] (P.V.); [email protected] (M.V.); [email protected] (E.D.); [email protected] (E.M.); [email protected] (G.C.); [email protected] (B.K.); [email protected] (M.P.); [email protected] (C.D.) 2 Pole of Pharmacology and Therapeutics (FATH), Institut de Recherche Expérimentale et Clinique (IREC), UCLouvain, 57 Avenue Hippocrate B1.57.04, B-1200 Brussels, Belgium; [email protected] * Correspondence: [email protected]; Tel.: +32-478449925 † These authors contributed equally to this work. Abstract: Plant-derived conjugated linolenic acids (CLnA) have been widely studied for their preventive and therapeutic properties against diverse diseases such as cancer. In particular, punicic acid (PunA), a conjugated linolenic acid isomer (C18:3 c9t11c13) present at up to 83% in pomegranate seed oil, has been shown to exert anti-cancer effects, although the mechanism behind its cytotoxicity remains unclear. Ferroptosis, a cell death triggered by an overwhelming accumulation of lipid peroxides, has recently arisen as a potential mechanism underlying CLnA cytotoxicity. In the present study, we show that PunA is highly cytotoxic to HCT-116 colorectal and FaDu hypopharyngeal carcinoma cells grown either in monolayers or as three-dimensional spheroids.

Dietary Pomegranate Pulp to Improve Meat Fatty Acid Composition in Lambs

Dietary pomegranate pulp to improve meat fatty acid composition in lambs. Natalello A., Luciano G., Morbidini L., Priolo A., Biondi L., Pauselli M., Lanza M., Valenti B. In: Ruiz R. (ed.), López-Francos A. (ed.), López Marco L. (ed.). Innovation for sustainability in sheep and goats. Zaragoza: CIHEAM, 2019. p. 173-176 (Options Méditerranéennes: Série A. Séminaires Méditerranéens; n. 123). Article available online at: http://om.ciheam.org/article.php?IDPDF=00007880

Dualism of Peroxisome Proliferator-Activated Receptor α/γ: A Potent Clincher in Insulin Resistance

AEGAEUM JOURNAL ISSN NO: 0776-3808 Dualism of Peroxisome Proliferator-Activated Receptor α/γ: A Potent Clincher in Insulin Resistance Mr. Ravikumar R. Thakar1 and Dr. Nilesh J. Patel1* 1Faculty of Pharmacy, Shree S. K. Patel College of Pharmaceutical Education & Research, Ganpat University, Gujarat, India. [email protected] Abstract: Diabetes mellitus is clinical syndrome which is signalised by augmenting level of sugar in blood stream, which produced through lacking of insulin level and defective insulin activity or both. As per worldwide epidemiology data suggested that the numbers of people with T2DM living in developing countries is increasing with 80% of people with T2DM. Peroxisome proliferator-activated receptors are a family of ligand-activated transcription factors; modulate the expression of many genes. PPARs have three isoforms namely PPARα, PPARβ/δ and PPARγ that play a central role in regulating glucose, lipid and cholesterol metabolism where imbalance can lead to obesity, T2DM and CV ailments. It have pathogenic role in diabetes. PPARα is regulates the metabolism of lipids, carbohydrates, and amino acids, activated by ligands such as polyunsaturated fatty acids, and drugs used as Lipid lowering agents. PPAR β/δ could envision as a therapeutic option for the correction of diabetes and a variety of inflammatory conditions. PPARγ is well categorized, an element of the PPARs, also pharmacological effective as an insulin resistance lowering agents, are used as a remedy for insulin resistance integrated with type-2 diabetes mellitus. There are mechanistic role of PPARα, PPARβ/δ and PPARγ in diabetes mellitus and insulin resistance. From mechanistic way, it revealed that dual PPAR-α/γ agonist play important role in regulating both lipids as well as glycemic levels with essential safety issues.

Substrate Specificity of Rat Liver Mitochondrial Carnitine Palmitoyl Transferase I: Evidence Against α-Oxidation of Phytanic Acid in Rat Liver Mitochondria

FEBS 15107. FEBS Letters 359 (1995) 179-183. Substrate specificity of rat liver mitochondrial carnitine palmitoyl transferase I: evidence against α-oxidation of phytanic acid in rat liver mitochondria. Harmeet Singh*, Alfred Poulos. Department of Chemical Pathology, Women's and Children's Hospital, 72 King William Road, North Adelaide, SA 5006, Australia. Received 23 December 1994. Abstract: The two branched chain fatty acids pristanic acid (2,6,10,14-tetramethylpentadecanoic acid) and phytanic acid (3,7,11,15-tetramethylhexadecanoic acid) were converted to coenzyme A thioesters by rat liver mitochondrial outer membranes. However, these branched chain fatty acids could not be converted to pristanoyl and phytanoyl carnitines, respectively, by mitochondrial outer membranes. As expected, the unbranched long chain fatty acids, stearic acid and palmitic acid, were rapidly converted to stearoyl and palmitoyl carnitines, respectively, by mitochondrial outer membranes. These observations indicate that the branched chain fatty acids could not be transported into mitochondria. [...] demonstrated that CPTI activity is reversibly inhibited by malonyl-CoA. Recently, it has been shown that malonyl-CoA inhibits peroxisomal, microsomal and plasma membrane carnitine acyl transferases [8,10,13]. Therefore, in order to understand the role of CPTI in fatty acid oxidation, CPTI must be separated from other carnitine acyl transferases. In view of the difficulties in isolating CPTI in the active state, we decided to investigate CPTI activity in highly purified mitochondrial outer membranes from rat liver. We present evidence that branched chain fatty acids with α- or β-methyl groups are poor substrates for CPTI, suggesting that these branched chain fatty acids [...]

PHYSICS and SOCIETY

PHYSICS and SOCIETY. THE NEWSLETTER OF THE FORUM ON PHYSICS AND SOCIETY, PUBLISHED BY THE AMERICAN PHYSICAL SOCIETY, 335 EAST 45th ST., NEW YORK, NY 10017. PRINTED BY THE PENNY·SAVER, MANSFIELD, PA 16933. Volume 12, Number 2, April 1983. TABLE OF CONTENTS: Forum Executive Committee; Minutes of the Executive Committee Meeting in New York; News of the Forum; Forum Studies on Nuclear Weapons by Leo Sartori; APS Councillor's Report by Mike Cosper; APS Statement on Nuclear Arms Limitation; COPS Report by Earl Collen; Announcements; International Freeze Appeal

Toxicology Reports

Antioxidant effect of pomegranate against streptozotocin-nicotinamide generated oxidative stress induced diabetic rats. Author: Aboonabi, Anahita; Rahmat, Asmah; Othman, Fauziah. Published: 2014. Journal Title: Toxicology Reports. Version: Version of Record (VoR). DOI: https://doi.org/10.1016/j.toxrep.2014.10.022. Copyright Statement: © 2014 The Authors. Published by Elsevier Ireland Ltd. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/3.0/). Downloaded from http://hdl.handle.net/10072/142319. Griffith Research Online, https://research-repository.griffith.edu.au. Toxicology Reports 1 (2014) 915–922. Journal homepage: www.elsevier.com/locate/toxrep. Anahita Aboonabi (a,∗), Asmah Rahmat (a,1), Fauziah Othman (b,2). (a) Department of Nutrition and Dietetics, Faculty of Medicine and Health Sciences, University Putra Malaysia, 43400 Serdang, Selangor, Malaysia; (b) Department of Human Anatomy, Faculty of Medicine and Health Sciences, University Putra Malaysia, 43400 Serdang, Selangor, Malaysia. Article history: Received 17 September 2014; received in revised form 13 October 2014; accepted 27 October 2014. Abstract: Oxidative stress attributes a crucial role in chronic complication of diabetes. The aim of this study was to determine the most effective part of pomegranate on oxidative stress markers and antioxidant enzyme activities against streptozotocin-nicotinamide (STZ-NA)-induced diabetic rats. Male Sprague-Dawley rats were randomly divided into six groups.

Ataxia with Loss of Purkinje Cells in a Mouse Model for Refsum Disease

Ataxia with loss of Purkinje cells in a mouse model for Refsum disease. Sacha Ferdinandusse (a,1,2), Anna W. M. Zomer (b,1), Jasper C. Komen (a), Christina E. van den Brink (b), Melissa Thanos (a), Frank P. T. Hamers (c), Ronald J. A. Wanders (a,d), Paul T. van der Saag (b), Bwee Tien Poll-The (d), and Pedro Brites (a). Academic Medical Center, Departments of (a) Clinical Chemistry (Laboratory of Genetic Metabolic Diseases) and (d) Pediatrics, Emma’s Children Hospital, University of Amsterdam, 1105 AZ Amsterdam, The Netherlands; (b) Hubrecht Institute, Royal Netherlands Academy of Arts and Sciences, 3584 CT Utrecht, The Netherlands; and (c) Rehabilitation Hospital “De Hoogstraat”, Rudolf Magnus Institute of Neuroscience, 3584 CG Utrecht, The Netherlands. Edited by P. Borst, The Netherlands Cancer Institute, Amsterdam, The Netherlands, and approved October 3, 2008 (received for review June 23, 2008). Abstract: Refsum disease is caused by a deficiency of phytanoyl-CoA hydroxylase (PHYH), the first enzyme of the peroxisomal α-oxidation system, resulting in the accumulation of the branched-chain fatty acid phytanic acid. The main clinical symptoms are polyneuropathy, cerebellar ataxia, and retinitis pigmentosa. To study the pathogenesis of Refsum disease, we generated and characterized a Phyh knockout mouse. We studied the pathological effects of phytanic acid accumulation in Phyh−/− mice fed a diet supplemented with [...] Clinically, Refsum disease is characterized by cerebellar ataxia, polyneuropathy, and progressive retinitis pigmentosa, culminating in blindness (1, 3). The age of onset of the symptoms can vary from early childhood to the third or fourth decade of life. No treatment is available for patients with Refsum disease, but they benefit from a low phytanic acid diet. Phytanic acid is derived from dietary sources only, specifically from the chlorophyll component phytol.

Review Reviewed Work(S): Hearts and Minds by Bert Schneider and Peter Davis Review By: Peter Biskind Source: Cinéaste, Vol

Review. Reviewed Work(s): Hearts and Minds by Bert Schneider and Peter Davis. Review by: Peter Biskind. Source: Cinéaste, Vol. 7, No. 1 (1975), pp. 31-32. Published by: Cineaste Publishers, Inc. Stable URL: https://www.jstor.org/stable/41685779 [...] the virtue of HEARTS AND MINDS that in a crazy-quilt of interviews, stock footage, vérité, and film clips, it manages to breathe new life into the worn out images of the war that passed from film to film until they became no longer shocking but comforting, like old friends or familiar faces. HEARTS AND MINDS contains the original footage of many of these scenes [...] presumably descended from Ralph Waldo Emerson, embody the very essence of New England rectitude, yet utter some of the film's silliest remarks. ("The strength of our system is that you can rely on President Nixon for leadership.") The mixture of foolishness, intelligence, and pain in this sequence makes it difficult to watch.

US 2018/0296525 A1

US 20180296525A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2018/0296525 A1 ROIZMAN et al. (43) Pub. Date: Oct. 18, 2018 (54) TREATMENT OF AGE-RELATED MACULAR DEGENERATION AND OTHER EYE DISEASES WITH ONE OR MORE THERAPEUTIC AGENTS (71) Applicant: MacRegen, Inc., San Jose, CA (US) (72) Inventors: Keith ROIZMAN, San Jose, CA (US); Martin RUDOLF, Luebeck (DE) (21) Appl. No.: 15/910,992 (22) Filed: Mar. 2, 2018 Related U.S. Application Data (60) Provisional application No. 62/467,073, filed on Mar. 3, 2017. Publication Classification (51) Int. Cl. A61K 31/366 (2006.01); A61P 27/02 (2006.01); A61K 9/00 (2006.01); A61K 31/40 (2006.01); A61K 45/06 (2006.01); A61K 38/17 (2006.01) [...] A61K 38/1709 (2013.01); A61K 38/1866 (2013.01); A61K 31/40 (2013.01) (57) ABSTRACT The present disclosure provides therapeutic agents for the treatment of age-related macular degeneration (AMD) and other eye disorders. One or more therapeutic agents can be used to treat any stages (including the early, intermediate and advance stages) of AMD, and any phenotypes of AMD, including geographic atrophy (including non-central GA and central GA) and neovascularization (including types 1, 2 and 3 NV). In certain embodiments, an anti-dyslipidemic agent (e.g., an apolipoprotein mimetic and/or a statin) is used alone to treat or slow the progression of atrophic AMD (including early AMD and intermediate AMD), and/or to prevent or delay the onset of AMD, advanced AMD and/or neovascular AMD. In further embodiments, two or more therapeutic agents (e.g., any combinations of an anti-dyslipidemic agent, an antioxidant, an anti-inflammatory agent, a complement inhibitor, a neuroprotector and an anti-angiogenic agent) that target multiple underlying factors of AMD (e.

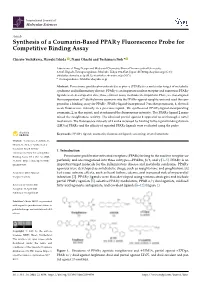

Synthesis of a Coumarin-Based PPARγ Fluorescence Probe for Competitive Binding Assay

International Journal of Molecular Sciences Article Synthesis of a Coumarin-Based PPARγ Fluorescence Probe for Competitive Binding Assay Chisato Yoshikawa, Hiroaki Ishida, Nami Ohashi and Toshimasa Itoh * Laboratory of Drug Design and Medicinal Chemistry, Showa Pharmaceutical University, 3-3165 Higashi-Tamagawagakuen, Machida, Tokyo 194-8543, Japan; [email protected] (C.Y.); [email protected] (H.I.); [email protected] (N.O.) * Correspondence: [email protected] Abstract: Peroxisome proliferator-activated receptor γ (PPARγ) is a molecular target of metabolic syndrome and inflammatory disease. PPARγ is an important nuclear receptor and numerous PPARγ ligands were developed to date; thus, efficient assay methods are important. Here, we investigated the incorporation of 7-diethylamino coumarin into the PPARγ agonist rosiglitazone and used the compound in a binding assay for PPARγ. PPARγ-ligand-incorporated 7-methoxycoumarin, 1, showed weak fluorescence intensity in a previous report. We synthesized PPARγ-ligand-incorporating coumarin, 2, in this report, and it enhanced the fluorescence intensity. The PPARγ ligand 2 maintained the rosiglitazone activity. The obtained partial agonist 6 appeared to act through a novel mechanism. The fluorescence intensity of 2 and 6 increased by binding to the ligand binding domain (LBD) of PPARγ and the affinity of reported PPARγ ligands were evaluated using the probe. Keywords: PPARγ ligand; coumarin; fluorescent ligand; screening; crystal structure Citation: Yoshikawa, C.; Ishida, H.; Ohashi, N.; Itoh, T. Synthesis of a Coumarin-Based PPARγ Fluorescence Probe for Competitive Binding Assay. Int. J. Mol. Sci. 2021, 22, 4034. 1. Introduction Peroxisome proliferator-activated receptors (PPARs) belong to the nuclear receptor su-