The Development and Validation of the Game User Experience Satisfaction Scale (GUESS)

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Funcom Secures €5 Million in Financing from Nordic Venture Partners

Funcom secures €5 million in financing from Nordic Venture Partners. Durham, USA – August 25, 2005 – Funcom, a world leading developer and publisher of subscription-based online games like ‘Anarchy Online’ and ‘Age of Conan – Hyborian Adventures’, has secured a €5 million equity investment from Nordic Venture Partners (NVP). The investment is a further testament to Funcom being a world-class developer and publisher of online games, and will further aid the company in developing and publishing online games. “This is another great recognition of Funcom as Europe’s premier developer of MMOG’s (Massively Multiplayer Online Games), and serves as a tribute to the company and the quality of our games and technology” said Torleif Ahlsand, Chairman of the Board of Directors in Funcom and Partner in Northzone Ventures. “There is a considerable market potential for subscription-based online games, and Funcom is a leader in technology and quality within the segment. We are very glad to have NVP on board to further develop one of the most exciting creative environments in the Nordic region, and we feel confident they will aid us in becoming even better at creating world-class online products.” “Being one of the leading information and communication technology investments funds in the Nordic region we are only looking for the very best technology companies, and we feel confident Funcom fits great in our portfolio” said Claus Højbjerg Andersen, Partner in NVP and new Board member of Funcom. “We are convinced of Funcom’s unique quality and their world-class staff and technology. Our aim is to improve the business side of Funcom even further, thus supporting the company in realizing their creative visions.

Investor Update 01/13/2015 GameStop Overview

Investor Update 01/13/2015

GameStop Overview

United States: 4,656 stores. Europe: 1,321 stores (France 435, Italy 420, Germany 260, Nordic 156, Ireland 50). Australia/NZ: 421 stores. Canada: 331 stores. Total Stores: 6,729 (6,248 Video game stores, 481 Technology Brand stores).

Video Game Brands Platform Overview

- GameStop maintains a leadership position in the $22.4bn worldwide gaming market
- 30-34% next-gen console market share (U.S.)
- 48-52% next-gen software market share (U.S.)
- Leading retailer in the fast growing digital business (26%+ CAGR from 2011-2013)
- Unique in-store customer experience
- Highly successful loyalty program with 40 million global members
- Game Informer is the #1 digital magazine globally
- Established buy-sell-trade program drives differentiation from competitors and enhanced profitability

GameStop’s Unique Formula

- Informed Associates
- Multichannel
- Vendor Relationships
- PowerUp Rewards
- GameInformer Magazine
- Buy – Sell – Trade

Our Strategic Plan

Maximize Brick & Mortar Stores

- Capture leading market share of new console cycle
- Utilize stores to grow digital sales
- Apply retail expertise to Tech Brands

Build on our Distinct Pre-owned Business

- Expand the value assortment to increase sales and gross profit dollars
- Gain market share in Value channel

Own the Customer

- Capitalize on our international loyalty program, now with 40 million members in 14 countries around the world

Digital Growth

- DLC, Kongregate, Steam wallet, PC Downloads, Console Network cards

Disciplined Capital Allocation

- Return 100% of our FCF to shareholders through buyback and dividend unless a better opportunity arises

We are delivering on our plan…

- Digital & Mobile growth
- $2.69B of digital receipts and $989M of mobile revenue since 2011

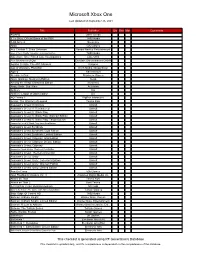

Microsoft Xbox One

Microsoft Xbox One. Last Updated on September 26, 2021

| Title | Publisher | Qty | Box | Man | Comments |
| --- | --- | --- | --- | --- | --- |
| #IDARB | Other Ocean | | | | |
| 8 To Glory: Official Game of the PBR | THQ Nordic | | | | |
| 8-Bit Armies | Soedesco | | | | |
| Abzû | 505 Games | | | | |
| Ace Combat 7: Skies Unknown | Bandai Namco Entertainment | | | | |
| Aces of the Luftwaffe: Squadron - Extended Edition | THQ Nordic | | | | |
| Adventure Time: Finn & Jake Investigations | Little Orbit | | | | |
| Aer: Memories of Old | Daedalic Entertainment GmbH | | | | |
| Agatha Christie: The ABC Murders | Kalypso | | | | |
| Age of Wonders: Planetfall | Koch Media / Deep Silver | | | | |
| Agony | Ravenscourt | | | | |
| Alekhine's Gun | Maximum Games | | | | |
| Alien: Isolation: Nostromo Edition | Sega | | | | |
| Among the Sleep: Enhanced Edition | Soedesco | | | | |
| Angry Birds: Star Wars | Activision | | | | |
| Anthem | EA | | | | |
| Anthem: Legion of Dawn Edition | EA | | | | |
| AO Tennis 2 | BigBen Interactive | | | | |
| Arslan: The Warriors of Legend | Tecmo Koei | | | | |
| Assassin's Creed Chronicles | Ubisoft | | | | |
| Assassin's Creed III: Remastered | Ubisoft | | | | |
| Assassin's Creed IV: Black Flag | Ubisoft | | | | |
| Assassin's Creed IV: Black Flag: Walmart Edition | Ubisoft | | | | |
| Assassin's Creed IV: Black Flag: Target Edition | Ubisoft | | | | |
| Assassin's Creed IV: Black Flag: GameStop Edition | Ubisoft | | | | |
| Assassin's Creed Syndicate | Ubisoft | | | | |
| Assassin's Creed Syndicate: Gold Edition | Ubisoft | | | | |
| Assassin's Creed Syndicate: Limited Edition | Ubisoft | | | | |
| Assassin's Creed: Odyssey: Gold Edition | Ubisoft | | | | |
| Assassin's Creed: Odyssey: Deluxe Edition | Ubisoft | | | | |
| Assassin's Creed: Odyssey | Ubisoft | | | | |
| Assassin's Creed: Origins: Steelbook Gold Edition | Ubisoft | | | | |
| Assassin's Creed: The Ezio Collection | Ubisoft | | | | |
| Assassin's Creed: Unity | Ubisoft | | | | |
| Assassin's Creed: Unity: Collector's Edition | Ubisoft | | | | |
| Assassin's Creed: Unity: Walmart Edition | Ubisoft | | | | |
| Assassin's Creed: Unity: Limited Edition | Ubisoft | | | | |
| Assetto Corsa | 505 Games | | | | |
| Atari Flashback Classics Vol. 3 | AtGames Digital Media Inc. | | | | |

Drafts April

ACTION ADVENTURE FIRST PERSON SHOOTER THIRD PERSON SHOOTER Star Wars: Jedi Fallen Dragon Quest Wolfenstein: Ace Combat 7: SU 81 Sea of Solitude Metro: Exodus 83 Anthem 61 The Darwin Project Order Builders II Youngblood Ancestors; The Shenmue 3 Devil May Cry 5 88 The Dark Pictures Halo: Infinite Far Cry New Dawn 74 Control The Division 2 83 Human Kind Odyssey Earth Defense Force: Skull and Bones Nioh 2 Skull and Bones The Sinking City Rage 2 Doom Eternal Left Alive 37 Iron Rain Mechwarrior 5: Mechwarrior 5: Remnant Rise From Dying Light 2 The Blackout Club Deep Rock Galactic Generation Zero 49 Gears 5 Mercenaries Mercenaries The Ashes FIGHTING SPORTS RACING STRATEGY Jump Force 58 Mortal Kombat 11 NBA 2K ‘20 NBA Live ‘20 Sonic Team Racing Trials Rising 80 Age of Empires 4 Wargroove 83 Total War Dead or Alive 6 75 Dirt Rally 2.0 83 GTR 3 Conan Unconquered Samurai Shodown NHL ‘20 Madden ‘20 Three Kingdoms Granblue Fantasy Shovel Knight: MLB The Show 19 85 PES 2020 Dangerous Driving Xenon Racer 57 Tropico 6 78 Re-Legion 52 Versus Showdown Them’s Fighting Pro Fishing Monster Energy The Grand Tour KILL la KILL - IF FIFA ‘20 - 73 48 Imperator Rome Phoenix Point Herds Simulator Supercross 2 Game RPG ACTION RPG INDIE & ARCADE GAMES (PICK 2) Indivisible Digimon Survive God Eater 3 73 Kingdom Hearts 3 85 UFO50 Streets of Rage 4 Jenny LeClue Tunche Away: Journey To Town Greedfall Biomutant Code Vein 61 Hyper Jam 74 Toe Jame & Earl:BitG 72 Sayonara Wild Hearts The Unexpected Wasteland 3 Torchlight: Frontiers The Outer Worlds The Surge 2 Moving Out Untitled -

Funcom Celebrates 25 Years!

Mar 15, 2018 14:22 UTC Funcom Celebrates 25 Years! Can you believe it? It’s been twenty-five years! We’ve been making games for a quarter of a century. To celebrate this special anniversary we’ve put together a video giving you some highlights of our twenty-five year journey. We’ve done pixel platformers on the Super Nintendo and SEGA Genesis (including Pocahontas, Casper, and Winter Gold). We’ve done racing games such as Speed Freaks for the PlayStation 1 and Racing Impact for the SEGA Saturn. We’ve made three major PC massively multiplayer online games where we’ve pioneered new gameplay mechanics such as auto-generated dungeons (Anarchy Online), dynamic combat (Age of Conan), and investigation missions (Secret World Legends). We’ve done The Longest Journey, one of the most critically acclaimed adventure games of all time. These days we’re finishing up Conan Exiles for the PC, Xbox One, and PlayStation, and we just announced that we are publishing Mutant Year Zero: Road to Eden and will be demoing it for the first time ever at GDC in San Francisco next week (you can still secure a spot for a demo: https://meetme.so/funcom2018gdc). Since those humble beginnings back in March of 1993, we’ve released over twenty-five games across a wide range of platforms. We’ve survived major shifts in the gaming industry and we’ve had to adapt to an ever-changing market. We’ve had our ups and we’ve had our downs.

On Videogames: Representing Narrative in an Interactive Medium

September, 2015

On Videogames: Representing Narrative in an Interactive Medium.

'This thesis is submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy'

Dawn Catherine Hazel Stobbart, BA (Hons) MA

Plagiarism Statement

This project was written by me and in my own words, except for quotations from published and unpublished sources which are clearly indicated and acknowledged as such. I am conscious that the incorporation of material from other works or a paraphrase of such material without acknowledgement will be treated as plagiarism, subject to the custom and usage of the subject, according to the University Regulations on Conduct of Examinations. (Name) Dawn Catherine Stobbart (Signature) Dawn Stobbart

This thesis is formatted using the Chicago referencing system. Where possible I have collected screenshots from videogames as part of my primary playing experience, and all images should be attributed to the game designers and publishers.

Acknowledgements

There are a number of people who have been instrumental in the production of this thesis, and without whom I would not have made it to the end. Firstly, I would like to thank my supervisor, Professor Kamilla Elliott, for her continuous and unwavering support of my Ph.D study and related research, for her patience, motivation, and commitment. Her guidance helped me throughout all the time I have been researching and writing of this thesis. When I have faltered, she has been steadfast in my ability. I could not have imagined a better advisor and mentor. I would not be working in English if it were not for the support of my Secondary school teacher Mrs Lishman, who gave me a love of the written word.

Download Game Age Empires 2 Full Version Gratis Age of Empires 2 Free Download

Age of Empires II is an acclaimed real-time strategy video game developed by Ensemble Studios and published by Microsoft for home PCs in 1999. Built on an updated version of the Genie Engine that powered the original Age of Empires in 1997, this sequel managed to improve on almost every aspect and earned a reputation as one of the best RTS games of all time. Set in the Middle Ages, with the ability to advance through successive historical periods (Dark Age, Feudal Age, Castle Age, and Imperial Age), the game offers players a wide variety of challenges in the single-player campaign missions and a huge variety of tactical battles in streamlined online modes. This included five historical single-player campaigns, three additional single-player modes, and fully featured multiplayer. Age of Empires II had built-in support for thirteen playable civilizations, each with its own unique units (two per civilization), visual style, and preferred strategies for building strongholds and defeating opponents. The core gameplay loop followed the proven formula of managing the construction of towns, gathering resources, training armies, and making sound tactical moves to outmaneuver either computer-controlled AI players or real opponents, whether on nearby PCs via Ethernet connections or worldwide over the Internet.

Intersomatic Awareness in Game Design

The London School of Economics and Political Science. Intersomatic Awareness in Game Design. Siobhán Thomas. A thesis submitted to the Department of Management of the London School of Economics for the degree of Doctor of Philosophy. London, June 2015.

Declaration

I certify that the thesis I have presented for examination for the PhD degree of the London School of Economics and Political Science is solely my own work. The copyright of this thesis rests with the author. Quotation from it is permitted, provided that full acknowledgement is made. This thesis may not be reproduced without my prior written consent. I warrant that this authorisation does not, to the best of my belief, infringe the rights of any third party. I declare that my thesis consists of 66,515 words.

Abstract

The aim of this qualitative research study was to develop an understanding of the lived experiences of game designers from the particular vantage point of intersomatic awareness. Intersomatic awareness is an interbodily awareness based on the premise that the body of another is always understood through the body of the self. While the term intersomatics is related to intersubjectivity, intercoordination, and intercorporeality, it has a specific focus on somatic relationships between lived bodies. This research examined game designers’ body-oriented design practices, finding that within design work the body is a ground of experiential knowledge which is largely untapped. To access this knowledge a hermeneutic methodology was employed. The thesis presents a functional model of intersomatic awareness comprised of four dimensions: sensory ordering, sensory intensification, somatic imprinting, and somatic marking.

Nintendo Co., Ltd

Nintendo Co., Ltd. Earnings Release for Fiscal Year Ended March 2018, Supplementary Information

[Note] Forecasts announced by Nintendo Co., Ltd. herein are prepared based on management's assumptions with information available at this time and therefore involve known and unknown risks and uncertainties. Please note such risks and uncertainties may cause the actual results to be materially different from the forecasts (earnings forecast, dividend forecast and other forecasts).

Nintendo Co., Ltd. Consolidated Statements of Income Transition

| million yen | FY3/2014 | FY3/2015 | FY3/2016 | FY3/2017 | FY3/2018 |
| --- | --- | --- | --- | --- | --- |
| Net sales | 571,726 | 549,780 | 504,459 | 489,095 | 1,055,682 |
| Cost of sales | 408,506 | 335,196 | 283,494 | 290,197 | 652,141 |
| Gross profit | 163,219 | 214,584 | 220,965 | 198,898 | 403,540 |
| (Gross profit ratio) | (28.5%) | (39.0%) | (43.8%) | (40.7%) | (38.2%) |
| Selling, general and administrative expenses | 209,645 | 189,814 | 188,083 | 169,535 | 225,983 |
| Operating profit | -46,425 | 24,770 | 32,881 | 29,362 | 177,557 |
| (Operating profit ratio) | (-8.1%) | (4.5%) | (6.5%) | (6.0%) | (16.8%) |
| Non-operating income | 53,136 | 46,043 | 14,550 | 28,593 | 23,509 |
| (of which foreign exchange gains) | (39,287) | (34,051) | ( - ) | ( - ) | ( - ) |
| Non-operating expenses | 624 | 283 | 18,641 | 7,591 | 1,710 |
| (of which foreign exchange losses) | ( - ) | ( - ) | (18,356) | (5,256) | (766) |
| Ordinary profit | 6,086 | 70,530 | 28,790 | 50,364 | 199,356 |
| (Ordinary profit ratio) | (1.1%) | (12.8%) | (5.7%) | (10.3%) | (18.9%) |
| Extraordinary income | 4,922 | 3,737 | 407 | 64,775 | 3,240 |
| Extraordinary losses | 79 | 2,176 | 1,482 | 409 | 1,507 |
| Profit before income taxes | 10,929 | 72,091 | 27,715 | 114,730 | 201,090 |
| Income taxes | 34,132 | 30,228 | 11,197 | 12,147 | 60,144 |
| Profit | -23,202 | 41,862 | 16,518 | 102,582 | 140,945 |
| Profit attributable to non-controlling interests | 20 | 18 | 13 | 8 | 1,354 |
| Profit attributable to owners of parent | -23,222 | 41,843 | 16,505 | 102,574 | 139,590 |
| (Profit attributable to owners of parent ratio) | (-4.1%) | (7.6%) | (3.3%) | (21.0%) | (13.2%) |

A Chronicle of Map Interfaces in Games

Maps, Navigation and Transportation: Find a way. DIS ’20, July 6–10, 2020, Eindhoven, Netherlands

Paper to Pixels: A Chronicle of Map Interfaces in Games

Z O. Toups (1, 3), Nicolas LaLone (4), Katta Spiel (5), Bill Hamilton (2, 3)

1 Play & Interactive Experiences for Learning Lab; 2 Participatory Live Experiences Laboratory; 3 Computer Science Department, New Mexico State University, Las Cruces, NM, USA; 4 Bridge Lab, Department of Information Systems and Quantitative Analysis, College of Information Science and Technology, University of Nebraska at Omaha, Omaha, NE, USA; 5 e-Media Research Lab, KU Leuven, BE & Centre for Teacher Education, University of Vienna, AT

ABSTRACT

Game map interfaces provide an alternative perspective on the worlds players inhabit. Compared to navigation applications popular in day-to-day life, game maps have different affordances to match players’ situated goals. To contextualize and understand these differences and how they developed, we present a historical chronicle of game map interfaces. Starting from how games came to involve maps, we trace how maps are first separate from the game, becoming more and more integrated into play until converging in smartphone-style interfaces.

More than physical navigation, maps navigate the complex and dynamic world of human culture. Maps on news media show political and socio-economic divides. Maps can show us where people like ice cream over frozen yogurt or can be used by sports commentators showing us where specific athletes have placed a ball or puck over time.

Despite that ubiquity, maps in and of themselves are generally static, a relic of the paper to which they owe their creation. There is so much more that maps can provide users given the growing ubiquity of computation and the increasing digital

Angry Birds Space Now Available: Rovio Thanks Amazing Partners in Asia for Support in Game’s Blastoff

Angry Birds Space Now Available. Rovio thanks amazing partners in Asia for support in game’s blastoff. March 22, 2012 – Rovio Entertainment today announced the availability of Angry Birds Space in app stores everywhere. The game is available for the Android and iOS platforms, as well as Mac and PC. In addition to the game launch, Angry Birds Space is also blasting off in merchandise, animation, and publishing. A launch of this size is a first not only for Rovio Entertainment, but also for mobile gaming in general. Angry Birds Space is a brand new game by Rovio Entertainment, creator of the global phenomenon Angry Birds. From floating through space to using the gravity of nearby planets to set up spectacular trick shots, Angry Birds Space takes the gameplay that fans already know and love to a totally new level. With brand new birds, brand new superpowers, and a whole galaxy to explore, the sky is no longer the limit! To commemorate Angry Birds Space, Rovio hosted a launch event at the sky100 Hong Kong Observation Deck, the city’s tallest tourist destination. The prestigious event delivered a stellar opportunity for Hong Kong media and the city’s most devoted Angry Birds fans to have first-hand experience of one of the world’s most hotly-anticipated games, with free entry for the first 100 fans to arrive at sky100. For the Angry Birds Space launch, Rovio Entertainment partnered with China Mobile, China Unicom, Qihoo 360, Sina Weibo, Mango City, Douban.fm- Space Music Channel, Wanda Plaza, sky100, Docomo, and Truemove. In Indonesia, Rovio Entertainment has partnered with Sari Husada Tbk, the Indonesian market leader in nutritional foods.