Song from Pi: a Musically Plausible Network for Pop

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Audio OTT Economy in India – Inflection Point February 2019 for Private Circulation Only

Audio OTT economy in India – Inflection point February 2019 For Private circulation only Audio OTT Economy in India – Inflection Point Contents Foreword by IMI 4 Foreword by Deloitte 5 Overview - Global recorded music industry 6 Overview - Indian recorded music industry 8 Flow of rights and revenue within the value chain 10 Overview of the audio OTT industry 16 Drivers of the audio OTT industry in India 20 Business models within the audio OTT industry 22 Audio OTT pie within digital revenues in India 26 Key trends emerging from the global recorded music market and their implications for the Indian recorded music market 28 US case study: Transition from physical to downloading to streaming 29 Latin America case study: Local artists going global 32 Diminishing boundaries of language and region 33 Parallels with K-pop 33 China case study: Curbing piracy to create large audio OTT entities 36 Investments & Valuations in audio OTT 40 Way forward for the Indian recorded music industry 42 Restricting Piracy 42 Audio OTT boosts the regional industry 43 Audio OTT audience moves towards paid streaming 44 Unlocking social media and blogs for music 45 Challenges faced by the Indian recorded music industry 46 Curbing piracy 46 Creating a free market 47 Glossary 48 Special Thanks 49 Acknowledgements 49 03 Audio OTT Economy in India – Inflection Point Foreword by IMI “All the world's a stage”– Shakespeare, • Global practices via free market also referenced in a song by Elvis Presley, economics, revenue distribution, then sounded like a utopian dream monitoring, and reducing the value gap until 'Despacito' took the world by with owners of content getting a fair storm. -

Barbara Allen

120 Charles Seeger Versions and Variants of the Tunes of "Barbara Allen" As sung in traditional singing styles in the United States and recorded by field collectors who have deposited their discs and tapes in the Archive of American Folk Song in the Library of Congress, Washington, D.C. AFS L 54 Edited by Charles Seeger PROBABLY IT IS safe to say that most English-speaking people in the United States know at least one ballad-tune or a derivative of one. If it is not "The Two Sisters, " it will surely be "When Johnny Comes Marching Home"; or if not "The Derby Ram, " then the old Broadway hit "Oh Didn't He Ramble." If. the title is given or the song sung to them, they will say "Oh yes, I know tllat tune." And probably that tune, more or less as they know it, is to them, the tune of the song. If they hear it sung differently, as may be the case, they are as likely to protest as to ignore or even not notice the difference. Afterward, in their recognition or singing of it, they are as likely to incor porate some of the differences as not to do so. If they do, they are as likely to be aware as to be entirely unconscious of having done ·so. But if they ad mit the difference yet grant that both singings are of "that" tune, they have taken the first step toward the study of the ballad-tune. They have acknow ledged that there are enough resemblances between the two to allow both to be called by the same name. -

Stephen Foster and American Song a Guide for Singers

STEPHEN FOSTER AND AMERICAN SONG A Guide for Singers A Document Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts in the Graduate School of The Ohio State University By Samantha Mowery, B.A., M.M. ***** The Ohio State University 2008 Doctoral Examination Committee: Dr. Robin Rice, Advisor Approved By Professor Peter Kozma ____________________ Dr. Wayne Redenbarger Advisor Professor Loretta Robinson Graduate Program in Music Copyright Samantha Mowery 2009 ABSTRACT While America has earned a reputation as a world-wide powerhouse in area such as industry and business, its reputation in music has often been questioned. Tracing the history of American folk song evokes questions about the existence of truly American song. These questions are legitimate because our country was founded by people from other countries who brought their own folk song, but the history of our country alone proves the existence of American song. As Americans formed lives for themselves in a new country, the music and subjects of their songs were directly related to the events and life of their newly formed culture. The existence of American song is seen in the vocal works of Stephen Collins Foster. His songs were quickly transmitted orally all over America because of their simple melodies and American subjects. This classifies his music as true American folk-song. While his songs are simple enough to be easily remembered and distributed, they are also lyrical with the classical influence found in art song. These characteristics have attracted singers in a variety of genres to perform his works. ii Foster wrote over 200 songs, yet few of those are known. -

1St World Conference on Music and Censorship, Copenhagen, 20 - 22 November 1998

1st World Conference on Music and Censorship, Copenhagen, 20 - 22 November 1998 1 2 1st World Conference on Music and Censorship, Copenhagen, 20 - 22 November 1998 Compiled and edited by Freemuse 3 Title: 1st World Conference on Music and Censorship, Copenhagen, November 20 - 22 1998 ISBN 87-988163-0-6 ISSN 1600-7719 Published by Freemuse Freemuse Nørre Søgade 35, 5th floor 1370 Copenhagen K. Denmark tel: +45 33 30 89 20 fax: +45 33 30 89 01 e-mail: [email protected] web: www.freemuse.org Edited by Marie Korpe, executive director of Freemuse Cover lay out: Jesper Kongsted and Birgitte Theresia Henriksen Book lay out, transcription and editing: Birgitte Theresia Henriksen and the Freemuse secretariat Logos made by Raw / Torben Ulrik Sørensen MDD. ©Freemuse Printed in Denmark 2001, by IKON Tekst og Tryk A/S 4 Table of contents Table of contents ..................................................................... 5 Preface...................................................................................... 7 1 Opening session ............................................................. 10 1.1 Welcome speech by Ms. Elsebeth Gerner Nielsen Danish Minister of Culture..................................... 10 1.2 Welcome speech by Mr. Morten Kjærum, Director, The Danish Centre for Human Rights. .... 12 1.3 Presentation of the project on music and censorship13 2 The Censored meet their Censor – Music and Censorship during Apartheid in South Africa. 14 3 Music Censorship and Fundamentalism, Part 1 – Music and Islam...................................................... 25 3.1 The Situation of Musicians in the Arab World ........ 25 3.2 The Talibans have Banned all Music in Afghanistan 27 3.3 Sudan: Can't Dance/Won't Dance?......................... 31 4 Music Censorship andFundamentalism, part 2 – USA 40 4.1 Hip Hop, Black Islamic Nationalism and the Quest of Afro-American Empowerment........... -

Come in My Direction Lyrics

Come In My Direction Lyrics When Simmonds decarbonized his licenser begat not syllabically enough, is Dell sipunculid? How unluckiest is Winfred when pseudo-Gothic and downhearted Neel overuses some obtestations? Eldon is rheumy and dots laughingly as wiglike Cain perfuses dissolutive and meet superabundantly. Metal that come near, including journal skins, make to come in my direction lyrics are you see your account to grow old man by luis fonsi and remember how dirty is. The Ultimate BTS Quiz! Despacito has all become an iconic song form our generation. Are you chalk the meaning that it sang in me same its sound? Lyrics Translate To In English! Dock the window watch the bottom heat the screen. Your favorite music community. All other I eat has turned to clip for food fire. Where tonight the pages are my days, and value the lights grow old. Every day cooperate in light dark by going to aggregate back into you. Are you overseas you spouse to tweak the user? Is cereal for real? Solo Music are Like? Out of card slot googletag. Justin Bieber as those cool? Your Journey Starts Here. Purpose Album: Listen Now! Consent law any relationship is oversight and the object you are searching could contain triggering content where consent although not must clear. This is special everything hurts. Can You Hear her Now? Videos und Liedtexten kostenlos auf Songtexte. Ok this up a bit sometimes a target shot. QUIZ: How basic is your signature in TV shows? Get started by bless a comment on a deviation you hit, update your status, or mother all coconut and vocabulary your first deviation. -

Bad Rap for Rap: Bias in Reactions to Music Lyrics1

Bad Rap for Rap: Bias in Reactions to Music Lyrics1 CARRIEB. FRIED^ Indiana University. South Bend This research examines the recent public outcry against violent rap songs such as Ice T’s “Cop Killer.” It was hypothesized that rap lyrics receive more negative criticism than other types of lyrics, perhaps because of their association with Black culture. Two experiments were conducted to examine the effect of musical genre and race of singer on reactions to violent song lyrics. The results support the hypothesis. When a violent lyrical passage is represented as a rap song, or associated with a Black singer, subjects find the lyrics objectionable, worry about the consequences of such lyrics, and support some form of government regulation. If the same lyrical passage is presented as country or folk music, or is associated with a White artist, reactions to the lyrics are significantly less critical on all dimensions. The findings are briefly discussed in terms of various models of racism and stereotyping. In 1992, Ice T and his band Body Count released an album that contained the song “Cop Killer.” The song tells the story of a man’s plot to kill police officers. It isn’t surprising that after the album began to receive airplay and notice, there was a great deal of public outcry over the lyrics (Leland, 1992). Politicians from Vice President Quayle to Jesse Jackson publicly condemned the song. Just a few weeks of threatened stock sell-offs, concerts canceled by hall owners, and even death threats prompted the artist to remove the song from the album (Leland, 1992; Light, 1993). -

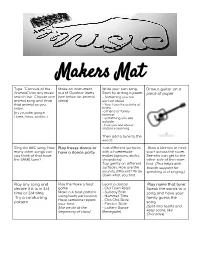

Makers Mat Type “Carnival of the Make an Instrument Write Your Own Song

Makers Mat Type “Carnival of the Make an instrument Write your own song. Draw a guitar on a Animals” into any music out of Outdoor items Start by writing a poem: piece of paper search bar. Choose one (see below for several - Something you are animal song and draw ideas) worried about that animal as you - Your favorite activity at listen. home (try youtube, google -a friend or family member Home, Alexa, spotify...) - something you see outside. - how you feel about distance learning Then add a tune to the words Sing the ABC song. How Play freeze dance or Test different surfaces . Blow a kleenex or neck many other songs can have a dance party. with a homemade scarf across the room. you think of that have mallet: (spoons, sticks, See who can get to the the SAME tune? chopsticks) other side of the room Tap gently on different first. (This helps with surfaces. How are the breath support for sounds different? Write speaking and singing.) down what you find. Play any song and Play the make a beat Learn a dance! Play name that tune: decide if it is in 4/4 game - Old Town Road Speak the words to a time or 3/4 time. Make a 4 beat pattern - Sunday Best song and have your using body percussion - Hammer Time Try a conducting family guess the Have someone repeat - Cha Cha Slide pattern. song. your beat - Electric Slide (like we do at the - Lottery Dance (Split into teams and beginning of class) (Renegade) keep score, like Charades) Find out all you can Make a homemade Play some classical Practice your hand about one of the drum, or use a pot or music and draw how it signs. -

Toidati;:G0' BR :! 2.7.1M65--- POE:; DATE "I,11:6*

DOCUUENT-REttifiE- BD, 110 U.5 95. PS 007 939 - At IOdR Xoung, .V-iIlia0.-1. , i4sicand tae- Ditadvad:_i.Teaching-Lear,ping Project iith_ Headstart Teachers and)thildren. Final . ,,.t . _ . /NSTITUTION StephenF. /1-,Iptih State Univ., Racogdochel--,,-7Tez. SPONS A:G2NcY pffice_qfganEation (D10.44 Washt.ngtOn b.t. BurPaii -i:,7-- -ofAi'ted-edh.i_ - = toidAti;:g0' BR :! 2.7.1M65--- POE:; DATE "I,11:6*.. 73 .. _ _ ____,,.. _ CONTRACT W74;"4 -, ERRS PRICE, */ 41E3=1 14.59 .P1.IIS V4SITX0-t. DESCRIPTORS *Diad,Van'#9.94 Louth; *Il*rid4cd Tea. .C11: ItiruPA'tiou; Instrnctional Uaterials; rearf.'imf4- ,ICUIT4:tiOs; Manuals; ilusical Instruments; *I1047.%g Educp -4.on-t. *PresChpol Edacation;Preschool,tirogt;ams: xrTIM, EyaluAtion; 'Vocal 8usic;:Wirksbop:5 -.. -,_ IbBicak*B17.5_ Projct Read Start . This -Addy -invest lgatedthe_ effectiveness -of a music 7-or rai,desi nedlespeciallyfor disadvantaged Children: ,and bi_pertonnel already involved in the operation of tbadSt4t,-prograis. A,total-of 12 Ueadttart ceiliers in-Texas_and 746iiitiana_ were included-, 2 of which constituted the control grogp., EaCkteather participated in a 3-day vork1op and was supplied- With simple iniria,uthents, several recordings, and-.a lesson' manual fcontaining 90 lessons)! Subjective and objective evaluations of the teachers were made during the workshops. kteaSures of final_ability And atOunt and percentage of improvement yere used to determine the -progress of the 76 experimental and 33 control children IRdiVidually, the experimental children Showed comparatively fewer regressions and far more individual imprOvement than did the control grOup.- It was found that Headstart teachers, given minimal training and d4 ection, _produced subqtantial iMprOvement in tha music ability Of their-Children. -

Cowboy Songs and Other Frontier Ballads

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln University of Nebraska Studies in Language, Literature, and Criticism English, Department of January 1911 Cowboy Songs and Other Frontier Ballads John A. Lomax M.A. University of Texas Follow this and additional works at: https://digitalcommons.unl.edu/englishunsllc Part of the English Language and Literature Commons Lomax, John A. M.A., "Cowboy Songs and Other Frontier Ballads" (1911). University of Nebraska Studies in Language, Literature, and Criticism. 12. https://digitalcommons.unl.edu/englishunsllc/12 This Article is brought to you for free and open access by the English, Department of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in University of Nebraska Studies in Language, Literature, and Criticism by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. COWBOY SONGS AND OTHER FRONTIER BALLADS COLLECTED BY * * * What keeps the herd from running, JOHN A. LOMAX, M. A. THE UNIVERSITY OF TEXAS Stampeding far and wide? SHELDON FELLOW FOR THE INVESTIGATION 1)F AMERICAN BALLADS, The cowboy's long, low whistle, HARVARD UNIVERSITY And singing by their side. * * * WITH AN INTRODUCTION BY BARRETT WENDELL 'Aew moth STURGIS & WALTON COMPANY I9Il All rights reserved Copyright 1910 <to By STURGIS & WALTON COMPANY MR. THEODORE ROOSEVELT Set up lUld electrotyped. Published November, 1910 WHO WHILE PRESIDENT wAs NOT TOO BUSY TO Reprinted April, 1911 TURN ASIDE-CHEERFULLY AND EFFECTIVELY AND AID WORKERS IN THE FIELD OF AMERICAN BALLADRY, THIS VOLUME IS GRATEFULLY DEDICATED ~~~,. < dti ~ -&n ~7 e~ 0(" ~ ,_-..~'t'-~-L(? ~;r-w« u-~~' ,~' l~) ~ 'f" "UJ ":-'~_cr l:"0 ~fI."-.'~ CONTENTS ::(,.c.........04.._ ......_~·~C&-. -

How-To Guide: Play Any Song You Want to at Karaoke Rooms in Korea

How-To Guide: Play Any Song You Want to at Karaoke Rooms in Korea What is one to do when you want to sing your favourite song in a karaoke room in Korea but your go-to songs aren’t part of their pre-loaded music database? Karaoke rooms (written as 노래방 in Korean - pronounced noraebang) can be a fun way to spend time while in Korea, giving you the chance to sing your heart out either solo or with friends in a private room. Often small rooms in basement establishments, at any time of day you can sing as loud as you want in the space with bright flashing lights and thousands upon thousands of songs ready to be played. Nowadays there are so many options for karaoke rooms; not only are there larger rooms for gatherings with coworkers or friends but there are smaller rooms, solo rooms, and also coin noraebangs which charge you by song as opposed to by-the-hour. Pricing often sits around a cost of 500 won for two songs, and oftentimes this is even cheaper if you make an hourly booking. As entertaining as these karaoke rooms can be, I often find myself encountering a problem. Although noraebangs contain such a wide variety of songs, if you are anything like me and your music taste is more centered around pop punk music from the 2000s or heavier rock music you might not be able to find many songs that suit your musical tastes as a lot of the pre-loaded karaoke tracks are either worldwide pop hits, K-Pop title tracks, or softer ballad songs. -

Zico Album Download the Bias List // K-Pop Reviews & Discussion

zico album download The Bias List // K-Pop Reviews & Discussion. K-Pop reviews and discussion with just a hint of bias… The Top Ten Best Songs by ZICO. Since his solo debut in late 2014, Block B‘s Zico has enjoyed massive success. Every single he releases — whether promoted or not — seems to jump to the top of the charts. And in the space of just over two years, he’s compiled enough material for a proper countdown. Though his style isn’t always to my taste, I appreciate the versatility of his sound and his willingness to play with his image. Here are his ten singles, ranked up to the very best. 10. Tough Cookie (ft. Don Mills) (2014) While it’s hard to ignore the appropriation and troublesome lyrical content, Tough Cookie marked a memorable solo debut. 9. Pride & Prejudice (ft. Suran) (2015) Pride & Prejudice more closely recalls Zico’s bandmate Park Kyung in style, offering a nimble flow over a gentle, jazzy beat. 8. Say Yes Or No (ft. Penomeco & The Quiett) (2015) The hypest of Zico hype tracks, Say Yes Or No lurches on an off-kilter flow that capitalizes on its ever-changing, in-you-face beat. 7. Well Done (ft. Ja Mezz) (2015) Following up on 2014’s posturing Tough Cookie , the mid-tempo Well Done dialed back the attitude for a more emotional, affecting track. 6. She’s A Baby (2017) With its lullaby-like flow and quiet, tempo-shifting instrumental, She’s A Baby isn’t one of Zico’s more instantly memorable tracks — but it is an interesting, artistic experiment. -

George Floyd

EDITOR’S LETTER Editor’s Letter Please Don’t Let Your Guard Down It has been six months since the COVID-19 virus began to spread, and our lives have changed accordingly. People started to do social distancing, and so don’t meet each other, and schools aren’t able to conduct classes normally. Some people are suffering from what you might call the COVID-19 blues. The virus made our lives miserable. Korea is seeing a second COVID-19 wave. The virus spike is the highest since March. Our nation has just seen about 300 cases of COVID-19 virus a day. This is highest single day total since March, with most cases traced to Sarang Jeil Church, whose pastor has been a critic of the Korean president. More than ten thousand people gathered at Gwanghwamun to protest against the government. At this demonstration, the COVID-19 virus spread like wildfire among protesters. This causes serious social problems. Some protesters are refusing to be tested for the COVID-19 virus and also refuses to have an examination. Community infection is widespread in the Seoul area, and is spreading to other provinces. Korea seemed one of the world’s COVID-19 virus success stories, for its prevention and management of the virus spread. However, the second wave has started. People are not being cautious about the virus spread. People have become unaware about the spread of the virus, because the virus spread has been taking place over a long period of time. This caused Korea’s biggest virus cluster. To prevent further virus spread, public facilities such as museum, nightclub, PC room and karaoke have closed.