What Is Computational Propaganda and How Do We Defeat It?

Recommended publications

ENISA Threat Landscape Report 2016 15 Top Cyber-Threats and Trends

ENISA Threat Landscape Report 2016 15 Top Cyber-Threats and Trends FINAL VERSION 1.0 ETL 2016 JANUARY 2017 www.enisa.europa.eu European Union Agency For Network and Information Security ENISA Threat Landscape Report 2016 Final version | 1.0 | OPSEC | January 2017 About ENISA The European Union Agency for Network and Information Security (ENISA) is a centre of network and information security expertise for the EU, its member states, the private sector and Europe’s citizens. ENISA works with these groups to develop advice and recommendations on good practice in information security. It assists EU member states in implementing relevant EU legislation and works to improve the resilience of Europe’s critical information infrastructure and networks. ENISA seeks to enhance existing expertise in EU member states by supporting the development of cross-border communities committed to improving network and information security throughout the EU. More information about ENISA and its work can be found at www.enisa.europa.eu. Contact For queries on this paper, please use [email protected] or [email protected] For media enquiries about this paper, please use [email protected]. Acknowledgements ENISA would like to thank the members of the ENISA ETL Stakeholder group: Pierluigi Paganini, Chief Security Information Officer, IT, Paul Samwel, Banking, NL, Tom Koehler, Consulting, DE, Jason Finlayson, Consulting, IR, Stavros Lingris, CERT, EU, Jart Armin, Worldwide coalitions/Initiatives, International, Thomas Häberlen, Member State, DE, Neil Thacker, Consulting, UK, Shin Adachi, Security Analyst, US, R. Jane Ginn, Consulting, US, Polo Bais, Member State, NL. The group has provided valuable input, has supported the ENISA threat analysis and has reviewed ENISA material. -

Does the Planned Obsolescence Influence Consumer Purchase Decision? The Effects of Cognitive Biases: Bandwagon

FUNDAÇÃO GETULIO VARGAS ESCOLA DE ADMINISTRAÇÃO DO ESTADO DE SÃO PAULO VIVIANE MONTEIRO DOES THE PLANNED OBSOLESCENCE INFLUENCE CONSUMER PURCHASE DECISON? THE EFFECTS OF COGNITIVE BIASES: BANDWAGON EFFECT, OPTIMISM BIAS AND PRESENT BIAS ON CONSUMER BEHAVIOR. SÃO PAULO 2018 VIVIANE MONTEIRO DOES THE PLANNED OBSOLESCENCE INFLUENCE CONSUMER PURCHASE DECISIONS? THE EFFECTS OF COGNITIVE BIASES: BANDWAGON EFFECT, OPTIMISM BIAS AND PRESENT BIAS ON CONSUMER BEHAVIOR Applied Work presented to Escola de Administraçaõ do Estado de São Paulo, Fundação Getúlio Vargas as a requirement to obtaining the Master Degree in Management. Research Field: Finance and Controlling Advisor: Samy Dana SÃO PAULO 2018 Monteiro, Viviane. Does the planned obsolescence influence consumer purchase decisions? The effects of cognitive biases: bandwagons effect, optimism bias on consumer behavior / Viviane Monteiro. - 2018. 94 f. Orientador: Samy Dana Dissertação (MPGC) - Escola de Administração de Empresas de São Paulo. 1. Bens de consumo duráveis. 2. Ciclo de vida do produto. 3. Comportamento do consumidor. 4. Consumidores – Atitudes. 5. Processo decisório – Aspectos psicológicos. I. Dana, Samy. II. Dissertação (MPGC) - Escola de Administração de Empresas de São Paulo. III. Título. CDU 658.89 Ficha catalográfica elaborada por: Isabele Oliveira dos Santos Garcia CRB SP-010191/O Biblioteca Karl A. Boedecker da Fundação Getulio Vargas - SP VIVIANE MONTEIRO DOES THE PLANNED OBSOLESCENCE INFLUENCE CONSUMERS PURCHASE DECISIONS? THE EFFECTS OF COGNITIVE BIASES: BANDWAGON EFFECT, OPTIMISM BIAS AND PRESENT BIAS ON CONSUMER BEHAVIOR. Applied Work presented to Escola de Administração do Estado de São Paulo, of the Getulio Vargas Foundation, as a requirement for obtaining a Master's Degree in Management. Research Field: Finance and Controlling Date of evaluation: 08/06/2018 Examination board: Prof. -

An Examination of the Impact of Astroturfing on Nationalism: A

Social Sciences. Article. An Examination of the Impact of Astroturfing on Nationalism: A Persuasion Knowledge Perspective Kenneth M. Henrie 1,* and Christian Gilde 2 1 Stiller School of Business, Champlain College, Burlington, VT 05401, USA 2 Department of Business and Technology, University of Montana Western, Dillon, MT 59725, USA; [email protected] * Correspondence: [email protected]; Tel.: +1-802-865-8446 Received: 7 December 2018; Accepted: 23 January 2019; Published: 28 January 2019 Abstract: One communication approach that lately has become more common is astroturfing, which has been more prominent since the proliferation of social media platforms. In this context, astroturfing is a fake grass-roots political campaign that aims to manipulate a certain audience. This exploratory research examined how effective astroturfing is in mitigating citizens’ natural defenses against politically persuasive messages. An experimental method was used to examine the persuasiveness of social media messages related to coal energy in their ability to persuade citizens and increase their level of nationalism. The results suggest that citizens are more likely to be persuaded by an astroturfed message than people who are exposed to a non-astroturfed message, regardless of their political leanings. However, the messages were not successful in altering an individual’s nationalistic views at the moment of exposure. The authors discuss these findings and propose how in a long-term context, astroturfing is a dangerous addition to persuasive communication. Keywords: astroturfing; persuasion knowledge; nationalism; coal energy; social media 1. Introduction Astroturfing is the simulation of a political campaign which is intended to manipulate the target audience. Often, the purpose of such a campaign is not clearly recognizable because a political effort is disguised as a grassroots operation. -

The First Amendment, the Public-Private Distinction, and Nongovernmental Suppression of Wartime Political Debate Gregory P

Working Paper Series Villanova University Charles Widger School of Law Year 2004 The First Amendment, The Public-Private Distinction, and Nongovernmental Suppression of Wartime Political Debate Gregory P. Magarian Villanova University School of Law, [email protected] This paper is posted at Villanova University Charles Widger School of Law Digital Repository. http://digitalcommons.law.villanova.edu/wps/art6 THE FIRST AMENDMENT, THE PUBLIC -PRIVA TE DISTINCTION, AND NONGOVERNMENTAL SUPPRESSION OF WARTIME POLITICAL DEBATE 1 BY GREGORY P. MAGARIAN DRAFT 5-12-04 TABLE OF CONTENTS INTRODUCTION ......................................................................................... 1 I. CONFRONTING NONGOVERNMENTAL CENSORSHIP OF POLITICAL DEBATE IN WARTIME .................. 5 A. The Value and Vulnerability of Wartime Political Debate ........................................................................... 5 1. The Historical Vulnerability of Wartime Political Debate to Nongovernmental Suppression ....................................................................... 5 2. The Public Rights Theory of Expressive Freedom and the Necessity of Robust Political Debate for Democratic Self -Government........................ 11 B. Nongovernmental Censorship of Political Speech During the “War on Terrorism” ............................................... 18 1. Misinformation and Suppression of Information by News Media ............................................ 19 2. Exclusions of Political Speakers from Privately Owned Public Spaces. -

Political Astroturfing Across the World

Political astroturfing across the world Franziska B. Keller∗ David Schoch† Sebastian Stier‡ JungHwan Yang§ (∗Hong Kong University of Science and Technology; †The University of Manchester; ‡GESIS – Leibniz Institute for the Social Sciences; §University of Illinois at Urbana-Champaign) Paper prepared for the Harvard Disinformation Workshop Update 1 Introduction At the very latest since the Russian Internet Research Agency’s (IRA) intervention in the U.S. presidential election, scholars and the broader public have become wary of coordinated disinformation campaigns. These hidden activities aim to deteriorate the public’s trust in electoral institutions or the government’s legitimacy, and can exacerbate political polarization. But unfortunately, academic and public debates on the topic are haunted by conceptual ambiguities, and rely on few memorable examples, epitomized by the often cited “social bots” who are accused of having tried to influence public opinion in various contemporary political events. In this paper, we examine “political astroturfing,” a particular form of centrally coordinated disinformation campaigns in which participants pretend to be ordinary citizens acting independently (Kovic, Rauchfleisch, Sele, & Caspar, 2018). While the accounts associated with a disinformation campaign may not necessarily spread incorrect information, they deceive the audience about the identity and motivation of the person manning the account. And even though social bots have been in the focus of most academic research (Ferrara, Varol, Davis, Menczer, & Flammini, 2016; Stella, Ferrara, & De Domenico, 2018), seemingly automated accounts make up only a small part – if any – of most astroturfing campaigns. For instigators of astroturfing, relying exclusively on social bots is not a promising strategy, as humans are good at detecting low-quality information (Pennycook & Rand, 2019). On the other hand, many bots detected by state-of-the-art social bot detection methods are not part of an astroturfing campaign, but unrelated non-political spam bots. -

Propaganda Fitzmaurice

Propaganda Fitzmaurice Propaganda Katherine Fitzmaurice Brock University Abstract This essay looks at how the definition and use of the word propaganda has evolved throughout history. In particular, it examines how propaganda and education are intrinsically linked, and the implications of such a relationship. Propaganda’s role in education is problematic as on the surface, it appears to serve as a warning against the dangers of propaganda, yet at the same time it disseminates the ideology of a dominant political power through curriculum and practice. Although propaganda can easily permeate our thoughts and actions, critical thinking and awareness can provide the best defense against falling into propaganda’s trap of conformity and ignorance. Keywords: propaganda, education, indoctrination, curriculum, ideology Katherine Fitzmaurice is a Master’s of Education (M.Ed.) student at Brock University. She is currently employed in the private business sector and is a volunteer with several local educational organizations. Her research interests include adult literacy education, issues of access and equity for marginalized adults, and the future and widening of adult education. Email: [email protected] 63 Brock Education Journal, 27(2), 2018 Propaganda Fitzmaurice According to the Oxford English Dictionary (OED, 2011) the word propaganda can be traced back to 1621-23, when it first appeared in “Congregatio de progapanda fide,” meaning “congregation for propagating the faith.” This was a mission, commissioned by Pope Gregory XV, to spread the doctrine of the Catholic Church to non-believers. At the time, propaganda was defined as “an organization, scheme, or movement for the propagation of a particular doctrine, practice, etc.” (OED). -

Blood, Sweat, and Fear: Workers' Rights in U.S. Meat and Poultry Plants

BLOOD, SWEAT, AND FEAR Workers’ Rights in U.S. Meat and Poultry Plants Human Rights Watch Copyright © 2004 by Human Rights Watch. All rights reserved. Printed in the United States of America ISBN: 1-56432-330-7 Cover photo: © 1999 Eugene Richards/Magnum Photos Cover design by Rafael Jimenez Human Rights Watch 350 Fifth Avenue, 34th floor New York, NY 10118-3299 USA Tel: 1-(212) 290-4700, Fax: 1-(212) 736-1300 [email protected] 1630 Connecticut Avenue, N.W., Suite 500 Washington, DC 20009 USA Tel:1-(202) 612-4321, Fax:1-(202) 612-4333 [email protected] 2nd Floor, 2-12 Pentonville Road London N1 9HF, UK Tel: 44 20 7713 1995, Fax: 44 20 7713 1800 [email protected] Rue Van Campenhout 15, 1000 Brussels, Belgium Tel: 32 (2) 732-2009, Fax: 32 (2) 732-0471 [email protected] 8 rue des Vieux-Grenadiers 1205 Geneva Tel: +41 22 320 55 90, Fax: +41 22 320 55 11 [email protected] Web Site Address: http://www.hrw.org Listserv address: To receive Human Rights Watch news releases by email, subscribe to the HRW news listserv of your choice by visiting http://hrw.org/act/subscribe-mlists/subscribe.htm Human Rights Watch is dedicated to protecting the human rights of people around the world. We stand with victims and activists to prevent discrimination, to uphold political freedom, to protect people from inhumane conduct in wartime, and to bring offenders to justice. We investigate and expose human rights violations and hold abusers accountable. We challenge governments and those who hold power to end abusive practices and respect international human rights law. -

Hard-Rock Mining, Labor Unions, and Irish Nationalism in the Mountain West and Idaho, 1850-1900

UNPOLISHED EMERALDS IN THE GEM STATE: HARD-ROCK MINING, LABOR UNIONS AND IRISH NATIONALISM IN THE MOUNTAIN WEST AND IDAHO, 1850-1900 by Victor D. Higgins A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts in History Boise State University August 2017 © 2017 Victor D. Higgins ALL RIGHTS RESERVED BOISE STATE UNIVERSITY GRADUATE COLLEGE DEFENSE COMMITTEE AND FINAL READING APPROVALS of the thesis submitted by Victor D. Higgins Thesis Title: Unpolished Emeralds in the Gem State: Hard-rock Mining, Labor Unions, and Irish Nationalism in the Mountain West and Idaho, 1850-1900 Date of Final Oral Examination: 16 June 2017 The following individuals read and discussed the thesis submitted by student Victor D. Higgins, and they evaluated his presentation and response to questions during the final oral examination. They found that the student passed the final oral examination. John Bieter, Ph.D. Chair, Supervisory Committee Jill K. Gill, Ph.D. Member, Supervisory Committee Raymond J. Krohn, Ph.D. Member, Supervisory Committee The final reading approval of the thesis was granted by John Bieter, Ph.D., Chair of the Supervisory Committee. The thesis was approved by the Graduate College. ACKNOWLEDGEMENTS The author appreciates all the assistance rendered by Boise State University faculty and staff, and the university’s Basque Studies Program. Also, the Idaho Military Museum, the Idaho State Archives, the Northwest Museum of Arts and Culture, and the Wallace District Mining Museum, all of whom helped immensely with research. And of course, Hunnybunny for all her support and patience. iv ABSTRACT Irish immigration to the United States, extant since the 1600s, exponentially increased during the Irish Great Famine of 1845-52. -

Getting Beneath the Surface: Scapegoating and the Systems Approach in a Post-Munro World Introduction the Publication of The

Getting beneath the surface: Scapegoating and the Systems Approach in a post-Munro world Introduction The publication of the Munro Review of Child Protection: Final Report (2011) was the culmination of an extensive and expansive consultation process into the current state of child protection practice across the UK. The report focused on the recurrence of serious shortcomings in social work practice and proposed an alternative system-wide shift in perspective to address these entrenched difficulties. Inter-woven throughout the report is concern about the adverse consequences of a pervasive culture of individual blame on professional practice. The report concentrates on the need to address this by reconfiguring the organisational responses to professional errors and shortcomings through the adoption of a ‘systems approach’. Despite the pre-occupation with ‘blame’ within the report there is, surprisingly, at no point an explicit reference to the dynamics and practices of ‘scapegoating’ that are so closely associated with organisational blame cultures. Equally notable is the absence of any recognition of the reasons why the dynamics of individual blame and scapegoating are so difficult to overcome or to ‘resist’. Yet this paper argues that the persistence of scapegoating is a significant impediment to the effective implementation of a systems approach as it risks distorting understanding of what has gone wrong and therefore of how to prevent it in the future. It is hard not to agree wholeheartedly with the good intentions of the developments proposed by Munro, but equally it is imperative that a realistic perspective is retained in relation to the challenges that would be faced in rolling out this new organisational agenda. -

Spiral of Silence and the Iraq War

Rochester Institute of Technology RIT Scholar Works Theses 12-1-2008 Spiral of silence and the Iraq war Jessica Drake Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Drake, Jessica, "Spiral of silence and the Iraq war" (2008). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. Spiral Of Silence And The Iraq War 1 Running Head: SPIRAL OF SILENCE AND THE IRAQ WAR The Rochester Institute of Technology Department of Communication College of Liberal Arts Spiral of Silence, Public Opinion and the Iraq War: Factors Influencing One’s Willingness to Express their Opinion by Jessica Drake A Paper Submitted In partial fulfillment of the Master of Science degree in Communication & Media Technologies Degree Awarded: December 11, 2008 Spiral Of Silence And The Iraq War 2 The members of the Committee approve the thesis of Jessica Drake presented on 12/11/2008 ___________________________________________ Bruce A. Austin, Ph.D. Chairman and Professor of Communication Department of Communication Thesis Advisor ___________________________________________ Franz Foltz, Ph.D. Associate Professor Department of Science, Technology and Society/Public Policy Thesis Advisor ___________________________________________ Rudy Pugliese, Ph.D. Professor of Communication Coordinator, Communication & Media Technologies Graduate Degree Program Department of Communication Thesis Advisor Spiral Of Silence And The Iraq War 3 Table of Contents Abstract ………………………………………………………………………………………… 4 Introduction ……………………………………………………………………………………. 5 Project Rationale ………………………………………………………………………………. 8 Review of Literature …………………………………………………………………………. 10 Method ………………………………………………………………………………………... -

Illinois Congressional Delegation Bios

Illinois Congressional Delegation Bios Senator Richard Durbin (D-IL) Senator Dick Durbin, a Democrat from Springfield, is the 47th U.S. Senator from the State of Illinois, the state’s senior senator, and the convener of Illinois’ bipartisan congressional delegation. Durbin also serves as the Assistant Democratic Leader, the second highest ranking position among the Senate Democrats. Also known as the Minority Whip, Senator Durbin has been elected to this leadership post by his Democratic colleagues every two years since 2005. Elected to the U.S. Senate on November 5, 1996, and re-elected in 2002, 2008, and 2014, Durbin fills the seat left vacant by the retirement of his long-time friend and mentor, U.S. Senator Paul Simon. Durbin sits on the Senate Judiciary, Appropriations, and Rules Committees. He is the Ranking Member of the Judiciary Committee's Subcommittee on the Constitution and the Appropriations Committee's Defense Subcommittee. Senator Tammy Duckworth (D-IL) U.S. Senator Tammy Duckworth is an Iraq War Veteran, Purple Heart recipient and former Assistant Secretary of the Department of Veterans Affairs. She was among the first Army women to fly combat missions during Operation Iraqi Freedom. Duckworth served in the Reserve Forces for 23 years before retiring from military service in 2014 at the rank of Lieutenant Colonel. She was elected to the U.S. Senate in 2016 after representing Illinois’s Eighth Congressional District in the U.S. House of Representatives for two terms. In 2004, Duckworth was deployed to Iraq as a Black Hawk helicopter pilot for the Illinois Army National Guard. -

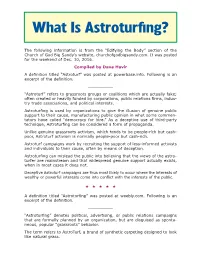

What Is Astroturfing? Churchofgodbigsandy.Com

What Is Astroturfing? The following information is from the “Edifying the Body” section of the Church of God Big Sandy’s website, churchofgodbigsandy.com. It was posted for the weekend of Dec. 10, 2016. Compiled by Dave Havir A definition titled “Astroturf” was posted at powerbase.info. Following is an excerpt of the definition. __________ “Astroturf” refers to grassroots groups or coalitions which are actually fake; often created or heavily funded by corporations, public relations firms, industry trade associations, and political interests. Astroturfing is used by organizations to give the illusion of genuine public support to their cause, manufacturing public opinion in what some commentators have called “democracy for hire.” As a deceptive use of third-party technique, Astroturfing can be considered a form of propaganda. Unlike genuine grassroots activism, which tends to be people-rich but cash-poor, Astroturf activism is normally people-poor but cash-rich. Astroturf campaigns work by recruiting the support of less-informed activists and individuals to their cause, often by means of deception. Astroturfing can mislead the public into believing that the views of the astroturfer are mainstream and that widespread genuine support actually exists, when in most cases it does not. Deceptive Astroturf campaigns are thus most likely to occur where the interests of wealthy or powerful interests come into conflict with the interests of the public. ★★★★★ A definition titled “Astroturfing” was posted at weebly.com. Following is an excerpt of the definition. __________ “Astroturfing” denotes political, advertising, or public relations campaigns that are formally planned by an organization, but are disguised as spontaneous, popular “grassroots” behavior.