Political Astroturfing Across the World

Recommended publications

arXiv:1805.10105v1 [cs.SI] 25 May 2018

Effects of Social Bots in the Iran-Debate on Twitter. Andree Thieltges, Orestis Papakyriakopoulos, Juan Carlos Medina Serrano, Simon Hegelich. Bavarian School of Public Policy, Technical University of Munich.

Abstract: 2018 started with massive protests in Iran, bringing back the impressions of the so-called “Arab Spring” and its revolutionary impact for the Maghreb states, Syria and Egypt. Many reports and scientific examinations considered online social networks (OSNs) such as Twitter or Facebook to play a critical role in the opinion making of people behind those protests. Besides that, there is also evidence for directed manipulation of opinion with the help of social bots and fake accounts. So it is natural to ask whether there is an attempt to manipulate the opinion-making process related to the Iranian protest in OSNs by employing social bots, and how such manipulations will affect the discourse as a whole. Based on a sample of ca. [...]

[...] Ahmadinejad caused nationwide unrest and protests. As these protests grew, the Iranian government shut down foreign news coverage and restricted the use of cell phones, text-messaging and internet access. Nevertheless, Twitter and Facebook “became vital tools to relay news and information on anti-government protest to the people inside and outside Iran”. While Ayatollah Khamenei characterized the influence of Twitter as “deviant” and inappropriate on Iranian domestic affairs, most of the foreign news coverage hailed Twitter to be “a new and influential medium for social movements and international politics” (Burns and Eltham 2009). Besides that, there was already a critical view upon the influence of OSNs as “tools” to express political opin- [...]

An Examination of the Impact of Astroturfing on Nationalism: A

Social Sciences. Article. An Examination of the Impact of Astroturfing on Nationalism: A Persuasion Knowledge Perspective. Kenneth M. Henrie 1,* and Christian Gilde 2. 1 Stiller School of Business, Champlain College, Burlington, VT 05401, USA. 2 Department of Business and Technology, University of Montana Western, Dillon, MT 59725, USA. * Correspondence: Tel.: +1-802-865-8446. Received: 7 December 2018; Accepted: 23 January 2019; Published: 28 January 2019.

Abstract: One communication approach that lately has become more common is astroturfing, which has been more prominent since the proliferation of social media platforms. In this context, astroturfing is a fake grass-roots political campaign that aims to manipulate a certain audience. This exploratory research examined how effective astroturfing is in mitigating citizens’ natural defenses against politically persuasive messages. An experimental method was used to examine the ability of social media messages related to coal energy to persuade citizens and increase their level of nationalism. The results suggest that citizens exposed to an astroturfed message are more likely to be persuaded than those exposed to a non-astroturfed message, regardless of their political leanings. However, the messages were not successful in altering an individual’s nationalistic views at the moment of exposure. The authors discuss these findings and propose how, in a long-term context, astroturfing is a dangerous addition to persuasive communication. Keywords: astroturfing; persuasion knowledge; nationalism; coal energy; social media.

1. Introduction. Astroturfing is the simulation of a political campaign which is intended to manipulate the target audience. Often, the purpose of such a campaign is not clearly recognizable because a political effort is disguised as a grassroots operation.

Understanding Users' Perspectives of News Bots in the Age of Social Media

Sustainability. Article. Utilizing Bots for Sustainable News Business: Understanding Users’ Perspectives of News Bots in the Age of Social Media. Hyehyun Hong 1 and Hyun Jee Oh 2,*. 1 Department of Advertising and Public Relations, Chung-Ang University, Seoul 06974, Korea. 2 Department of Communication Studies, Hong Kong Baptist University, Kowloon Tong, Kowloon, Hong Kong SAR, China. Received: 15 July 2020; Accepted: 10 August 2020; Published: 12 August 2020.

Abstract: The move of news audiences to social media has presented a major challenge for news organizations. How to adapt and adjust to this social media environment is an important issue for sustainable news business. News bots are one of the key technologies offered in the current media environment and are widely applied in news production, dissemination, and interaction with audiences. While benefits and concerns coexist about the application of bots in news organizations, the current study aimed to examine how social media users perceive news bots, the factors that affect their acceptance of bots in news organizations, and how this is related to their evaluation of social media news in general. An analysis of the US national survey dataset showed that self-efficacy (confidence in identifying content from a bot) was a successful predictor of news bot acceptance, which in turn resulted in a positive evaluation of social media news in general. In addition, an individual’s perceived prevalence of social media news from bots had an indirect effect on acceptance by increasing self-efficacy. The results are discussed with the aim of providing a better understanding of news audiences in the social media environment, and practical implications for the sustainable news business are suggested.

Supplementary Materials For

www.sciencemag.org/content/359/6380/1094/suppl/DC1

Supplementary Materials for The science of fake news. David M. J. Lazer,*† Matthew A. Baum,* Yochai Benkler, Adam J. Berinsky, Kelly M. Greenhill, Filippo Menczer, Miriam J. Metzger, Brendan Nyhan, Gordon Pennycook, David Rothschild, Michael Schudson, Steven A. Sloman, Cass R. Sunstein, Emily A. Thorson, Duncan J. Watts, Jonathan L. Zittrain. *These authors contributed equally to this work. †Corresponding author. Published 9 March 2018, Science 359, 1094 (2018). DOI: 10.1126/science.aao2998. This PDF file includes: List of Author Affiliations; Supporting Materials; References.

List of Author Affiliations: David M. J. Lazer,1,2*† Matthew A. Baum,3* Yochai Benkler,4,5 Adam J. Berinsky,6 Kelly M. Greenhill,7,3 Filippo Menczer,8 Miriam J. Metzger,9 Brendan Nyhan,10 Gordon Pennycook,11 David Rothschild,12 Michael Schudson,13 Steven A. Sloman,14 Cass R. Sunstein,4 Emily A. Thorson,15 Duncan J. Watts,12 Jonathan L. Zittrain4,5

1 Network Science Institute, Northeastern University, Boston, MA 02115, USA. 2 Institute for Quantitative Social Science, Harvard University, Cambridge, MA 02138, USA. 3 John F. Kennedy School of Government, Harvard University, Cambridge, MA 02138, USA. 4 Harvard Law School, Harvard University, Cambridge, MA 02138, USA. 5 Berkman Klein Center for Internet and Society, Cambridge, MA 02138, USA. 6 Department of Political Science, Massachusetts Institute of Technology, Cambridge, MA 02139, USA. 7 Department of Political Science, Tufts University, Medford, MA 02155, USA. 8 School of Informatics, Computing, and Engineering, Indiana University, Bloomington, IN 47405, USA. 9 Department of Communication, University of California, Santa Barbara, Santa Barbara, CA 93106, USA.

Frame Viral Tweets.Pdf

BIG IDEAS! Challenging Public Relations Research and Practice. Viral Tweets, Fake News and Social Bots in Post-Factual Politics: The Case of the French Presidential Elections 2017. Alex Frame, Gilles Brachotte, Eric Leclercq, Marinette Savonnet. 20th Euprera Congress, Aarhus, 27-29th September 2018.

Post-Factual Politics in the Age of Social Media

1. Algorithms based on popularity rather than veracity, linked to a business model based on views and likes, where novelty and sensationalism are of the essence;
2. Social trends of “whistle-blowing” linked to conspiracy theories and a pejorative image of corporate or institutional communication, which cast doubt on the neutrality of traditionally so-called expert figures, such as independent bodies or academics;
3. Algorithm-fuelled social dynamics on social networking sites which structure publics by similarity, leading to a fragmented digital public sphere where like-minded individuals congregate digitally, providing an “echo-chamber” effect liable to encourage even the most extreme political views and the “truths” upon which they are based.

Fake News at the Presidential Elections

Political Social Bots on Twitter: Increasingly widespread around the world, over at least the last decade. Kremlin bot army (Lawrence Alexander on GlobalVoices.org; Stukal et al., 2017). DFRLab (Atlantic Council).

Social Bots: A “social bot” is: “a computer algorithm that automatically produces content and [...]

Empirical Comparative Analysis of Bot Evidence in Social Networks

Bots in Nets: Empirical Comparative Analysis of Bot Evidence in Social Networks. Ross Schuchard 1, Andrew Crooks 1,2, Anthony Stefanidis 2,3, and Arie Croitoru 2. 1 Computational Social Science Program, Department of Computational and Data Sciences, George Mason University, Fairfax, VA 22030, USA. 2 Department of Geography and Geoinformation Science, George Mason University, Fairfax, VA 22030, USA. 3 Criminal Investigations and Network Analysis Center, George Mason University, Fairfax, VA 22030, USA.

Abstract. The emergence of social bots within online social networks (OSNs) to diffuse information at scale has given rise to many efforts to detect them. While methodologies employed to detect the evolving sophistication of bots continue to improve, much work can be done to characterize the impact of bots on communication networks. In this study, we present a framework to describe the pervasiveness and relative importance of participants recognized as bots in various OSN conversations. Specifically, we harvested over 30 million tweets from three major global events in 2016 (the U.S. Presidential Election, the Ukrainian Conflict and Turkish Political Censorship) and compared the conversational patterns of bots and humans within each event. We further examined the social network structure of each conversation to determine if bots exhibited any particular network influence, while also determining bot participation in key emergent network communities. The results showed that although participants recognized as social bots comprised only 0.28% of all OSN users in this study, they accounted for a significantly large portion of prominent centrality rankings across the three conversations. This includes the identification of individual bots as top-10 influencer nodes out of a total corpus consisting of more than 2.8 million nodes.

Disinformation, ‘Fake News’ and Influence Campaigns on Twitter

Disinformation, ‘Fake News’ and Influence Campaigns on Twitter. October 2018. Matthew Hindman (George Washington University) and Vlad Barash (Graphika).

Contents: Executive Summary; Introduction; A Problem Both Old and New; Defining Fake News Outlets; Bots, Trolls and ‘Cyborgs’ on Twitter; Map Methodology; Election Data and Maps (Election Core Map, Election Periphery Map, Postelection Map); Fake Accounts From Russia’s Most Prominent Troll Farm; Disinformation Campaigns on Twitter: Chronotopes (#NoDAPL, #WikiLeaks, #SpiritCooking, #SyriaHoax, #SethRich); Conclusion; Bibliography; Notes.

Executive Summary. This study is one of the largest analyses to date on how fake news spread on Twitter both during and after the 2016 election campaign. Using tools and mapping methods from Graphika, a social media intelligence firm, we study more than 10 million tweets from 700,000 Twitter accounts that linked to more than 600 fake and conspiracy news outlets. Crucially, we study fake and conspiracy news both before and after the election, allowing us to measure how the fake news ecosystem has evolved since November 2016. Much fake news and disinformation is still being spread on Twitter. Consistent with other research, we find more than 6.6 million tweets linking to fake and conspiracy news publishers in the month before the 2016 election. Yet disinformation continues to be a substantial problem postelection, with 4.0 million tweets linking to fake and conspiracy news publishers found in a 30-day period from mid-March to mid-April 2017. Contrary to claims that fake news is a game of “whack-a-mole,” more than 80 percent of the disinformation accounts in our election maps are still active as this report goes to press.

Social Bots and Social Media Manipulation in 2020: the Year in Review

Social Bots and Social Media Manipulation in 2020: The Year in Review. Ho-Chun Herbert Chang*a, Emily Chen*b,c, Meiqing Zhang*a, Goran Muric*b, and Emilio Ferrara a,b,c. a USC Annenberg School of Communication; b USC Information Sciences Institute; c USC Department of Computer Science. * These authors contributed equally to this work. arXiv:2102.08436v1 [cs.SI] 16 Feb 2021.

Abstract. The year 2020 will be remembered for two events of global significance: the COVID-19 pandemic and 2020 U.S. Presidential Election. In this chapter, we summarize recent studies using large public Twitter data sets on these issues. We have three primary objectives. First, we delineate epistemological and practical considerations when combining the traditions of computational research and social science research. A sensible balance should be struck when the stakes are high between advancing social theory and concrete, timely reporting of ongoing events. We additionally comment on the computational challenges of gleaning insight from large amounts of social media data. Second, we characterize the role of social bots in social media manipulation around the discourse on the COVID-19 pandemic and 2020 U.S. Presidential Election. Third, we compare results from 2020 to prior years to note that, although bot accounts still contribute to the emergence of echo-chambers, there is a transition from state-sponsored campaigns to domestically emergent sources of distortion. Furthermore, issues of public health can be confounded by political orientation, especially from localized communities of actors who spread misinformation. We conclude that automation and social media manipulation pose issues to a healthy and democratic discourse, precisely because they distort representation of pluralism within the public sphere.

The Rise of Social Botnets: Attacks and Countermeasures

The Rise of Social Botnets: Attacks and Countermeasures. Jinxue Zhang, Student Member, IEEE, Rui Zhang, Member, IEEE, Yanchao Zhang, Senior Member, IEEE, and Guanhua Yan.

Abstract: Online social networks (OSNs) are increasingly threatened by social bots which are software-controlled OSN accounts that mimic human users with malicious intentions. A social botnet refers to a group of social bots under the control of a single botmaster, which collaborate to conduct malicious behavior while mimicking the interactions among normal OSN users to reduce their individual risk of being detected. We demonstrate the effectiveness and advantages of exploiting a social botnet for spam distribution and digital-influence manipulation through real experiments on Twitter and also trace-driven simulations. We also propose the corresponding countermeasures and evaluate their effectiveness. Our results can help understand the potentially detrimental effects of social botnets and help OSNs improve their bot(net) detection systems. Index Terms: Social bot, social botnet, spam distribution, digital influence.

1 Introduction. Online social networks (OSNs) are increasingly threatened by social bots [2] which are software-controlled OSN accounts that mimic human users with malicious intentions. For example, according to a May 2012 article in Bloomberg Businessweek, as many as 40% of the accounts on Facebook, Twitter, and other popular OSNs are spammer accounts (or social bots), and about 8% of the messages sent via social [...]

[...] two new social botnet attacks on Twitter, one of the most popular OSNs with over 302M monthly active users as of June 2015 and over 500M new tweets daily. Then we propose two defenses on the reported attacks, respectively. Our results help understand the potentially detrimental effects of social botnets and shed the light for Twitter and other OSNs to improve their bot(net) detection systems.

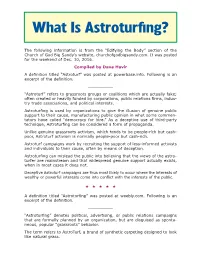

What Is Astroturfing? Churchofgodbigsandy.Com

What Is Astroturfing? The following information is from the “Edifying the Body” section of the Church of God Big Sandy’s website, churchofgodbigsandy.com. It was posted for the weekend of Dec. 10, 2016. Compiled by Dave Havir.

A definition titled “Astroturf” was posted at powerbase.info. Following is an excerpt of the definition.

“Astroturf” refers to grassroots groups or coalitions which are actually fake; often created or heavily funded by corporations, public relations firms, industry trade associations, and political interests. Astroturfing is used by organizations to give the illusion of genuine public support to their cause, manufacturing public opinion in what some commentators have called “democracy for hire.” As a deceptive use of third-party technique, Astroturfing can be considered a form of propaganda. Unlike genuine grassroots activism, which tends to be people-rich but cash-poor, Astroturf activism is normally people-poor but cash-rich. Astroturf campaigns work by recruiting the support of less-informed activists and individuals to their cause, often by means of deception. Astroturfing can mislead the public into believing that the views of the astroturfer are mainstream and that widespread genuine support actually exists, when in most cases it does not. Deceptive Astroturf campaigns are thus most likely to occur where the interests of wealthy or powerful interests come into conflict with the interests of the public.

A definition titled “Astroturfing” was posted at weebly.com. Following is an excerpt of the definition.

“Astroturfing” denotes political, advertising, or public relations campaigns that are formally planned by an organization, but are disguised as spontaneous, popular “grassroots” behavior.

Supervised Machine Learning Bot Detection Techniques to Identify

SMU Data Science Review, Volume 1, Number 2, Article 5, 2018. Supervised Machine Learning Bot Detection Techniques to Identify Social Twitter Bots. Phillip George Efthimion, Southern Methodist University; Scott Payne, Southern Methodist University; Nicholas Proferes, University of Kentucky. Follow this and additional works at: https://scholar.smu.edu/datasciencereview. Part of the Theory and Algorithms Commons.

Recommended Citation: Efthimion, Phillip George; Payne, Scott; and Proferes, Nicholas (2018) "Supervised Machine Learning Bot Detection Techniques to Identify Social Twitter Bots," SMU Data Science Review: Vol. 1: No. 2, Article 5. Available at: https://scholar.smu.edu/datasciencereview/vol1/iss2/5. This Article is brought to you for free and open access by SMU Scholar. It has been accepted for inclusion in SMU Data Science Review by an authorized administrator of SMU Scholar. For more information, please visit http://digitalrepository.smu.edu.

Phillip G. Efthimion 1, Scott Payne 1, Nick Proferes 2. 1 Master of Science in Data Science, Southern Methodist University, 6425 Boaz Lane, Dallas, TX 75205. {pefthimion, mspayne}@smu.edu

Abstract. In this paper, we present novel bot detection algorithms to identify Twitter bot accounts and to determine their prevalence in current online discourse. On social media, bots are ubiquitous. Bot accounts are problematic because they can manipulate information, spread misinformation, and promote unverified information, which can adversely affect public opinion on various topics, such as product sales and political campaigns.

You Must Be a Bot! How Beliefs Shape Twitter Profile Perceptions

Disagree? You Must be a Bot! How Beliefs Shape Twitter Profile Perceptions. Magdalena Wischnewski, Rebecca Bernemann, Thao Ngo, and Nicole Krämer, University of Duisburg-Essen, Germany.

In this paper, we investigate the human ability to distinguish political social bots from humans on Twitter. Following motivated reasoning theory from social and cognitive psychology, our central hypothesis is that especially those accounts which are opinion-incongruent are perceived as social bot accounts when the account is ambiguous about its nature. We also hypothesize that credibility ratings mediate this relationship. We asked N = 151 participants to evaluate 24 Twitter accounts and decide whether the accounts were humans or social bots. Findings support our motivated reasoning hypothesis for a sub-group of Twitter users (those who are more familiar with Twitter): Accounts that are opinion-incongruent are evaluated as relatively more bot-like than accounts that are opinion-congruent. Moreover, it does not matter whether the account is clearly social bot or human or ambiguous about its nature. This was mediated by perceived credibility in the sense that congruent profiles were evaluated to be more credible resulting in lower perceptions as bots.

CCS Concepts: • Human-centered computing → Empirical studies in HCI. Additional Key Words and Phrases: motivated reasoning, social bots, Twitter, credibility, partisanship, bias.

ACM Reference Format: Magdalena Wischnewski, Rebecca Bernemann, Thao Ngo, and Nicole Krämer. 2021. Disagree? You Must be a Bot! How Beliefs Shape Twitter Profile Perceptions. In CHI Conference on Human Factors in Computing Systems (CHI ’21), May 8–13, 2021, Yokohama, Japan.