From Anti-Vaxxer Moms to Militia Men: Influence Operations, Narrative Weaponization, and the Fracturing of American Identity

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Examination of the Impact of Astroturfing on Nationalism: A

social sciences Article An Examination of the Impact of Astroturfing on Nationalism: A Persuasion Knowledge Perspective Kenneth M. Henrie 1,* and Christian Gilde 2 1 Stiller School of Business, Champlain College, Burlington, VT 05401, USA 2 Department of Business and Technology, University of Montana Western, Dillon, MT 59725, USA; [email protected] * Correspondence: [email protected]; Tel.: +1-802-865-8446 Received: 7 December 2018; Accepted: 23 January 2019; Published: 28 January 2019 Abstract: One communication approach that lately has become more common is astroturfing, which has been more prominent since the proliferation of social media platforms. In this context, astroturfing is a fake grass-roots political campaign that aims to manipulate a certain audience. This exploratory research examined how effective astroturfing is in mitigating citizens’ natural defenses against politically persuasive messages. An experimental method was used to examine the persuasiveness of social media messages related to coal energy in their ability to persuade citizens and increase their level of nationalism. The results suggest that citizens are more likely to be persuaded by an astroturfed message than people who are exposed to a non-astroturfed message, regardless of their political leanings. However, the messages were not successful in altering an individual’s nationalistic views at the moment of exposure. The authors discuss these findings and propose how in a long-term context, astroturfing is a dangerous addition to persuasive communication. Keywords: astroturfing; persuasion knowledge; nationalism; coal energy; social media 1. Introduction Astroturfing is the simulation of a political campaign which is intended to manipulate the target audience. Often, the purpose of such a campaign is not clearly recognizable because a political effort is disguised as a grassroots operation. -

The Alt-Right Comes to Power by JA Smith

USApp – American Politics and Policy Blog Long Read Book Review: Deplorable Me: The Alt-Right Comes to Power by J.A. Smith J.A. Smith reflects on two recent books that help us to take stock of the election of President Donald Trump as part of the wider rise of the ‘alt-right’, questioning furthermore how the left today might contend with the emergence of those at one time termed ‘a basket of deplorables’. Deplorable Me: The Alt-Right Comes to Power Devil’s Bargain: Steve Bannon, Donald Trump and the Storming of the Presidency. Joshua Green. Penguin. 2017. Kill All Normies: Online Culture Wars from 4chan and Tumblr to Trump and the Alt-Right. Angela Nagle. Zero Books. 2017. In September last year, Hillary Clinton identified within Donald Trump’s support base a ‘basket of deplorables’, a milieu comprising Trump’s newly appointed campaign executive, the far-right Breitbart News’s Steve Bannon, and the numerous more or less ‘alt right’ celebrity bloggers, men’s rights activists, white supremacists, video-gaming YouTubers and message board-based trolling networks that operated in Breitbart’s orbit. This was a political misstep on a par with putting one’s opponent’s name in a campaign slogan, since those less au fait with this subculture could hear only contempt towards anyone sympathetic to Trump; while those within it wore Clinton’s condemnation as a badge of honour. Bannon himself was insouciant: ‘we polled the race stuff and it doesn’t matter […] It doesn’t move anyone who isn’t already in her camp’. -

Who Supports Donald J. Trump?: a Narrative-Based Analysis of His Supporters and of the Candidate Himself Mitchell A

University of Puget Sound Sound Ideas Summer Research Summer 2016 Who Supports Donald J. Trump?: A narrative-based analysis of his supporters and of the candidate himself Mitchell A. Carlson 7886304 University of Puget Sound, [email protected] Follow this and additional works at: http://soundideas.pugetsound.edu/summer_research Part of the American Politics Commons, and the Political Theory Commons Recommended Citation Carlson, Mitchell A. 7886304, "Who Supports Donald J. Trump?: A narrative-based analysis of his supporters and of the candidate himself" (2016). Summer Research. Paper 271. http://soundideas.pugetsound.edu/summer_research/271 This Article is brought to you for free and open access by Sound Ideas. It has been accepted for inclusion in Summer Research by an authorized administrator of Sound Ideas. For more information, please contact [email protected]. Mitchell Carlson Professor Robin Dale Jacobson 8/24/16 Who Supports Donald J. Trump? A narrative-based analysis of his supporters and of the candidate himself Introduction: The Voice of the People? “My opponent asks her supporters to recite a three-word loyalty pledge. It reads: ‘I’m With Her.’ I choose to recite a different pledge. My pledge reads: ‘I’m with you—the American people.’ I am your voice.” So said Donald J. Trump, Republican presidential nominee and billionaire real estate mogul, in his speech echoing Richard Nixon’s own convention speech centered on law-and-order in 1968. Introduced by his daughter Ivanka, Trump claimed at the Republican National Convention in Cleveland, Ohio that he—and he alone—is the voice of the people. -

Political Astroturfing Across the World

Political astroturfing across the world Franziska B. Keller (Hong Kong University of Science and Technology), David Schoch (The University of Manchester), Sebastian Stier (GESIS – Leibniz Institute for the Social Sciences), JungHwan Yang (University of Illinois at Urbana-Champaign) Paper prepared for the Harvard Disinformation Workshop Update 1 Introduction At the very latest since the Russian Internet Research Agency’s (IRA) intervention in the U.S. presidential election, scholars and the broader public have become wary of coordinated disinformation campaigns. These hidden activities aim to deteriorate the public’s trust in electoral institutions or the government’s legitimacy, and can exacerbate political polarization. But unfortunately, academic and public debates on the topic are haunted by conceptual ambiguities, and rely on few memorable examples, epitomized by the often cited “social bots” who are accused of having tried to influence public opinion in various contemporary political events. In this paper, we examine “political astroturfing,” a particular form of centrally coordinated disinformation campaigns in which participants pretend to be ordinary citizens acting independently (Kovic, Rauchfleisch, Sele, & Caspar, 2018). While the accounts associated with a disinformation campaign may not necessarily spread incorrect information, they deceive the audience about the identity and motivation of the person manning the account. And even though social bots have been in the focus of most academic research (Ferrara, Varol, Davis, Menczer, & Flammini, 2016; Stella, Ferrara, & De Domenico, 2018), seemingly automated accounts make up only a small part – if any – of most astroturfing campaigns. For instigators of astroturfing, relying exclusively on social bots is not a promising strategy, as humans are good at detecting low-quality information (Pennycook & Rand, 2019). On the other hand, many bots detected by state-of-the-art social bot detection methods are not part of an astroturfing campaign, but unrelated non-political spam bots. -

Nationalism, Neo-Liberalism, and Ethno-National Populism

H-Nationalism Nationalism, Neo-Liberalism, and Ethno-National Populism Blog Post published by Yoav Peled on Thursday, December 3, 2020 In this post, Yoav Peled, Tel Aviv University, discusses the relations between ethno-nationalism, neo-liberalism, and right-wing populism. Donald Trump’s failure to be reelected by a relatively narrow margin in the midst of the Coronavirus crisis points to the strength of ethno-national populism in the US, as elsewhere, and raises the question of the relations between nationalism and right-wing populism. Historically, American nationalism has been viewed as the prime example of inclusive civic nationalism, based on “constitutional patriotism.” Whatever the truth of this characterization, in the Trump era American civic nationalism is facing a formidable challenge in the form of White Christian nativist ethno-nationalism that utilizes populism as its mobilizational strategy. The key concept common to both nationalism and populism is “the people.” In nationalism the people are defined through vertical inclusion and horizontal exclusion -- by formal citizenship or by cultural-linguistic boundaries. Ideally, though not necessarily in practice, within the nation-state ascriptive markers such as race, religion, place of birth, etc., are ignored by the state. Populism on the other hand defines the people through both vertical and horizontal exclusion, by ascriptive markers as well as by class position (“elite” vs. “the people”) and even by political outlook. Israel’s Prime Minister Benjamin Netanyahu once famously averred that leftist Jewish Israelis “forgot how to be Jews,” and Trump famously stated that Jewish Americans who vote Democratic are traitors to their country, Israel. -

Classism, Ableism, and the Rise of Epistemic Injustice Against White, Working-Class Men

CLASSISM, ABLEISM, AND THE RISE OF EPISTEMIC INJUSTICE AGAINST WHITE, WORKING-CLASS MEN A thesis submitted in partial fulfillment of the requirements for the degree of Master of Humanities by SARAH E. BOSTIC B.A., Wright State University, 2017 2019 Wright State University WRIGHT STATE UNIVERSITY GRADUATE SCHOOL April 24, 2019 I HEREBY RECOMMEND THAT THE THESIS PREPARED UNDER MY SUPERVISION BY Sarah E. Bostic ENTITLED Classism, Ableism, and the Rise of Epistemic Injustice Against White, Working-Class Men BE ACCEPTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF Master of Humanities. Kelli Zaytoun, Ph.D. Thesis Director Valerie Stoker, Ph.D. Chair, Humanities Committee on Final Examination: Kelli Zaytoun, Ph.D. Jessica Penwell-Barnett, Ph.D. Donovan Miyasaki, Ph.D. Barry Milligan, Ph.D. Interim Dean of the Graduate School ABSTRACT Bostic, Sarah E. M.Hum. Master of Humanities Graduate Program, Wright State University, 2019. Classism, Ableism, and the Rise of Epistemic Injustice Against White, Working-Class Men. In this thesis, I set out to illustrate how epistemic injustice functions in this divide between white working-class men and the educated elite. I do this by discussing the discursive ways in which working-class knowledge and experience are devalued as legitimate sources of knowledge. I demonstrate this by using critical discourse analysis to interpret the underlying attitudes and ideologies in comments made by Clinton and Trump during their 2016 presidential campaigns. I also discuss how these ideologies are positively or negatively perceived by Trump’s working-class base. Using feminist standpoint theory and phenomenology as a lens of interpretation, I argue that white working-class men are increasingly alienated from progressive politics through classist and ableist rhetoric. -

What Is Astroturfing? Churchofgodbigsandy.Com

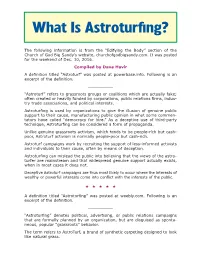

What Is Astroturfing? The following information is from the “Edifying the Body” section of the Church of God Big Sandy’s website, churchofgodbigsandy.com. It was posted for the weekend of Dec. 10, 2016. Compiled by Dave Havir A definition titled “Astroturf” was posted at powerbase.info. Following is an excerpt of the definition. “Astroturf” refers to grassroots groups or coalitions which are actually fake; often created or heavily funded by corporations, public relations firms, industry trade associations, and political interests. Astroturfing is used by organizations to give the illusion of genuine public support to their cause, manufacturing public opinion in what some commentators have called “democracy for hire.” As a deceptive use of third-party technique, Astroturfing can be considered a form of propaganda. Unlike genuine grassroots activism, which tends to be people-rich but cash-poor, Astroturf activism is normally people-poor but cash-rich. Astroturf campaigns work by recruiting the support of less-informed activists and individuals to their cause, often by means of deception. Astroturfing can mislead the public into believing that the views of the astroturfer are mainstream and that widespread genuine support actually exists, when in most cases it does not. Deceptive Astroturf campaigns are thus most likely to occur where the interests of wealthy or powerful interests come into conflict with the interests of the public. ★★★★★ A definition titled “Astroturfing” was posted at weebly.com. Following is an excerpt of the definition. “Astroturfing” denotes political, advertising, or public relations campaigns that are formally planned by an organization, but are disguised as spontaneous, popular “grassroots” behavior. -

A New Study Finds That Trump Supporters Are More Likely to Be Islamophobic, Racist, Transphobic and Homophobic

A ‘basket of deplorables’? A new study finds that Trump supporters are more likely to be Islamophobic, racist, transphobic and homophobic. blogs.lse.ac.uk/usappblog/2016/10/10/a-basket-of-deplorables-a-new-study-finds-that-trump-supporters-are-more-likely-to-be-islamophobic-racist-transphobic-and-homophobic/ 10/10/2016 Last month Hillary Clinton stepped into controversy when she described ‘half’ of Donald Trump’s supporters as a ‘basket of deplorables’. In a new study, Karen L. Blair looks at how Clinton and Trump voters’ attitudes on themes such as sexism, authoritarianism and Islamophobia differ. She finds that Islamophobia is closely linked with support for Trump, and that the strongest predictor of voting for someone other than Clinton or Trump was not disagreeing with Clinton ideologically, but ambivalent sexism. On September 9th, 2016, Hillary Clinton gave a speech at the LGBT for Hillary Gala in New York City in which she referred to ‘half’ of Donald Trump’s supporters as a ‘basket of deplorables’ who espouse ‘racist, sexist, homophobic, xenophobic [and] Islamophobic’ sentiments. Although Clinton had prefaced her comments by acknowledging that she was about to make an overgeneralization, the backlash to her comments was swift, with Trump charging that her comments showed her true ‘contempt for everyday Americans’. Clinton apologized for her remarks, but doubled down in depicting Trump’s campaign as one based in ‘bigotry and racist rhetoric.’ Was there any truth to Hillary Clinton’s depiction of Trump supporters? To what extent can -

How Do Internet Memes Speak Security? by Loui Marchant

How do Internet memes speak security? by Loui Marchant This work is licensed under a Creative Commons Attribution 3.0 License. Abstract Recently, scholars have worked to widen the scope of security studies to address security silences by considering how visuals speak security and banal acts securitise. Research on Internet memes and their co-constitution with political discourse is also growing. This article seeks to merge this literature by engaging in a visual security analysis of everyday memes. A bricolage-inspired method analyses the security speech of memes as manifestations, behaviours and ideals. The case study of Pepe the Frog highlights how memes are visual ‘little security nothings’ with power to speak security in complex and polysemic ways which can reify or challenge wider security discourse. Keywords: Memes, Security, Visual, Everyday, Internet. Introduction Security Studies can be broadly defined as analysing “the politics of the pursuit of freedom from threat” (Buzan 2000: 2). In recent decades, scholars have attempted to widen security studies beyond traditional state-centred concerns as “the focus on the state in the production of security creates silences” (Croft 2006: 387). These security silences can be defined as “places where security is produced and is articulated that are important and valid, and yet are not usual in the study of security” (2006: 387). A widening approach seeks to address these silences, allowing for the analysis of environmental, economic and societal threats which are arguably just as relevant to international relations as those emanating from state actors. Visual and everyday security studies are overlapping approaches which seek to break these silences by analysing iterations of security formerly overlooked by security studies. -

Media Manipulation and Disinformation Online Alice Marwick and Rebecca Lewis CONTENTS

Media Manipulation and Disinformation Online Alice Marwick and Rebecca Lewis
CONTENTS
Executive Summary
Understanding Media Manipulation
Who is Manipulating the Media?
    Internet Trolls
    Gamergaters
    Hate Groups and Ideologues
        The Alt-Right
        The Manosphere
    Conspiracy Theorists
    Influencers
    Hyper-Partisan News Outlets
What Techniques Do Media Manipulators Use?
    Participatory Culture
    Networks
    Memes
    Bots
    Strategic Amplification and Framing
Why is the Media Vulnerable?
    Lack of Trust in Media
    Decline of Local News
    The Attention Economy
What are the Outcomes? -

A Recognitive Theory of Identity and the Structuring of Public Space

A RECOGNITIVE THEORY OF IDENTITY AND THE STRUCTURING OF PUBLIC SPACE Benjamin Carpenter [email protected] Doctoral Thesis University of East Anglia School of Politics, Philosophy, Language and Communication Studies September 2020 This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that use of any information derived there from must be in accordance with current UK Copyright Law. In addition, any quotation or extract must include full attribution. ABSTRACT This thesis presents a theory of the self as produced through processes of recognition that unfold and are conditioned by public, political spaces. My account stresses the dynamic and continuous processes of identity formation, understanding the self as continually composed through intersubjective processes of recognition that unfold within and are conditioned by the public spaces wherein subjects appear before one another. My theory of the self informs a critique of contemporary identity politics, understanding the justice sought by such politics as hampered by identity enclosure. In contrast to my understanding of the self, the self of identity enclosure is understood as a series of connecting, philosophical pathologies that replicate conditions of oppression through their ontological, epistemological, and phenomenological positions on the self and political space. The politics of enclosure hinge upon a presumptive fixity, understanding the self as abstracted from political spaces of appearance, as a factic entity that is simply given once and for all. Beginning with Hegel's account of identity as recognised, I stress the phenomenological dimensions of recognition, using these to demonstrate how recognition requires a fundamental break from the fixity and rigidity often displayed within the politics of enclosure. -

Introduction: What Is Computational Propaganda?

1 Introduction: What Is Computational Propaganda? Digital technologies hold great promise for democracy. Social media tools and the wider resources of the Internet offer tremendous access to data, knowledge, social networks, and collective engagement opportunities, and can help us to build better democracies (Howard, 2015; Margetts et al., 2015). Unwelcome obstacles are, however, disrupting the creative democratic applications of information technologies (Woolley, 2016; Gallacher et al., 2017; Vosoughi, Roy, & Aral, 2018). Massive social platforms like Facebook and Twitter are struggling to come to grips with the ways their creations can be used for political control. Social media algorithms may be creating echo chambers in which public conversations get polluted and polarized. Surveillance capabilities are outstripping civil protections. Political “bots” (software agents used to generate simple messages and “conversations” on social media) are masquerading as genuine grassroots movements to manipulate public opinion. Online hate speech is gaining currency. Malicious actors and digital marketers run junk news factories that disseminate misinformation to harm opponents or earn click-through advertising revenue. It is no exaggeration to say that coordinated efforts are even now working to seed chaos in many political systems worldwide. Some militaries and intelligence agencies are making use of social media as conduits to undermine democratic processes and bring down democratic institutions altogether (Bradshaw & Howard, 2017). Most democratic governments are preparing their legal and regulatory responses. But unintended consequences from over-regulation, or regulation uninformed by systematic research, may be as damaging to democratic systems as the threats themselves. We live in a time of extraordinary political upheaval and change, with political movements and parties rising and declining rapidly (Kreiss, 2016; Anstead, 2017).