History, Historians and Development Policy

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

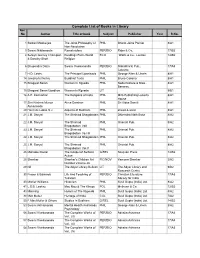

Complete List of Books in Library Acc No Author Title of Book Subject Publisher Year R.No

| Acc No | Author | Title of Book | Subject | Publisher | Year | R.No. |
|---|---|---|---|---|---|---|
| 1 | Satkari Mookerjee | The Jaina Philosophy of Non-Absolutism | PHIL | Bharat Jaina Parisat | | 8/A1 |
| 3 | Swami Nikilananda | Ramakrishna | PER/BIO | Rider & Co. | | 17/B2 |
| 4 | Selwyn Gurney Champion & Dorothy Short | Readings From World Religion | ECO | Watts & Co., London | | 14/B2 |
| 6 | Bhupendra Datta | Swami Vivekananda | PER/BIO | Nababharat Pub., Calcutta | | 17/A3 |
| 7 | H.D. Lewis | The Principal Upanisads | PHIL | George Allen & Unwin | | 8/A1 |
| 14 | Jawaherlal Nehru | Buddhist Texts | PHIL | Bruno Cassirer | | 8/A1 |
| 15 | Bhagwat Saran | Women In Rgveda | PHIL | Nada Kishore & Bros., Benares | | 8/A1 |
| 15 | Bhagwat Saran Upadhya | Women in Rgveda | LIT | | | 9/B1 |
| 16 | A.P. Karmarkar | The Religions of India | PHIL | Mira Publishing House, Lonavla | | 8/A1 |
| 17 | Shri Krishna Menon Atmananda | Atma-Darshan | PHIL | Sri Vidya Samiti | | 8/A1 |
| 20 | Henri de Lubac S.J. | Aspects of Budhism | PHIL | Sheed & Ward | | 8/A1 |
| 21 | J.M. Sanyal | The Shrimad Bhagabatam | PHIL | Dhirendra Nath Bose | | 8/A2 |
| 22 | J.M. Sanyal | The Shrimad Bhagabatam Vol. I | PHIL | Oriental Pub. | | 8/A2 |
| 23 | J.M. Sanyal | The Shrimad Bhagabatam Vol. III | PHIL | Oriental Pub. | | 8/A2 |
| 24 | J.M. Sanyal | The Shrimad Bhagabatam | PHIL | Oriental Pub. | | 8/A2 |
| 25 | J.M. Sanyal | The Shrimad Bhagabatam Vol. V | PHIL | Oriental Pub. | | 8/A2 |
| 26 | Mahadev Desai | The Gospel of Selfless Action | G/REL | Navijvan Press | | 14/B2 |
| 28 | Shankar | Shankar's Children Art Number Volume 28 | FIC/NOV | Yamuna Shankar | | 2/A2 |
| 29 | Nil | The Adyar Library Bulletin | LIT | The Adyar Library and Research Centre | | 9/B2 |
| 30 | Fraser & Edwards | Life And Teaching of Tukaram | PER/BIO | Christian Literature Society for India | | 17/A3 |
| 40 | Monier Williams | Hinduism | PHIL | Susil Gupta (India) Ltd. | | |

Colony and Empire, Colonialism and Imperialism: a Meaningful Distinction?

Comparative Studies in Society and History 2021;63(2):280–309. 0010-4175/21 © The Author(s), 2021. Published by Cambridge University Press on behalf of the Society for the Comparative Study of Society and History. doi:10.1017/S0010417521000050

Colony and Empire, Colonialism and Imperialism: A Meaningful Distinction?

KRISHAN KUMAR, University of Virginia, Charlottesville, VA, USA

It is a mistaken notion that planting of colonies and extending of Empire are necessarily one and the same thing.
(Major John Cartwright, Ten Letters to the Public Advertiser, 20 March–14 April 1774, in Koebner 1961: 200)

There are two ways to conquer a country; the first is to subordinate the inhabitants and govern them directly or indirectly.… The second is to replace the former inhabitants with the conquering race.
(Alexis de Tocqueville 2001[1841]: 61)

One can instinctively think of neo-colonialism but there is no such thing as neo-settler colonialism.
(Lorenzo Veracini 2010: 100)

WHAT’S IN A NAME?

It is rare in popular usage to distinguish between imperialism and colonialism. They are treated for most intents and purposes as synonyms. The same is true of many scholarly accounts, which move freely between imperialism and colonialism without apparently feeling any discomfort or need to explain themselves. So, for instance, Dane Kennedy defines colonialism as “the imposition by foreign power of direct rule over another people” (2016: 1), which for most people would do very well as a definition of empire, or imperialism. Moreover, he comments that “decolonization did not necessarily

Acknowledgments: This paper is a much-revised version of a presentation given many years ago at a seminar on empires organized by Patricia Crone, at the Institute for Advanced Study, Princeton.

Academic Programmes and Admissions

43rd ANNUAL REPORT, 1 April 2012 – 31 March 2013, PART II

JAWAHARLAL NEHRU UNIVERSITY, NEW DELHI

www.jnu.ac.in

CONTENTS

- THE LEGEND
- ACADEMIC PROGRAMMES AND ADMISSIONS
- UNIVERSITY BODIES
- SCHOOLS AND CENTRES
  - School of Arts and Aesthetics (SA&A)
  - School of Biotechnology (SBT)
  - School of Computational and Integrative Sciences (SCIS)
  - School of Computer & Systems Sciences (SC&SS)
  - School of Environmental Sciences (SES)
  - School of International Studies (SIS)
  - School of Language, Literature & Culture Studies (SLL&CS)
  - School of Life Sciences (SLS)
  - School of Physical Sciences (SPS)
  - School of Social Sciences (SSS)
  - Centre for the Study of Law & Governance (CSLG)
  - Special Centre for Molecular Medicine (SCMM)
  - Special Centre for Sanskrit Studies (SCSS)
- ACADEMIC STAFF COLLEGE
- STUDENT’S ACTIVITIES
- ENSURING EQUALITY
- LINGUISTIC EMPOWERMENT CELL
- UNIVERSITY ADMINISTRATION
- CAMPUS DEVELOPMENT
- UNIVERSITY FINANCE
- OTHER ACTIVITIES
  - Gender Sensitisation Committee Against Sexual Harassment
  - Alumni Affairs
  - Jawaharlal Nehru Institute of Advanced Studies
  - International Collaborations
  - Institutional Ethics Review Board Research on Human Subjects
- CENTRAL FACILITIES
  - University Library
  - University Science Instrumentation Centre

INDIAN NATIONAL CONGRESS 1885-1947 Year Place President

INDIAN NATIONAL CONGRESS 1885-1947

| Year | Place | President |
|---|---|---|
| 1885 | Bombay | W.C. Bannerji |
| 1886 | Calcutta | Dadabhai Naoroji |
| 1887 | Madras | Syed Badruddin Tyabji |
| 1888 | Allahabad | George Yule (first English president) |
| 1889 | Bombay | Sir William |
| 1890 | Calcutta | Sir Pherozeshah Mehta |
| 1891 | Nagpur | P. Anandacharlu |
| 1892 | Allahabad | W C Bannerji |
| 1893 | Lahore | Dadabhai Naoroji |
| 1894 | Madras | Alfred Webb |
| 1895 | Poona | Surendranath Banerji |
| 1896 | Calcutta | M Rahimtullah Sayani |
| 1897 | Amraoti | C Sankaran Nair |
| 1898 | Madras | Anandamohan Bose |
| 1899 | Lucknow | Romesh Chandra Dutt |
| 1900 | Lahore | N G Chandravarkar |
| 1901 | Calcutta | E Dinsha Wacha |
| 1902 | Ahmedabad | Surendranath Banerji |
| 1903 | Madras | Lalmohan Ghosh |
| 1904 | Bombay | Sir Henry Cotton |
| 1905 | Banaras | G K Gokhale |
| 1906 | Calcutta | Dadabhai Naoroji |
| 1907 | Surat | Rashbehari Ghosh |
| 1908 | Madras | Rashbehari Ghosh |
| 1909 | Lahore | Madanmohan Malaviya |
| 1910 | Allahabad | Sir William Wedderburn |
| 1911 | Calcutta | Bishan Narayan Dhar |
| 1912 | Patna | R N Mudhalkar |
| 1913 | Karachi | Syed Mahomed Bahadur |
| 1914 | Madras | Bhupendranath Bose |
| 1915 | Bombay | Sir S P Sinha |
| 1916 | Lucknow | A C Majumdar |
| 1917 | Calcutta | Mrs. Annie Besant |
| 1918 | Bombay | Syed Hassan Imam |
| 1918 | Delhi | Madanmohan Malaviya |
| 1919 | Amritsar | Motilal Nehru |
| 1920 | Calcutta | Lala Lajpat Rai |
| 1920 | Nagpur | C Vijaya Raghavachariyar |
| 1921 | Ahmedabad | Hakim Ajmal Khan |
| 1922 | Gaya | C R Das |
| 1923 | Delhi | Abul Kalam Azad |
| 1923 | Coconada | Maulana Muhammad Ali |
| 1924 | Belgaon | Mahatma Gandhi |
| 1925 | Cawnpore | Mrs. Sarojini Naidu |
| 1926 | Guwahati | Srinivas Ayanagar |
| 1927 | Madras | M A Ansari |
| 1928 | Calcutta | Motilal Nehru |
| 1929 | Lahore | Jawaharlal Nehru |
| 1930 | No session | J L Nehru continued |
| 1931 | Karachi | Vallabhbhai Patel |
| 1932 | Delhi | R D Amritlal |
| 1933 | Calcutta | Mrs. |

Domestic Colonies and Colonialism Vs Imperialism in Western Political Thought and Practise

Over the last thirty years, there has been a rapidly expanding literature on colonialism and imperialism in the canon of modern western political thought including research done by James Tully, Glen Coulthard, David Armitage, Karuna Mantena, Duncan Bell, Jennifer Pitts, Inder Marwah, Uday Mehta and myself. In various ways, it has been argued the defense of colonization and/or imperialism is embedded within and instrumental to key modern western, particularly liberal, political theories. In this paper, I provide a new way to approach this question based on a largely overlooked historical reality: ‘domestic’ colonies. Using a domestic lens to re-examine colonialism, I demonstrate it is not only possible but necessary to distinguish colonialism from imperialism. While it is popular to see them as indistinguishable in most post-colonial scholarship, I will argue the existence of domestic colonies and their justifications require us to rethink not only the scope and meaning of colonies and colonialism but how they differ from empires and imperialism.

Domestic colonies (proposed and/or created from the middle of the 19th century to the start of the 20th century) were rural entities inside the borders of one’s own state (as opposed to overseas) within which certain kinds of fellow citizens (as opposed to foreigners) were segregated and engaged in agrarian labour to ‘improve’ them and the ‘uncultivated’ land upon which they laboured. Three kinds of domestic colonies were proposed based upon the population within them: labour or home colonies for the ‘idle poor’ (vagrants, unemployed, beggars), farm colonies for the ‘irrational’ (mentally ill, disabled, epileptic) and utopian colonies for political, religious and/or racial minorities.

Dadabhai Naoroji: a Reformer of the British Empire Between Bombay and Westminster

Imperial Portraits

Teresa SEGURA-GARCIA

ABSTRACT

From the closing decades of the nineteenth century to the early twentieth century, Dadabhai Naoroji (1825‒1917) was India’s foremost intellectual celebrity; by the end of his career, he was known as ‘the Grand Old Man of India’. He spent most of his life moving between western India and England in various capacities: as social reformer, academic, businessman, and politician. His life’s work was the redress of the inequalities of imperialism through political activism in London, at the heart of the British empire, where colonial policy was formulated. This project brought him to Westminster, where he became Britain’s first Indian member of parliament (MP). As a Liberal Party MP, he represented the constituency of Central Finsbury, London, from 1892 to 1895. Above all, he used the political structures, concepts, and language of the British empire to reshape it from within and, in this way, improve India’s political destiny.

Dadabhai Naoroji, 1889. Naoroji wears a phenta, the distinctive conical hat of the Parsis. While he initially wore it in London, he largely abandoned it after 1886. This sartorial change was linked to his first attempt to reach Westminster, as one of his British collaborators advised him to switch to European hats. He ignored this advice: he mostly opted for an uncovered head, thus rejecting the colonial binary of the rulers versus the ruled. Source: National Portrait Gallery.

Debate on the Indian Council Cotton Duties, by Sydney Prior Hall, published in The Graphic, 2 March 1895.

Indian National Congress Sessions

INC sessions led the course of many national movements as well as reforms in India. Consequently, the resolutions passed in the INC sessions were reflected in the political reforms brought about by the British government in India. Although the INC went through a major split in 1907, its leaders reconciled their differences soon after to give shape to the emerging face of independent India. Here is a list of all the Indian National Congress sessions along with important facts about them. This list will help you prepare better for SBI PO, SBI Clerk, IBPS Clerk, IBPS PO, etc.

During the British rule in India, the Indian National Congress (INC) became a shining ray of hope for Indians. With its very first meeting, it overshadowed all the political associations established before it. Gradually, Indians from all walks of life joined the INC, making it the biggest political organization of its time. Most exam boards consider the Indian National Congress sessions extremely noteworthy, mainly because these sessions played a great role in laying the foundation stone of Indian polity. Given below is the list of Indian National Congress sessions in chronological order. Apart from the locations of the various sessions, make sure you also note the important facts pertaining to them.

Indian National Congress Sessions Post Liberalization Era (1990-2018)

| Session | Place | Date | President |
|---|---|---|---|
| 84th AICC Plenary Session | New Delhi | Mar. 18-18, 2018 | Shri Rahul Gandhi |
| Chintan Shivir | Jaipur | Jan. 18-19, | Smt. |

The Dynamics of Inclusion and Exclusion of Settler Colonialism: the Case of Palestine

NEAR EAST UNIVERSITY GRADUATE SCHOOL OF SOCIAL SCIENCES, INTERNATIONAL RELATIONS PROGRAM

WHO IS ELIGIBLE TO EXIST? THE DYNAMICS OF INCLUSION AND EXCLUSION OF SETTLER COLONIALISM: THE CASE OF PALESTINE

WALEED SALEM

PhD THESIS

THESIS SUPERVISOR: ASSOC. PROF. DR. UMUT KOLDAŞ

NICOSIA 2019

ACCEPTANCE/APPROVAL

We as the jury members certify that the PhD Thesis ‘Who is Eligible to Exist? The Dynamics of Inclusion and Exclusion of Settler Colonialism: The Case of Palestine’ prepared by PhD student Waleed Hasan Salem, defended on 26/4/2019, has been found satisfactory for the award of the degree of PhD.

JURY MEMBERS

Assoc. Prof. Dr. Umut Koldaş (Supervisor), Near East University, Faculty of Economics and Administrative Sciences, Department of International Relations

Assoc. Prof. Dr. Sait Akşit (Head of Jury), Near East University, Faculty of Economics and Administrative Sciences, Department of International Relations

Assoc. Prof. Dr. Nur Köprülü, Near East University, Faculty of Economics and Administrative Sciences, Department of Political Science

Assoc. Prof. Dr. Ali Dayıoğlu, European University of Lefke

Issue 3, September 2015

Econ Journal Watch: Scholarly Comments on Academic Economics

Volume 12, Issue 3, September 2015

COMMENTS

- Education Premiums in Cambodia: Dummy Variables Revisited and Recent Data. John Humphreys. 339–345

CHARACTER ISSUES

- Why Weren’t Left Economists More Opposed and More Vocal on the Export-Import Bank? Veronique de Rugy, Ryan Daza, and Daniel B. Klein. 346–359
- Ideology Über Alles? Economics Bloggers on Uber, Lyft, and Other Transportation Network Companies. Jeremy Horpedahl. 360–374

SYMPOSIUM: CLASSICAL LIBERALISM IN ECON, BY COUNTRY (PART II)

- Venezuela: Without Liberals, There Is No Liberalism. Hugo J. Faria and Leonor Filardo. 375–399
- Classical Liberalism and Modern Political Economy in Denmark. Peter Kurrild-Klitgaard. 400–431
- Liberalism in India. G. P. Manish, Shruti Rajagopalan, Daniel Sutter, and Lawrence H. White. 432–459
- Classical Liberalism in Guatemala. Andrés Marroquín and Fritz Thomas. 460–478

WATCHPAD

- Of Its Own Accord: Adam Smith on the Export-Import Bank. Daniel B. Klein. 479–487

Discuss this article at Journaltalk: http://journaltalk.net/articles/5891

ECON JOURNAL WATCH 12(3) September 2015: 339–345

Education Premiums in Cambodia: Dummy Variables Revisited and Recent Data

John Humphreys

In their 2010 Asian Economic Journal paper, Ashish Lall and Chris Sakellariou made a valuable contribution to the understanding of education in Cambodia. Their paper represents the most robust analysis of the Cambodian education premium yet published, reporting premiums for men and women from three different time periods (1997, 2004, 2007), including a series of control variables in their regressions, and using both OLS and IV methodology. Following a convention of education economics, Lall and Sakellariou (2010) use a variation of the standard Mincer model (see Heckman et al.

Important Indian National Congress Sessions

Introduction

The Indian National Congress was founded at Bombay in December 1885. The early leadership (Dadabhai Naoroji, Pherozeshah Mehta, Badruddin Tyabji, W.C. Bonnerji, Surendranath Banerji, Romesh Chandra Dutt, S. Subramania Iyer, among others) was largely from Bombay and Calcutta. A retired British official, A.O. Hume, also played a part in bringing Indians from the various regions together. The formation of the Indian National Congress was an effort to promote the process of nation building. To reach all regions, it was decided to rotate the Congress session among different parts of the country. The President belonged to a region other than the one where the Congress session was being held.

Sessions

- First Session: held at Bombay in 1885. President: W.C. Bannerjee. Formation of the Indian National Congress.
- Second Session: held at Calcutta in 1886. President: Dadabhai Naoroji.
- Third Session: held at Madras in 1887. President: Syed Badruddin Tyabji, first Muslim President.
- Fourth Session: held at Allahabad in 1888. President: George Yule, first English President.
- 1896: Calcutta. President: Rahimtullah Sayani. National Song ‘Vande Mataram’ sung for the first time by Rabindranath Tagore.
- 1899: Lucknow. President: Romesh Chandra Dutt. Demand for permanent fixation of land revenue.
- 1901: Calcutta. President: Dinshaw E. Wacha. First time Gandhiji appeared on the Congress platform.
- 1905: Benaras. President: Gopal Krishna Gokhale. Formal proclamation of the Swadeshi movement against the government.
- 1906: Calcutta. President: Dadabhai Naoroji. Adopted four resolutions on: Swaraj (Self Government), Boycott Movement, Swadeshi and National Education.
- 1907: Surat. President: Rash Bihari Ghosh. Split in Congress (Moderates and Extremists). Adjournment of session.
- 1910: Allahabad.

Fiction | 'The Crow-Flight of the New York Times' by Prabhakar Singh

Ruchir often spent his quiet summer vacations in Darbhanga, the place of his maternal grandparents. It was on a hot and dusty midsummer day of 2020 that he laid his hands on the decomposing paperback, Maila Anchal, tucked in a forgotten corner of his uncle’s bookshelf dug into the thick walls of the first floor. Ruchir turned the pages holding the book in his left hand. Broken into two halves at its spine, the novel had soiled pages with occasional holes and dog-ears. He looked at the book for a whole minute and thought of stitching and pasting the spine back into the book. A gust of wind laden with a green scent of the leaves washed in last night’s rain came through the grove of mango trees from his grandparents’ garden. A few of the pages from the middle scattered around the low-floor bed.

“Was Phanishwar Nath Renu born in Darbhanga, Maa?”

“I don’t know. Come eat. Keep questions for your Grandpa.”

Toshi, their meek dog, ran about the garden after a squirrel. Toshi was lazy, and liked to keep a low profile. Tired by his small pursuits, Toshi lay stretched on the overgrown lawn grass. It looked up expectantly when Ruchir called for Grandpa, “Babujee…”

Grandpa’s villa and the garden had kept Ruchir busy for half the summer. Five mango trees, a tall neem tree, one guava tree, three shrubs of lemon, a bed of twenty rose bushes and two patches of summer vegetables made up the house campus.

Emergent Sexual Formations in Contemporary India

Neo-Liberalism, Post-Colonialism and Hetero-Sovereignties: Emergent Sexual Formations in Contemporary India

Stephen Legg, School of Geography, University of Nottingham, Nottingham NG7 2RD, United Kingdom

Srila Roy, School of Sociology and Social Policy, University of Nottingham, Nottingham NG7 2RD, United Kingdom

Keywords: neo-liberalism, post-colonialism, India, sexuality, sovereignty, feminism

Forthcoming in Interventions: International Journal of Postcolonial Studies (ISSN: 1369-801X, ESSN: 1469-929X) as the introduction to a special edition entitled “Emergent Sexual Formations in Contemporary India”.

India’s post-colonial present pushes, prises and protests the conceptual limits of postcolonialism. It has long since ceased to be living in the shadow only of the moment of independence (August 1947). What of the (after)lives of processes that do not neatly confine themselves to dates or moments? Of communal movements; the decline of communism; the rise of an aggressively neo-liberal capitalism; or of campaigns for the rights of women and sexual minorities? The latter two have gained increasing attention for the way in which they attend to the complex intersections of religious and cultural traditions, criminal and religious law, personal and private realms, local and global processes, rights and norms, and the consumption and production of gendered and sexed identities.
Given the richness of this field of study, we decided to host two symposia at the University of Nottingham examining gender and sexuality in contemporary India from historical perspectives. The discussions exposed many of the tensions inherent in such diverse fields and over such an expansive geographical region, namely between unstable binaries such as: South Asia-India; gay-straight; masculine-feminine; historical-contemporary; regulation-desire; reality-fantasy; virtual-actual; and representational-material.