Linear Algebra Notes

Recommended publications

-

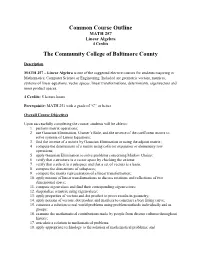

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description
MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours
Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives
Upon successfully completing the course, students will be able to:
1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. …

Introduction to Linear Bialgebra

INTRODUCTION TO LINEAR BIALGEBRA

W. B. Vasantha Kandasamy, Department of Mathematics, Indian Institute of Technology, Madras, Chennai – 600036, India (web: http://mat.iitm.ac.in/~wbv)
Florentin Smarandache, Department of Mathematics, University of New Mexico, Gallup, NM 87301, USA
K. Ilanthenral, Editor, Maths Tiger, Quarterly Journal, Flat No.11, Mayura Park, 16, Kazhikundram Main Road, Tharamani, Chennai – 600 113, India

HEXIS, Phoenix, Arizona, 2005

Recommended Citation: Smarandache, Florentin; W.B. Vasantha Kandasamy; and K. Ilanthenral. "INTRODUCTION TO LINEAR BIALGEBRA." (2005). https://digitalrepository.unm.edu/math_fsp/232 (UNM Digital Repository, Mathematics and Statistics Faculty and Staff Publications, University of New Mexico.)

This book can be ordered in a paper bound reprint from: Books on Demand, ProQuest Information & Learning (University of Microfilm International), 300 N. …

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.
• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.
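The properties of the standard basis described here can be checked numerically. The following is a minimal sketch in plain Python for n = 3; the helper names (`standard_basis`, `dot`, `outer`) are illustrative choices and do not come from the notes.

```python
def standard_basis(n):
    """Return the standard basis vectors e_1, ..., e_n as lists."""
    return [[1 if row == i else 0 for row in range(n)] for i in range(n)]

def dot(v, w):
    """Inner product v^T w."""
    return sum(a * b for a, b in zip(v, w))

def outer(v, w):
    """Outer product v w^T, returned as a list of rows."""
    return [[a * b for b in w] for a in v]

n = 3
basis = standard_basis(n)

# e_i^T e_j is the Kronecker delta, i.e. the entries of the identity matrix.
gram = [[dot(ei, ej) for ej in basis] for ei in basis]

# Pi_i = e_i e_i^T has a single 1 in the i-th diagonal position.
projectors = [outer(e, e) for e in basis]

# Adding all Pi_i entry by entry gives back the identity matrix.
total = [[sum(p[r][c] for p in projectors) for c in range(n)] for r in range(n)]

print(gram)
print(projectors[0])
print(total)
```

The last check, that the matrices Π_i add up to the identity, is a standard fact added here for illustration; it is not stated in the excerpt itself.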

What's in a Name? The Matrix as an Introduction to Mathematics

Kris H. Green, St. John Fisher College
Fisher Digital Publications, Mathematical and Computing Sciences Faculty/Staff Publications, September 2008

Publication Information: Green, Kris H. (2008). "What's in a Name? The Matrix as an Introduction to Mathematics." Math Horizons 16.1, 18-21. This document is posted at https://fisherpub.sjfc.edu/math_facpub/12.

Abstract
In lieu of an abstract, here is the article's first paragraph: In my classes on the nature of scientific thought, I have often used the movie The Matrix to illustrate the nature of evidence and how it shapes the reality we perceive (or think we perceive). As a mathematician, I usually field questions related to the movie whenever the subject of linear algebra arises, since this field is the study of matrices and their properties. So it is natural to ask, why does the movie title reference a mathematical object?

Disciplines: Mathematics
Comments: Article copyright 2008 by Math Horizons.

Multivector Differentiation and Linear Algebra (17th Santaló Summer School)

17th Santaló Summer School 2016, Santander
Joan Lasenby, Signal Processing Group, Engineering Department, Cambridge, UK and Trinity College Cambridge
www-sigproc.eng.cam.ac.uk/~jl
23 August 2016

Overview
• The Multivector Derivative.
• Examples of differentiation wrt multivectors.
• Linear Algebra: matrices and tensors as linear functions mapping between elements of the algebra.
• Functional Differentiation: very briefly...
• Summary

The Multivector Derivative
Recall our definition of the directional derivative in the $a$ direction:

$$a \cdot \nabla F(x) = \lim_{\epsilon \to 0} \frac{F(x + \epsilon a) - F(x)}{\epsilon}$$

We now want to generalise this idea to enable us to find the derivative of $F(X)$ in the $A$ ‘direction’, where $X$ is a general mixed grade multivector (so $F(X)$ is a general multivector valued function of $X$). Let us use $*$ to denote taking the scalar part, i.e. $P * Q \equiv \langle PQ \rangle$. Then, provided $A$ has the same grades as $X$, it makes sense to define:

$$A * \partial_X F(X) = \lim_{t \to 0} \frac{F(X + tA) - F(X)}{t}$$
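The limit defining the directional derivative can be approximated by evaluating the difference quotient at a small step. The sketch below does this for an ordinary vector argument only (it does not implement multivectors); the test function `squared_norm` and the step size `eps` are assumptions chosen for illustration.

```python
def directional_derivative(F, x, a, eps=1e-6):
    """Approximate a . grad F(x) by the quotient (F(x + eps*a) - F(x)) / eps."""
    shifted = [xi + eps * ai for xi, ai in zip(x, a)]
    return (F(shifted) - F(x)) / eps

def squared_norm(x):
    """Example scalar function F(x) = x . x, whose derivative along a is 2 a . x."""
    return sum(xi * xi for xi in x)

x = [1.0, 2.0, 3.0]
a = [0.0, 1.0, 0.0]

approx = directional_derivative(squared_norm, x, a)
exact = 2 * sum(ai * xi for ai, xi in zip(a, x))  # analytic value 2 a . x

print(approx, exact)
```

The approximation differs from the analytic value by a term of order `eps`, which is why the definition takes the limit as the step goes to zero.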

Linear Algebra for Dummies

Jorge A. Menendez
October 6, 2017

Contents
1 Matrices and Vectors
2 Matrix Multiplication
3 Matrix Inverse, Pseudo-inverse
4 Outer products
5 Inner Products
6 Example: Linear Regression
7 Eigenstuff
8 Example: Covariance Matrices
9 Example: PCA
10 Useful resources

1 Matrices and Vectors
An $m \times n$ matrix is simply an array of numbers:

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & & & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

where we define the indexing $A_{ij} = a_{ij}$ to designate the component in the $i$th row and $j$th column of $A$. The transpose of a matrix is obtained by flipping the rows with the columns:

$$A^T = \begin{bmatrix} a_{11} & a_{21} & \cdots & a_{m1} \\ a_{12} & a_{22} & \cdots & a_{m2} \\ \vdots & & & \vdots \\ a_{1n} & a_{2n} & \cdots & a_{mn} \end{bmatrix}$$

which evidently is now an $n \times m$ matrix, with components $A^T_{ij} = A_{ji} = a_{ji}$. In other words, the transpose is obtained by simply flipping the row and column indices.

One particularly important matrix is called the identity matrix, which is composed of 1’s on the diagonal and 0’s everywhere else:

$$\begin{bmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \end{bmatrix}$$

It is called the identity matrix because the product of any matrix with the identity matrix is identical to itself:

$$AI = A$$

In other words, $I$ is the equivalent of the number 1 for matrices. For our purposes, a vector can simply be thought of as a matrix with one column:

$$a = \begin{bmatrix} a_1 \\ a_2 \\ \vdots \end{bmatrix}$$ …
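As a small illustration of the transpose and the identity matrix, here is a sketch using nested Python lists as matrices. The function names and the example matrix are hypothetical, chosen only to mirror the definitions above.

```python
def transpose(A):
    """Swap rows and columns, so that the (i, j) entry of the result is A[j][i]."""
    return [list(col) for col in zip(*A)]

def identity(n):
    """n x n matrix with 1's on the diagonal and 0's everywhere else."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    """Matrix product of A (m x k) and B (k x n)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[1, 2, 3],
     [4, 5, 6]]            # a 2 x 3 matrix

At = transpose(A)          # a 3 x 2 matrix
right = matmul(A, identity(3))
left = matmul(identity(2), A)

print(At)
print(right == A, left == A)
```

Because `A` is not square, the identity on the right must be 3 x 3 and the one on the left 2 x 2 for the products to be defined.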

Appendix A: Spinors in Four Dimensions

In this appendix we collect the conventions used for spinors in both Minkowski and Euclidean spaces. In Minkowski space the flat metric has the form $\eta_{\mu\nu} = \mathrm{diag}(-1, 1, 1, 1)$, and the coordinates are labelled $(x^0, x^1, x^2, x^3)$. The analytic continuation into Euclidean space is made through the replacement $x^0 = ix^4$ (and in momentum space, p0 = −ip4), the coordinates in this case being labelled $(x^1, x^2, x^3, x^4)$.

The Lorentz group in four dimensions, $SO(3, 1)$, is not simply connected and therefore, strictly speaking, has no spinorial representations. To deal with these types of representations one must consider its double covering, the spin group $\mathrm{Spin}(3, 1)$, which is isomorphic to $SL(2, \mathbb{C})$. The group $SL(2, \mathbb{C})$ possesses a natural complex two-dimensional representation. Let us denote this representation by $S$ and let us consider an element $\psi \in S$ with components $\psi_\alpha = (\psi_1, \psi_2)$ relative to some basis. The action of an element $M \in SL(2, \mathbb{C})$ is

$$(M\psi)_\alpha = M_\alpha{}^\beta \psi_\beta. \tag{A.1}$$

This is not the only action of $SL(2, \mathbb{C})$ which one could choose. Instead of $M$ we could have used its complex conjugate $\bar{M}$, its inverse transpose $(M^T)^{-1}$, or its inverse adjoint $(M^\dagger)^{-1}$. All of them satisfy the same group multiplication law. These choices would correspond to the complex conjugate representation $\bar{S}$, the dual representation $\tilde{S}$, and the dual complex conjugate representation $\tilde{\bar{S}}$. We will use the following conventions for elements of these representations: $\psi_\alpha \in S$, $\psi_{\dot\alpha} \in \bar{S}$, $\psi^\alpha \in \tilde{S}$, $\psi^{\dot\alpha} \in \tilde{\bar{S}}$.
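The claim that the complex conjugate, the inverse transpose and the inverse adjoint all satisfy the same group multiplication law can be spot-checked on concrete matrices. The sketch below uses two hypothetical determinant-one matrices `M1` and `M2` with small integer entries, so the complex arithmetic is exact; it checks one pair of matrices and is not a proof.

```python
def matmul(A, B):
    """Product of two 2 x 2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def conj(M):
    """Entrywise complex conjugate."""
    return [[z.conjugate() for z in row] for row in M]

def transpose(M):
    return [[M[j][i] for j in range(2)] for i in range(2)]

def inverse(M):
    """Inverse of a 2 x 2 matrix, valid only when det M = 1."""
    (a, b), (c, d) = M
    return [[d, -b], [-c, a]]

def inverse_transpose(M):
    return inverse(transpose(M))

def inverse_adjoint(M):
    return inverse(conj(transpose(M)))

# Two example elements of SL(2, C): both have determinant 1.
M1 = [[1 + 0j, 1j], [0j, 1 + 0j]]
M2 = [[1 + 0j, 0j], [2 - 1j, 1 + 0j]]

# Each map rho should satisfy rho(M1 M2) = rho(M1) rho(M2).
checks = {
    rho.__name__: rho(matmul(M1, M2)) == matmul(rho(M1), rho(M2))
    for rho in (conj, inverse_transpose, inverse_adjoint)
}
print(checks)
```

Note that the plain transpose or the plain inverse on its own would reverse the order of the factors, which is why they only appear in the combinations listed in the text.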

Things You Need to Know About Linear Algebra Math 131 Multivariate

Things you need to know about linear algebra
Math 131 Multivariate Calculus
D Joyce, Spring 2014

The relation between linear algebra and multivariate calculus. We'll spend the first few meetings reviewing linear algebra. We'll look at just about everything in chapter 1.

Linear algebra is the study of linear transformations (also called linear functions) from n-dimensional space to m-dimensional space, where m is usually equal to n, and that's often 2 or 3. A linear transformation $f : \mathbb{R}^n \to \mathbb{R}^m$ is one that preserves addition and scalar multiplication, that is, $f(\mathbf{a} + \mathbf{b}) = f(\mathbf{a}) + f(\mathbf{b})$, and $f(c\mathbf{a}) = cf(\mathbf{a})$. We'll generally use bold face for vectors and for vector- …

… the standard $(x, y, z)$ coordinate three-space. Notations for vectors. Geometric interpretation as vectors as displacements as well as vectors as points. Vector addition, the zero vector $\mathbf{0}$, vector subtraction, scalar multiplication, their properties, and their geometric interpretation.

We start using these concepts right away. In chapter 2, we'll begin our study of vector-valued functions, and we'll use the coordinates, vector notation, and the rest of the topics reviewed in this section.

Section 1.2. Vectors and equations in $\mathbb{R}^3$. Standard basis vectors $\mathbf{i}, \mathbf{j}, \mathbf{k}$ in $\mathbb{R}^3$. Parametric equations for lines. Symmetric form equations for a line in $\mathbb{R}^3$. Parametric equations for curves $\mathbf{x} : \mathbb{R} \to \mathbb{R}^2$ in the plane, where $\mathbf{x}(t) = (x(t), y(t))$.

We'll use standard basis vectors throughout the course, beginning with chapter 2. We'll study tangent lines of curves and tangent planes of surfaces in that chapter and throughout the course.
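The two defining properties of a linear transformation, f(a + b) = f(a) + f(b) and f(ca) = cf(a), can be checked on a concrete map given by a matrix. The matrix `M`, the vectors and the scalar below are hypothetical values picked for illustration; integer entries keep the comparison exact.

```python
def apply(M, v):
    """Apply the linear transformation with matrix M to the vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def add(v, w):
    return [x + y for x, y in zip(v, w)]

def scale(c, v):
    return [c * x for x in v]

# Matrix of an example map f : R^3 -> R^2.
M = [[1, 2, 0],
     [0, -1, 3]]

a = [1, 0, 2]
b = [3, -1, 1]
c = 5

additive = apply(M, add(a, b)) == add(apply(M, a), apply(M, b))
homogeneous = apply(M, scale(c, a)) == scale(c, apply(M, a))

print(apply(M, a), additive, homogeneous)
```

A map such as v ↦ Mv + (1, 1), with a constant shift added, would fail both checks, which is one way to see that translations are not linear transformations.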

MA 242 LINEAR ALGEBRA C1, Solutions to Second Midterm Exam

Prof. Nikola Popovic, November 9, 2006, 09:30am - 10:50am

Problem 1 (15 points). Let the matrix $A$ be given by

$$A = \begin{bmatrix} 1 & -2 & -1 \\ -1 & 5 & 6 \\ 5 & -4 & 5 \end{bmatrix}.$$

(a) Find the inverse $A^{-1}$ of $A$, if it exists.
(b) Based on your answer in (a), determine whether the columns of $A$ span $\mathbb{R}^3$. (Justify your answer!)

Solution. (a) To check whether $A$ is invertible, we row reduce the augmented matrix $[A \; I_3]$:

$$\begin{bmatrix} 1 & -2 & -1 & 1 & 0 & 0 \\ -1 & 5 & 6 & 0 & 1 & 0 \\ 5 & -4 & 5 & 0 & 0 & 1 \end{bmatrix} \sim \cdots \sim \begin{bmatrix} 1 & -2 & -1 & 1 & 0 & 0 \\ 0 & 3 & 5 & 1 & 1 & 0 \\ 0 & 0 & 0 & -7 & -2 & 1 \end{bmatrix}.$$

Since the last row in the echelon form of $A$ contains only zeros, $A$ is not row equivalent to $I_3$. Hence, $A$ is not invertible, and $A^{-1}$ does not exist.

(b) Since $A$ is not invertible by (a), the Invertible Matrix Theorem says that the columns of $A$ cannot span $\mathbb{R}^3$.

Problem 2 (15 points). Let the vectors $b_1, \ldots, b_4$ be defined by

$$b_1 = \begin{pmatrix} 3 \\ 5 \\ -2 \\ 4 \end{pmatrix}, \quad b_2 = \begin{pmatrix} 2 \\ -1 \\ -5 \\ 7 \end{pmatrix}, \quad b_3 = \begin{pmatrix} -1 \\ 1 \\ 3 \\ 0 \end{pmatrix}, \quad \text{and} \quad b_4 = \begin{pmatrix} 0 \\ 0 \\ 0 \\ -3 \end{pmatrix}.$$

(a) Determine if the set $B = \{b_1, b_2, b_3, b_4\}$ is linearly independent by computing the determinant of the matrix $B = [b_1 \; b_2 \; b_3 \; b_4]$. …
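Both problems can be cross-checked with a determinant computation. The sketch below uses cofactor expansion along the first row with integer arithmetic; the entries of `A` and `B` are read off the extracted problem statement and should be treated as an assumption, since the original layout was damaged.

```python
def det(M):
    """Determinant by cofactor expansion along the first row (exact for integers)."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j, entry in enumerate(M[0]):
        # Minor: delete the first row and column j.
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

# Problem 1: the coefficient matrix A.
A = [[1, -2, -1],
     [-1, 5, 6],
     [5, -4, 5]]

# Problem 2: the matrix with columns b1, b2, b3, b4.
B = [[3, 2, -1, 0],
     [5, -1, 1, 0],
     [-2, -5, 3, 0],
     [4, 7, 0, -3]]

det_A = det(A)
det_B = det(B)
print(det_A, det_B)
```

With these entries the determinant of `A` vanishes, in agreement with the row reduction in the solution, while the determinant of `B` is nonzero, which would make the four vectors linearly independent.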

Handout 9 More Matrix Properties; the Transpose

Square matrix properties
These properties only apply to a square matrix, i.e. $n \times n$.
• The leading diagonal is the diagonal line consisting of the entries $a_{11}, a_{22}, a_{33}, \ldots, a_{nn}$.
• A diagonal matrix has zeros everywhere except the leading diagonal.
• The identity matrix $I$ has zeros off the leading diagonal, and 1 for each entry on the diagonal. It is a special case of a diagonal matrix, and $AI = IA = A$ for any $n \times n$ matrix $A$.
• An upper triangular matrix has all its non-zero entries on or above the leading diagonal.
• A lower triangular matrix has all its non-zero entries on or below the leading diagonal.
• A symmetric matrix has the same entries below and above the diagonal: $a_{ij} = a_{ji}$ for any values of $i$ and $j$ between 1 and $n$.
• An antisymmetric or skew-symmetric matrix has the opposite entries below and above the diagonal: $a_{ij} = -a_{ji}$ for any values of $i$ and $j$ between 1 and $n$. This automatically means the diagonal entries must all be zero.

Transpose
To transpose a matrix, we reflect it across the line given by the leading diagonal $a_{11}$, $a_{22}$ etc. In general the result is a different shape to the original matrix:

$$A = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{pmatrix}, \qquad A^\top = \begin{pmatrix} a_{11} & a_{21} \\ a_{12} & a_{22} \\ a_{13} & a_{23} \end{pmatrix}, \qquad [A^\top]_{ij} = A_{ji}.$$

• If $A$ is $m \times n$ then $A^\top$ is $n \times m$.
• The transpose of a symmetric matrix is itself: $A^\top = A$ (recalling that only square matrices can be symmetric).
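The definitions in this handout translate directly into small predicate functions. The following sketch represents matrices as nested Python lists; the function names and the example matrices `S`, `K`, `U` and `R` are hypothetical and serve only as illustration.

```python
def is_square(A):
    return all(len(row) == len(A) for row in A)

def transpose(A):
    """Reflect across the leading diagonal: entry (i, j) becomes entry (j, i)."""
    return [list(col) for col in zip(*A)]

def is_symmetric(A):
    return is_square(A) and A == transpose(A)

def is_skew_symmetric(A):
    n = len(A)
    return is_square(A) and all(A[i][j] == -A[j][i]
                                for i in range(n) for j in range(n))

def is_upper_triangular(A):
    # Every entry strictly below the leading diagonal must be zero.
    return is_square(A) and all(A[i][j] == 0
                                for i in range(len(A)) for j in range(i))

def is_lower_triangular(A):
    return is_upper_triangular(transpose(A))

S = [[1, 2], [2, 3]]          # symmetric
K = [[0, 5], [-5, 0]]         # skew-symmetric, so its diagonal is zero
U = [[1, 2], [0, 3]]          # upper triangular
R = [[1, 2, 3], [4, 5, 6]]    # 2 x 3, not square

results = {
    "S symmetric": is_symmetric(S),
    "K skew-symmetric": is_skew_symmetric(K),
    "U upper triangular": is_upper_triangular(U),
    "U^T lower triangular": is_lower_triangular(transpose(U)),
    "R symmetric": is_symmetric(R),
}
print(results)
```

The skew-symmetric test includes the case i = j, where it requires each diagonal entry to equal its own negative, which is the reason those entries must be zero.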

Schaum's Outline of Linear Algebra (4th Edition)

SCHAUM’S outlines
Linear Algebra, Fourth Edition
Seymour Lipschutz, Ph.D., Temple University
Marc Lars Lipson, Ph.D., University of Virginia
Schaum’s Outline Series
New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto

Copyright © 2009, 2001, 1991, 1968 by The McGraw-Hill Companies, Inc. All rights reserved. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher.

ISBN: 978-0-07-154353-8
MHID: 0-07-154353-8
The material in this eBook also appears in the print version of this title: ISBN: 978-0-07-154352-1, MHID: 0-07-154352-X.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps.

McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. To contact a representative please e-mail us at [email protected].

TERMS OF USE
This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. …

Bases for Infinite Dimensional Vector Spaces Math 513 Linear Algebra Supplement

MATH 513 LINEAR ALGEBRA SUPPLEMENT
Professor Karen E. Smith

We have proven that every finitely generated vector space has a basis. But what about vector spaces that are not finitely generated, such as the space of all continuous real valued functions on the interval $[0, 1]$? Does such a vector space have a basis?

By definition, a basis for a vector space $V$ is a linearly independent set which generates $V$. But we must be careful what we mean by linear combinations from an infinite set of vectors. The definition of a vector space gives us a rule for adding two vectors, but not for adding together infinitely many vectors. By successive additions, such as $(v_1 + v_2) + v_3$, it makes sense to add any finite set of vectors, but in general, there is no way to ascribe meaning to an infinite sum of vectors in a vector space.

Therefore, when we say that a vector space $V$ is generated by or spanned by an infinite set of vectors $\{v_1, v_2, \ldots\}$, we mean that each vector $v$ in $V$ is a finite linear combination $\lambda_{i_1} v_{i_1} + \cdots + \lambda_{i_n} v_{i_n}$ of the $v_i$'s. Likewise, an infinite set of vectors $\{v_1, v_2, \ldots\}$ is said to be linearly independent if the only finite linear combination of the $v_i$'s that is zero is the trivial linear combination. So a set $\{v_1, v_2, v_3, \ldots\}$ is a basis for $V$ if and only if every element of $V$ can be written in a unique way as a finite linear combination of elements from the set.
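One way to picture "finite linear combinations from an infinite set" is the space of real polynomials, which has the infinite basis {1, x, x², …}. This example space is swapped in for illustration and is not the one discussed in the supplement. In the sketch below a vector is a dictionary mapping an exponent to its nonzero coefficient, so every vector mentions only finitely many basis elements.

```python
def add(p, q):
    """Sum of two polynomials stored as {exponent: coefficient} dictionaries."""
    result = dict(p)
    for k, c in q.items():
        result[k] = result.get(k, 0) + c
    # Drop zero coefficients so each vector has exactly one representation.
    return {k: c for k, c in result.items() if c != 0}

def scale(c, p):
    """Scalar multiple c * p, again without zero coefficients."""
    return {k: c * a for k, a in p.items() if c * a != 0}

p = {0: 1, 2: 3}        # 1 + 3x^2
q = {2: -3, 5: 4}       # -3x^2 + 4x^5

s = add(p, scale(2, q)) # 1 - 3x^2 + 8x^5
t = add(p, {2: -3})     # the x^2 terms cancel, leaving 1

print(s, t)
```

Because zero coefficients are never stored, two dictionaries are equal exactly when they describe the same finite linear combination, which mirrors the uniqueness requirement in the definition of a basis.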