Autonomous Vehicles and AI-Chaperone Liability

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Post Pop Depression Late Show with Stephen Colbert

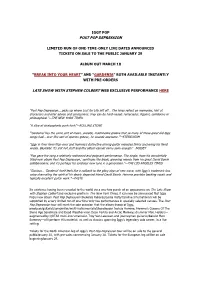

IGGY POP POST POP DEPRESSION LIMITED RUN OF ONE-TIME-ONLY LIVE DATES ANNOUNCED TICKETS ON SALE TO THE PUBLIC JANUARY 29 ALBUM OUT MARCH 18 “BREAK INTO YOUR HEART” AND “GARDENIA” BOTH AVAILABLE INSTANTLY WITH PRE-ORDERS LATE SHOW WITH STEPHEN COLBERT WEB EXCLUSIVE PERFORMANCE HERE “Post Pop Depression... picks up where Lust for Life left off… The lyrics reflect on memories, hint at characters and offer advice and confessions; they can be hard-nosed, remorseful, flippant, combative or philosophical.”—THE NEW YORK TIMES “A slice of stratospheric punk-funk”—ROLLING STONE “‘Gardenia’ has the same sort of mean, skeletal, machinelike groove that so many of those great old Iggy songs had… over this sort of spartan groove, he sounds awesome.”—STEREOGUM “Iggy in finer form than ever and Homme’s distinctive driving guitar melodies firmly anchoring his florid vocals. Basically: it’s shit hot stuff and this album cannot come soon enough”—NOISEY “Pop gave the song a relatively restrained and poignant performance. The single, from his wonderfully titled new album Post Pop Depression,’ continues the bleak, grooving moods from his great David Bowie collaborations, and it's perhaps his catchiest new tune in a generation.”—THE LOS ANGELES TIMES “Glorious… ’Gardenia’ itself feels like a callback to the glory days of new wave, with Iggy’s trademark low voice channeling the spirit of his dearly departed friend David Bowie. Homme provides backing vocals and typically excellent guitar work.”—PASTE Its existence having been revealed to the world via a one-two punch of an appearance on The Late Show with Stephen Colbert and exclusive profile in The New York Times, it can now be announced that Iggy Pop’s new album Post Pop Depression (Rekords Rekords/Loma Vista/Caroline International) will be supported by a very limited run of one-time-only live performances in specially selected venues. -

UK Films for Sale EFM 2017

UK FILMS FOR SALE EFM 2017 UK FILM CENTRE, STAND 39, EUROPEAN FILM MARKET, MARTIN-GROPIUS-BAU, NIEDERKIRCHNER STR 7, 10785 BERLIN weareukfilm.com @weareukfilm 10X10 TAltitude Film Sales Cast: Luke Evans, Kelly Reilly Charlie Kemball +49 30 206033 401 Genre: Thriller [email protected] Director: Suzi Ewing Market Office: MGB # 132 Status: Production Home Office tel: +44 20 7478 7612 Synopsis: After meticulous planning and preparation, Lewis snatches Cathy off the busy streets and locks her away in a soundproofed room measuring 10 feet by 10 feet. His motive - to have Cathy confess to a dark secret that she is determined to keep hidden. But, Cathy has no intention of giving up so easily. 47 Meters Down TAltitude Film Sales Cast: Claire Holt, Mandy Moore, Matthew Modine Charlie Kemball +49 30 206033 401 Genre: Thriller [email protected] Director: Johannes Roberts Market Office: MGB # 132 Status: Completed Home Office tel: +44 20 7478 7612 Synopsis: Twenty-something sisters Kate and Lisa are gorgeous, fun-loving and ready for the holiday of a lifetime in Mexico. When the opportunity to go cage diving to view Great White sharks presents itself, thrill-seeking Kate jumps at the chance while Lisa takes some convincing. After some not so gentle persuading, Lisa finally agrees and the girls soon find themselves two hours off the coast of Huatulco and about to come face to face with nature's fiercest predator. But what should have been the trip to end all trips soon becomes a living nightmare when the shark cage breaks free from their boat, sinks and they find themselves trapped at the bottom of the ocean. -

The 2x2 Matrix of Tort Reform's Distributions

Brooklyn Law School BrooklynWorks Faculty Scholarship Winter 2011 The 2x2 Matrix of Tort Reform's Distributions Anita Bernstein Brooklyn Law School, [email protected] Follow this and additional works at: https://brooklynworks.brooklaw.edu/faculty Part of the Other Law Commons Recommended Citation 60 DePaul L. Rev. 273 (2010-2011) This Article is brought to you for free and open access by BrooklynWorks. It has been accepted for inclusion in Faculty Scholarship by an authorized administrator of BrooklynWorks. THE 2x2 MATRIX OF TORT REFORM'S DISTRIBUTIONS Anita Bernstein* INTRODUCTION From having lived, all persons know that predictability and unpredictability each enhance our human existence and also oppress us. We depend on predictability. Individuals and entities made up of human beings (a wide category that includes institutions, businesses, and societies) cannot flourish without schedules, routines, itineraries, habits, ground rules, inferences derived from premises, and protocols of engineering and design. Predictability makes trust and hope possible: human life without it would be an abyss of terror, despair, and starvation. We would die if it disappeared. Unpredictability, for its part, complements predictability. It lifts our spirits, assuages our boredom, imparts wisdom, and (hand in hand with predictability) generates economic profit. It is present in every thrill we will ever live to feel. The dichotomous contrast of these two abstract nouns does some of the work of a related yet distinct juxtaposition: security -

The Impact of the Civil Jury on American Tort Law

Pepperdine Law Review Volume 38 Issue 2 Symposium: Does the World Still Need United States Tort Law? Or Did it Ever? Article 8 2-15-2011 The Impact of the Civil Jury on American Tort Law Michael D. Green Follow this and additional works at: https://digitalcommons.pepperdine.edu/plr Part of the Torts Commons Recommended Citation Michael D. Green The Impact of the Civil Jury on American Tort Law, 38 Pepp. L. Rev. Iss. 2 (2011) Available at: https://digitalcommons.pepperdine.edu/plr/vol38/iss2/8 This Symposium is brought to you for free and open access by the Caruso School of Law at Pepperdine Digital Commons. It has been accepted for inclusion in Pepperdine Law Review by an authorized editor of Pepperdine Digital Commons. For more information, please contact [email protected], [email protected], [email protected]. The Impact of the Civil Jury on American Tort Law Michael D. Green* I. INTRODUCTION II. USING NO-DUTY WHEN THE COURT BELIEVES THAT THERE IS NO NEGLIGENCE OR AN ABSENCE OF CAUSATION III. USING NO-DUTY IN A SIMILAR FASHION TO PART II, WHEN THERE ARE OTHER GOOD AND SUFFICIENT (BUT UNSPECIFIED) REASONS FOR NO LIABILITY IV. EMPLOYING UNFORESEEABILITY TO CONCLUDE THAT THERE IS NO DUTY BASED ON CASE-SPECIFIC FACTS OF THE SORT THAT WOULD ORDINARILY BE FOR THE JURY OR OTHERWISE EMPLOYING UNFORESEEABILITY IN AN INCOHERENT FASHION V. DESCRIBING THE DUTY DETERMINATION IN FACT-SPECIFIC TERMS BEFORE CONCLUDING NO DUTY EXISTS OR THAT A MORE STRINGENT DUTY THAN REASONABLE CARE APPLIES VI. CONCLUSION I. INTRODUCTION pruritus: the symptom of itching, an uncomfortable sensation leading to the urge to scratch. -

PS Audio Copper

Issue 95 OCTOBER 7TH, 2019 Welcome to Copper #95! This is being written on October 4th---or 10/4, in US notation. That made me recall one of my former lives, many years and many pounds ago: I was a UPS driver. One thing I learned from the over-the-road drivers was that the popular version of CB-speak, "10-4, good buddy" was not generally used by drivers, as it meant something other than just, "hi, my friend". The proper and socially-acceptable term was "10-4, good neighbor." See? You never know what you'll learn here. In #95, Professor Larry Schenbeck takes a look at the mysteries of timbre---and no, that's not pronounced like a lumberjack's call; Dan Schwartz returns to a serious subject --unfortunately; Richard Murison goes on a sea voyage; Roy Hall pays a bittersweet visit to Cuba; Anne E. Johnson’s Off the Charts looks at the long and mostly-wonderful career of Leon Russell; J.I. Agnew explains how machine screws brought us sound recording; Bob Wood continues with his True-Life Radio Tales; Woody Woodward continues his series on Jeff Beck; Anne’s Trading Eights brings us classic cuts from Miles Davis; Tom Gibbs is back to batting .800 in his record reviews; and I get to the bottom of things in The Audio Cynic, and examine direct some off-the-wall turntables in Vintage Whine. Copper #95 wraps up with Charles Rodrigues on extreme room treatment, and a lovely Parting Shot from my globe-trotting son, Will Leebens. -

Danny Postel Analyzing Conflict

St. Norbert Times Volume 90 Issue 4 Article 1 10-17-2018 Danny Postel Analyzing Conflict Follow this and additional works at: https://digitalcommons.snc.edu/snctimes Part of the Christianity Commons, Creative Writing Commons, Digital Humanities Commons, English Language and Literature Commons, History Commons, Journalism Studies Commons, Music Commons, Other Arts and Humanities Commons, Photography Commons, Reading and Language Commons, Religious Thought, Theology and Philosophy of Religion Commons, Technical and Professional Writing Commons, and the Television Commons Recommended Citation (2018) "Danny Postel Analyzing Conflict," St. Norbert Times: Vol. 90 : Iss. 4 , Article 1. Available at: https://digitalcommons.snc.edu/snctimes/vol90/iss4/1 This Full Issue is brought to you for free and open access by the English at Digital Commons @ St. Norbert College. It has been accepted for inclusion in St. Norbert Times by an authorized editor of Digital Commons @ St. Norbert College. For more information, please contact [email protected]. October 17, 2018 Volume 90 | Issue 4 | Serving our Community without Fear or Favor since 1929 Danny Postel Analyzing Conflict SAMANTHA DYSON | NEWS EDITOR INDEX: NEWS: Follow Me Printing SEE PAGE 2 > OPINION: Alcohol in Green Bay SEE PAGE 6 > FEATURES: Title IX Demonstration SEE PAGE 8 > ENTERTAINMENT: Theories for Avengers 4 SEE PAGE 11 > SPORTS: Men’s Basketball Preview SEE PAGE 15 > Use Your Voice! Danny Postel speaks about Middle Eastern Conflict | Jack Zampino (’19) In times and places of great turmoil, many people seek to understand the [...] Studies Program (MENA) at Northwestern University, wishes to provide another [...] in the Fort Howard Theatre. In addition, he was present in Dr. [...] that they are an unending problem that is impossible -

DUR 19/03/2016 : SECTION E : 3 : Page 1

SATURDAY, MARCH 19, 2016 3 TÍMPANO WHAT'S NEW THE ROCKER RELEASED HIS ‘POST POP DEPRESSION’ Iggy Pop and his dark side ■ This material was made together with Josh Homme, founder of the rock group Queen of the Stone Age, along with Matt Helders and Dean Fertita. ■ The musician has already announced that it will be the last production of his long professional career, which spans more than 50 years. EFE, London. The American rocker Iggy Pop released his new album ‘Post Pop Depression’ yesterday, a work that British critics have called “brilliant”, with strong touches of “darkness” and in which “the shadow of David Bowie is cast”. The new material is the result of the collaboration between James Newell Osterberg, the 68-year-old man behind Iggy Pop, and the founder of the rock group Queen of the Stone Age, Josh Homme, who this time acts as producer. They have been joined by [...] praise the nine tracks that make up Pop's seventeenth collection, which the rocker has already announced would be the last of his long professional career of more than 50 years. The UK music magazine NME gave it its top rating, five stars, writing that Pop and Homme have “created something brilliant out of nothing”. ‘Post Pop Depression’ stands as a “defiant and heroic” album, full of “intelligent garage rock”, which shows “what most concerns” the American at the moment: sex and death. -

BNP Paribas SA, Et Al

Case 1:16-cv-03228-AJN Document 193 Filed 02/16/21 Page 1 of 21 UNITED STATES DISTRICT COURT SOUTHERN DISTRICT OF NEW YORK Entesar Osman Kashef, et al., Plaintiffs, 16-cv-3228 (AJN) –v– OPINION & ORDER BNP Paribas SA, et al., Defendants. ALISON J. NATHAN, District Judge: This putative class action is brought on behalf of victims of the Sudanese government’s campaign of human rights abuses from 1997 to 2009. Plaintiffs bring various state law claims against Defendant financial institution and its subsidiaries for assisting the Sudanese government in avoiding U.S. sanctions, which Plaintiffs claim provided the Regime with funding used to perpetrate the atrocities. The Court previously granted the Defendants’ motion to dismiss in light of the act of state doctrine and timeliness, but that decision was reversed by the Second Circuit. Dkt. Nos. 101, 106. Following remand, the Defendants renewed their motion to dismiss Plaintiffs’ Second Amended Complaint for failure to state a claim. For the reasons described in this opinion, the motion is granted in part and denied in part. I. BACKGROUND A. Factual Background Plaintiffs were victims of horrific human rights abuses undertaken by the Government of Sudan between 1997 and 2009, including “beatings, maiming, sexual assault, rape, infection with HIV, loss of property, displacement from their homes, and watching family members be killed.” Second Amended Complaint (“SAC”), Dkt. No. 49, ¶ 24; see also SAC ¶¶ 30-50 (outlining specific abuses suffered by each representative Plaintiff). -

Revisiting the United States Application of Punitive Damages: Separating Myth from Reality

REVISITING THE UNITED STATES APPLICATION OF PUNITIVE DAMAGES: SEPARATING MYTH FROM REALITY Patrick S. Ryan* I. ABSTRACT II. INTRODUCTION III. THE UNFORTUNATE TENDENCY TO BELIEVE URBAN LEGENDS IV. THE PET IN THE MICROWAVE V. PUNITIVE DAMAGES: THE UNDERLYING POLICY A. The Punishing and Deterrent Functions of Punitive Damages B. A Delicate Balance: Weighing "Enormity" of the Offense against the Wealth of the Defendant VI. THE MCDONALD'S CASE IN THE EUROPEAN PRESS A. McDonald's: The Facts B. McDonald's: The New Mexico Trial Court Ruling C. Calculation of Damages D. McDonald's: The New Mexico Appeals Court Ruling & Settlement VII. BMW v. GORE: THE FACTS AND THE ALABAMA TRIAL COURT RULING A. BMW v. Gore: The Alabama Supreme Court Ruling B. BMW v. Gore: The United States Supreme Court Ruling 1. The First Guidepost: Degree of Reprehensibility 2. The Second Guidepost: Ratio Between Damages and Actual Harm 3. The Third Guidepost: A Review of Sanctions for Comparable Misconduct C. BMW v. Gore: Remand to the Alabama Supreme Court -

WDR 3 Open Sounds

Music list WDR 3 Open Sounds Iggy Pop 70 Between Proto-Punk and Post-Chanson A programme by Thomas Mense Editor: Markus Heuger 22.04.2017, 22:04 Website: www1.wdr.de/radio/wdr3/programm/sendungen/wdr3-open-sounds/index.html // Email: akustische.kunst(at)wdr.de 1. Main Street Eyes Composer/lyricist: Iggy Pop (James Osterberg Music/Bug Music BMI 1990) CD: Brick By Brick, 1990, Virgin Length: 3.02 minutes 2. 1969 Composer: James Osterberg (Iggy Pop)/ Ron Asheton, 1969 CD: The Stooges, 1988 Elektra/Asylum Records, LC 0192 Length: 0.53 3. Search and Destroy Composer: Iggy Pop/ James Williamson, 1973 Performer: Iggy and The Stooges CD: Iggy And The Stooges: Raw Power, 1973, 1997 Sony Entertainment LC 0162 Length: 0.42 5. I wanna live Composer: Iggy Pop/ Whitey Kirst CD: Iggy Pop: Naughty Little Doggie, 1996, Virgin 1996, LC 3098 Length: 0.12 6. I wanna be your dog Composer/lyricist: Pop/ Scott Asheton/ Ron Asheton/ Dave Alexander CD: The Stooges, 1969/1988 Elektra/Asylum LC 0192 Length: 1.12 7. Penetration Composer/lyricist: Iggy Pop/James Williamson CD: Iggy And The Stooges: Raw Power, 1973, 1997 Sony Entertainment LC 0162 Length: 2.30 8. Dirt Composer/lyricist: Iggy Pop/ Ron Asheton/ Scott Asheton CD: Funhouse, 1988 Elektra/ Asylum Records, LC 0192 Length: 2.55 9. Gimme danger Composer/lyricist: Iggy Pop/ James Williamson CD: Iggy And The Stooges: Raw Power, 1973, 1997 Sony Entertainment LC 0162 Length: 2.35 10. Nightclubbing Composer/lyricist: David Bowie/Iggy Pop James Osterberg Music/Bewlay Bros. S.A.R.L./Fleur Music CD: Iggy Pop-The Idiot, Virgin Records 1990, 077778615224 Length: 1.00 11. -

Showtime Unlimited Schedule August 01 2013 12:00 Am 12:30 Am 1:00

Showtime Unlimited Schedule August 01 2013 12:00 am 12:30 am 1:00 am 1:30 am 2:00 am 2:30 am 3:00 am 3:30 am 4:00 am 4:30 am 5:00 am 5:30 am SHOWTIME Elizabeth R Elizabeth: The Golden Age (2:05) PG13 Blues Brothers 2000 PG13 SHO 2 #1 Cheerleader Wild Cherry R Humpday R Don't Go in the Woods (4:05) TVMA Shadow..... Camp (11:15) TVMA TVPG SHOWTIME SHOWCASE Trevor Noah: African Tanya X (1:15) TVMA Chatroom R Dave Foley: Relatively Taking..... American (12:05) TVMA Well (4:40) TVMA (5:40) R SHO BEYOND The Ninth Gate (11:35) R Batbabe: The Dark Nightie TVMA Malice in Wonderland R When Time Expires PG13 SHO EXTREME Kevin...... TV14 The Fixer TVMA Blast (2:20) R Murder in Mind R T.N.T. R SHO NEXT Rosencrantz and Guildenstern Dirty Love (1:40) R The Samaritan (3:15) R Debra Digiovanni: Single, are Undead (12:15) TVMA Awkward, Female TVMA SHO WOMEN Hide and Seek (12:10) R Barely Legal TVMA Trade R Touched.... R SHOWTIME FAMILY ZONE The Princess Stallion (11:40) TVG Max Is Missing (1:10) PG Whiskers (2:50) G The Song Spinner G FLiX The... R Farewell My Concubine R Silent Partner (3:25) R The Ruby Ring TVG THE MOVIE CHANNEL The... PG13 The Constant Gardener R Burning Palms (2:40) R The Three Musketeers (4:35) PG THE MOVIE CHANNEL XTRA The Chaperone PG13 Tim and Eric's Billion Dollar Movie (2:15) R Afterschool TVMA 6:00 am 6:30 am 7:00 am 7:30 am 8:00 am 8:30 am 9:00 am 9:30 am 10:00 am 10:30 am 11:00 am 11:30 am SHOWTIME D3: The Mighty Ducks (6:05) PG Battle Of The High School The Last Play at Shea (9:35) TVPG New.. -

Reforming British Defamation Law Stephen Bates

Hastings Communications and Entertainment Law Journal Volume 34 | Number 2 Article 3 1-1-2012 Libel Capital No More? Reforming British Defamation Law Stephen Bates Follow this and additional works at: https://repository.uchastings.edu/hastings_comm_ent_law_journal Part of the Communications Law Commons, Entertainment, Arts, and Sports Law Commons, and the Intellectual Property Law Commons Recommended Citation Stephen Bates, Libel Capital No More? Reforming British Defamation Law, 34 Hastings Comm. & Ent. L.J. 233 (2012). Available at: https://repository.uchastings.edu/hastings_comm_ent_law_journal/vol34/iss2/3 This Article is brought to you for free and open access by the Law Journals at UC Hastings Scholarship Repository. It has been accepted for inclusion in Hastings Communications and Entertainment Law Journal by an authorized editor of UC Hastings Scholarship Repository. For more information, please contact [email protected]. Libel Capital No More? Reforming British Defamation Law by STEPHEN BATES I. Introduction II. Substantive Issues in British Defamation Law A. Jurisdiction and Libel Tourism B. Truth Defense C. Harm to Reputation -