Glossary of Digital Video Terms

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Specific Signals to Ensure Optimum CAV

• 4 versions of the 1515 are available to suit specific needs. Each version supports composite and GBR formats plus the following component analog formats: Version M: M-II™; Version S: SMPTE Parallel; Version SP: Betacam™ and Betacam SP™; Version U: Universal (Betacam™ + M-II™ + SMPTE + S-VHS + formats). • Sized for side-by-side rack mounting with a waveform monitor/vectorscope. • Full genlock with remote control- ... • Specific signals to ensure optimum CAV performance. Custom signals also available. • Optional component digital (D1 4:2:2) signals to meet SMPTE RP125-1987 standards. • Optional composite digital (D2) outputs of all available NTSC test signals. • Optional composite digital (D2) to analog (NTSC) converter module. • Digital signal storage for greater precision and reliable replication. • Modular design allows easy testing and maintenance. • 12-character source ID. • Black burst option to provide full RS-170A sync generator capability. • Dual Timing Pulses and Timing Bow tie test signals to guarantee precise interchannel timing and amplitude measurements in CAV.

Providing both Component Analog Video and Composite NTSC test signals, the Magni 1515 fills a vital niche in today's studio or post-production environment by allowing the performance of equipment in either format to be measured without costly duplication of test instrumentation. ... timing can be adjusted from 45 microseconds of advance to 15 microseconds of delay, and overlaid on the test signal for viewing on a picture monitor and setting. The reference input is switch-selectable, loop-through or 75 Ohm internally ter- ...

COMPONENT DIGITAL OUTPUT: This option (Option -04) provides 4:2:2 component digital signals to SMPTE RP125-1987 standards. 75% Color Bars are available at the output connector with any signal selection from the -

SC/H and Color-Framing (Orig

The Final Word on SC/H and Color-framing (Orig. 2/1990 Rev. 7 1/2000 © Eric Wenocur) Anyone editing with 1” Type-C VTRs and today’s complex interformat systems has experienced the unwanted appearance of a horizontal shift in the picture, and the ensuing search for the cause. While the terms “SC/H phase” and “color-framing” are used regularly, they continue to be sources of confusion and misunderstanding to users of VTRs and editing equipment. Although at times we are tempted to attribute problems to the “gremlins” that we know live in our facilities, there really are answers to most color-framing issues! This article is intended to help explain and de-mystify the principles of color-framing in the hope that solutions become more obvious. It will cover a small amount of SC/H phase technical theory, and then explore TBC operation, 1” VTR color-framing and editing, Betacam and MII operation, framestores, and setting up an SC/H timed system. Emphasis has been placed on both the theory and its practical ramifications. SC/H BACKGROUND BASICS The term SC/H phase, short for “subcarrier-to-horizontal” phase, describes a phase (time) relationship between colorburst and horizontal sync in a composite video signal. Because of the relationship between the frequencies of horizontal rate, subcarrier, and number of lines per frame, the phase of colorburst changes 90 degrees every field, while the phase of picture subcarrier (chroma) changes 180 degrees every two fields. Consequently, every two fields the chroma and burst are in phase, but 180 degrees reversed from the previous frame. -
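The field-by-field phase progression described in this excerpt can be checked with a little arithmetic. The sketch below is illustrative only and assumes the standard NTSC figures of 227.5 subcarrier cycles per line and 262.5 lines per field, neither of which is stated in the excerpt; the function name is made up for this example.

```python
from fractions import Fraction

# Assumed NTSC constants (not given in the excerpt above).
CYCLES_PER_LINE = Fraction(455, 2)   # 227.5 subcarrier cycles per line
LINES_PER_FIELD = Fraction(525, 2)   # 262.5 lines per field


def subcarrier_phase_after(fields: int) -> Fraction:
    """Subcarrier phase advance, in degrees (0 to 360), after a whole number of fields."""
    cycles = CYCLES_PER_LINE * LINES_PER_FIELD * fields
    # Only the fractional part of the cycle count changes the phase.
    return (cycles % 1) * 360


if __name__ == "__main__":
    for n in range(1, 5):
        print(f"after {n} field(s): {float(subcarrier_phase_after(n))} degrees")
```

Under these assumptions the phase advances by 270 degrees per field (equivalently, a 90 degree step backwards), is reversed by 180 degrees after one frame, and returns to its starting value only after four fields.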

ASPECT RATIO Aspect Ratios Are One of the More Confusing Parts of Video

ASPECT RATIO Aspect ratios are one of the more confusing parts of video, although they used to be simple. That's because television and movie content was all about the same size, 4:3 (also known as 1.33:1, meaning that the picture is 1.33 times as wide as it is high). The Academy Standard before 1952 was 1.37:1, so there was virtually no problem showing movies on TV. However, as TV began to cut into Hollywood's take at the theater, the quest was on to differentiate theater offerings in ways that could not be seen on TV. Thus, innovations such as widescreen film, Technicolor, and even 3-D were born. Widescreen film was one of the innovations that survived and has since dominated the cinema. Today, you tend to find films in one of two widescreen aspect ratios: 1. Academy Standard (or "Flat"), which has an aspect ratio of 1.85:1. 2. Anamorphic Scope (or "Scope"), which has an aspect ratio of 2.35:1. Scope is also called Panavision or CinemaScope. HDTV is specified at a 16:9, or 1.78:1, aspect ratio. If your television isn't widescreen and you want to watch a widescreen film, you have a problem. The most common approach in the past has been what's called Pan and Scan. For each frame of a film, a decision is made as to what constitutes the action area. That part of the film frame is retained, and the rest is lost. This can often leave out the best parts of a picture. -
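To get a feel for how much of the picture Pan and Scan discards, the ratios quoted above can be compared directly. This is a minimal sketch, assuming the cropped area keeps the full film height and is exactly as wide as the screen ratio allows; the function name is hypothetical.

```python
def pan_and_scan_kept_fraction(film_ratio: float, screen_ratio: float) -> float:
    """Fraction of the film frame's width that survives cropping to the screen ratio.

    Assumes the full film height is kept and only the sides are cropped.
    """
    if film_ratio <= screen_ratio:
        return 1.0  # the film is no wider than the screen, so nothing is cropped
    return screen_ratio / film_ratio


if __name__ == "__main__":
    tv = 4 / 3
    for name, ratio in [("Flat 1.85:1", 1.85), ("Scope 2.35:1", 2.35)]:
        kept = pan_and_scan_kept_fraction(ratio, tv)
        print(f"{name} on a 4:3 screen keeps {kept:.0%} of the frame width")
```

With these numbers, a Scope film cropped to 4:3 keeps a little over half of its original width, which is why the excerpt warns that the best parts of a picture can be lost.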

Bachelor's Thesis

Univerzita Hradec Králové (University of Hradec Králové), Faculty of Education, Department of Technical Subjects. IMAGE SUPPORT FOR THE TEACHING OF TECHNICAL SUBJECTS - TECHNIQUE AND TECHNOLOGY FOR DYNAMIC IMAGE. Bachelor's thesis. Author: Pavel Bédi. Study programme: B 1801 Informatics. Fields of study: Informatics with a focus on education; Fundamentals of Technology with a focus on education. Supervisor: doc. PaedDr. René Drtina, Ph.D. Hradec Králové 2020.

UNIVERZITA HRADEC KRÁLOVÉ. Study programme: Informatics. Faculty of Science. Form of study: full-time. Academic year: 2018/2019. Field/combination: Informatics with a focus on education, Fundamentals of Technology with a focus on education (BIN-ZTB). Field within which the thesis is to be written: Fundamentals of Technology with a focus on education. Basis for the assignment of the student's BACHELOR'S thesis. Name and surname: Pavel Bédi. Personal number: S17IN020BP. Address: Řešetova Lhota 41, Studnice – Řešetova Lhota, 54701 Náchod 1, Czech Republic. Thesis topic: Obrazová podpora výuky technických předmětů – technika a technologie pro dynamický obraz. Thesis topic in English: Image support for the teaching of technical subjects – technique and technology for dynamic image. Supervisor: doc. PaedDr. René Drtina, Ph.D., Department of Technical Subjects.

Guidelines for the thesis: The aim of the bachelor's thesis is to give a comprehensive overview of the options for creating video recordings, of capture equipment, and of production software. It is expected that the bachelor's thesis will be followed by a master's thesis with fully developed teaching material for a selected topic. Expected structure of the bachelor's thesis (it may be assigned to two students if split into audio and video areas): Specifics of producing documentary and instructional video recordings for technical equipment. Digital video cameras and their properties, recording and image formats and their compatibility, high-speed cameras. Camera lenses, resolution tests. Auxiliary equipment for digital video: seamless backdrops, lighting equipment, lens attachments, etc. Sound recording equipment, microphones and their accessories, principles for recording so-called -

24p and Panasonic AG-DVX100 and AJ-SDX900 Camcorder Support in Vegas and DVD Architect Software

24p and Panasonic AG-DVX100 and AJ-SDX900 camcorder support in Vegas and DVD Architect Software. Revision 3, Updated 05.27.04. The information contained in this document is subject to change without notice and does not represent a commitment on the part of Sony Pictures Digital Media Software and Services. The software described in this manual is provided under the terms of a license agreement or nondisclosure agreement. The software license agreement specifies the terms and conditions for its lawful use. Sound Forge, ACID, Vegas, DVD Architect, Vegas+DVD, Acoustic Mirror, Wave Hammer, XFX, and Perfect Clarity Audio are trademarks or registered trademarks of Sony Pictures Digital Inc. or its affiliates in the United States and other countries. All other trademarks or registered trademarks are the property of their respective owners in the United States and other countries. Copyright © 2004 Sony Pictures Digital Inc. This document can be reproduced for noncommercial reference or personal/private use only and may not be resold. Any reproduction in excess of 15 copies or electronic transmission requires the written permission of Sony Pictures Digital Inc. Table of Contents: What is covered in this document? Background. Vegas. -

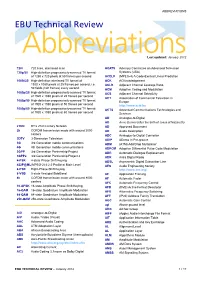

ABBREVIATIONS EBU Technical Review

ABBREVIATIONS. EBU Technical Review. Last updated: January 2012.

720i: 720 lines, interlaced scan; 720p/50: High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second; 1080i/25: High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second; 1080p/25: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second; 1080p/50: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second; 1080p/60: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second; 21CN: BT’s 21st Century Network; 2k: COFDM transmission mode with around 2000 carriers; 3DTV: 3-Dimension Television; 3G: 3rd Generation mobile communications; 4G: 4th Generation mobile communications; 3GPP: 3rd Generation Partnership Project; 3GPP2: 3rd Generation Partnership -

ACATS: Advisory Committee on Advanced Television Systems (USA); ACELP: (MPEG-4) A Code-Excited Linear Prediction; ACK: ACKnowledgement; ACLR: Adjacent Channel Leakage Ratio; ACM: Adaptive Coding and Modulation; ACS: Adjacent Channel Selectivity; ACT: Association of Commercial Television in Europe, http://www.acte.be; ACTS: Advanced Communications Technologies and Services; AD: Analogue-to-Digital; AD: Anno Domini (after the birth of Jesus of Nazareth); AD: Approved Document; AD: Audio Description; ADC: Analogue-to-Digital Converter; ADIP: ADress In Pre-groove; ADM: (ATM) Add/Drop Multiplexer; ADPCM: Adaptive Differential Pulse Code Modulation; ADR: Automatic Dialogue Replacement

Creating 4K/UHD Content Poster

Creating 4K/UHD Content

Colorimetry: The television color specification is based on standards defined by the CIE (Commission Internationale de L’Éclairage) in 1931. The CIE specified an idealized set of primary XYZ tristimulus values. This set is a group of all-positive values converted from R’G’B’ where Y is proportional to the luminance of the additive mix. This specification is used as the basis for color within 4K/UHDTV1 that supports both ITU-R BT.709 and BT.2020.

Image Format / SMPTE Standards: Square Division separates the image into quad links for distribution. UHDTV 1: 3840x2160 (4x1920x1080).

Figure A2. Using a 100% color bar signal to show conversion of RGB levels from 700 mV (100%) to 0 mV (0%) for each color component with a color bar split field BT.2020 and BT.709 test signal. The WFM8300 was configured for BT.709 colorimetry as shown in the video session display.

Table A1: Illuminant (Ill.) Value. Source: X / Y. Illuminant A: Tungsten Filament Lamp, 2854°K, x = 0.4476, y = 0.4075.

Table B1: SMPTE Standards. ST 125: SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems; ST 240: Television – 1125-Line High-Definition Production Systems – Signal Parameters; ST 259: Television – SDTV Digital Signal/Data – Serial Digital Interface; ST 272: Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space; ST 274: Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for Multiple Picture Rates; ST 296: 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface; ST 299-0/1/2: 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite -
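The Square Division idea mentioned in this excerpt, where a 3840x2160 picture is carried as four 1920x1080 sub-images, can be sketched as a simple quadrant split. The function name and the ordering of the quadrants are assumptions made for illustration; the excerpt does not say which quadrant travels on which link.

```python
def square_division_quadrants(width: int = 3840, height: int = 2160):
    """Split a frame into four equal quadrants as (x, y, width, height) tuples.

    Quadrant order here (top-left, top-right, bottom-left, bottom-right)
    is an assumption for illustration, not taken from the excerpt.
    """
    half_w, half_h = width // 2, height // 2
    return [(x, y, half_w, half_h) for y in (0, half_h) for x in (0, half_w)]


if __name__ == "__main__":
    for link, quad in enumerate(square_division_quadrants(), start=1):
        print(f"link {link}: origin=({quad[0]}, {quad[1]}), size={quad[2]}x{quad[3]}")
```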

Digital Video in Multimedia Pdf

Digital video in multimedia pdf. Digital electronic representation of moving visual images. This article is about the digital methods applied to video. For the standard digital video storage format, see DV. For other uses, see Digital Video (disambiguation). Digital video is an electronic representation of moving visual images (video) in the form of coded digital data. This contrasts with analog video, which represents moving visual images with analog signals. Digital video comprises a series of digital images displayed in quick succession. Digital video was first commercially introduced in 1986 with the Sony D1 format, which recorded an uncompressed standard-definition component video signal in digital form. In addition to uncompressed formats, today's popular compressed digital video formats include H.264 and MPEG-4. Modern interconnect standards for digital video include HDMI, DisplayPort, Digital Visual Interface (DVI) and Serial Digital Interface (SDI). Digital video can be copied without loss of quality. In contrast, when analog sources are copied, they suffer generation loss. Digital video can be stored on digital media, such as Blu-ray Disc, in computer data storage, or transmitted over the Internet to end users who watch content on a desktop computer screen or a digital smart TV. In everyday practice, digital video content, such as TV shows and movies, also includes a digital audio soundtrack. History. Digital video cameras. Further information: Digital cinematography, Image sensor, and Video camera. The basis for digital video cameras is the metal-oxide-semiconductor (MOS) image sensor. The first practical semiconductor image sensor was the charge-coupled device (CCD), invented in 1969, using MOS capacitor technology. -

HD-SDI, HDMI, and Tempus Fugit

TECHNICALLY SPEAKING... By Steve Somers, Vice President of Engineering. HD-SDI, HDMI, and Tempus Fugit. HD-SDI (high definition serial digital interface) and HDMI (high definition multimedia interface) version 1.3 are receiving considerable attention these days. “These days” really moved ahead rapidly now that I recall writing in this column on HD-SDI just one year ago. And, exactly two years ago the topic was DVI and HDMI. To be predictably trite, it seems like just yesterday. As with all things digital, there is much change and much to talk about.

HD-SDI Redux: The HD-SDI is the 1.5 Gbps backbone of uncompressed high definition video conveyance within the professional HD production environment. It’s been around since about 1996 and is quite literally the savior of high definition interfacing and delivery at modest cost over medium-haul distances using RG-6 style video coax. ... difference channels suffice with one-half the sample rate at 37.125 MHz, the ‘2s’ in 4:2:2. This format is sufficient for high definition television. But, its robustness and simplicity is pressing it into the higher bandwidth demands of digital cinema and other uses like 12-bit, 4096 level signal formats, refresh rates above 30 frames per ... 372M spreads out the image information between the two channels to distribute the data payload. Odd-numbered lines map to link A and even-numbered lines map to link B. Table 1 indicates the organization of 4:2:2, 4:4:4, and 4:4:4:4 data with respect to the available frame rates. -
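The 1.5 Gbps figure quoted in this excerpt can be roughly reproduced from the 4:2:2 sampling it describes. The sketch below assumes a 74.25 MHz luma sample rate (twice the 37.125 MHz colour-difference rate given in the text) and 10 bits per sample; both values are assumptions brought in for illustration, as are the function names. The second function simply restates the odd/even line mapping the excerpt attributes to 372M.

```python
# Assumed values (only the 37.125 MHz figure appears in the excerpt).
LUMA_SAMPLE_RATE_MHZ = 74.25      # assumption: twice the colour-difference rate
CHROMA_SAMPLE_RATE_MHZ = 37.125   # each of the two colour-difference channels
BITS_PER_SAMPLE = 10              # assumption


def hd_sdi_rate_mbps() -> float:
    """Approximate 4:2:2 serial rate: one luma plus two half-rate chroma channels."""
    samples_mhz = LUMA_SAMPLE_RATE_MHZ + 2 * CHROMA_SAMPLE_RATE_MHZ
    return samples_mhz * BITS_PER_SAMPLE


def dual_link_for_line(line_number: int) -> str:
    """Odd-numbered lines map to link A, even-numbered lines to link B."""
    return "A" if line_number % 2 == 1 else "B"


if __name__ == "__main__":
    print(f"HD-SDI rate: {hd_sdi_rate_mbps()} Mb/s")
    print([dual_link_for_line(n) for n in range(1, 5)])
```

Under these assumptions the total comes to 1485 Mb/s, which is commonly rounded to 1.5 Gbps.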

Cinematography

CINEMATOGRAPHY: ESSENTIAL CONCEPTS

• The filmmaker controls the cinematographic qualities of the shot: not only what is filmed but also how it is filmed.
• Cinematographic qualities involve three factors: 1. the photographic aspects of the shot; 2. the framing of the shot; 3. the duration of the shot.

In other words, cinematography is affected by choices in: 1. Photographic aspects of the shot; 2. Framing; 3. Duration of the shot.

1. Photographic image
• The study of the photographic image includes: A. Range of tonalities; B. Speed of motion; C. Perspective.

1.A. Tonalities of the photographic image
The range of tonalities includes:
I. Contrast (black & white; color). It can be controlled with lighting, filters, film stock, laboratory processing, and postproduction.
II. Exposure: how much light passes through the camera lens. The image may be too dark (underexposed) or too bright (overexposed). Exposure can be controlled with filters.

1.A. Tonality (cont.)
Tonality can be changed after filming:
Tinting: dipping developed film in dye. Dark areas remain black and gray; light areas pick up color.
Toning: dipping during developing of the positive print. Dark areas are colored; light areas remain white or faintly colored.

1.A. Tonality (cont.)
• In photochemically based filmmaking, the tonality can be fixed by a color timer or grader in the laboratory.
• Digital grading is used today. A scanner converts film to digital files, creating a digital intermediate (DI). The DI is adjusted with software and scanned back onto negative.

1.B. Speed of motion
• Depends on the relation between the rate at which -

EMERGENCY REGULATION TEXT Patient Electronic Property

DEPARTMENT OF STATE HOSPITALS EMERGENCY REGULATION TEXT Patient Electronic Property California Code of Regulations Title 9. Rehabilitative and Developmental Services Division 1. Department of Mental Health Chapter 16. State Hospital Operations Article 3. Safety and Security Amend section 4350, title 9, California Code of Regulations to read as follows: [Note: Set forth is the amendments to the proposed emergency regulatory language. The amendments are shown in underline to indicate additions and strikeout to indicate deletions from the existing regulatory text.] § 4350. Contraband Electronic Devices with Communication and Internet Capabilities. (a) Except as provided in subsection (d), patients are prohibited from having personal access to, possession, or on-site storage of the following items: (1) Electronic devices with the capability to connect to a wired (for example, Ethernet, Plain Old Telephone Service (POTS), Fiber Optic) and/or a wireless (for example, Bluetooth, Cellular, Wi-Fi [802.11a/b/g/n], WiMAX) communications network to send and/or receive information including, but not limited to, the following: (A) Desktop computers; laptop computers; tablets; single-board computers or motherboards such as “Raspberry Pi;” cellular or satellite phones; personal digital assistant (PDA); graphing calculators; and satellite, shortwave, CB and GPS radios. (B) Devices without native capabilities that can be modified for network communication. The modification may or may not be supported by the product vendor and may be a hardware and/or software configuration change. (2) Digital media recording devices, including but not limited to CD, DVD, Blu-Ray burners. (3) Voice or visual recording devices in any format. (4) Items capable of patient-accessible memory storage, including but not limited to: (A) Any device capable of accessible digital memory or remote memory access. -

Magnetic Tape Storage and Handling: A Guide for Libraries and Archives

Magnetic Tape Storage and Handling: A Guide for Libraries and Archives. June 1995. The Commission on Preservation and Access is a private, nonprofit organization acting on behalf of the nation’s libraries, archives, and universities to develop and encourage collaborative strategies for preserving and providing access to the accumulated human record. Additional copies are available for $10.00 from the Commission on Preservation and Access, 1400 16th St., NW, Ste. 740, Washington, DC 20036-2217. Orders must be prepaid, with checks in U.S. funds made payable to “The Commission on Preservation and Access.” This paper has been submitted to the ERIC Clearinghouse on Information Resources. The paper in this publication meets the minimum requirements of the American National Standard for Information Sciences-Permanence of Paper for Printed Library Materials ANSI Z39.48-1992. ISBN 1-887334-40-8 No part of this publication may be reproduced or transcribed in any form without permission of the publisher. Requests for reproduction for noncommercial purposes, including educational advancement, private study, or research will be granted. Full credit must be given to the author, the Commission on Preservation and Access, and the National Media Laboratory. Sale or use for profit of any part of this document is prohibited by law. By Dr. John W. C. Van Bogart, Principal Investigator, Media Stability Studies, National Media Laboratory. Published by The Commission on Preservation and Access, 1400 16th Street, NW, Suite 740, Washington, DC 20036-2217 and National Media Laboratory, Building 235-1N-17 St.