Lecture 4: Temperature

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

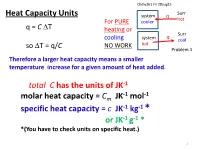

Total C Has the Units of J K⁻¹; Molar Heat Capacity = Cm; Specific Heat Capacity = c

Chmy361, Fri 28 Aug 2015

Heat Capacity Units

[Figure: heat q exchanged between system and surroundings (Surr) during heating and cooling]

For PURE heating or cooling (NO WORK): q = C ∆T, so ∆T = q/C.

Problem 1: Therefore a larger heat capacity means a smaller temperature increase for a given amount of heat added.

- total heat capacity: C, with units J K⁻¹
- molar heat capacity: Cm, with units J K⁻¹ mol⁻¹
- specific heat capacity: c, with units J K⁻¹ kg⁻¹ or J K⁻¹ g⁻¹ (you have to check the units on specific heat)

Which has the higher heat capacity, water or gold?

| Substance | Molar heat capacity Cm (J mol⁻¹ K⁻¹) | Specific heat capacity c (J kg⁻¹ K⁻¹) |
|---|---|---|
| Gold | 25 | 129 |
| Water | 75 | 4184 |

From Table 2.2: water has a specific heat capacity of c = 4.18 kJ K⁻¹ kg⁻¹. What will be the temperature change of 100 kg of water if 418 kJ of heat is removed? (Relates to Problem 1: a hiker with wet clothes in wind loses body heat.)

q = C ∆T = c × mass × ∆T (assuming C is independent of temperature)

∆T = q / (c × mass) = −418 kJ / (4.18 kJ K⁻¹ kg⁻¹ × 100 kg) = −418/418 K = −1.0 K

Note: Units are very important! Always attach units to each number and make sure they cancel to the desired unit.

Problems you can do now (partially): 1b, 15, 16a,f, 19

A Detour into the Microscopic Realm

The pressure of a gas, p, is caused by enormous numbers of collisions on the walls of the container.
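
The worked example above (418 kJ removed from 100 kg of water) can be checked with a few lines of Python. This is a minimal sketch, assuming, as the notes do, that the heat capacity is independent of temperature; the function name `temperature_change` is ours, not part of the lecture.

```python
def temperature_change(q_kj, c_kj_per_k_kg, mass_kg):
    """Return the temperature change in kelvin from q = c * mass * dT.

    q_kj is negative when heat is removed from the system.
    Assumes c does not vary with temperature.
    """
    return q_kj / (c_kj_per_k_kg * mass_kg)


# Values from the notes: c = 4.18 kJ K^-1 kg^-1, 100 kg of water, 418 kJ removed.
delta_t = temperature_change(q_kj=-418.0, c_kj_per_k_kg=4.18, mass_kg=100.0)
print(f"dT = {delta_t:.1f} K")  # dT = -1.0 K
```

Keeping the units in the argument names is one way to follow the notes' advice that every number should carry its unit.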

Useful Constants

Appendix A: Useful Constants

M. Benacquista, An Introduction to the Evolution of Single and Binary Stars, Undergraduate Lecture Notes in Physics, DOI 10.1007/978-1-4419-9991-7, © Springer Science+Business Media New York 2013

A.1 Physical Constants

Table A.1 Physical constants in SI units

| Symbol | Constant | Value |
|---|---|---|
| c | Speed of light | 2.997925 × 10⁸ m/s |
| e | Elementary charge | 1.602191 × 10⁻¹⁹ C |
| ε₀ | Permittivity | 8.854 × 10⁻¹² C² s²/(kg m³) |
| μ₀ | Permeability | 4π × 10⁻⁷ kg m/C² |
| m_H | Atomic mass unit | 1.660531 × 10⁻²⁷ kg |
| m_e | Electron mass | 9.109558 × 10⁻³¹ kg |
| m_p | Proton mass | 1.672614 × 10⁻²⁷ kg |
| m_n | Neutron mass | 1.674920 × 10⁻²⁷ kg |
| h | Planck constant | 6.626196 × 10⁻³⁴ J s |
| ħ | Planck constant | 1.054591 × 10⁻³⁴ J s |
| R | Gas constant | 8.314510 × 10³ J/(kg K) |
| k | Boltzmann constant | 1.380622 × 10⁻²³ J/K |
| σ | Stefan–Boltzmann constant | 5.66961 × 10⁻⁸ W/(m² K⁴) |
| G | Gravitational constant | 6.6732 × 10⁻¹¹ m³/(kg s²) |

Table A.2 Useful combinations and alternate units

| Symbol | Constant | Value |
|---|---|---|
| m_H c² | Atomic mass unit | 931.50 MeV |
| m_e c² | Electron rest mass energy | 511.00 keV |
| m_p c² | Proton rest mass energy | 938.28 MeV |
| m_n c² | Neutron rest mass energy | 939.57 MeV |
| h | Planck constant | 4.136 × 10⁻¹⁵ eV s |
| ħ | Planck constant | 6.582 × 10⁻¹⁶ eV s |
| k | Boltzmann constant | 8.617 × 10⁻⁵ eV/K |
| hc | | 1,240 eV nm |
| ħc | | 197.3 eV nm |
| e²/(4πε₀) | | 1.440 eV nm |

A.2 Astronomical Constants

Table A.3 Astronomical units

| Symbol | Constant | Value |
|---|---|---|
| AU | Astronomical unit | 1.4959787066 × 10¹¹ m |
| ly | Light year | 9.460730472 × 10¹⁵ m |
| pc | Parsec | 2.0624806 × 10⁵ AU = 3.2615638 ly = 3.0856776 × 10¹⁶ m |
| d | Sidereal day | 23h 56m 04.0905309s; 8.61640905309 |
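
Several entries of Table A.2 follow directly from Table A.1 by dividing by the elementary charge to convert joules to electronvolts. The sketch below recomputes a few of them as a consistency check; the variable names are ours, and the input values are copied from Table A.1 rather than from current CODATA recommendations.

```python
import math

# Values as printed in Table A.1 (SI units).
c = 2.997925e8       # speed of light, m/s
e = 1.602191e-19     # elementary charge, C (also J per eV)
h = 6.626196e-34     # Planck constant, J s
k = 1.380622e-23     # Boltzmann constant, J/K
m_e = 9.109558e-31   # electron mass, kg

h_ev = h / e                          # Planck constant in eV s
hc_ev_nm = h_ev * c * 1e9             # hc in eV nm (1 m = 1e9 nm)
hbar_c_ev_nm = hc_ev_nm / (2 * math.pi)
k_ev = k / e                          # Boltzmann constant in eV/K
me_c2_kev = m_e * c**2 / e / 1e3      # electron rest energy in keV

print(f"h      = {h_ev:.3e} eV s")
print(f"hc     = {hc_ev_nm:.0f} eV nm")
print(f"hbar c = {hbar_c_ev_nm:.1f} eV nm")
print(f"k      = {k_ev:.3e} eV/K")
print(f"m_e c^2 = {me_c2_kev:.2f} keV")
```

The results agree with Table A.2 to the number of digits printed there.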

Chapter 5. Calorimetric Properties

Chapter 5. Calorimetric Properties

(1) Specific and molar heat capacities
(2) Latent heats of crystallization or fusion

A. Heat Capacity

- The specific heat capacity is the heat which must be added per kg of a substance to raise the temperature by one degree.

1. Specific heat capacity at constant volume: $c_v = \left(\dfrac{\partial U}{\partial T}\right)_v$ (J/kg·K)
2. Specific heat capacity at constant pressure: $c_p = \left(\dfrac{\partial H}{\partial T}\right)_p$ (J/kg·K)
3. Molar heat capacity at constant volume: $C_v = M c_v$ (J/mol·K)
4. Molar heat capacity at constant pressure: $C_p = M c_p = \left(\dfrac{\partial H}{\partial T}\right)_p$ (J/mol·K), where $H$ is the enthalpy per mol

◦ Molar heat capacity of solid and liquid at 25 ℃

- Table 5.1 (p. 111) gives the group-contribution data for $C_p^s$ (Satoh) and $C_p^l$ (Shaw).
- Table 5.2 (pp. 112–113) gives the $C_p^l$ and $C_p^s$ values for each polymer.
- Example 5.1 shows how to obtain $C_p$ for polypropylene.

◦ Specific heat as a function of temperature

- Figure 5.1 shows the molar heat capacity of polypropylene.

◦ The slopes of the heat capacity for solid and liquid polymers (see Table 5.3 for the slopes of $C_p^l$ and $C_p^s$):

$$\frac{1}{C_p^s(298)}\frac{dC_p^s}{dT} = 3 \times 10^{-3}\ \mathrm{K^{-1}}, \qquad \frac{1}{C_p^l(298)}\frac{dC_p^l}{dT} = 1.2 \times 10^{-3}\ \mathrm{K^{-1}}$$

◦ Equations (5.1) and (5.2) give $C_p$ as a function of temperature.

(Ex) Calculate the heat capacity of polypropylene with a degree of crystallinity of 30% at 25 ℃ (p. 110).

(Sol) By the addition of group contributions (see Table 5.1):

| Group | $C_p^s$(298 K) (J/mol·K) | $C_p^l$(298 K) (J/mol·K) |
|---|---|---|
| –CH2– | 25.35 | 30.4 (from p. 111) |
| –CH– | 15.6 | 20.95 |
| –CH3 | 30.9 | 36.9 |
| Total | 71.9 | 88.3 |

∴ $C_p$(298 K) = 0.3 (71.9 J/mol·K) + 0.7 (88.3 J/mol·K) = 83.3 J/mol·K

- It is assumed that the semicrystalline polymer consists of an amorphous fraction with heat capacity $C_p^l$ and a crystalline fraction with heat capacity $C_p^s$.

Theoretical Background (pp. 116–117)

The increase of the specific heat capacity with temperature depends on an increase of the vibrational degrees of freedom.
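
The polypropylene example above is a weighted sum of group contributions, which can be written out as a short Python sketch. The group values are the ones quoted in the example; the function name `semicrystalline_cp` and the dictionary layout are illustrative choices of ours.

```python
# Group contributions to the molar heat capacity at 298 K (J/mol K),
# as quoted in the worked example for polypropylene.
SOLID = {"-CH2-": 25.35, "-CH-": 15.6, "-CH3": 30.9}
LIQUID = {"-CH2-": 30.4, "-CH-": 20.95, "-CH3": 36.9}


def semicrystalline_cp(crystallinity, solid_groups, liquid_groups):
    """Mix crystalline (solid) and amorphous (liquid) molar heat capacities.

    Assumes the two-phase model stated in the text: the crystalline
    fraction contributes Cp(solid), the amorphous fraction Cp(liquid).
    """
    cp_solid = sum(solid_groups.values())
    cp_liquid = sum(liquid_groups.values())
    return crystallinity * cp_solid + (1.0 - crystallinity) * cp_liquid


cp_pp = semicrystalline_cp(0.30, SOLID, LIQUID)
print(f"Cp(298 K) = {cp_pp:.1f} J/mol K")  # Cp(298 K) = 83.3 J/mol K
```

Summing the unrounded group values (71.85 and 88.25) before mixing reproduces the 83.3 J/mol·K quoted in the example.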

Equipartition of Energy

The number of degrees of freedom can be defined as the minimum number of independent coordinates that specify the configuration of the system completely. (A degree of freedom of a system is a formal description of a parameter that contributes to the state of a physical system.) The position of a rigid body in space is defined by three components of translation and three components of rotation, which means that it has six degrees of freedom.

The number of degrees of freedom of a system can be viewed as the minimum number of coordinates required to specify a configuration. Applying this definition, we have:

• For a single particle in a plane, two coordinates define its location, so it has two degrees of freedom;
• A single particle in space requires three coordinates, so it has three degrees of freedom;
• Two particles in space have a combined six degrees of freedom;
• If two particles in space are constrained to maintain a constant distance from each other, as in the case of a diatomic molecule, then the six coordinates must satisfy a single constraint equation defined by the distance formula. This reduces the number of degrees of freedom of the system to five, because the distance formula can be used to solve for the remaining coordinate once the other five are specified.

The equipartition theorem relates the temperature of a system to its average energies. The original idea of equipartition was that, in thermal equilibrium, energy is shared equally among all of its various forms; for example, the average kinetic energy per degree of freedom in the translational motion of a molecule should equal that of its rotational motions.
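
The counting rule used in the list above (coordinates per particle times number of particles, minus the number of independent constraint equations) can be sketched as a small helper. The function name and signature are ours, chosen only for illustration.

```python
def degrees_of_freedom(n_particles, n_constraints=0, dimensions=3):
    """Count degrees of freedom for point particles.

    Each particle contributes one coordinate per spatial dimension;
    each independent constraint equation removes one coordinate.
    """
    return dimensions * n_particles - n_constraints


print(degrees_of_freedom(1, dimensions=2))     # particle in a plane: 2
print(degrees_of_freedom(1))                   # particle in space: 3
print(degrees_of_freedom(2))                   # two free particles: 6
print(degrees_of_freedom(2, n_constraints=1))  # rigid diatomic molecule: 5
```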

Fastest Frozen Temperature for a Thermodynamic System

X. Y. Zhou, Z. Q. Yang, X. R. Tang, X. Wang¹ and Q. H. Liu¹,²,*

¹ School for Theoretical Physics, School of Physics and Electronics, Hunan University, Changsha 410082, China
² Synergetic Innovation Center for Quantum Effects and Applications (SICQEA), Hunan Normal University, Changsha 410081, China

For a thermodynamic system obeying both the equipartition theorem at high temperature and the third law at low temperature, the curve showing the relationship between the specific heat and the temperature has two common behaviors: it terminates at zero when the temperature is zero kelvin, and it converges to a constant value of the specific heat as the temperature becomes higher and higher. Since it is always possible to find a characteristic temperature T_C that marks the excitation temperature at which the specific heat almost reaches the equipartition value, it is also reasonable to look for a temperature in the low-temperature interval, complementary to T_C. The present study reports a possibly universal existence of such a temperature ϑ, defined as that at which the specific heat falls fastest as the temperature decreases. For the Debye model of solids, Debye's law manifests itself below the temperature ϑ.

I. INTRODUCTION

A thermodynamic system usually obeys two laws in opposite limits of temperature: at high temperature the equipartition theorem works, and at low temperature the third law of thermodynamics holds. From the shape of a curve showing the relationship between the specific heat and the temperature, the behaviors in these two limits are qualitatively clear: at high temperature the specific heat approaches a constant, whereas it goes to zero as the temperature is lowered to zero kelvin.
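
The definition in the abstract, the temperature at which the specific heat falls fastest as the temperature decreases, amounts to locating the maximum of dC/dT. The sketch below illustrates the idea numerically. Note the substitution: it uses the Einstein model of a solid, not the Debye model discussed in the paper, because the Einstein heat capacity has a simple closed form. The grid bounds, step sizes, and function names are all assumptions made for this illustration.

```python
import math


def einstein_heat_capacity(t):
    """Einstein-model heat capacity per oscillator, in units of k_B.

    t is the reduced temperature T / theta_E (dimensionless).
    """
    x = 1.0 / t
    return x * x * math.exp(x) / math.expm1(x) ** 2


def fastest_falling_temperature(t_min=0.02, t_max=2.0, steps=20000, dt=1e-5):
    """Scan a grid of reduced temperatures for the maximum of dC/dT.

    The derivative is estimated with a central finite difference.
    """
    best_t, best_slope = t_min, 0.0
    for i in range(steps + 1):
        t = t_min + (t_max - t_min) * i / steps
        slope = (einstein_heat_capacity(t + dt)
                 - einstein_heat_capacity(t - dt)) / (2.0 * dt)
        if slope > best_slope:
            best_t, best_slope = t, slope
    return best_t


t_star = fastest_falling_temperature()
print(f"dC/dT is largest near T = {t_star:.3f} theta_E")
```

In this model the steepest point lies well below the characteristic temperature, in line with the abstract's description of a low-temperature counterpart to T_C.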

Atkins' Physical Chemistry

17 Statistical thermodynamics 2: applications

Chapter outline:

Fundamental relations
- 17.1 The thermodynamic functions
- 17.2 The molecular partition function

Using statistical thermodynamics
- 17.3 Mean energies
- 17.4 Heat capacities
- 17.5 Equations of state
- 17.6 Molecular interactions in liquids
- 17.7 Residual entropies
- 17.8 Equilibrium constants

Checklist of key ideas, Further reading, Discussion questions, Exercises, Problems

In this chapter we apply the concepts of statistical thermodynamics to the calculation of chemically significant quantities. First, we establish the relations between thermodynamic functions and partition functions. Next, we show that the molecular partition function can be factorized into contributions from each mode of motion and establish the formulas for the partition functions for translational, rotational, and vibrational modes of motion and the contribution of electronic excitation. These contributions can be calculated from spectroscopic data. Finally, we turn to specific applications, which include the mean energies of modes of motion, the heat capacities of substances, and residual entropies. In the final section, we see how to calculate the equilibrium constant of a reaction and through that calculation understand some of the molecular features that determine the magnitudes of equilibrium constants and their variation with temperature.

A partition function is the bridge between thermodynamics, spectroscopy, and quantum mechanics. Once it is known, a partition function can be used to calculate thermodynamic functions, heat capacities, entropies, and equilibrium constants. It also sheds light on the significance of these properties.

Fundamental relations

In this section we see how to obtain any thermodynamic function once we know the partition function. Then we see how to calculate the molecular partition function, and through that the thermodynamic functions, from spectroscopic data.

Exercise 18.4 the Ideal Gas Law Derived

Ch 18 HW (9/13/2015)

Due: 11:59pm on Monday, September 14, 2015

Exercise 18.4

A 3.00 L tank contains air at 3.00 atm and 20.0 °C. The tank is sealed and cooled until the pressure is 1.00 atm.

Part A: What is the temperature then in degrees Celsius? Assume that the volume of the tank is constant.

ANSWER: T = −175 °C (Correct)

Part B: If the temperature is kept at the value found in Part A and the gas is compressed, what is the volume when the pressure again becomes 3.00 atm?

ANSWER: V = 1.00 L (Correct)

The Ideal Gas Law Derived

The ideal gas law, discovered experimentally, is an equation of state that relates the observable state variables of the gas: pressure, temperature, and density (or quantity per volume):

$pV = N k_B T$ (or $pV = nRT$),

where $N$ is the number of atoms, $n$ is the number of moles, and $R$ and $k_B$ are ideal gas constants such that $R = N_A k_B$, where $N_A$ is Avogadro's number. In this problem, you should use Boltzmann's constant instead of the gas constant $R$.

Remarkably, the pressure does not depend on the mass of the gas particles. Why don't heavier gas particles generate more pressure? This puzzle was explained by making a key assumption about the connection between the microscopic world and the macroscopic temperature $T$. This assumption is called the Equipartition Theorem.

The Equipartition Theorem states that the average energy associated with each degree of freedom in a system at absolute temperature $T$ is $\frac{1}{2} k_B T$, where $k_B = 1.38 \times 10^{-23}$ J/K is Boltzmann's constant.
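
Both parts of Exercise 18.4 follow from pV = N k_B T with N fixed: at constant volume p/T is constant, and at constant temperature pV is constant. The sketch below works through the numbers; the helper names are ours, and the only physical input beyond the exercise is the 273.15 K offset between the Celsius and Kelvin scales.

```python
def celsius_to_kelvin(t_celsius):
    return t_celsius + 273.15


def isochoric_temperature(p1, t1_kelvin, p2):
    """Final absolute temperature at constant volume: p1/T1 = p2/T2."""
    return t1_kelvin * p2 / p1


def isothermal_volume(p1, v1, p2):
    """Final volume at constant temperature: p1*V1 = p2*V2."""
    return v1 * p1 / p2


# Part A: sealed 3.00 L tank cooled from 3.00 atm at 20.0 C down to 1.00 atm.
t2_kelvin = isochoric_temperature(3.00, celsius_to_kelvin(20.0), 1.00)
t2_celsius = t2_kelvin - 273.15
print(f"Part A: T = {t2_celsius:.0f} C")  # Part A: T = -175 C

# Part B: compressed at that temperature from 1.00 atm back to 3.00 atm.
v2 = isothermal_volume(1.00, 3.00, 3.00)
print(f"Part B: V = {v2:.2f} L")  # Part B: V = 1.00 L
```

The temperature must be converted to kelvin before taking the ratio; applying the pressure ratio to 20.0 °C directly would give a wrong answer.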

Lecture 8 - Dec 2, 2013 Thermodynamics of Earth and Cosmos; Overview of the Course

Physics 5D - Lecture 8 - Dec 2, 2013

Thermodynamics of Earth and Cosmos; Overview of the Course

Reminder: the Final Exam is next Wednesday, December 11, 4:00-7:00 pm, here in Thimann Lec 3. You can bring with you two sheets of paper with any formulas or other notes you like. A Practice Final Exam is at http://physics.ucsc.edu/~joel/Phys5D

The Physics Department has elected to use the new eCommons Evaluations System to collect end-of-quarter instructor evaluations from students in our courses. All students in our courses will have access to the evaluation tool in eCommons, whether or not the instructor used an eCommons site for course work. Students will receive an email from the department when the evaluation survey is available. The email will provide information regarding the evaluation as well as a link to the evaluation in eCommons. Students can click the link, log in to eCommons, and find the evaluation to take and submit. Alternatively, students can log in to ecommons.ucsc.edu and click the Evaluation System tool to see currently available evaluations.

20-8 Heat Death of the Universe

If we look at the universe as a whole, it seems inevitable that, as more and more energy is converted to unavailable forms, the total entropy S will increase and the ability to do work anywhere will gradually decrease. This is called the “heat death of the universe”. It was a popular theme in the late 19th century, after physicists had discovered the 2nd Law of Thermodynamics. Lord Kelvin calculated that the sun could only shine for about 20 million years, based on the idea that its energy came from gravitational contraction.

Experimental Determination of Heat Capacities and Their Correlation with Theoretical Predictions Waqas Mahmood, Muhammad Sabieh Anwar, and Wasif Zia

Waqas Mahmood, Muhammad Sabieh Anwar, and Wasif Zia
School of Science and Engineering, Lahore University of Management Sciences (LUMS), Opposite Sector U, D. H. A., Lahore 54792, Pakistan

Citation: Am. J. Phys. 79, 1099 (2011); doi: 10.1119/1.3625869
Published by the American Association of Physics Teachers
(Received 25 January 2011; accepted 23 July 2011)

We discuss an experiment for determining the heat capacities of various solids based on a calorimetric approach where the solid vaporizes a measurable mass of liquid nitrogen. We demonstrate our technique for copper and aluminum, and compare our data with Einstein’s model of independent harmonic oscillators and the more accurate Debye model. We also illustrate an interesting material property, the Verwey transition

Thermodynamic Temperature

Thermodynamic temperature is the absolute measure of temperature and is one of the principal parameters of thermodynamics.

Thermodynamic temperature is defined by the third law of thermodynamics in which the theoretically lowest temperature is the null or zero point. At this point, absolute zero, the particle constituents of matter have minimal motion and can become no colder.[1][2] In the quantum-mechanical description, matter at absolute zero is in its ground state, which is its state of lowest energy. Thermodynamic temperature is often also called absolute temperature, for two reasons: one, proposed by Kelvin, that it does not depend on the properties of a particular material; two, that it refers to an absolute zero according to the properties of the ideal gas.

The International System of Units specifies a particular scale for thermodynamic temperature.

1 Overview

Temperature is a measure of the random submicroscopic motions and vibrations of the particle constituents of matter. These motions comprise the internal energy of a substance. More specifically, the thermodynamic temperature of any bulk quantity of matter is the measure of the average kinetic energy per classical (i.e., non-quantum) degree of freedom of its constituent particles. “Translational motions” are almost always in the classical regime. Translational motions are ordinary, whole-body movements in three-dimensional space in which particles move about and exchange energy in collisions. Figure 1 below shows translational motion in gases; Figure 4 below shows translational motion in solids. Thermodynamic temperature’s null point, absolute zero, is the temperature at which the particle constituents of matter are as close as

Molar Heat Capacity (Cv) for Saturated and Compressed Liquid and Vapor Nitrogen from 65 to 300 K at Pressures to 35 MPa

Volume 96, Number 6, November-December 1991
Journal of Research of the National Institute of Standards and Technology
[J. Res. Natl. Inst. Stand. Technol. 96, 725 (1991)]

J. W. Magee
National Institute of Standards and Technology, Boulder, CO 80303

Molar heat capacities at constant volume ($C_v$) for nitrogen have been measured with an automated adiabatic calorimeter. The temperatures ranged from 65 to 300 K, while pressures were as high as 35 MPa. Calorimetric data were obtained for a total of 276 state conditions on 14 isochores. Extensive results which were obtained in the saturated liquid region ($C_v^{(2)}$ and $C_\sigma$) demonstrate the internal consistency of the $C_v(\rho, T)$ data and also show satisfactory agreement with published heat capacity data. The overall uncertainty of the $C_v$ values ranges from 2% in the vapor to 0.5% in the liquid.

Key words: calorimeter; heat capacity; high pressure; isochoric; liquid; measurement; nitrogen; saturation; vapor.

Accepted: August 30, 1991

Glossary

| Symbol | Meaning |
|---|---|
| $a, b, c, d, e$ | Coefficients for Eq. (7) |
| $C_v$ | Molar heat capacity at constant volume |
| $C_v^0$ | Molar heat capacity in the ideal gas state |
| $C_v^{(2)}$ | Molar heat capacity of a two-phase sample |
| $C_\sigma$ | Molar heat capacity of a saturated liquid sample |
| $V_\mathrm{bomb}$ | Volume of the calorimeter containing sample |
| $V_m$ | Molar volume, dm³·mol⁻¹ |
| $P$ | Pressure, MPa |

In particular, the heat capacity at constant volume ($C_v$) is related to $P(\rho, T)$ by:

$$C_v - C_v^0 = -T \int_0^{\rho} \left(\frac{\partial^2 P}{\partial T^2}\right)_{\rho} \frac{d\rho}{\rho^2} \qquad (1)$$

where $C_v^0$ is the ideal gas heat capacity. Consequently, $C_v$ measurements which cover a wide range of ($P$, $\rho$, $T$) states are beneficial to the development of an accurate equation of state for the

Kinetic Energy of a Free Quantum Brownian Particle

Entropy, Article

Paweł Bialas ¹ and Jerzy Łuczka ¹,²,*

¹ Institute of Physics, University of Silesia, 41-500 Chorzów, Poland
² Silesian Center for Education and Interdisciplinary Research, University of Silesia, 41-500 Chorzów, Poland

Received: 29 December 2017; Accepted: 9 February 2018; Published: 12 February 2018

Abstract: We consider a paradigmatic model of a quantum Brownian particle coupled to a thermostat consisting of harmonic oscillators. In the framework of a generalized Langevin equation, the memory (damping) kernel is assumed to be in the form of exponentially-decaying oscillations. We discuss a quantum counterpart of the equipartition energy theorem for a free Brownian particle in a thermal equilibrium state. We conclude that the average kinetic energy of the Brownian particle is equal to thermally-averaged kinetic energy per one degree of freedom of oscillators of the environment, additionally averaged over all possible oscillators’ frequencies distributed according to some probability density in which details of the particle-environment interaction are present via the parameters of the damping kernel.

Keywords: quantum Brownian motion; kinetic energy; equipartition theorem

1. Introduction

One of the enduring milestones of classical statistical physics is the theorem of the equipartition of energy [1], which states that energy is shared equally amongst all energetically-accessible degrees of freedom of a system and relates average energy to the temperature T of the system. In particular, for each degree of freedom, the average kinetic energy is equal to $E_k = k_B T/2$, where $k_B$ is the Boltzmann constant.
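
To give a sense of scale for the classical result quoted above, the sketch below evaluates the average kinetic energy k_B T/2 per degree of freedom at a given temperature. It covers only the classical equipartition value, not the quantum counterpart the article goes on to derive. The function name is ours, and the Boltzmann constant is the exact value fixed by the 2019 SI redefinition.

```python
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def mean_kinetic_energy(temperature_k, degrees_of_freedom=1):
    """Classical equipartition: k_B*T/2 of kinetic energy per degree of freedom."""
    return 0.5 * degrees_of_freedom * K_B * temperature_k


# One degree of freedom at 300 K, and the three translational ones together.
e_one = mean_kinetic_energy(300.0)
e_three = mean_kinetic_energy(300.0, degrees_of_freedom=3)
print(f"per degree of freedom: {e_one:.3e} J")
print(f"three translational:   {e_three:.3e} J")
```

At room temperature the energy per degree of freedom is of order 10⁻²¹ J, which is why thermal energies are often quoted in electronvolts instead.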