Bayesian Filters State Estimation on Directed Graphs: on the Toronto Subway System

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Toronto Subway System, ON

Canadian Geotechnical Society Canadian Geotechnical Achievements 2017 Toronto Subway System Geotechnical Investigation and Design of Tunnels Geographical location Key References Wong, JW. 1981. Geotechnical/ Toronto, Ontario geological aspects of the Toronto subway system. Toronto Transit Commission. When it began or was completed Walters, DL, Main, A, Palmer, S, Construction began in 1949 and continues today. Westland, J, and Bihendi, H. 2011. Managing subsurface risk for Toronto- York Spadina Subway extension project. Why a Canadian geotechnical achievement? 2011 Pan-Am and CGS Geotechnical Conference. Construction of the Toronto Transit Commission (TTC) subway system, the first in Canada, began in 1949 and currently consists Photograph and Map of four subway lines. In total there are currently approximately 68 km of subway tracks and 69 stations. Over one million trips are taken daily on the TTC during weekdays. The subway system has been expanded in several stages, from 1954, when the first line (the Yonge Line) was opened, to 2002, when the most recently completed line (the Sheppard Line) was opened. Currently the Toronto-York Spadina Subway Extension is under construction. The subway tunnels have been constructed using a number of different technologies over the years including: open cut excavation, open/closed shield tunneling under ambient air pressure and compressed air, hand mining and earth pressurized tunnel boring machines. The subway tunnels range in design from reinforced concrete box structures to approximately 6 m diameter precast concrete segmental liners or cast iron segmental liners. Looking east inside newly constructed TTC subway The tunnels have been advanced through various geological tunnel, 1968. (City of Toronto Archives) formations: from hard glacial tills to saturated alluvial sands and silts, and from glaciolacustrine clays to shale bedrock. -

Rapid Transit in Toronto Levyrapidtransit.Ca TABLE of CONTENTS

The Neptis Foundation has collaborated with Edward J. Levy to publish this history of rapid transit proposals for the City of Toronto. Given Neptis’s focus on regional issues, we have supported Levy’s work because it demon- strates clearly that regional rapid transit cannot function eff ectively without a well-designed network at the core of the region. Toronto does not yet have such a network, as you will discover through the maps and historical photographs in this interactive web-book. We hope the material will contribute to ongoing debates on the need to create such a network. This web-book would not been produced without the vital eff orts of Philippa Campsie and Brent Gilliard, who have worked with Mr. Levy over two years to organize, edit, and present the volumes of text and illustrations. 1 Rapid Transit in Toronto levyrapidtransit.ca TABLE OF CONTENTS 6 INTRODUCTION 7 About this Book 9 Edward J. Levy 11 A Note from the Neptis Foundation 13 Author’s Note 16 Author’s Guiding Principle: The Need for a Network 18 Executive Summary 24 PART ONE: EARLY PLANNING FOR RAPID TRANSIT 1909 – 1945 CHAPTER 1: THE BEGINNING OF RAPID TRANSIT PLANNING IN TORONTO 25 1.0 Summary 26 1.1 The Story Begins 29 1.2 The First Subway Proposal 32 1.3 The Jacobs & Davies Report: Prescient but Premature 34 1.4 Putting the Proposal in Context CHAPTER 2: “The Rapid Transit System of the Future” and a Look Ahead, 1911 – 1913 36 2.0 Summary 37 2.1 The Evolving Vision, 1911 40 2.2 The Arnold Report: The Subway Alternative, 1912 44 2.3 Crossing the Valley CHAPTER 3: R.C. -

TTC Typography History

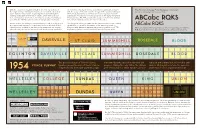

With the exception of Eglinton Station, 11 of the 12 stations of The intention of using Helvetica and Univers is unknown, however The Toronto Subway Font (Designer Unknown) the original Yonge Subway line have been renovated extensively. with the usage of the latter on the design of the Spadina Subway in Based on Futura by Paul Renner (1928) Some stations retained the original typefaces but with tighter 1978, it may have been an internal decision to try and assimilate tracking and subtle differences in weight, while other stations subsequent renovations of existing stations in the aging Yonge and were renovated so poorly there no longer is a sense of simplicity University lines. The TTC avoided the usage of the Toronto Subway seen with the 1954 designs in terms of typographical harmony. font on new subway stations for over two decades. ABCabc RQKS Queen Station, for example, used Helvetica (LT Std 75 Bold) in such The Sheppard Subway in 2002 saw the return of the Toronto Subway an irresponsible manner; it is repulsively inconsistent with all the typeface as it is used for the names of the stations posted on ABCabc RQKS other stations, and due to the renovators preserving the original platfrom level. Helvetica became the primary typeface for all TTC There are subtle differences between the two typefaces, notably the glass tile trim, the font weight itself looks botched and unsuitable. wayfinding signages and informational material system-wide. R, Q, K, and S; most have different terminals, spines, and junctions. ST CLAIR SUMMERHILL BLOOR DANGER DA N GER Danger DO NOT ENTER Do Not Enter Do Not Enter DAVISVILLE ST CL AIR SUMMERHILL ROSEDALE BLOOR EGLINTON DAVISVILLE ST CLAIR SUMMERHILL ROSEDALE BLOOR EGLINTON DAVISVILLE ST CLAIR SUMMERHILL ROSEDALE BLOOR The specially-designed Toronto Subway that embodied the spirit of modernism and replaced with a brutal mix of Helvetica and YONGE SUBWAY typeface graced the walls of the 12 stations, progress. -

Relief Line Update

Relief Line Update Mathieu Goetzke, Vice-President, Planning David Phalp, Manager, Rapid Transit Planning FEBRUARY 7, 2019 EXECUTIVE SUMMARY • The Relief Line South alignment identified by the City of Toronto as preferred, has a Benefit Cost Ratio (BCR) above 1.0, demonstrating the project’s value; however, since it is close to 1.0, it is highly sensitive to costs, so more detailed design work and procurement method choice will be of importance to maintain or improve this initial BCR. • Forecasts suggest that Relief Line South will attract ridership to unequivocally justify subway-level service; transit-oriented development opportunities can further boost ridership. • Transit network forecasts show that Relief Line South needs to be in operation before the Yonge North Subway Extension. Relief Line North provides further crowding relief for Line 1. RELIEF LINE UPDATE 2 SUBWAY EXPANSION - PROJECT STATUS Both Relief Line North and South and the Yonge North Subway Extension are priority projects included in the 2041 Regional Transportation Plan. RELIEF LINE UPDATE 3 RELIEF LINE SOUTH: Initial Business Case Alignments Evaluated • Metrolinx is developing an Initial Business Case on Relief Line South, evaluating six alignments according to the Metrolinx Business Case Guidance and the Auditor General’s 2018 recommendations • Toronto City Council approved the advancement of alignment “A” (Pape- Queen via Carlaw & Eastern) • Statement of Completion of the Transit Project Assessment Process (TPAP) received October 24, 2018. RELIEF LINE UPDATE 4 RELIEF -

Toronto Subway Air Quality Health Impact Assessment

Toronto Subway Air Quality Health Impact Assessment Prepared for: Toronto Public Health City of Toronto November 15, 2019 Toronto Subway Air Quality Health Impact Assessment Project Summary Introduction Toronto’s subway is a critical part of the Toronto Transit Commission’s (TTC) public transportation network. First opened in 1954, it spans over 77 km of track and 75 stations across the city. On an average weekday over 1,400,000 customer-trips are taken on the subway. In 2017, Health Canada reported levels of air pollution in the TTC subway system that were elevated compared with outdoor air. The Toronto Board of Health requested that Toronto Public Health (TPH) oversee an independent study to understand the potential health impacts of air quality issues for passengers in the Toronto subway system - The Toronto Subway Air Quality Health Impact Assessment (TSAQ HIA). The TSAQ HIA takes a holistic approach to assessing how subway use may positively and/or negatively impact the health and well-being of Torontonians. The HIA used a human health risk assessment (HHRA) approach to calculate the potential health risks from exposure to air pollutants in the subway, and the results of the HHRA were incorporated into the HIA. The TSAQ HIA sought to answer three overarching questions: 1. What is the potential health risk to current passengers from air pollutants in the subway system? 2. What are the potential health benefits to mitigation measures that could be implemented to improve air quality in the TTC subway system? 3. What is the overall impact of the TTC’s subway system on the health and well-being of Torontonians? Results of the Toronto Subway Air Qualty Health Impact Assessment The TSAQ HIA assessed the overall impact of subway use on the health and well-being of Torontonians. -

Service Summary September 1, 2019 to October 12, 2019

Service Summary September 1, 2019 to October 12, 2019 Data compiled by the Strategy and Service Planning Department SERVICE SUMMARY – Introduction Abbreviations Avg spd..... Average speed (km/h) NB ............. Northbound This is a summary of all transit service operated by the Toronto Transit Commission for the period Dep ........... Departure SB ............. Southbound indicated. All rapid transit, streetcar, bus, and community bus routes and services are listed. The RT ............. Round trip EB ............. Eastbound summary identifies the routes, gives the names and destinations, the garage or carhouse from which Term ......... Terminal time WB ............ Westbound Veh type ... Vehicle type the service is operated, the characteristics of the service, and the times of the first and last trips on each route. The headway operated on each route is shown, together with the combined or average Division abbreviations headway on the route, if more than one branch is operated. The number and type of vehicles Arw ........... Arrow Road Mal ............ Malvern Rus ............ Russell/Leslie operated on the route are listed, as well as the round-trip driving time, the total terminal time, and the Bir ............. Birchmount MtD ........... Mount Dennis Wil ............. Wilson Bus average speed of the route (driving time only, not including terminal time). DanSub..... Danforth Subway Qsy ........... Queensway WilSub ....... Wilson Subway The first and last trip times shown are the departure times for the first or last trip which covers the Egl ............ Eglinton Ron ........... Roncesvalles W-T ........... Wheel-Trans entire branch. In some cases, earlier or later trips are operated which cover only part of the routing, and the times for these trips are not shown. -

Assessment of Provincial Proposals Line 2 East Extension

EX9.1 Attachment 5 – Assessment of Provincial Proposals Line 2 East Extension As directed by City Council in April 2019, City and TTC staff have assessed the Province’s proposed 3-stop Line 2 East Extension. The details of this assessment are provided in this attachment. 1. Project Summary 1.1 Project Description The Line 2 East Extension (L2EE), as proposed by the Province, is an approximately 8 kilometre underground extension of Line 2 Bloor-Danforth from Kennedy Station that will have three stations – at Lawrence and McCowan, Scarborough Centre and Sheppard and McCowan, as shown in Figure 1. The Province's plan for the L2EE is similar to what was being planned by the City and TTC prior to 2016. Figure 1 - Line 2 East Extension Reference Map Note: Map produced by City/TTC based on current understanding of project Attachment 5 – Assessment of Line 2 East Extension Page 1 of 9 As proposed, the extension will be fully integrated with the existing Line 2 and have through service at Kennedy Station. A turn-back may be included east of Kennedy Station to enable reduced service to Scarborough Centre, subject to demand and service standards. The extension will require approximately seven additional six-car, 138-metre-long trains to provide the service. The trains would be interoperable with the other trains on Line 2. With the station at Sheppard and McCowan supporting storage of up to six trains, there is sufficient storage and maintenance capacity existing at the TTC’s Line 2 storage and maintenance facilities to accommodate this increase in fleet size. -

Eglinton Crosstown West Extension Initial Business Case February 2020

Eglinton Crosstown West Extension Initial Business Case February 2020 Eglinton Crosstown West Extension Initial Business Case February 2020 Contents Executive Summary 1 Scope 1 Method of Analysis 1 Findings 3 Strategic Case 3 Economic Case 3 Financial Case 4 Deliverability and Operations Case 4 Summary 4 Introduction 7 Background 8 Business Case Overview 10 Problem Statement 13 Case for Change 14 Problem Statement 14 Opportunity for Change 15 Key Drivers 16 Strategic Value 18 iv Investment Options 24 Introduction 25 Study Area 25 Options Development 25 Options for Analysis 27 Assumptions for Analysis and Travel Demand Modelling 33 Strategic Case 34 Introduction 35 Strategic Objective 1 – Connect More Places with Better Frequent Rapid Transit 38 Criterion 1: To provide high quality transit to more people in more places 38 Criterion 2: To address the connectivity gap between Eglinton Crosstown LRT and Transitway BRT 40 Strategic Objective 2 – Improve Transit’s Convenience and Attractiveness 42 Criterion 2: To provide more reliable, safe and enjoyable travel experience 42 Criterion 2: To boost transit use and attractiveness among local residents and workers 45 Strategic Objective 3 – Promote Healthier and More Sustainable Travel Behaviours 52 Criterion 1: To improve liveability through reduction in traffic delays, auto dependency and air pollution 52 Criterion 2: To encourage use of active modes to access stations 53 v Strategic Objective 4 – Encourage Transit-Supportive Development 57 Criterion 1: Compatibility with Existing Neighbourhood -

Model City Hall 2018 City Planning and Sustainability Selina Hsu and Sajid Mahmud

Model City Hall 2018 City Planning and Sustainability Selina Hsu and Sajid Mahmud Greetings Delegates, It is our pleasure to welcome you to Model City Hall 2018. As the world changes more rapidly, we must step up to address the many old and new issues that will affect our way of life. Toronto has long been lumbering and suffering with the congestion on the Line 1 Yonge-University subway; we are under pressure to make our urban environments more sustainable and healthy for ourselves and future generations; and we are look to problems of the future, such as great climate change and natural disasters. Things care constantly changing in our city, and although shovels are in the ground on Eglinton and we have weathered the storms before, the Torontonians of tomorrow must work together to improve the place we call home. We look forward to reading your position papers, listening to your thoughts and ideas, and reading the resolutions that you put forth to deal with these pressing issues. We will be judging delegates and selecting the Best Delegate, Outstanding Delegate, and Honorable Mention based on knowledge, diplomacy, problem-solving skills, and leadership qualities. It is encouraged that delegates have done ample research so that they can offer interesting solutions and generate dynamic, interesting debate. We hope to provide you all with an enjoyable experience that will give you some perspective. Welcome to Model City Hall 2018! With warmest regards, Selina Hsu and Sajid Mahmud Chairs of the City Planning and Sustainability Committee 1 Model City Hall 2018 City Planning and Sustainability Selina Hsu and Sajid Mahmud TOPIC 1: Livable and Sustainable Streets Around the world, there is an increasing emphasis on developing livable and sustainable city streets. -

Applying Life Cycle Assessment to Analyze the Environmental Sustainability of Public Transit Modes for the City of Toronto

Applying life cycle assessment to analyze the environmental sustainability of public transit modes for the City of Toronto by Ashton Ruby Taylor A thesis submitted to the Department of Geography & Planning in conformity with the requirements for the Degree of Master of Science Queen’s University Kingston, Ontario, Canada September, 2016 Copyright © Ashton Ruby Taylor, 2016 Abstract One challenge related to transit planning is selecting the appropriate mode: bus, light rail transit (LRT), regional express rail (RER), or subway. This project uses data from life cycle assessment to develop a tool to measure energy requirements for different modes of transit, on a per passenger-kilometer basis. For each of the four transit modes listed, a range of energy requirements associated with different vehicle models and manufacturers was developed. The tool demonstrated that there are distinct ranges where specific transit modes are the best choice. Diesel buses are the clear best choice from 7-51 passengers, LRTs make the most sense from 201-427 passengers, and subways are the best choice above 918 passengers. There are a number of other passenger loading ranges where more than one transit mode makes sense; in particular, LRT and RER represent very energy-efficient options for ridership ranging from 200 to 900 passengers. The tool developed in the thesis was used to analyze the Bloor-Danforth subway line in Toronto using estimated ridership for weekday morning peak hours. It was found that ridership across the line is for the most part actually insufficient to justify subways over LRTs or RER. This suggests that extensions to the existing Bloor-Danforth line should consider LRT options, which could service the passenger loads at the ends of the line with far greater energy efficiency. -

Ontario Line Early Works - Presentation by Metrolinx

REPORT FOR ACTION Ontario Line Early Works - Presentation by Metrolinx Date: February 4, 2021 To: The Board of Governors of Exhibition Place From: Don Boyle, Chief Executive Officer Wards: All Wards SUMMARY Announced by the Province of Ontario in 2019, the proposed Ontario Line is one of four priority transit projects Metrolinx is leading for the Greater Toronto Area (GTA). The line will be the largest single expansion in Toronto’s subway history, helping to ease congestion on existing transit lines throughout the city, and bring transit to underserviced neighbourhoods. The Ontario Line will bring nearly 16 kilometres of much-needed subway service to Toronto to make it faster and easier for hundreds of thousands of people to get where they need to be each day. The line will stretch across the city, from the Ontario Science Centre in the northeast to Exhibition Place in the southwest. Current plans for the Ontario Line include 15 stations including 6 interchange stations, and over 40 new connections to GO train lines and existing subway, streetcar, and bus lines. Staff form Metrolinx will be attending the Board meeting of February 19, 2021 to do a power point presentation on the design and progress of the Ontario Line development to date for the benefit of the Board Members. RECOMMENDATIONS The Chief Executive Officer recommends that: 1.The Board receive this report for information. Ontario Early Line Works - Presentation by Metrolinx Page 1 of 3 FINANCIAL IMPACT There are no financial implications associated with this report. DECISION HISTORY The Exhibition Place 2017-2019 Strategic Plan has a focus on Public Space and Infrastructure, which includes an objective to identify the linkages to future public transit and road networks. -

Canada Rail Opportunities Scoping Report Preface

01 Canada Rail Opportunities Canada Rail Opportunities Scoping Report Preface Acknowledgements Photo and image credits The authors would like to thank the Agence Métropolitaine de Transport following organisations for their help and British Columbia Ministry of Transportation support in the creation of this publication: and Infrastructure Agence Métropolitaine de Transport BC Transit Alberta Ministry of Transport Calgary Transit Alberta High Speed Rail City of Brampton ARUP City of Hamilton Balfour Beatty City of Mississauga Bombardier City of Ottawa Calgary Transit Edmonton Transit Canadian National Railway Helen Hemmingsen, UKTI Toronto Canadian Urban Transit Association Metrolinx Edmonton Transit OC Transpo GO Transit Sasha Musij, UKTI Calgary Metrolinx Société de Transport de Montréal RailTerm TransLink SNC Lavalin Toronto Transit Commission Toronto Transit Commission Wikimedia Commons Wikipedia Front cover image: SkyTrain in Richmond, Vancouver Canada Rail Opportunities Contents Preface Foreword 09 About UK Trade & Investment 10 High Value Opportunities Programme 11 Executive Summary 12 1.0 Introduction 14 2.0 Background on Canada 15 2.1 Macro Economic Review 16 2.2 Public-Private Partnerships 18 3.0 Overview of the Canadian Rail Sector 20 4.0 Review of Urban Transit Operations and Opportunities by Province 21 4.1 Summary Table of Existing Urban Transit Rail Infrastructure and Operations 22 4.2 Summary Table of Key Project Opportunities 24 4.3 Ontario 26 4.4 Québec 33 4.5 Alberta 37 4.6 British Columbia 41 5.0 In-Market suppliers 45 5.1 Contractors 45 5.2 Systems and Rolling Stock 48 5.3 Consultants 49 6.0 Concluding Remarks 51 7.0 Annexes 52 7.1 Doing Business in Canada 52 7.2 Abbreviations 53 7.3 Bibliography 54 7.4 List of Reference Websites 56 7.5 How can UKTI Help UK Organisations Succeed in Canada 58 Contact UKTI 59 04 Canada Rail Opportunities About the Authors David Bill Helen Hemmingsen David is the International Helen Hemmingsen is a Trade Officer Development Director for the UK with the British Consulate General Railway Industry Association (RIA).