A Bayesian Surrogate Constitutive Model to Estimate Failure Probability of Rubber-Like Materials

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Engineering Viscoelasticity

ENGINEERING VISCOELASTICITY. David Roylance, Department of Materials Science and Engineering, Massachusetts Institute of Technology, Cambridge, MA 02139. October 24, 2001.

1 Introduction

This document is intended to outline an important aspect of the mechanical response of polymers and polymer-matrix composites: the field of linear viscoelasticity. The topics included here are aimed at providing an instructional introduction to this large and elegant subject, and should not be taken as a thorough or comprehensive treatment. The references appearing either as footnotes to the text or listed separately at the end of the notes should be consulted for more thorough coverage. Viscoelastic response is often used as a probe in polymer science, since it is sensitive to the material’s chemistry and microstructure. The concepts and techniques presented here are important for this purpose, but the principal objective of this document is to demonstrate how linear viscoelasticity can be incorporated into the general theory of mechanics of materials, so that structures containing viscoelastic components can be designed and analyzed. While not all polymers are viscoelastic to any important practical extent, and even fewer are linearly viscoelastic, this theory provides a usable engineering approximation for many applications in polymer and composites engineering. Even in instances requiring more elaborate treatments, the linear viscoelastic theory is a useful starting point.

2 Molecular Mechanisms

When subjected to an applied stress, polymers may deform by either or both of two fundamentally different atomistic mechanisms. The lengths and angles of the chemical bonds connecting the atoms may distort, moving the atoms to new positions of greater internal energy.

Viscoelasticity of Filled Elastomers: Determination of Surface-Immobilized Components and Their Role in the Reinforcement of SBR-Silica Nanocomposites

Viscoelasticity of Filled Elastomers: Determination of Surface-Immobilized Components and their Role in the Reinforcement of SBR-Silica Nanocomposites. Dissertation for the award of the academic degree doctor rerum naturalium (Dr. rer. nat.), approved by the Faculty of Natural Sciences II, Institute of Physics, Martin-Luther-Universität Halle-Wittenberg, submitted by M. Sc. Anas Mujtaba, born 03.04.1980 in Lahore, Pakistan. Halle (Saale), January 15th, 2014. Reviewers: 1. Prof. Dr. Thomas Thurn-Albrecht 2. Prof. Dr. Manfred Klüppel 3. Prof. Dr. Rene Androsch. Public defense: July 3rd, 2014. In loving memory of my beloved Sister Rabbia: “She will be in my Heart & by my Side for the Rest of my Life”

Contents
1 Introduction
2 Theoretical Background
2.1 Elastomers
2.1.1 Fundamental Theories on Rubber Elasticity
2.2 Fillers
2.2.1 Carbon Black
2.2.2 Silica
2.3 Filled Rubber Reinforcement
2.3.1 Occluded Rubber
2.3.2 Payne Effect
2.3.3 The Kraus Model for the Strain-Softening Effect
2.3.4 Filler Network Reinforcement
3 Experimental Methods
3.1 Dynamic Mechanical Analysis
3.1.1 Temperature-dependent Measurement (Temperature Sweeps)
3.1.2 Time-Temperature Superposition (Master Curves)
3.1.3 Strain-dependent Measurement (Payne Effect)
3.2 Low-field NMR
3.2.1 Theoretical Concept
3.2.2 Experimental Details
4 Optimizing the Tire Tread
4.1 Relation Between Friction and the Mechanical Properties of Tire Rubbers
4.2 Usage of tan δ As Loss Parameter

Elasticity and Viscoelasticity

CHAPTER 2 Elasticity and Viscoelasticity. CHAPTER 2.1 Introduction to Elasticity and Viscoelasticity. JEAN LEMAITRE, Université Paris 6, LMT-Cachan, 61 avenue du Président Wilson, 94235 Cachan Cedex, France.

For all solid materials there is a domain in stress space in which strains are reversible due to small relative movements of atoms. For many materials like metals, ceramics, concrete, wood and polymers, in a small range of strains, the hypotheses of isotropy and linearity are good enough for many engineering purposes. Then the classical Hooke’s law of elasticity applies. It can be derived from a quadratic form of the state potential, depending on two parameters characteristic of each material: the Young’s modulus $E$ and the Poisson’s ratio $\nu$.

$$\psi^{*} = \frac{1}{2\rho}\, A_{ijkl}(E,\nu)\, \sigma_{ij}\, \sigma_{kl} \qquad (1)$$

$$\varepsilon_{ij} = \rho\, \frac{\partial \psi^{*}}{\partial \sigma_{ij}} = \frac{1+\nu}{E}\, \sigma_{ij} - \frac{\nu}{E}\, \sigma_{kk}\, \delta_{ij} \qquad (2)$$

$E$ and $\nu$ are identified from tensile tests either in statics or dynamics. A great deal of accuracy is needed in the measurement of the longitudinal and transverse strains ($\delta\varepsilon \approx \pm 10^{-6}$ in absolute value). When structural calculations are performed under the approximation of plane stress (thin sheets) or plane strain (thick sheets), it is convenient to write these conditions in the constitutive equation.

Plane stress ($\sigma_{33} = \sigma_{13} = \sigma_{23} = 0$):

$$\begin{bmatrix} \varepsilon_{11} \\ \varepsilon_{22} \\ \varepsilon_{12} \end{bmatrix} = \begin{bmatrix} \dfrac{1}{E} & -\dfrac{\nu}{E} & 0 \\ & \dfrac{1}{E} & 0 \\ \text{Sym} & & \dfrac{1+\nu}{E} \end{bmatrix} \begin{bmatrix} \sigma_{11} \\ \sigma_{22} \\ \sigma_{12} \end{bmatrix} \qquad (3)$$

Handbook of Materials Behavior Models. Copyright © 2001 by Academic Press.
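As a rough illustration of the plane-stress relation (3) in the excerpt above, the following Python sketch computes the in-plane strains from the in-plane stresses. The function name `plane_stress_strains` and the sample values of E and ν are assumptions chosen for illustration; they do not come from the excerpt.

```python
def plane_stress_strains(s11, s22, s12, E, nu):
    """In-plane strains for isotropic linear elasticity under plane stress.

    Implements the compliance form of Hooke's law quoted as Eq. (3):
    eps11 = (s11 - nu*s22)/E, eps22 = (s22 - nu*s11)/E,
    eps12 = (1 + nu)*s12/E (tensor shear strain, not engineering shear).
    """
    e11 = (s11 - nu * s22) / E
    e22 = (s22 - nu * s11) / E
    e12 = (1.0 + nu) * s12 / E
    return e11, e22, e12


# Hypothetical example: uniaxial tension of 100 MPa along direction 1,
# with assumed steel-like constants E = 200 GPa and nu = 0.3.
e11, e22, e12 = plane_stress_strains(100e6, 0.0, 0.0, E=200e9, nu=0.3)
print(e11, e22, e12)
```

With a purely uniaxial stress, the transverse strain comes out as −ν times the longitudinal strain, which is the usual reading of Poisson's ratio.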

The Thermodynamic Properties of Elastomers: Equation of State and Molecular Structure

CH 351L Wet Lab 4. The Thermodynamic Properties of Elastomers: Equation of State and Molecular Structure.

Objective: To determine the macroscopic thermodynamic equation of state of an elastomer, and relate it to its microscopic molecular properties.

Introduction: We are all familiar with the very useful properties of such objects as rubber bands, solid rubber balls, and tires. Materials such as these, which are capable of undergoing large reversible extensions and compressions, are called elastomers. An example of such a material is natural rubber, obtained from the plant Hevea brasiliensis. An elastomer has rather unusual physical properties; for example, an ordinary elastic band can be stretched up to 15 times its original length and then be restored to its original size. Although we might initially consider elastomers to be solids, many have isothermal compressibilities comparable with that of liquids (e.g. toluene), about 10⁻⁴ atm⁻¹ (compared with solids such as polystyrene or aluminum, which have values of ~10⁻⁶ atm⁻¹). Certain evidence suggests that an elastomer is a disordered "solid," i.e., a glass, that cannot flow as a result of internal, structural restrictions. The reversible deformability of an elastomer is reminiscent of a gas. In fact the term elastic was first used by Robert Boyle (1660) in describing a gas, "There is a spring or elastical power in the air in which we live." In this experiment, you will encounter certain formal thermodynamic similarities between an elastomer and a gas. One of the rather dramatic and anomalous properties of an elastomer is that once brought to an extended form, it contracts upon heating.

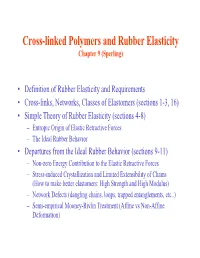

Cross-Linked Polymers and Rubber Elasticity Chapter 9 (Sperling)

Cross-linked Polymers and Rubber Elasticity, Chapter 9 (Sperling)
• Definition of Rubber Elasticity and Requirements
• Cross-links, Networks, Classes of Elastomers (sections 1-3, 16)
• Simple Theory of Rubber Elasticity (sections 4-8)
  – Entropic Origin of Elastic Retractive Forces
  – The Ideal Rubber Behavior
• Departures from the Ideal Rubber Behavior (sections 9-11)
  – Non-zero Energy Contribution to the Elastic Retractive Forces
  – Stress-induced Crystallization and Limited Extensibility of Chains (How to make better elastomers: High Strength and High Modulus)
  – Network Defects (dangling chains, loops, trapped entanglements, etc.)
  – Semi-empirical Mooney-Rivlin Treatment (Affine vs Non-Affine Deformation)

Definition of Rubber Elasticity and Requirements
• Definition of Rubber Elasticity: Very large deformability with complete recoverability.
• Molecular Requirements:
  – Material must consist of polymer chains. Need to change conformation and extension under stress.
  – Polymer chains must be highly flexible. Need to access conformational changes (not with glassy, crystalline, or stiff materials).
  – Polymer chains must be joined in a network structure. Need to avoid irreversible chain slippage (permanent strain). One out of 100 monomers must connect two different chains. Connections (covalent bond, crystallite, glassy domain in block copolymer).

Cross-links, Networks and Classes of Elastomers
• Chemical Cross-linking Process: Sol-Gel or Percolation Transition
• Gel Characteristics:
  – Infinite Viscosity
  – Non-zero Modulus
  – One giant Molecule
  – Solid

Elastomeric Materials

ELASTOMERIC MATERIALS. TAMPERE UNIVERSITY OF TECHNOLOGY, THE LABORATORY OF PLASTICS AND ELASTOMER TECHNOLOGY. Kalle Hanhi, Minna Poikelispää, Hanna-Mari Tirilä.

Summary: In this course the students will get the basic information on different grades of rubber and thermoplastic elastomers. The chapters focus on the following subjects: Introduction; Rubber types; Rubber blends; Thermoplastic elastomers; Processing; Design of elastomeric products; Recycling and reuse of elastomeric materials.

The first chapter briefly introduces the history of rubbers. In addition, it covers definitions, the manufacturing of rubbers and the general properties of elastomers. This chapter gives students the grounding to continue their studies. The second chapter focuses on different grades of elastomers. It describes the structure, properties and applications of the most commonly used rubbers. Some special rubbers are also covered. The most important rubber type is natural rubber (NR); other generally used rubbers are polyisoprene rubber, which is a synthetic version of NR, and styrene-butadiene rubber, which is the most important kind of synthetic rubber. Rubbers always contain some additives. The following chapter introduces the additives used in rubbers and some common rubber recipes. An important chapter is Thermoplastic elastomers. Thermoplastic elastomers are a polymer group whose main properties are elasticity and easy processability. This chapter introduces the groups of thermoplastic elastomers and their properties. It also compares the properties of different thermoplastic elastomers. The chapter Processing gives a short survey of the processing of rubbers and thermoplastic elastomers. The following chapter covers the design of elastomeric products. It gives the most important criteria for choosing an elastomer. In addition, the dimensioning and shaping of elastomeric products are discussed. The last chapter, Recycling and reuse of elastomeric materials, introduces recycling methods.

Rubber as an Aid to Teach Thermodynamics: The Discovery by a Blind Scientist

GENERAL ARTICLE. Rubber as an Aid to Teach Thermodynamics: The Discovery by a Blind Scientist. Geethamma V G and Sampath V.

The behaviour of rubber differs from that of conventional materials. Rubber heats up on stretching and cools on retraction. Also, stretched rubber shrinks on heating (thermoelastic shrinkage) while a stretched metal elongates. The elastic recovery of rubber is due to its tendency to maximize the entropy. The same property also causes the thermoelastic shrinkage. Metallic materials possess energy elasticity, while ideal rubber possesses entropy elasticity. The thermodynamic behaviour of rubber is similar to that of gaseous materials. Hence rubber can be used as an aid for teaching thermodynamics.

Geethamma V G was a Fulbright Fellow at University of Illinois, USA and a Royal Society International Postdoctoral Fellow at Cavendish Lab, University of Cambridge, UK. She was also a Young Scientist Awardee.

V Sampath is presently a Professor of Metallurgical and Materials Engg. at IIT Madras. He holds a PhD from IISc, Bengaluru and has three decades of research and

1. Gough–Joule Effect

John Gough was not born blind. But by a sheer quirk of fate, he lost his eyesight due to smallpox before turning three. However, his senses of touch and hearing were intact, and he was bestowed with a sharp inquisitive mind and adequate skills for experimentation. He tended to be philosophical too. John Dalton, a well known British scientist, who proposed the atomic theory, helped Gough by reading out to and writing for him. He had great admiration for Gough.

In 1805, Gough observed two important properties of rubber [1].

Rubber and Rubber Balloons

I. Müller, P. Strehlow. Rubber and Rubber Balloons: Paradigms of Thermodynamics. Springer.

Contents
1 Stability of Two Rubber Balloons
2 Kinetic Theory of Rubber
2.1 Rubber Elasticity is "Entropy Induced"
2.2 Entropy of a Rubber Molecule
2.3 The Rubber Molecule with Arbitrarily Oriented Links
2.4 Entropy and Disorder, Entropic Elasticity
2.5 Entropy of a Rubber Bar
2.6 Uniaxial Force-Stretch Curve
2.7 Biaxial Loading
2.8 Application to a Balloon
2.9 Criticism of the Kinetic Theory of Rubber
3 Non-linear Elasticity
3.1 Deformation Gradient and Stress-Stretch Relation
3.2 Material Symmetry and Isotropy
3.3 Material Objectivity
3.4 Representation of an Isotropic Function
3.5 Incompressibility. Mooney-Rivlin Material
3.6 Biaxial Stretching of a Mooney-Rivlin Membrane
3.7 Free Energy
3.8 Rubber Balloon with the Mooney-Rivlin Equation
3.9 Surface Tension
3.10 Cylindrical Balloon
3.11 Treloar's Biaxial Experiment
4 Biaxial Stretching of a Rubber Membrane - A Paradigm of Stability, Symmetry-Breaking, and Hysteresis
4.1 Load-Deformation Relations of a Biaxially Loaded Mooney-Rivlin Membrane
4.2 Deformation of a Square Membrane Under Symmetric Loading
4.3 Stability Criteria
4.4 Minimal Free Energy
4.5 Free Enthalpy, Gibbs Free Energy
4.6 Symmetry Breaking
4.7 Hysteresis
4.8 Non-monotone Force-Stretch Relation
4.9 Hysteresis and Breakthrough.

Elasticity: Thermodynamic Treatment André Zaoui, Claude Stolz

Elasticity: Thermodynamic Treatment. André Zaoui, Claude Stolz.

To cite this version: André Zaoui, Claude Stolz. Elasticity: Thermodynamic Treatment. Encyclopedia of Materials: Science and Technology, Elsevier Science, pp.2445-2449, 2000, 10.1016/B0-08-043152-6/00436-8. hal-00112293. HAL Id: hal-00112293, https://hal.archives-ouvertes.fr/hal-00112293. Submitted on 31 Oct 2018.

The elastic behavior of materials is usually described by direct stress–strain relationships which use the fact that there is a one-to-one connection between the strain and stress tensors ε and σ; some mechanical elastic potentials can then be defined so as to express the stress tensor as the derivative of the strain potential with respect to the strain tensor, or conversely the latter as the derivative of the complementary (stress) potential with respect to the former.

[...] A similar treatment can be applied to the second principle that relates to the existence of an absolute temperature, T, and of a thermodynamic function, the entropy, obeying a constitutive inequality ensuring the positivity of the dissipated energy. This leads to the following local inequality:

$$\rho\,\dot{s} + \operatorname{div}\!\left(\frac{q}{T}\right) - \frac{r}{T} \ge 0 \qquad (2)$$

Lecture 11: Rubber Elasticity

Networks & Gels, Lecture 11: Rubber elasticity. Prof. Mark W. Tibbitt, ETH Zürich, 02 April 2019.

1 Suggested reading

• Polymer Physics, Rubinstein and Colby: Chapter 7

2 Thermodynamics of rubber

In the last section, we considered cross-linking and gelation reactions that formed polymer networks and gels. As the reaction proceeds sufficiently far beyond the gel point, $p_c$, most of the polymer content will be included in the gel as a single macroscopic network polymer ($P_{gel} \approx 1$). These soft solids are a broadly useful class of materials and as engineers we are interested in understanding their mechanical properties. A class of soft polymer networks that has been studied in detail is rubbers, which comprise many commodity products such as rubber bands, car tires, gaskets, and adhesives. These materials can undergo large elastic deformations without breaking; here, we explore the physics that enables these extremely useful properties. As we will see, entropic elasticity of polymer chains is the origin of these fascinating mechanical properties.

Let us consider a polymer network. Thermodynamics describes the change in internal energy of this system as the sum of all changes in energy. This includes the heat added to the system $T\,dS$, the work done to change the volume of the polymer network $-p\,dV$, and the work done to deform the polymer network $f\,dL$, where $f$ is the force of deformation and $L$ is the deformation length.

$$dU = T\,dS - p\,dV + f\,dL \qquad (1)$$

This differential form describes the change in internal energy of the polymer network with a corresponding entropy change $dS$, volume change $dV$, or change in network length $dL$, and is a thermodynamic state function of $S$, $V$, and $L$.
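The excerpt stops at Eq. (1). As a hedged sketch of the standard next step (not quoted from the lecture notes), the force can be split into energetic and entropic parts by introducing the Helmholtz free energy, written here as $F = U - TS$; the symbol $F$ is an assumption introduced for this illustration.

$$dF = dU - T\,dS - S\,dT = -S\,dT - p\,dV + f\,dL$$

$$f = \left(\frac{\partial F}{\partial L}\right)_{T,V} = \left(\frac{\partial U}{\partial L}\right)_{T,V} - T\left(\frac{\partial S}{\partial L}\right)_{T,V}$$

The first term is the energetic contribution to the force and the second is the entropic contribution. For an ideal rubber the energetic term is taken to vanish, so the retractive force is purely entropic and proportional to $T$, consistent with the excerpt's statement that entropic elasticity of polymer chains is the origin of these properties.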

Mechanics of Elastomeric Molecular Composites

Mechanics of elastomeric molecular composites. Pierre Millereau (a), Etienne Ducrot (a), Jess M. Clough (b,c), Meredith E. Wiseman (d), Hugh R. Brown (e), Rint P. Sijbesma (b,c), and Costantino Creton (a,f).

(a) Laboratoire Sciences et Ingénierie de la Matière Molle, ESPCI Paris, PSL University, Sorbonne Université, CNRS, F-75005 Paris, France; (b) Laboratory of Macromolecular and Organic Chemistry, Eindhoven University of Technology, 5600 MB Eindhoven, The Netherlands; (c) Institute for Complex Molecular Systems, Eindhoven University of Technology, 5600 MB Eindhoven, The Netherlands; (d) DSM Ahead, Royal DSM, 6167 RD Geleen, The Netherlands; (e) Australian Institute of Innovative Materials, University of Wollongong Innovation Campus, North Wollongong, NSW 2522, Australia; and (f) Global Station for Soft Matter, Global Institution for Collaborative Research and Education, Hokkaido University, Sapporo 001-0021, Japan.

Edited by David A. Weitz, Harvard University, Cambridge, MA, and approved July 30, 2018 (received for review May 5, 2018).

A classic paradigm of soft and extensible polymer materials is the difficulty of combining reversible elasticity with high fracture toughness, in particular for moduli above 1 MPa. Our recent discovery of multiple network acrylic elastomers opened a pathway to obtain precisely such a combination.

[...] classical laminated or fabric composites made by imbibing stiff carbon or aramid fiber fabrics with a polymerizable epoxy resin (20). In the macroscopic composite, elastic properties are mainly controlled by the fibers, whereas in the multiple network elas-

Thermal and Mechanical Behavior of Rubber Systems

University of Massachusetts Amherst, ScholarWorks@UMass Amherst. Doctoral Dissertations 1896 - February 2014. 1-1-1997. Thermal and mechanical behavior of rubber systems. David James Macon, University of Massachusetts Amherst.

Recommended Citation: Macon, David James, "Thermal and mechanical behavior of rubber systems." (1997). Doctoral Dissertations 1896 - February 2014. 956. https://scholarworks.umass.edu/dissertations_1/956

THERMAL AND MECHANICAL BEHAVIOR OF RUBBER SYSTEMS. A Dissertation Presented by DAVID JAMES MACON. Submitted to the Graduate School of the University of Massachusetts Amherst in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY, February 1997, Department of Polymer Science and Engineering. © Copyright by David James Macon 1997. All Rights Reserved.

Approved as to style and content by: Richard J. Farris, Chair; James A. Donovan, Member; William J. MacKnight, Member; Richard J. Farris, Department Head, Polymer Science and Engineering.

ACKNOWLEDGMENTS

I have heard it said that graduate school is an exotic form of punishment. Whoever said this was obviously not in the Farris research group. I am much indebted to Dr. Farris for his guidance during my stay at the University of Massachusetts. I appreciate the freedom he gave me to conduct my research, and the support he gave me when I was struggling.