Augmentation in the Wild: User Centered Development and Evaluation of Augmented Reality Applications

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


MUSIC NOTES: Exploring Music Listening Data As a Visual Representation of Self

MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self. Chad Philip Hall. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Design, University of Washington, 2016. Committee: Kristine Matthews, Karen Cheng, Linda Norlen. Program authorized to offer degree: Art. © Copyright 2016 Chad Philip Hall. Abstract. Co-Chairs of the Supervisory Committee: Kristine Matthews, Associate Professor + Chair, Division of Design, Visual Communication Design, School of Art + Art History + Design; Karen Cheng, Professor, Division of Design, Visual Communication Design, School of Art + Art History + Design. Shelves of vinyl records and cassette tapes spark thoughts and memories at a quick glance. In the shift to digital formats, we lost physical artifacts but gained data as a rich, but often hidden artifact of our music listening. This project tracked and visualized the music listening habits of eight people over 30 days to explore how this data can serve as a visual representation of self and present new opportunities for reflection.

Daniel Gilbert & Anton Kristiansson

Issue 10, 2009. Sweden's largest music magazine. Daniel Gilbert & Anton Kristiansson: the old and the new, the same Gothenburg. Joel Alme, Pascal, Fuck Buttons, Best music of 2009, Bibio, Nile, Dälek, Up North, Tildeh Hjelm, This Is Head, Riddarna. Contents: Albums of the year; Songs of the year; Of Auto-Tune you must tell; The record label that became a fanzine; Blue-and-yellow gold; Riddarna; This Is Head; Tildeh Hjelm. Editorial: Is credibility on its way out? I must admit that my faith has wavered lately. A faith that tells me that credibility in music is as important as it is in life. Is that really so, or am I just a dreamer out of touch with reality? These thoughts about the wavering pursuit of credibility came to me on a bike ride to the office. I was thinking about hard rockers who sing about Satan, death, war and other horrors. Can it really be true that ... badly of Henrik Berggren if he had lived a glossy jet-set life. Practicing what you preach has its point. In my opinion. In the hip-hop world, on the other hand, credibility has been essential from day one. There you are expected to rap about what you have lived through. Having dealt drugs with one hand on the pistol grip is standard. The bullet holes should be there as proof, so to speak. But it is easy to harden into one form and not want to develop.

TRONDHEIM, MARINEN 21.-22. AUGUST Årets Nykommer 2008 Norsk Rockforbund

Festival newspaper. Trondheim, Marinen, 21-22 August. Newcomer of the Year 2008, Norsk Rockforbund. 24 bands on 3 stages in 2 days. Think twice before you make other plans. RÖYKSOPP, MOTORPSYCHO, ANE BRUN, ULVER, THÅSTRÖM (SE), CALEXICO (US), LATE OF THE PIER (UK), PRIMAL SCREAM (UK), GANG OF FOUR (UK), JAGA JAZZIST, RUPHUS, SKAMBANKT, HARRYS GYM, BLACK DEBBATH, SLAGSMÅLSKLUBBEN (SE), FILTHY DUKES (UK), MARINA AND THE DIAMONDS (UK), I WAS A KING, KIM HIORTHØY, STINA STJERN, 22, AMISH 82, THE SCHOOL, FJORDEN BABY! Festival info (map legend): blue-black is activities and food; blue is stages; green is bars and drink tokens; red is toilets; orange is the entrance; purple is the cinema. Pstereo again? In its first three years, Pstereo has been on a steep upward curve. The festival is here to stay. Three times ... Good to know: Marinen is Trondheim's most idyllic outdoor recreation area. It lies right on the banks of the Nidelva river, with Nidaros Cathedral as its nearest neighbour. When you enter the festival grounds, we ask you to respect the dignity of the area. We ask the audience to be careful near the riverbank and the edge by the cannon emplacement. If the guards ask you to move away from the edge or the riverbank, it would be great if you listened. Nobody wants injuries during the festival. Festival smarts: We could not be bothered to write our own rules for festival conduct, so we have borrowed the ones already written down by Norsk Rockforbund. They can also be summed up like this: you shall not bother others, you shall be fair and kind, and otherwise you may do as you please.

Estimation of Key in Digital Music Recordings, Msc Computer Science Project Report

Estimation of key in digital music recordings. MSc Computer Science Project Report. This report is substantially the result of my own work, expressed in my own words, except where it is explicitly indicated in the text. I give my permission for it to be submitted to the JISC Plagiarism Detection Service. The report may be freely copied and distributed provided the source is explicitly acknowledged. Ibrahim Sha'ath, Department of Computer Science and Information Systems, Birkbeck College, University of London. Abstract: The goal of this work is to develop software that can analyse a digital recording of music and estimate the key of the recorded piece, in order to provide, at a glance, some information on its tonal compatibility with other recordings. Section 1 describes the motivation for automated key classification to support disc jockeys with a computer-enabled workflow. It presents some basic music theory to clarify the concepts and terms underpinning the project. The scope is established with a statement of requirements for the software. Section 2 describes the project's theoretical approach, comparing it to previous work on the problem. It discusses the algorithms employed, and describes a novel method of translating the output of a Fast Fourier Transform into a musical analysis. Section 3 describes the implementation from a software engineering perspective. It outlines the principles adhered to during development and describes the design of the user interface and classes. The testing strategy is presented. Section 4 describes the experiments conducted to test and optimise the accuracy of the software. It compares the performance of the software to existing products.

Billboard Magazine

Pop's princess takes country's newbie under her wing as part of this season's live music mash-up, a girl-powered punch complete with talk of, yep, who gets to wear the transparent skirt. May 31, 2014, billboard.com. UK £5.50. SAMSUNG: THE NEXT BIG THING IN MUSIC. 200+ Ad Free* Customized Stations. Radio For You. Powered by Slacker. With more than 200 stations and a catalog of over 13 million songs, listen to your favorite songs with no interruption from ads. Get it on Google Play. *For a limited time. 2014 Samsung Telecommunications America, LLC. Samsung and Milk Music are both trademarks of Samsung Electronics Co. Ltd. Appearance of device may vary. Device screen images simulated. Other company names, product names and marks mentioned herein are the property of their respective owners and may be trademarks or registered trademarks. Contents. ON THE COVER: Katy Perry and Kacey Musgraves photographed by Lauren Dukoff on April 17 at Sony Pictures in Culver City. For an exclusive interview and behind-the-scenes video, go to Billboard.com or Billboard.com/ipad. THIS WEEK: Special Double Issue, Volume 126 / No. 18. TO OUR READERS: Billboard will publish its next issue on June 7. Please check Billboard.biz for 24-7 business coverage. Kesha photographed by Austin Hargrave on May 18 at the MGM Grand Garden Arena in Las Vegas. FEATURES: Kacey Musgraves and Katy Perry: What's expected when a country ingenue and a pop superstar meet up on tour? Fun and profits. TOPLINE: Can anything stop the rise of Spotify? (Yes, actually.) Chart Movers: Latin's pop trouble, Disclosure getting ready for ... MUSIC: Robyn and Royksopp, Christina Perri, Deniro Farrar. Reviews: Coldplay, John Fullbright, Quirke.

Keyfinder V2 Dataset © Ibrahim Sha'ath 2014

KeyFinder v2 dataset © Ibrahim Sha'ath 2014 ARTIST TITLE KEY 10CC Dreadlock Holiday Gm 187 Lockdown Gunman (Natural Born Chillers Remix) Ebm 4hero Star Chasers (Masters At Work Main Mix) Am 50 Cent In Da Club C#m 808 State Pacifc State Ebm A Guy Called Gerald Voodoo Ray A A Tribe Called Quest Can I Kick It? (Boilerhouse Mix) Em A Tribe Called Quest Find A Way Ebm A Tribe Called Quest Vivrant Thing (feat Violator) Bbm A-Skillz Drop The Funk Em Aaliyah Are You That Somebody? Dm AC-DC Back In Black Em AC-DC You Shook Me All Night Long G Acid District Keep On Searching G#m Adam F Aromatherapy (Edit) Am Adam F Circles (Album Edit) Dm Adam F Dirty Harry's Revenge (feat Beenie Man and Siamese) G#m Adam F Metropolis Fm Adam F Smash Sumthin (feat Redman) Cm Adam F Stand Clear (feat M.O.P.) (Origin Unknown Remix) F#m Adam F Where's My (feat Lil' Mo) Fm Adam K & Soha Question Gm Adamski Killer (feat Seal) Bbm Adana Twins Anymore (Manik Remix) Ebm Afrika Bambaataa Mind Control (The Danmass Instrumental) Ebm Agent Sumo Mayhem Fm Air Sexy Boy Dm Aktarv8r Afterwrath Am Aktarv8r Shinkirou A Alexis Raphael Kitchens & Bedrooms Fm Algol Callisto's Warm Oceans Am Alison Limerick Where Love Lives (Original 7" Radio Edit) Em Alix Perez Forsaken (feat Peven Everett and Spectrasoul) Cm Alphabet Pony Atoms Em Alphabet Pony Clones Am Alter Ego Rocker Am Althea & Donna Uptown Top Ranking (2001 digital remaster) Am Alton Ellis Black Man's Pride F Aluna George Your Drums, Your Love (Duke Dumont Remix) G# Amerie 1 Thing Ebm Amira My Desire (Dreem Teem Remix) Fm Amirali -

Eple Royksopp Download

Eple royksopp download Eple Royksopp. MB · Eple. Artist: Royksopp. MB · Eple. Artist: Royksopp. MB. Watch the video, get the download or listen to Röyksopp – Eple for free. Eple appears on the album Eple. "Eple" (Norwegian for "Apple") is an instrumental. Röyksopp Eple () - file type: mp3 - download - bitrate: kbps. Literally every time I hear this song I somehow imagine this animated pixilation scene of a really warm sunny. Download Royksopp Eple free midi and other Royksopp free midi. Stream Royksopp "Eple" (Shakedown Remix) by SHAKEDOWN from desktop or your mobile device. Buy Eple: Read 2 Digital Music Reviews - Röyksopp 8 · Stream or buy Downloaded it for a film project after hearing it on a online radio station. "Eple" by Röyksopp sampled Bob James's "You're as Right as Rain". Listen to both songs on 55 Votes. Röyksopp's Eple Download this Track. Buy on Vinyl/. Download Royksopp - Eple midi. Best midi files in the world. Over + MIDI files. Eple Royksopp kbps Free Mp3 Music Download. Royksopp Eple Mp3 Download Mp3freex Free Mp3 Downloads. Royksopp Eple Remix Mp3 Download. Eple Royksopp kbps Free Mp3 Music Download. Royksopp Eple Mp3 Download Eple Original Edit mp3 Eple EP of Royksopp. Download Royksopp eple. Röyksopp Melody A.M. The finest music to have come out of Norway since well, ever Worldwide smash hit Eple is irresistibly hypnotic electro; Poor Leno is Further reading: Download: iTunes HKB Finn Vitalistics A. Switch browsers or download Spotify for your desktop. Eple. By Röyksopp. • 1 song, Listen to Röyksopp in full in the Spotify app. Play on Spotify. -

Corpus Antville

Epistemological Corpus of the Research: music videos referenced by the Antville community between June 2006 and June 2011 on the blog of the same name, www.videos.antville.org

Date, post title
01‐06‐2006 videos at multiple speeds?
01‐06‐2006 music videos based on cars?
01‐06‐2006 can anyone tell me videos with machine guns?
01‐06‐2006 Muse "Supermassive Black Hole" (Dir: Floria Sigismondi)
01‐06‐2006 Skye ‐ "What's Wrong With Me"
01‐06‐2006 Madison "Radiate". Directed by Erin Levendorf
01‐06‐2006 PANASONIC “SHARE THE AIR” VIDEO CONTEST
01‐06‐2006 Number of times 'panasonic' mentioned in last post
01‐06‐2006 Please Panasonic
01‐06‐2006 Paul Oakenfold "FASTER KILL FASTER PUSSYCAT" : Dir. Jake Nava
01‐06‐2006 Presets "Down Down Down" : Dir. Presets + Kim Greenway
01‐06‐2006 Lansing‐Dreiden "A Line You Can Cross" : Dir.
01‐06‐2006 SnowPatrol "You're All I Have" : Dir.
01‐06‐2006 Wolfmother "White Unicorn" : Dir. Kris Moyes?
01‐06‐2006 Fiona Apple ‐ Across The Universe ‐ Director ‐ Paul Thomas Anderson.
02‐06‐2006 Ayumi Hamasaki ‐ Real Me ‐ Director: Ukon Kamimura
02‐06‐2006 They Might Be Giants ‐ "Dallas" d. Asterisk
02‐06‐2006 Bersuit Vergarabat "Sencillamente"
02‐06‐2006 Lily Allen ‐ LDN (epk promo) directed by Ben & Greg
02‐06‐2006 Jamie T 'Sheila' directed by Nima Nourizadeh
02‐06‐2006 Farben Lehre ''Terrorystan'', Director: Marek Gluziński
02‐06‐2006 Chris And The Other Girls ‐ Lullaby (director: Christian Pitschl, camera: Federico Salvalaio)
02‐06‐2006 Megan Mullins ''Ain't What It Used To Be''
02‐06‐2006 Mr.

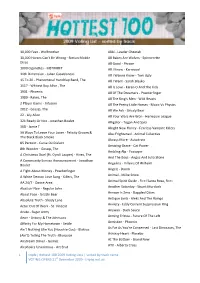

Hottest 100 List

10,000 Feet - Wolfmother Alibi - Leader Cheetah 10,000 Horses Can't Be Wrong - Simian Mobile All Babes Are Wolves - Spinnerette Disco All Good - Phrase 1000 Cigarettes - MSTRKRFT All I Know - Karnivool 11th Dimension - Julian Casablancas All I Wanna Know - Tom Ugly 15 To 20 - Phenomenal Handclap Band, The All I Want - Sarah Blasko 1517 - Whitest Boy Alive , The All Is Love - Karen O And The Kids 1901 - Phoenix All Of The Dreamers - Powderfinger 1989 - Rakes, The All The King's Men - Wild Beasts 2 Player Game - Infusion All The Pretty Little Horses - Music Vs Physics 2012 - Gossip, The All We Ask - Grizzly Bear 22 - Lily Allen All Your Wars Are Won - Harlequin League 321 Ready Or Not - Jonathan Boulet Alligator - Tegan And Sara 368 - Jamie T Alright Now Honey - Fearless Vampire Killers 39 Ways To Leave Your Lover - Felicity Groom & Also Frightened - Animal Collective The Black Black Smoke Always More - Autokratz 85 Percent - Curse Ov Dialect Amazing Grace - Cat Power 8th Wonder - Gossip, The Ambling Alp - Yeasayer A Christmas Duel (Ft. Cyndi Lauper) - Hives, The And The Boys - Angus And Julia Stone A Community Service Announcement - Jonathan Angelina - Villains Of Wilhelm Boulet Angelz - Doom A Fight About Money - Powderfinger Animal - Miike Snow A White Demon Love Song - Killers, The AA 24/7 - Dance Area Animal Spirit Guide - Fire! Santa Rosa, Fire! Another Saturday - Stuart Murdoch Abattoir Noir - Regular John Answer Is Zero - Dappled Cities About Face - Grizzly Bear Absolute Truth - Shady Lane Antique Limb - Aleks And The Ramps Anxiety -

UC Berkeley UC Berkeley Electronic Theses and Dissertations

UC Berkeley, UC Berkeley Electronic Theses and Dissertations. Title: Anima Automata: On the Olympian Art of Song. Permalink: https://escholarship.org/uc/item/2f4304xv. Author: Porzak, Simon Lucas. Publication Date: 2014. Peer reviewed | Thesis/dissertation. eScholarship.org, powered by the California Digital Library, University of California. Anima Automata: On the Olympian Art of Song, by Simon Lucas Porzak. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Rhetoric in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor Daniel Boyarin, Co-Chair; Professor Barbara Spackman, Co-Chair; Professor Pheng Cheah; Professor Susan Schweik. Spring 2014. © 2014, Simon Lucas Porzak. Abstract: Dominant explanations of the power of song, in musicology, sound studies, media theory, and our cultural mythologies about divas and pop singers, follow a Promethean trajectory: a singer wagers her originary humanity through an encounter with the machinery of music (vocal training, recording media, etc.); yet her song will finally carry an even more profound, immediate human meaning. Technology forms an accidental detour leading from humanity to more humanity. In an alternative, “Olympian” practice of singing, humanity and machinery constantly and productively contaminate each other. My readings, centering around the singing doll Olympia from Jacques Offenbach’s 1881 opera Les Contes d’Hoffmann, illuminate the affective and ethical consequences of the confrontation between Promethean and Olympian song.

Robyn Do It Again Ep

Robyn do it again ep click here to download Do It Again is an extended play (EP) by Norwegian electronic music duo Röyksopp and Swedish singer-songwriter Robyn. It was released on 23 May by. do it again EP by wait what, released 06 October 1. a monumental threat [ jay-z vs robyn & röyksopp] 2. say it (hello) [jay-z vs robyn & röyksopp] 3. do it. Röyksopp & Robyn Do It Again. By Robyn. Listen to Röyksopp's top 10 Robyn tracks, and Robyn's top 10 Röyksopp tracks! The collaborative album 'Do It Again' . This doesnt sound like Röyksopp featuring Robyn, it's just something else, the word collaboration has never before been more justified in the. "Do it Again" is the pulsing, laser beam centerpiece and pinnacle of the brief release. “Do It Again” and “Say It” are dancefloor polishers, with the latter hinting at (A remix collection with versions of “Do It Again” was released shortly after the EP.). Robyn and Röyksopp's collaborative mini-album has the epic sweep of a Though technically an EP, the minute Do It Again has the epic. Robyn and Röyksopp Announce New Mini-Album, Do It Again, Share This summer, Robyn and her longtime collaborators Röyksopp will embark on the " Röyksopp and Robyn Do It Again Tour ". Do It Again EP. Royksopp & Robyn - Do It Again - www.doorway.ru Music. The running time for the ep is equivalent to a full on album, and hopefully their next venture is just that. Röyksopp and Robyn's previous team-up resulted in the incredible "The Girl and the Robot," so it was kind of a given that their collaborativ. -

Funkydiva Platliste 12244 Nummre

Artist Album Title 911 Greatest Hits 90's 5 A little bit more 911 Now, Vol. 42 A Little Bit More 911 Mr Music Hits 1996-12 Don´t Make Me Wait 911 Now, Vol. 35 Don't Make Me Wait 911 Now, Vol. 41 More Than a Women 911 Now, Vol. 38 Party People... Friday Night 911 Now, Vol. 43 Private Number 911 Now, Vol. 37 The Journey 999 Greatest Hits 70's 5 Homicide 3000 Hits for Kids 11 (Danish_Edition) osc *NSYNC Now, Vol. 46 Bye Bye Bye I Don't Wanna Spend One More *NSYNC Now Christmas 2 Christmas Without You *NSYNC Now, Vol. 47 It's Gonna Be Me You Don't Have to Be Alone (On *NSYNC Now Christmas Christmas) 10 CC For Fuld Musik 3 Good Morning Judge 10 CC For fuld musik 1 I'm not in love 10 CC For Fuld Musik 4 The Things We Do For Love 10cc Greatest Hits 70's 2 Donna 10CC Absolute Hits 70's I'm Not In Love 10cc Greatest Hits 70's 3 Rubber Bullets 10cc Greatest Hits 70's 4 The Wall Street Shuffle 16 Bit Lolitas Feat. Jennifer Horne Dance Chart Vol. 20 Feel I'm Falling 2 In A Room Greatest Hits 90's 2 Wiggle It Get Ready for No Limits 2 unlimited Turn up the Bass vol. 5 (Murphy's Megamix Part 2) 2 Unlimited Now The Very Best of Dance No Limit 2-4 Grooves Absolute Dance Move Your Body! LikeWinter the Way I Do(Special Radio 2Pac feat.