Estimation of Key in Digital Music Recordings, MSc Computer Science Project Report

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


User Manual HOFA CD-Burn.DDP.Master (App) HOFA CD-Burn.DDP.Master PRO (App) V2.5.4 Content Introduction

User Manual HOFA CD-Burn.DDP.Master (App), HOFA CD-Burn.DDP.Master PRO (App), V2.5.4. Content: Introduction; Quick Start; Installation; Activation; Evaluation version; Project window; Audio file import and formats; The Audio Editor; Audio Editor Tracks; Audio Editor Mode; Mode: Insert; Mode: Slide; Audio Clips; Zoom; Using Plugins -

Songs by Artist

Reil Entertainment Songs by Artist Karaoke by Artist Title Title &, Caitlin Will 12 Gauge Address In The Stars Dunkie Butt 10 Cc 12 Stones Donna We Are One Dreadlock Holiday 19 Somethin' Im Mandy Fly Me Mark Wills I'm Not In Love 1910 Fruitgum Co Rubber Bullets 1, 2, 3 Redlight Things We Do For Love Simon Says Wall Street Shuffle 1910 Fruitgum Co. 10 Years 1,2,3 Redlight Through The Iris Simon Says Wasteland 1975 10, 000 Maniacs Chocolate These Are The Days City 10,000 Maniacs Love Me Because Of The Night Sex... Because The Night Sex.... More Than This Sound These Are The Days The Sound Trouble Me UGH! 10,000 Maniacs Wvocal 1975, The Because The Night Chocolate 100 Proof Aged In Soul Sex Somebody's Been Sleeping The City 10Cc 1Barenaked Ladies Dreadlock Holiday Be My Yoko Ono I'm Not In Love Brian Wilson (2000 Version) We Do For Love Call And Answer 11) Enid OS Get In Line (Duet Version) 112 Get In Line (Solo Version) Come See Me It's All Been Done Cupid Jane Dance With Me Never Is Enough It's Over Now Old Apartment, The Only You One Week Peaches & Cream Shoe Box Peaches And Cream Straw Hat U Already Know What A Good Boy Song List Generator® Printed 11/21/2017 Page 1 of 486 Licensed to Greg Reil Reil Entertainment Songs by Artist Karaoke by Artist Title Title 1Barenaked Ladies 20 Fingers When I Fall Short Dick Man 1Beatles, The 2AM Club Come Together Not Your Boyfriend Day Tripper 2Pac Good Day Sunshine California Love (Original Version) Help! 3 Degrees I Saw Her Standing There When Will I See You Again Love Me Do Woman In Love Nowhere Man 3 Dog Night P.S. -

Aug2021 CBCS Bsc Computerscience

Choice Based Credit System 140 Credits for 3-Year UG Honours MAKAUT Framework w.e.f. Academic Year: 2021 – 2022 MODEL CURRICULUM for B. Sc.- Computer Science (Hons.) CBCS – MAKAUT UG Degree: B. Sc. - Computer Science (Hons) 140 Credit Subject Semester Semester Semester Semester II Semester V Semester VI Type I III IV CC C1, C2 C3, C4 C5, C6,C7 C8,C9,C10 C11,C12 C13,C14 DSE DSE1, DSE2 DSE3, DSE4 GE GE1 GE2 GE3 GE4 Capstone Project Evaluation AECC AECC 1 AECC 2 SEC SEC 1 SEC 2 4 (20) 4 (20) 5 (26) 5(26) 4 (24) 4 (24) Teaching-Learning-Assessment as per Bloom’s Taxonomy fitment Levels L1: L2: L3: L4: L5: L6: REMEMBER UNDERSTAND APPLY ANALYZE EVALUATE CREATE Courses – T&L and Assessment Levels SEM 1 SEM 2 SEM 3 SEM 4 SEM 5 SEM 6 MOOCs BEGINNER BASIC INTERMEDIATE ADVANCED CC: Core Course AECC: Ability Enhancement Compulsory Courses GE: Generic Elective Course DSE: Discipline Specific Elective Course SEC: Skill Enhancement Course B. Sc. - Computer Science (Hons.) Curriculum Structure 1st Semester Credit Course Credit Mode of Delivery Subject Type Course Name Distribution Proposed Code Points MOOCs L P T Offline Online Blended Programming CC1-T CS 101 Fundamental – 4 4 yes using C Language Programming using CC1-P CS 191 2 2 yes CC C CC2-T CS 102 Digital Electronics 4 4 yes Digital Electronics CC2-P CS 192 2 2 yes Lab Any one from GE1 6 yes GE Basket – 1 to 5 AEC Soft Skills (English AECC 1 CS(HU-101) 2 2 yes C Communication) Semester Credits 20 2nd Semester Credit Course Credit Mode of Delivery Subject Type Course Name Distribution Proposed Code Points MOOCs L P T Offline Online Blended CC3-T CS 201 Data Structures 4 4 yes CC Data Structures CC3-P CS 291 2 2 yes Lab Computer CC4-T CS 202 Organization 4 4 yes Computer CC4-P CS 292 2 2 yes Organization Lab. -

The Kid3 Handbook

The Kid3 Handbook. Software development: Urs Fleisch. Contents: 1 Introduction; 2 Using Kid3; 2.1 Kid3 features; 2.2 Example Usage; 3 Command Reference; 3.1 The GUI Elements; 3.1.1 File List; 3.1.2 Edit Playlist; 3.1.3 Folder List; 3.1.4 File; 3.1.5 Tag 1; 3.1.6 Tag 2; 3.1.7 Tag 3; 3.1.8 Frame List; 3.1.9 Synchronized Lyrics and Event Timing Codes; 3.2 The File Menu; 3.3 The Edit Menu; 3.4 The Tools Menu; 3.5 The Settings Menu; 3.6 The Help Menu; 4 kid3-cli; 4.1 Commands; 4.1.1 Help; 4.1.2 Timeout; 4.1.3 Quit application; 4.1.4 Change folder; 4.1.5 Print the filename of the current folder; 4.1.6 Folder list; 4.1.7 Save the changed files; 4.1.8 Select file; 4.1.9 Select tag; 4.1.10 Get tag frame; 4.1.11 Set tag frame; 4.1.12 Revert; 4.1.13 Import from file; 4.1.14 Automatic import; 4.1.15 Download album cover artwork; 4.1.16 Export to file; 4.1.17 Create playlist; 4.1.18 Apply filename format; 4.1.19 Apply tag format -

JSP with Javax.Script Languages

Seminar paper. BSF4ooRexx: JSP with javax.script Languages. Author: Nora Lengyel. Matriculation no: 1552636. Class Title: Projektseminar aus Wirtschaftsinformatik (Schiseminar). Instructor: ao.Univ.Prof. Mag. Dr. Rony G. Flatscher. Term: Winter Term 2019/2020. Vienna University of Economics and Business. Content: 1. Introduction; 2. Tomcat; 2.1 Introduction to Tomcat; 2.2 The Installation of Tomcat; 2.2.1 Environment Variables; 2.2.2 Tomcat Web Application Manager; 3. Cookie; 3.1 Introduction to Cookies; 3.2 Functioning of a Cookie -

Installation Manual

CX-20 Installation manual ENABLING BRIGHT OUTCOMES Barco NV Beneluxpark 21, 8500 Kortrijk, Belgium www.barco.com/en/support www.barco.com Registered office: Barco NV President Kennedypark 35, 8500 Kortrijk, Belgium www.barco.com/en/support www.barco.com Copyright © All rights reserved. No part of this document may be copied, reproduced or translated. It shall not otherwise be recorded, transmitted or stored in a retrieval system without the prior written consent of Barco. Trademarks Brand and product names mentioned in this manual may be trademarks, registered trademarks or copyrights of their respective holders. All brand and product names mentioned in this manual serve as comments or examples and are not to be understood as advertising for the products or their manufacturers. Trademarks USB Type-C™ and USB-C™ are trademarks of USB Implementers Forum. HDMI Trademark Notice The terms HDMI, HDMI High Definition Multimedia Interface, and the HDMI Logo are trademarks or registered trademarks of HDMI Licensing Administrator, Inc. Product Security Incident Response As a global technology leader, Barco is committed to delivering secure solutions and services to our customers, while protecting Barco’s intellectual property. When product security concerns are received, the product security incident response process will be triggered immediately. To address specific security concerns or to report security issues with Barco products, please inform us via contact details mentioned on https://www.barco.com/psirt. To protect our customers, Barco does not publicly disclose or confirm security vulnerabilities until Barco has conducted an analysis of the product and issued fixes and/or mitigations. Patent protection Please refer to www.barco.com/about-barco/legal/patents Guarantee and Compensation Barco provides a guarantee relating to perfect manufacturing as part of the legally stipulated terms of guarantee. -

MUSIC NOTES: Exploring Music Listening Data As a Visual Representation of Self

MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall A thesis submitted in partial fulfillment of the requirements for the degree of: Master of Design University of Washington 2016 Committee: Kristine Matthews Karen Cheng Linda Norlen Program Authorized to Offer Degree: Art ©Copyright 2016 Chad Philip Hall University of Washington Abstract MUSIC NOTES: Exploring Music Listening Data as a Visual Representation of Self Chad Philip Hall Co-Chairs of the Supervisory Committee: Kristine Matthews, Associate Professor + Chair Division of Design, Visual Communication Design School of Art + Art History + Design Karen Cheng, Professor Division of Design, Visual Communication Design School of Art + Art History + Design Shelves of vinyl records and cassette tapes spark thoughts and memories at a quick glance. In the shift to digital formats, we lost physical artifacts but gained data as a rich, but often hidden artifact of our music listening. This project tracked and visualized the music listening habits of eight people over 30 days to explore how this data can serve as a visual representation of self and present new opportunities for reflection.

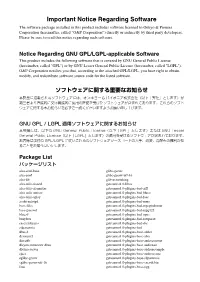

Important Notice Regarding Software

Important Notice Regarding Software The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter, called “O&P Corporation”) directly or indirectly by third party developers. Please be sure to read this notice regarding such software. Notice Regarding GNU GPL/LGPL-applicable Software This product includes the following software that is covered by GNU General Public License (hereinafter, called "GPL") or by GNU Lesser General Public License (hereinafter, called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have right to obtain, modify, and redistribute software source code for the listed software. Package List alsa-conf-base glibc-gconv alsa-conf glibc-gconv-utf-16 alsa-lib glib-networking alsa-utils-alsactl gstreamer1.0-libav alsa-utils-alsamixer gstreamer1.0-plugins-bad-aiff alsa-utils-amixer gstreamer1.0-plugins-bad-bluez alsa-utils-aplay gstreamer1.0-plugins-bad-faac avahi-autoipd gstreamer1.0-plugins-bad-mms base-files gstreamer1.0-plugins-bad-mpegtsdemux base-passwd gstreamer1.0-plugins-bad-mpg123 bluez5 gstreamer1.0-plugins-bad-opus busybox gstreamer1.0-plugins-bad-rawparse -

Daniel Gilbert & Anton Kristiansson

Issue 10 • 2009. Sweden's largest music magazine. Daniel Gilbert & Anton Kristiansson: the old and the new, the same Göteborg. Joel Alme, Pascal, Fuck Buttons, Best music of 2009, Bibio, Nile, Dälek, Up North, Tildeh Hjelm, This Is Head, Riddarna. Contents: Album of the year; Songs of the year; Tell us about the autotune; The record label that became a fanzine; Blue and yellow gold; Riddarna; This Is Head; Tildeh Hjelm. Editorial: Is credibility on its way out? I have to admit that my faith has wavered lately. A faith that tells me that credibility in music is as important as in life. Is that really so, or am I just an unworldly dreamer? The thoughts about this wavering pursuit of credibility came to me on a bike ride to the office. I thought about hard rockers who sing about Satan, death, war and other terrible things. Can it really be true that ... ill of Henrik Berggren if he had lived a glossy, high-rolling life. Living as you preach has its point. I think. In the hip-hop world, on the other hand, credibility has been the be-all and end-all from day one. There you are expected to rap about what you have been through. Having dealt drugs with one hand on the pistol grip is standard. The bullet holes should be there as proof, so to speak. But it is easy to stiffen into a form and not want to develop. -

TRONDHEIM, MARINEN 21.-22. AUGUST Årets Nykommer 2008 Norsk Rockforbund

Festival newspaper. TRONDHEIM, MARINEN, 21-22 AUGUST. Newcomer of the Year 2008, Norsk Rockforbund. 24 bands on 3 stages in 2 days. Think twice before you come up with anything else. RÖYKSOPP, MOTORPSYCHO, ANE BRUN, ULVER, THÅSTRÖM (SE), CALEXICO (US), LATE OF THE PIER (UK), PRIMAL SCREAM (UK), GANG OF FOUR (UK), JAGA JAZZIST, RUPHUS, SKAMBANKT, HARRYS GYM, BLACK DEBBATH, SLAGSMÅLSKLUBBEN (SE), FILTHY DUKES (UK), MARINA AND THE DIAMONDS (UK), I WAS A KING, KIM HIORTHØY, STINA STJERN, 22, AMISH 82, THE SCHOOL, FJORDEN BABY! Festival info: blue-black is activities and food; blue is stages; green is serving and tokens; red is toilets; orange is the entrance; purple is the cinema. Pstereo again? In its first three years, Pstereo has seen a steeply rising form curve. So the festival has come to stay. Three times ... Good to know: Marinen. Marinen is Trondheim's most idyllic outdoor recreation area. The area lies right down by the banks of the Nidelven, with Nidarosdomen as its nearest neighbour. When you enter the festival grounds, we want you to show consideration for the dignity of the area. We ask the audience to be careful near the riverbank and the edge by the cannon emplacement. If the guards ask you to move away from the edge or the riverbank, it would be cool if you listened. Nobody wants injuries during the festival. Festival smarts: We could not be bothered to write our own rules for festival sense, so we have pinched the ones already written down by Norsk Rockforbund. They can also be summed up like this: you should not bother others, you should be nice and kind, and otherwise you can do what you like.

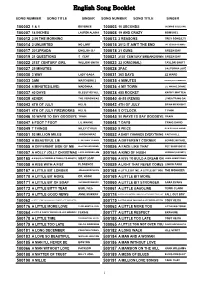

English Song Booklet

English Song Booklet SONG NUMBER SONG TITLE SINGER SONG NUMBER SONG TITLE SINGER 100002 1 & 1 BEYONCE 100003 10 SECONDS JAZMINE SULLIVAN 100007 18 INCHES LAUREN ALAINA 100008 19 AND CRAZY BOMSHEL 100012 2 IN THE MORNING 100013 2 REASONS TREY SONGZ,TI 100014 2 UNLIMITED NO LIMIT 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ 100017 2012PRADA ENGLISH DJ 100018 21 GUNS GREEN DAY 100019 21 QUESTIONS 50 CENT 100021 21ST CENTURY BREAKDOWN GREEN DAY 100022 21ST CENTURY GIRL WILLOW SMITH 100023 22 (ORIGINAL) TAYLOR SWIFT 100027 25 MINUTES 100028 2PAC CALIFORNIA LOVE 100030 3 WAY LADY GAGA 100031 365 DAYS ZZ WARD 100033 3AM MATCHBOX 20 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE 100034 4 MINUTES(LIVE) MADONNA 100036 4 MY TOWN LIL WAYNE,DRAKE 100037 40 DAYS BLESSTHEFALL 100038 455 ROCKET KATHY MATTEA 100039 4EVER THE VERONICAS 100040 4H55 (REMIX) LYNDA TRANG DAI 100043 4TH OF JULY KELIS 100042 4TH OF JULY BRIAN MCKNIGHT 100041 4TH OF JULY FIREWORKS KELIS 100044 5 O'CLOCK T PAIN 100046 50 WAYS TO SAY GOODBYE TRAIN 100045 50 WAYS TO SAY GOODBYE TRAIN 100047 6 FOOT 7 FOOT LIL WAYNE 100048 7 DAYS CRAIG DAVID 100049 7 THINGS MILEY CYRUS 100050 9 PIECE RICK ROSS,LIL WAYNE 100051 93 MILLION MILES JASON MRAZ 100052 A BABY CHANGES EVERYTHING FAITH HILL 100053 A BEAUTIFUL LIE 30 SECONDS TO MARS 100054 A DIFFERENT CORNER GEORGE MICHAEL 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND 100056 A FACE LIKE THAT PET SHOP BOYS 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM 500164 A KIND OF HUSH HERMAN'S HERMITS 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG 100058 A KISS WITH A FIST FLORENCE 100059 A LIGHT THAT NEVER COMES LINKIN PARK 500167 A LITTLE BIT LONGER JONAS BROTHERS 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES 500170 A LITTLE BIT MORE DR. -

2 3 10000 Maniacs

A A 10000 Maniacs - Candy Everybody Wants 3 Doors Down - Here Without You AIN0101/8 4 Non Blondes - What’s Up DKK086/7 6 - Promise’ve MM6313/2 DKK082/4 3 Doors Down - Kryptonite AIN0103/11 4 P.M. - Sukiyaki DKK089/16 911 - All I Want Is You SF121/7 10cc - Donna SF090/15 3 Doors Down - Let Me Go NM2061/1 411 - Dumb EZH039/5 911 - How Do You Want Me To Love You 10cc - Dreadlock Holiday SF023/12 3 Doors Down - Loser CB40211/8 411 - Teardrops SF225/6 ET012/10 10cc - I’m Mandy SF079/3 3 Doors Down - Road I’m On SC8817/10 411 And Ghostface - On My Knees EZH035/5 911 - Little Bit More SF130/4 10cc - I’m Not In Love DKK082/14 3 Doors Down - So I Need You CB40211/10 42nd Stand - Lullabye Of Broadway LEGBR02/8 911 - More Than A Woman ET015/3 10cc - Rubber Bullets EK026/17 3 Doors Down - When I’m Gone AIN0064-2/5 4him - Basics Of Life CBSE5/-- 911 - Party People (Friday Night) SF118/9 10cc - Things We Do For Love SFMW832/11 3 Doors Down (Vocal) - Away From The Sun 4him - For Future Generations CBSE4/-- 911 - Private Number ET021/10 10cc - Wall Street Shuffle SFMW814/1 CB40340/3 4him - Psalm 112 PR3019/2 98 - Way You Want Me To SC8702/7 112 - Peaches And Cream SC8702/2 3 Doors Down (Vocal) - Be Like That CB40211/3 5 Seconds Of Summer - Amnesia MRH121/3 98 Degrees - Because Of You SF134/8 12 Gauge - Dunkie Butt SC8892/4 3 Doors Down (Vocal) - Behind Those Eyes 5 Seconds Of Summer - Don’t Stop SF340/17 98 Degrees - Can’t Get Enough PHM1310/1 THMR0507/5 12 Stones - Far Away THMR0411/16 5 Seconds Of Summer - Good Girls MRH123/5 98 Degrees