Reverse-Engineering Twitter's Content Removal

Recommended publications

-

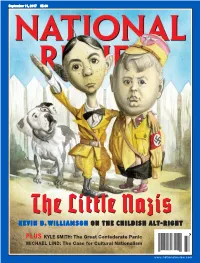

The Little Nazis: Kevin D. Williamson on the Childish Alt-Right

September 11, 2017 | Volume LXIX, No. 17 | $5.99 | www.nationalreview.com. The Little Nazis: Kevin D. Williamson on the childish alt-right. Plus Kyle Smith: The Great Confederate Panic; Michael Lind: The Case for Cultural Nationalism. The Reagan Ranch Center, 217 State Street, Santa Barbara, California 93101, 888-USA-1776. National Headquarters, 11480 Commerce Park Drive, Sixth Floor, Reston, Virginia 20191, 800-USA-1776. Contents. On the cover (Page 22): Everybody is ten feet tall on the Internet, and that is why the Internet is where the alt-right really lives, one big online group-therapy session masquerading as a political movement. Kevin D. Williamson. Cover: Roman Genn. Lucy Caldwell on Jeff Flake. The ‘N’ Word, p. 15. Articles: Divided They Stand (or Fall), by Ramesh Ponnuru. Anti-Trump Republicans are not facing their challenges. Books, Arts & Manners: The Death of Freud. E. Fuller Torrey reviews Freud: The Making of an Illusion, by Frederick Crews. Illuminations. Michael Brendan Dougherty reviews Why Buddhism Is True: The Science and Philosophy of Meditation and Enlightenment, by Robert Wright. Unjust Prosecution. David Bahnsen reviews The

Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany

University of Vermont, ScholarWorks @ UVM. UVM Honors College Senior Theses, Undergraduate Theses, 2018. Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany. William Peter Fitz, University of Vermont. Follow this and additional works at: https://scholarworks.uvm.edu/hcoltheses Recommended Citation: Fitz, William Peter, "Reactionary Postmodernism? Neoliberalism, Multiculturalism, the Internet, and the Ideology of the New Far Right in Germany" (2018). UVM Honors College Senior Theses. 275. https://scholarworks.uvm.edu/hcoltheses/275 This Honors College Thesis is brought to you for free and open access by the Undergraduate Theses at ScholarWorks @ UVM. It has been accepted for inclusion in UVM Honors College Senior Theses by an authorized administrator of ScholarWorks @ UVM. For more information, please contact [email protected]. A Thesis Presented by William Peter Fitz to The Faculty of the College of Arts and Sciences of The University of Vermont, In Partial Fulfilment of the Requirements For the Degree of Bachelor of Arts In European Studies with Honors. December 2018. Defense Date: December 4th, 2018. Thesis Committee: Alan E. Steinweis, Ph.D., Advisor; Susanna Schrafstetter, Ph.D., Chairperson; Adriana Borra, M.A. Table of Contents: Introduction; Chapter One: Neoliberalism and Xenophobia; Chapter Two: Multiculturalism and Cultural Identity; Chapter Three: The Philosophy of the New Right; Chapter Four: The Internet and Meme Warfare; Conclusion; Bibliography. “Perhaps one will view the rise of the Alternative for Germany in the foreseeable future as inevitable, as a portent for major changes, one that is as necessary as it was predictable.

New Hate and Old: The Changing Face of American White Supremacy

A report from the Center on Extremism, 09 18. New Hate and Old: The Changing Face of American White Supremacy. Our Mission: To stop the defamation of the Jewish people and to secure justice and fair treatment for all. About the Center on Extremism: The ADL Center on Extremism (COE) is one of the world’s foremost authorities on extremism, terrorism, anti-Semitism and all forms of hate. For decades, COE’s staff of seasoned investigators, analysts and researchers have tracked extremist activity and hate in the U.S. and abroad – online and on the ground. The staff, which represent a combined total of substantially more than 100 years of experience in this arena, routinely assist law enforcement with extremist-related investigations, provide tech companies with critical data and expertise, and respond to wide-ranging media requests. As ADL’s research and investigative arm, COE is a clearinghouse of real-time information about extremism and hate of all types. COE staff regularly serve as expert witnesses, provide congressional testimony and speak to national and international conference audiences about the threats posed by extremism and anti-Semitism. You can find the full complement of COE’s research and publications at ADL.org. ADL (Anti-Defamation League) fights anti-Semitism and promotes justice for all. Join ADL to give a voice to those without one and to protect our civil rights. Learn more: adl.org. Cover: White supremacists exchange insults with counter-protesters as they attempt to guard the entrance to Emancipation Park during the ‘Unite the Right’ rally August 12, 2017 in Charlottesville, Virginia.

Crusaders Past and Present: European Semiotics and the Radical Right

Crusaders Past and Present: European Semiotics and the Radical Right. Christian Maines. In a Tortoiseshell: In his Writing Seminar R3, Christian Maines puts the discourse we see today in the news regarding the Alt-right into historical context, letting his research guide his understanding of the group, rather than the other way around. His use of structuring elements (purposeful orienting, definitions of key terms, clear topic sentences, consistent tie back sentences) sets his argument up for success. Motivating his thesis from the beginning to the end, Christian is able to not only sustain his topic, but make an insightful contribution to our understanding of the Alt-right. Feature: Between Aug. 11th and 12th of 2017, crowds swarmed the streets of Charlottesville wearing plate armor, carrying torches, and calling out battle cries; chants of “You will not replace us!” and “Deus Vult!” echoed through the streets.[1] By sundown on the 13th, there was a memorial of flower wreaths on the ground for Heather Heyer, struck down by a car in the midst of the protests.[2] Coverage of the violence largely associated the protests with the “Alt-Right,” a loosely-defined collection of radical American nationalists with undertones of racism and extremism.[3] Immense violence and tragedy came about in this single instance of conflict spurred on by the Alt-Right. However, in recent months, the consensus in news media has been that the movement is dead, as authors claim that the Alt-Right “has grown increasingly chaotic and fractured, torn apart by infighting and legal troubles,” and cite lawsuits and arrests, fundraising difficulties, tepid recruitment, counter protests, and banishment from social media platforms.[4] [1] Staff, “Deconstructing the symbols and slogans spotted in Charlottesville,” accessed 14 April 2018, https://www.washingtonpost.com/graphics/2017/local/charlottesville-videos/?utm_term=.370f3936a4be.

Abstract Book

12th Annual International Conference on Communication and Mass Media, 12-15 May, Athens, Greece: 2014 Abstract Book. Communication & Mass Media Abstracts. Twelfth Annual International Conference on Communication and Mass Media, 12-15 May 2014, Athens, Greece. Edited by Gregory T. Papanikos. The Athens Institute for Education and Research. First Published in Athens, Greece by the Athens Institute for Education and Research. ISBN: 978-618-5065-38-6. All rights reserved. No part of this publication may be reproduced, stored, retrieved system, or transmitted, in any form or by any means, without the written permission of the publisher, nor be otherwise circulated in any form of binding or cover. 8 Valaoritou Street, Kolonaki, 10671 Athens, Greece. www.atiner.gr ©Copyright 2014 by the Athens Institute for Education and Research. The individual essays remain the intellectual properties of the contributors. TABLE OF CONTENTS (In Alphabetical Order

The Alt-Right on Campus: What Students Need to Know

THE ALT-RIGHT ON CAMPUS: WHAT STUDENTS NEED TO KNOW. About the Southern Poverty Law Center: The Southern Poverty Law Center is dedicated to fighting hate and bigotry and to seeking justice for the most vulnerable members of our society. Using litigation, education, and other forms of advocacy, the SPLC works toward the day when the ideals of equal justice and equal opportunity will become a reality. For more information about the Southern Poverty Law Center or to obtain additional copies of this guidebook, contact [email protected] or visit www.splconcampus.org. @splcenter, facebook/SPLCenter, facebook/SPLConcampus. © 2017 Southern Poverty Law Center. Richard Spencer is a leading alt-right speaker. The Alt-Right and Extremism on Campus: An old and familiar poison is being spread on college campuses these days: the idea that America should be a country for white people. Under the banner of the Alternative Right – or “alt-right” – extremist speakers are touring colleges and universities across the country to recruit students to their brand of bigotry, often igniting protests and making national headlines. Their appearances have inspired a fierce debate over free speech and the direction of the country. [...] ocratic ideals. They claim that “white identity” is under attack by multicultural forces using “political correctness” and “social justice” to undermine white people and “their” civilization. Characterized by heavy use of social media and memes, they eschew establishment conservatism and promote the goal of a white ethnostate, or homeland. As student activists, you can counter this movement. In this brochure, the Southern Poverty Law Center examines the alt-right, profiles its key figures

Ron Reagan Does Not Consider Himself an Activist

Escaping from my father’s Westboro Baptist Church (pages 10-11). Top winners named in People of Color student essay contest (pages 12-16). ‘Nothing Fails Like Prayer’ contest winners announced (page 18). Vol. 37 No. 9. Published by the Freedom From Religion Foundation, Inc. November 2020. Supreme Court now taken over by Christian Nationalists: President Trump’s newly confirmed Supreme Court Justice Amy Coney Barrett is going to be a disaster for the constitutional principle of separation between state and church and will complete the Christian Nationalist takeover of the high court for more than a generation, the Freedom From Religion Foundation asserts. Barrett’s biography and writings reveal a startling, life-long allegiance to religion over the law. The 48-year-old Roman Catholic attended a Catholic high school and a Presbyterian-affiliated college and then graduated from Notre Dame Law School, where she taught for 15 years. She clerked for archconservative Justice Antonin Scalia, and significantly, like the late justice, is considered an “originalist” or “textualist” who insists on applying what is claimed to be the “original intent” of the framers. She and her parents have belonged to a fringe conservative Christian group, People of Praise, which teaches that husbands are the heads of household. Barrett’s nomination hearing for a judgeship on the 7th U.S. Circuit Court of Appeals, where she has served for less than three years, documented her many controversial and disturbing positions on religion vis-à-vis the law. Photo: Amy Coney Barrett. Ron Reagan: A leading figure of nonbelievers. Despite his many public statements on atheism, Ron Reagan does not consider himself an activist. Photo by David Ryder.

Sample Chapter of The Pandemic Population

2020 by Tim Elmore. All rights reserved. You have been granted the non-exclusive, non-transferable right to access and read the text of this e-book on screen. No parts of this book may be reproduced, transmitted, decompiled, reverse engineered, or stored in or introduced into any information storage or retrieval system in any form by any means, whether electronic or mechanical, now known or hereinafter invented, without express written permission from the publisher. Published by Amazon in association with Growing Leaders, Inc. PandemicPopulation.com. Pandemics, Protests, and Panic Attacks: A History Defined by Tragedy. There is an age-old story that illustrates the state of millions of American teens today. It’s the tale of a man who sat in a local diner waiting for his lunch. His countenance was down, he was feeling discouraged, and his tone was melancholy. When his waitress saw he was feeling low, she immediately suggested he go see Grimaldi. The circus was in town, and Grimaldi was a clown who made everyone laugh. The waitress was certain Grimaldi could cheer up her sad customer. Little did she know with whom she was speaking. The man looked up at her and replied, “But, ma’am. I am Grimaldi.” In many ways, this is a picture of the Pandemic Population. On the outside, they’re clowning around on Snapchat and TikTok, laughing at memes and making others laugh at filtered photos on social media. Inside, however, their mental health has gone south. It appears their life is a comedy, but in reality, it feels like a tragedy.

Alone Together: Exploring Community on an Incel Forum

Alone Together: Exploring Community on an Incel Forum, by Vanja Zdjelar. B.A. (Hons., Criminology), Simon Fraser University, 2016; B.A. (Political Science and Communication), Simon Fraser University, 2016. Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts in the School of Criminology, Faculty of Arts and Social Sciences. © Vanja Zdjelar 2020. Simon Fraser University, Fall 2020. Copyright in this work rests with the author. Please ensure that any reproduction or re-use is done in accordance with the relevant national copyright legislation. Declaration of Committee. Name: Vanja Zdjelar. Degree: Master of Arts. Thesis title: Alone Together: Exploring Community on an Incel Forum. Committee: Chair: Bryan Kinney, Associate Professor, Criminology; Garth Davies, Supervisor, Associate Professor, Criminology; Sheri Fabian, Committee Member, University Lecturer, Criminology; David Hofmann, Examiner, Associate Professor, Sociology, University of New Brunswick. Abstract: Incels, or involuntary celibates, are men who are angry and frustrated at their inability to find sexual or intimate partners. This anger has repeatedly resulted in violence against women. Because incels are a relatively new phenomenon, there are many gaps in our knowledge, including how, and to what extent, incel forums function as online communities. The current study begins to fill this lacuna by qualitatively analyzing the incels.co forum to understand how community is created through online discourse. Both inductive and deductive thematic analyses were conducted on 17 threads (3400 posts). The results confirm that the incels.co forum functions as a community. Four themes in relation to community were found: The incel brotherhood; We can disagree, but you’re wrong; We are all coping here; and Will the real incel come forward.

Web of Lies: Hate Speech, Pseudonyms, the Internet, Impersonator Trolls, and Fake Jews in the Era of Fake News

The Ohio State Technology Law Journal. WEB OF LIES: HATE SPEECH, PSEUDONYMS, THE INTERNET, IMPERSONATOR TROLLS, AND FAKE JEWS IN THE ERA OF FAKE NEWS. Yitzchak Besser.[1] This Article discusses the problem of “hate-speech impersonator trolls,” that is, those who impersonate minorities through the use of false identities online, and then use those false identities to harm those minorities through disinformation campaigns and false-flag operations. Solving this problem requires a change to the status quo, either through the passage of a new statute targeting hate-speech impersonator trolls or through the modification of Section 230 of the Communications Decency Act. In this Article, I discuss the scope and severity of hate-speech impersonator-trolling, as well as relevant jurisprudence on the First Amendment, hate speech, anonymity, and online communications. I then present proposals and recommendations to counter and combat hate-speech impersonator trolls. Contents: I. Introduction; II. Impersonation as a Form of Hate Speech; III. First Amendment Jurisprudence. [1] The author is a term law clerk for Senior U.S. District Judge Glen H. Davidson of the Northern District of Mississippi. He graduated magna cum laude from the University of Baltimore School of Law in May 2020. He is grateful to Professors Jerry "Matt" Bodman and Phillip J. Closius for their advice during the writing and editing process.

2020 HERA Conference Program

2020 HERA Conference Program. Humanities Education & Research Association, March 4-7, 2020, Chicago. Conference Headquarters: The Palmer House. Wednesday, March 4. Sessions and Registration, 1-5:00 PM, Room: Crystal. HERA Board Meeting, 6:15-9:30 PM, Room: Honoré. Session I, 2:00-3:15 PM. 1. Humanistic Power: Values and Critical Thinking. Room: Wilson. Chair: Thomas Ruddick. “What Does It Mean to Have Value? Exploring the Problematic Term at the Center of the ‘Humanities Debate,’” Brian Ballentine, Rutgers University; “The Power of the Humanities: Are We Aiding or Adding to Our Struggles?” Jessica Whitaker, Winthrop University; “Critical Thinking Matters. Humanities Should Care,” Thomas Ruddick, HERA Board Member. 2. Inspiring New Creativities and Modes of Consciousness. Room: Marshfield. Chair: Maryna Teplova. “The Awakening of Mythic Consciousness in Immensely ‘Charged’ Places,” Arsenio Rodrigues, Bowling Green State University; “Selfish Plants and Multispecies Creativity,” Abigail Bowen, Trinity University; “Constructing Utopia in Ursula Le Guin’s The Left Hand of Darkness,” Maryna Teplova, Illinois State University. Session II, 3:15-4:30 PM. 3. PANEL: Like a God, the Humanities Makes or Breaks Everything It Touches. Room: Wilson. “Like a God, the Humanities Makes or Breaks Everything It Touches,” Jared Pearce, William Penn University, Iowa; “Disrupting ‘Normative Processes’: Queer Theory and the Critique of Efficiency and Balance in the Composition Class,” Wilton Wright, William Penn University, Iowa; “Something for Everyone: Humanities and Interdisciplinarity,” Samantha Allen, William Penn University, Iowa; “Making Do: A Community-Focused Methodology for Institutional Change,” Chad Seader, William Penn University, Iowa. 4. PANEL: Corporate Discourse and Image Repair. Room: Marshfield. “Wells Fargo: An analysis of CEO Timothy Sloan’s discourse to repair confidence during a banking scandal,” Ashlee Carr, Southeastern Oklahoma State University; “Samsung Galaxy Note 7: An Exploration of Administrative Discourse During a Crisis Episode,” Spencer Patton, Southeastern Oklahoma State University. 5.

A Psychological Profile of the Alt-Right (Version 2, 08/09/2017 20:48:33)

A Psychological Profile of the Alt-Right. Patrick S. Forscher (1) and Nour S. Kteily (2). (1) Department of Psychological Science, University of Arkansas; (2) Department of Management and Organizations, Kellogg School of Management, Northwestern University. Author notes: Data and materials for this project can be found at https://osf.io/xge8q/ Conceived research: Forscher & Kteily; Designed research: Forscher & Kteily; Collected data: Forscher & Kteily; Analyzed data: Forscher & Kteily; Wrote paper: Forscher & Kteily; Revised paper: Forscher & Kteily. Address correspondence to Patrick S. Forscher, Department of Psychological Science, University of Arkansas, 216 Memorial Hall, Fayetteville, AR, 72701 (Email: [email protected]). Abstract: The 2016 U.S. presidential election coincided with the rise of the “alternative right” or “alt-right”. Although alt-right associates wield considerable influence on the current administration, the movement’s loose organizational structure has led to disparate portrayals of its members’ psychology, compounded by a lack of empirical investigation. We surveyed 447 alt-right adherents on a battery of psychological measures, comparing their responses to those of 382 non-adherents. Alt-right adherents were much more distrustful of the mainstream media and government; expressed higher Dark Triad traits, social dominance orientation, and authoritarianism; reported high levels of aggression; and exhibited extreme levels of overt intergroup bias, including blatant dehumanization of racial minorities. Cluster analyses suggest that alt-right supporters may separate into two subgroups: one more populist and anti-establishmentarian and the other more supremacist and motivated by maintaining social hierarchy. We argue for the need to give overt bias greater empirical and theoretical consideration in contemporary intergroup research.