A Survey of Interaction Techniques for Interactive 3D Environments Jacek Jankowski, Martin Hachet

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Computer Essentials – Session 1 – Step-By-Step Guide

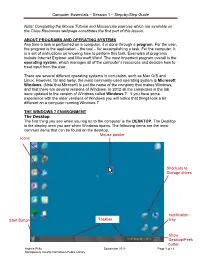

Andrea Philo, Montgomery County-Norristown Public Library, September 2012. Note: Completing the Mouse Tutorial and Mousercise exercise, which are available on the Class Resources webpage, constitutes the first part of this lesson. ABOUT PROGRAMS AND OPERATING SYSTEMS: Any time a task is performed on a computer, it is done through a program. For the user, the program is the application (the tool) for accomplishing a task. For the computer, it is a set of instructions for performing that task. Examples of programs include Internet Explorer and Microsoft Word. The most important program overall is the operating system, which manages all of the computer’s resources and decides how to treat input from the user. There are several different operating systems in circulation, such as Mac OS and Linux. However, the most commonly used operating system by far is Microsoft Windows. (Note that Microsoft is just the name of the company that makes Windows, and that there are several versions of Windows.) In 2012 all the computers in the lab were updated to the version of Windows called Windows 7. If you have some experience with older versions of Windows, you will notice that things look a bit different on a computer running Windows 7. THE WINDOWS 7 ENVIRONMENT: The Desktop. The first thing you see when you log on to the computer is the DESKTOP. The Desktop is the display area you see when Windows opens. The following are the most common items found on the desktop: mouse pointer, icons, shortcuts to storage drives, Start button, taskbar, notification tray, and Show Desktop/Peek button. Parts of the Windows 7 Desktop: Icon: A picture representing a program or file or places to store files.

Chapter 2 3D User Interfaces: History and Roadmap

Three-dimensional UI design is not a traditional field of research with well-defined boundaries. Like human–computer interaction (HCI), it draws from many disciplines and has links to a wide variety of topics. In this chapter, we briefly describe the history of 3D UIs to set the stage for the rest of the book. We also present a 3D UI “roadmap” that positions the topics covered in this book relative to associated areas. After reading this chapter, you should have an understanding of the origins of 3D UIs and their relation to other fields, and you should know what types of information to expect from the remainder of this book. 2.1. History of 3D UIs: The graphical user interfaces (GUIs) used in today’s personal computers have an interesting history. Prior to 1980, almost all interaction with computers was based on typing complicated commands using a keyboard. The display was used almost exclusively for text, and when graphics were used, they were typically noninteractive. But around 1980, several technologies, such as the mouse, inexpensive raster graphics displays, and reasonably priced personal computer parts, were all mature enough to enable the first GUIs (such as the Xerox Star). With the advent of GUIs, UI design and HCI in general became a much more important research area, since the research affected everyone using computers. HCI is an interdisciplinary field that draws from existing knowledge in perception, cognition, linguistics, human factors, ethnography, graphic design, and other areas.

Adding the Maine Prescription Monitoring System As a Favorite

Beginning 1/1/17, all newly prescribed narcotic medications ordered when discharging a patient from the Emergency Department or an Inpatient department must be recorded in the Maine prescription monitoring system (Maine PMP). Follow these steps to access the Maine PMP site from the SeHR/Epic system and create shortcuts to the activity to simplify access. Access the Maine PMP site from Hyperspace: 1. Follow this path for the initial launch of the Maine PMP site: Epic>Help>Maine PMP Program. Please note: the Maine PMP database is managed and maintained by the State of Maine and does NOT support single sign-on. 2. Click Login to enter your Maine PMP user name and password. a. If you know your user name and password, click Login. b. To retrieve your user name, click Retrieve User Name. c. To retrieve your password, click Retrieve Password. Save the Maine PMP site as a favorite under the Epic button: After accessing the site from the Epic menu path, you can make the link a favorite under the Epic menu. 1. Click the Epic button. The Maine PMP program hyperlink will appear under your Recent activities; click the Star icon to save it as a favorite. After starring the activity, it becomes a favorite stored under the Epic button. Save the Maine PMP site as a Quick Button on your Epic Toolbar: After accessing the site from the Epic menu path, you may choose to make the Maine PMP hyperlink a quick button on your main Epic toolbar.

The Three-Dimensional User Interface

Hou Wenjun, Beijing University of Posts and Telecommunications, China. 1. Introduction: This chapter introduces the three-dimensional user interface (3D UI). With the emergence of Virtual Environments (VE), augmented reality, pervasive computing, and other technologies that move away from the desktop, 3D UI is becoming an increasingly important area. However, for most users, desktop-based 3D UI is still a part that cannot be ignored. This chapter explains what 3D UI is and why it matters, and analyses some 3D UI applications. At the same time, drawing on human-computer interaction strategy and on the research methods and conclusions of WIMP, it focuses on desktop 3D UI and sums up some design principles of 3D UI. Starting from the principles of human spatial perception and spatial cognition, the chapter explains depth cues and other theoretical knowledge, and introduces the Hierarchical Semantic model of “UE”, the Scenario-based User Behavior Model, and Screen Layout for Information Minimization, which can guide the design and development of 3D UI. The chapter focuses on the basic elements of 3D interaction behavior: Manipulation, Navigation, and System Control. It describes how, in a 3D UI, to manipulate virtual objects effectively through Manipulation, which is the most fundamental task; how to reduce the user's cognitive load and enhance the user's spatial knowledge during exploration through Navigation; and how to issue a command, request that the system perform a specific function, or change the system status or the interaction mode through System Control.

Basic Computer Lesson

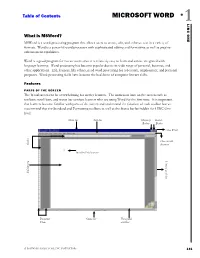

MICROSOFT WORD, LINC ONE (from A Software Guide for LINC Instructors). What is MSWord? MSWord is a word-processing program that allows users to create, edit, and enhance text in a variety of formats. Word is a powerful word processor with sophisticated editing and formatting as well as graphic-enhancement capabilities. Word is a good program for novice users since it is relatively easy to learn and can be integrated with language learning. Word processing has become popular due to its wide range of personal, business, and other applications. ESL learners, like others, need word processing for job search, employment, and personal purposes. Word-processing skills have become the backbone of computer literacy skills. Features: PARTS OF THE SCREEN. The Word screen can be overwhelming for novice learners. The numerous bars on the screen, such as toolbars, scroll bars, and the status bar, confuse learners who are using Word for the first time. It is important that learners become familiar with parts of the screen and understand the function of each toolbar, but we recommend that the Standard and Formatting toolbars as well as the Status bar be hidden for LINC One level. [Figure: the Word screen, with labels for the Menu bar, Title bar, Minimize button, Restore button, Close Word, Close current document, Rulers, Insertion Point (cursor), Vertical scroll bar, Editing area, Document Views, Status bar, and Horizontal scroll bar.] Hiding Standard toolbar, Formatting toolbar, and Status bar: • To hide the Standard toolbar, click View | Toolbars on the Menu bar. Check off Standard. • To hide the Formatting toolbar, click View | Toolbars on the Menu bar.

Acrobuttons Quick Start Tutorial

AcroButtons User’s Manual, WindJack Solutions, Inc. AcroButtons© Quick Start Guide. AcroButtons by WindJack Solutions, Inc.: an Adobe® Acrobat® plug-in for creating Toolbar Buttons. The Fastest Button: There are 5 quick steps to creating a custom toolbar button. 1. Create a New Button. 2. Name the button. 3. Select the image to appear on the button face. 4. Select the Action the button will perform. 5. Save the button to a file. Create a New Button: There are two ways to start making your own Acrobat Toolbar Button. 1. Press the button on Acrobat’s Advanced Editing Toolbar. If this toolbar is not visible, then activate it from the menu option View–> Toolbars–> Advanced Editing. 2. Select the menu option Advanced–>AcroButtons–>Create New AcroButton. Each of these Actions starts the process of creating a new toolbar button by displaying the Properties Panel for a new button. All values are set to defaults, including the button icon. (See The Properties Panel in the AcroButtons User’s Manual.) At this point you could press “Ok” and a new toolbar button would appear on Acrobat’s toolbar. The image on the new button would be the icon that’s currently showing in the Properties Panel (question mark with a red slash through it). This button wouldn’t be very interesting, though, because it doesn’t do anything. Name the Button: Change the default name, “Untitled0”, to something more descriptive. For this example change it to “NextPage”. The button’s name is important for several different reasons.

What Is Interaction for Data Visualization? Evanthia Dimara, Charles Perin

To cite this version: Evanthia Dimara, Charles Perin. What is Interaction for Data Visualization?. IEEE Transactions on Visualization and Computer Graphics, Institute of Electrical and Electronics Engineers, 2020, 26 (1), pp.119 - 129. 10.1109/TVCG.2019.2934283. hal-02197062. HAL Id: hal-02197062, https://hal.archives-ouvertes.fr/hal-02197062. Submitted on 30 Jul 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Abstract: Interaction is fundamental to data visualization, but what “interaction” means in the context of visualization is ambiguous and confusing. We argue that this confusion is due to a lack of consensual definition. To tackle this problem, we start by synthesizing an inclusive view of interaction in the visualization community, including insights from information visualization, visual analytics and scientific visualization, as well as the input of both senior and junior visualization researchers. Once this view takes shape, we look at how interaction is defined in the field of human-computer interaction (HCI). By extracting commonalities and differences between the views of interaction in visualization and in HCI, we synthesize a definition of interaction for visualization.

HTML Viewer Page 5

HTML Report Viewer. Version: 18.1. Copyright © 2015 Intellicus Technologies. This document and its content is copyrighted material of Intellicus Technologies. The content may not be copied or derived from, through any means, in parts or in whole, without prior written permission from Intellicus Technologies. All other product names are believed to be registered trademarks of the respective companies. Dated: August 2015. Acknowledgements: Intellicus acknowledges the use of third-party libraries to extend support to the functionalities that they provide. For details, visit: http://www.intellicus.com/acknowledgements.htm. Contents: 1 HTML Report Viewer; HTML Viewer page; Navigate; Table of Contents; Refresh the report; Collaborate; Email; Upload; Print; Publish; Export; View Alerts. 1 HTML Report Viewer: Reports are displayed in HTML Viewer when you select the View > HTML option on the Report Delivery Options page. [Figure 1: View > HTML on Report Delivery Options page] In addition to viewing a report, you can carry out the following activities in HTML Viewer: • Navigate • Refresh the report • Collaborate • Email • Print • Publish • Export • Upload • View Alerts. You can initiate any of these activities by clicking the respective tool button on the toolbar. HTML Viewer page: Depending on the report being viewed and the steps taken as post-view operations, it may have multiple components on it: Toolbar, Explore Pane, and Page area. [Figure 2: HTML Viewer] HTML Viewer has three main components. At the top is the toolbar, which has buttons for all the operations that can be done in the Viewer. The Explore pane appears on the left side of the window.

Introduction to Office 2007 the Ribbon Home

The Introduction to Office 2007 class will show what’s different in Office 2007. The Ribbon at the top of the page has replaced menus and toolbars in Word, Excel, PowerPoint, Access and new messages in Outlook. This class will cover the use of the Ribbon, the Office Button (where is the File menu?), getting Help and online Training, the Mini Toolbar, the Quick Access Toolbar, new File Formats, and a Few Fun Features. The class and short handout are designed so that when you return to your desk you can begin using the Office 2007 products. There are three major differences in Office 2007: the Ribbon, the Office Button and the new file formats. The Ribbon: The Ribbon at the top of the page has replaced menus and toolbars in Word, Excel, PowerPoint, Access and new messages in Outlook. Commands are buttons, menus or boxes where you enter information. Groups are sets of related commands. Tabs represent core tasks. Home Tab: Try it: 1. Start Word 2007, Excel 2007 & PowerPoint 2007. 2. Click on each tab to display different groups of commands. 3. Hover over a command for Enhanced Toolbar Tips. Note: keyboard shortcuts are shown if available. The Home Tab displays the most commonly used commands. In Word and Excel these include Copy, Cut, and Paste, Bold, Italic, Underscore etc. The commands are arranged in groups: Clipboard, Font, Paragraph, Styles and Editing. The most frequently used commands, Paste, Cut and Copy, are the leftmost in the first group in the Home Tab. The less frequently used commands or command choices can Try it: 1.

A Review of User Interface Design for Interactive Machine Learning

John J. Dudley, University of Cambridge, United Kingdom; Per Ola Kristensson, University of Cambridge, United Kingdom. Interactive Machine Learning (IML) seeks to complement human perception and intelligence by tightly integrating these strengths with the computational power and speed of computers. The interactive process is designed to involve input from the user but does not require the background knowledge or experience that might be necessary to work with more traditional machine learning techniques. Under the IML process, non-experts can apply their domain knowledge and insight over otherwise unwieldy datasets to find patterns of interest or develop complex data driven applications. This process is co-adaptive in nature and relies on careful management of the interaction between human and machine. User interface design is fundamental to the success of this approach, yet there is a lack of consolidated principles on how such an interface should be implemented. This article presents a detailed review and characterisation of Interactive Machine Learning from an interactive systems perspective. We propose and describe a structural and behavioural model of a generalised IML system and identify solution principles for building effective interfaces for IML. Where possible, these emergent solution principles are contextualised by reference to the broader human-computer interaction literature. Finally, we identify strands of user interface research key to unlocking more efficient and productive non-expert interactive machine learning applications. CCS Concepts: • Human-centered computing → HCI theory, concepts and models. Additional Key Words and Phrases: Interactive machine learning, interface design. ACM Reference Format: John J.

Interaction Design and Children

Foundations and Trends® in Human–Computer Interaction, Vol. 1, No. 4 (2007) 277–392. © 2008 J. P. Hourcade. DOI: 10.1561/1100000006. Juan Pablo Hourcade, University of Iowa, USA, [email protected]. Abstract: Children are increasingly using computer technologies, as reflected in reports of computer use in schools in the United States. Given the greater exposure of children to these technologies, it is imperative that they be designed taking into account children’s abilities, interests, and developmental needs. This survey aims to contribute toward this goal through a review of research on children’s cognitive and motor development, safety issues related to technologies, and design methodologies and principles. It also provides an overview of current research trends in the field of interaction design and children and identifies challenges for future research. To understand children’s developmental needs it is important to be aware of the factors that affect children’s intellectual development. This survey analyzes the relevance of constructivist, socio-cultural, and other modern theories with respect to the design of technologies for children. It also examines the significance of research on children’s cognitive development in terms of perception, memory, symbolic representation, problem solving, and language. Since interacting with technologies most often involves children’s hands, this survey also reviews literature on children’s fine motor development, including manipulation and reaching movements. Just as it is important to know how to aid children’s development, it is also crucial to avoid harming development. This survey summarizes research on how technologies can negatively affect children’s physical, intellectual, social, emotional, and moral development.

Remove ANY TOOLBAR from Internet Explorer, Firefox and Chrome

Browser toolbars have been around for years; in the last couple of months, however, they have become a huge mess. Unfortunately, lots of free software comes with more or less unwanted add-ons or browser toolbars. These are quite annoying because they may: change your homepage and your search engine without your permission or awareness; track your browsing activities and searches; display annoying ads and manipulate search results; take up a lot of (vertical) space inside the browser; slow down your browser and degrade your browsing experience; fight against each other and make normal add-on handling difficult or impossible; and become difficult or even impossible for the average user to fully uninstall. Toolbars are technically not a virus, but they do exhibit plenty of malicious traits, such as rootkit capabilities to hook deep into the operating system, browser hijacking, and in general just interfering with the user experience. The industry generally refers to such software as a “PUP,” or potentially unwanted program. Generally speaking, toolbars are ad-supported (users may see additional banner, search, pop-up, pop-under, interstitial and in-text link advertisements) cross-browser plugins for Internet Explorer, Firefox and Chrome, distributed through various monetization platforms during installation. Very often users have no idea where a toolbar came from, so it’s not surprising that most of them assume the installed toolbar is a virus. For example, when you install iLivid Media Player, you will also agree to change your browser homepage to search.conduit.com, set your default search engine to Conduit Search, and install the AVG Search-Results Toolbar.