ACID, CAP, No Newsql

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Smart Methods for Retrieving Physiological Data in Physiqual

University of Groningen Bachelor thesis Smart methods for retrieving physiological data in Physiqual Marc Babtist & Sebastian Wehkamp Supervisors: Frank Blaauw & Marco Aiello July, 2016 Contents 1 Introduction 4 1.1 Physiqual . 4 1.2 Problem description . 4 1.3 Research questions . 5 1.4 Document structure . 5 2 Related work 5 2.1 IJkdijk project . 5 2.2 MUMPS . 6 3 Background 7 3.1 Scalability . 7 3.2 CAP theorem . 7 3.3 Reliability models . 7 4 Requirements 8 4.1 Scalability . 8 4.2 Reliability . 9 4.3 Performance . 9 4.4 Open source . 9 5 General database options 9 5.1 Relational or Non-relational . 9 5.2 NoSQL categories . 10 5.2.1 Key-value Stores . 10 5.2.2 Document Stores . 11 5.2.3 Column Family Stores . 11 5.2.4 Graph Stores . 12 5.3 When to use which category . 12 5.4 Conclusion . 12 5.4.1 Key-Value . 13 5.4.2 Document stores . 13 5.4.3 Column family stores . 13 5.4.4 Graph Stores . 13 6 Specific database options 13 6.1 Key-value stores: Riak . 14 6.2 Document stores: MongoDB . 14 6.3 Column Family stores: Cassandra . 14 6.4 Performance comparison . 15 6.4.1 Sensor Data Storage Performance . 16 6.4.2 Conclusion . 16 7 Data model 17 7.1 Modeling rules . 17 7.2 Data model Design . 18 2 7.3 Summary . 18 8 Architecture 20 8.1 Basic layout . 20 8.2 Sidekiq . 21 9 Design decisions 21 9.1 Keyspaces . 21 9.2 Gap determination . -

Not ACID, Not BASE, but SALT a Transaction Processing Perspective on Blockchains

Not ACID, not BASE, but SALT A Transaction Processing Perspective on Blockchains Stefan Tai, Jacob Eberhardt and Markus Klems Information Systems Engineering, Technische Universitat¨ Berlin fst, je, [email protected] Keywords: SALT, blockchain, decentralized, ACID, BASE, transaction processing Abstract: Traditional ACID transactions, typically supported by relational database management systems, emphasize database consistency. BASE provides a model that trades some consistency for availability, and is typically favored by cloud systems and NoSQL data stores. With the increasing popularity of blockchain technology, another alternative to both ACID and BASE is introduced: SALT. In this keynote paper, we present SALT as a model to explain blockchains and their use in application architecture. We take both, a transaction and a transaction processing systems perspective on the SALT model. From a transactions perspective, SALT is about Sequential, Agreed-on, Ledgered, and Tamper-resistant transaction processing. From a systems perspec- tive, SALT is about decentralized transaction processing systems being Symmetric, Admin-free, Ledgered and Time-consensual. We discuss the importance of these dual perspectives, both, when comparing SALT with ACID and BASE, and when engineering blockchain-based applications. We expect the next-generation of decentralized transactional applications to leverage combinations of all three transaction models. 1 INTRODUCTION against. Using the admittedly contrived acronym of SALT, we characterize blockchain-based transactions There is a common belief that blockchains have the – from a transactions perspective – as Sequential, potential to fundamentally disrupt entire industries. Agreed, Ledgered, and Tamper-resistant, and – from Whether we are talking about financial services, the a systems perspective – as Symmetric, Admin-free, sharing economy, the Internet of Things, or future en- Ledgered, and Time-consensual. -

Failures in DBMS

Chapter 11 Database Recovery 1 Failures in DBMS Two common kinds of failures StSystem filfailure (t)(e.g. power outage) ‒ affects all transactions currently in progress but does not physically damage the data (soft crash) Media failures (e.g. Head crash on the disk) ‒ damagg()e to the database (hard crash) ‒ need backup data Recoveryyp scheme responsible for handling failures and restoring database to consistent state 2 Recovery Recovering the database itself Recovery algorithm has two parts ‒ Actions taken during normal operation to ensure system can recover from failure (e.g., backup, log file) ‒ Actions taken after a failure to restore database to consistent state We will discuss (briefly) ‒ Transactions/Transaction recovery ‒ System Recovery 3 Transactions A database is updated by processing transactions that result in changes to one or more records. A user’s program may carry out many operations on the data retrieved from the database, but the DBMS is only concerned with data read/written from/to the database. The DBMS’s abstract view of a user program is a sequence of transactions (reads and writes). To understand database recovery, we must first understand the concept of transaction integrity. 4 Transactions A transaction is considered a logical unit of work ‒ START Statement: BEGIN TRANSACTION ‒ END Statement: COMMIT ‒ Execution errors: ROLLBACK Assume we want to transfer $100 from one bank (A) account to another (B): UPDATE Account_A SET Balance= Balance -100; UPDATE Account_B SET Balance= Balance +100; We want these two operations to appear as a single atomic action 5 Transactions We want these two operations to appear as a single atomic action ‒ To avoid inconsistent states of the database in-between the two updates ‒ And obviously we cannot allow the first UPDATE to be executed and the second not or vice versa. -

Oracle Vs. Nosql Vs. Newsql Comparing Database Technology

Oracle vs. NoSQL vs. NewSQL Comparing Database Technology John Ryan Senior Solution Architect, Snowflake Computing Table of Contents The World has Changed . 1 What’s Changed? . 2 What’s the Problem? . .. 3 Performance vs. Availability and Durability . 3 Consistecy vs. Availability . 4 Flexibility vs . Scalability . 5 ACID vs. Eventual Consistency . 6 The OLTP Database Reimagined . 7 Achieving the Impossible! . .. 8 NewSQL Database Technology . 9 VoltDB . 10 MemSQL . 11 Which Applications Need NewSQL Technology? . 12 Conclusion . 13 About the Author . 13 ii The World has Changed The world has changed massively in the past 20 years. Back in the year 2000, a few million users connected to the web using a 56k modem attached to a PC, and Amazon only sold books. Now billions of people are using to their smartphone or tablet 24x7 to buy just about everything, and they’re interacting with Facebook, Twitter and Instagram. The pace has been unstoppable . Expectations have also changed. If a web page doesn’t refresh within seconds we’re quickly frustrated, and go elsewhere. If a web site is down, we fear it’s the end of civilisation as we know it. If a major site is down, it makes global headlines. Instant gratification takes too long! — Ladawn Clare-Panton Aside: If you’re not a seasoned Database Architect, you may want to start with my previous articles on Scalability and Database Architecture. Oracle vs. NoSQL vs. NewSQL eBook 1 What’s Changed? The above leads to a few observations: • Scalability — With potentially explosive traffic growth, IT systems need to quickly grow to meet exponential numbers of transactions • High Availability — IT systems must run 24x7, and be resilient to failure. -

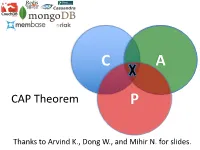

CAP Theorem P

C A CAP Theorem P Thanks to Arvind K., Dong W., and Mihir N. for slides. CAP Theorem • “It is impossible for a web service to provide these three guarantees at the same time (pick 2 of 3): • (Sequential) Consistency • Availability • Partition-tolerance” • Conjectured by Eric Brewer in ’00 • Proved by Gilbert and Lynch in ’02 • But with definitions that do not match what you’d assume (or Brewer meant) • Influenced the NoSQL mania • Highly controversial: “the CAP theorem encourages engineers to make awful decisions.” – Stonebraker • Many misinterpretations 2 CAP Theorem • Consistency: – Sequential consistency (a data item behaves as if there is one copy) • Availability: – Node failures do not prevent survivors from continuing to operate • Partition-tolerance: – The system continues to operate despite network partitions • CAP says that “A distributed system can satisfy any two of these guarantees at the same time but not all three” 3 C in CAP != C in ACID • They are different! • CAP’s C(onsistency) = sequential consistency – Similar to ACID’s A(tomicity) = Visibility to all future operations • ACID’s C(onsistency) = Does the data satisfy schema constraints 4 Sequential consistency • Makes it appear as if there is one copy of the object • Strict ordering on ops from same client • A single linear ordering across client ops – If client a executes operations {a1, a2, a3, ...}, client b executes operations {b1, b2, b3, ...} – Then, globally, clients observe some serialized version of the sequence • e.g., {a1, b1, b2, a2, ...} (or whatever) Notice -

SQL Vs Nosql: a Performance Comparison

SQL vs NoSQL: A Performance Comparison Ruihan Wang Zongyan Yang University of Rochester University of Rochester [email protected] [email protected] Abstract 2. ACID Properties and CAP Theorem We always hear some statements like ‘SQL is outdated’, 2.1. ACID Properties ‘This is the world of NoSQL’, ‘SQL is still used a lot by We need to refer the ACID properties[12]: most of companies.’ Which one is accurate? Has NoSQL completely replace SQL? Or is NoSQL just a hype? SQL Atomicity (Structured Query Language) is a standard query language A transaction is an atomic unit of processing; it should for relational database management system. The most popu- either be performed in its entirety or not performed at lar types of RDBMS(Relational Database Management Sys- all. tems) like Oracle, MySQL, SQL Server, uses SQL as their Consistency preservation standard database query language.[3] NoSQL means Not A transaction should be consistency preserving, meaning Only SQL, which is a collection of non-relational data stor- that if it is completely executed from beginning to end age systems. The important character of NoSQL is that it re- without interference from other transactions, it should laxes one or more of the ACID properties for a better perfor- take the database from one consistent state to another. mance in desired fields. Some of the NOSQL databases most Isolation companies using are Cassandra, CouchDB, Hadoop Hbase, A transaction should appear as though it is being exe- MongoDB. In this paper, we’ll outline the general differences cuted in iso- lation from other transactions, even though between the SQL and NoSQL, discuss if Relational Database many transactions are execut- ing concurrently. -

APPENDIX G Acid Dissociation Constants

harxxxxx_App-G.qxd 3/8/10 1:34 PM Page AP11 APPENDIX G Acid Dissociation Constants § ϭ 0.1 M 0 ؍ (Ionic strength ( † ‡ † Name Structure* pKa Ka pKa ϫ Ϫ5 Acetic acid CH3CO2H 4.756 1.75 10 4.56 (ethanoic acid) N ϩ H3 ϫ Ϫ3 Alanine CHCH3 2.344 (CO2H) 4.53 10 2.33 ϫ Ϫ10 9.868 (NH3) 1.36 10 9.71 CO2H ϩ Ϫ5 Aminobenzene NH3 4.601 2.51 ϫ 10 4.64 (aniline) ϪO SNϩ Ϫ4 4-Aminobenzenesulfonic acid 3 H3 3.232 5.86 ϫ 10 3.01 (sulfanilic acid) ϩ NH3 ϫ Ϫ3 2-Aminobenzoic acid 2.08 (CO2H) 8.3 10 2.01 ϫ Ϫ5 (anthranilic acid) 4.96 (NH3) 1.10 10 4.78 CO2H ϩ 2-Aminoethanethiol HSCH2CH2NH3 —— 8.21 (SH) (2-mercaptoethylamine) —— 10.73 (NH3) ϩ ϫ Ϫ10 2-Aminoethanol HOCH2CH2NH3 9.498 3.18 10 9.52 (ethanolamine) O H ϫ Ϫ5 4.70 (NH3) (20°) 2.0 10 4.74 2-Aminophenol Ϫ 9.97 (OH) (20°) 1.05 ϫ 10 10 9.87 ϩ NH3 ϩ ϫ Ϫ10 Ammonia NH4 9.245 5.69 10 9.26 N ϩ H3 N ϩ H2 ϫ Ϫ2 1.823 (CO2H) 1.50 10 2.03 CHCH CH CH NHC ϫ Ϫ9 Arginine 2 2 2 8.991 (NH3) 1.02 10 9.00 NH —— (NH2) —— (12.1) CO2H 2 O Ϫ 2.24 5.8 ϫ 10 3 2.15 Ϫ Arsenic acid HO As OH 6.96 1.10 ϫ 10 7 6.65 Ϫ (hydrogen arsenate) (11.50) 3.2 ϫ 10 12 (11.18) OH ϫ Ϫ10 Arsenious acid As(OH)3 9.29 5.1 10 9.14 (hydrogen arsenite) N ϩ O H3 Asparagine CHCH2CNH2 —— —— 2.16 (CO2H) —— —— 8.73 (NH3) CO2H *Each acid is written in its protonated form. -

Nosql Databases: Yearning for Disambiguation

NOSQL DATABASES: YEARNING FOR DISAMBIGUATION Chaimae Asaad Alqualsadi, Rabat IT Center, ENSIAS, University Mohammed V in Rabat and TicLab, International University of Rabat, Morocco [email protected] Karim Baïna Alqualsadi, Rabat IT Center, ENSIAS, University Mohammed V in Rabat, Morocco [email protected] Mounir Ghogho TicLab, International University of Rabat Morocco [email protected] March 17, 2020 ABSTRACT The demanding requirements of the new Big Data intensive era raised the need for flexible storage systems capable of handling huge volumes of unstructured data and of tackling the challenges that arXiv:2003.04074v2 [cs.DB] 16 Mar 2020 traditional databases were facing. NoSQL Databases, in their heterogeneity, are a powerful and diverse set of databases tailored to specific industrial and business needs. However, the lack of the- oretical background creates a lack of consensus even among experts about many NoSQL concepts, leading to ambiguity and confusion. In this paper, we present a survey of NoSQL databases and their classification by data model type. We also conduct a benchmark in order to compare different NoSQL databases and distinguish their characteristics. Additionally, we present the major areas of ambiguity and confusion around NoSQL databases and their related concepts, and attempt to disambiguate them. Keywords NoSQL Databases · NoSQL data models · NoSQL characteristics · NoSQL Classification A PREPRINT -MARCH 17, 2020 1 Introduction The proliferation of data sources ranging from social media and Internet of Things (IoT) to industrially generated data (e.g. transactions) has led to a growing demand for data intensive cloud based applications and has created new challenges for big-data-era databases. -

IBM Cloudant: Database As a Service Fundamentals

Front cover IBM Cloudant: Database as a Service Fundamentals Understand the basics of NoSQL document data stores Access and work with the Cloudant API Work programmatically with Cloudant data Christopher Bienko Marina Greenstein Stephen E Holt Richard T Phillips ibm.com/redbooks Redpaper Contents Notices . 5 Trademarks . 6 Preface . 7 Authors. 7 Now you can become a published author, too! . 8 Comments welcome. 8 Stay connected to IBM Redbooks . 9 Chapter 1. Survey of the database landscape . 1 1.1 The fundamentals and evolution of relational databases . 2 1.1.1 The relational model . 2 1.1.2 The CAP Theorem . 4 1.2 The NoSQL paradigm . 5 1.2.1 ACID versus BASE systems . 7 1.2.2 What is NoSQL? . 8 1.2.3 NoSQL database landscape . 9 1.2.4 JSON and schema-less model . 11 Chapter 2. Build more, grow more, and sleep more with IBM Cloudant . 13 2.1 Business value . 15 2.2 Solution overview . 16 2.3 Solution architecture . 18 2.4 Usage scenarios . 20 2.5 Intuitively interact with data using Cloudant Dashboard . 21 2.5.1 Editing JSON documents using Cloudant Dashboard . 22 2.5.2 Configuring access permissions and replication jobs within Cloudant Dashboard. 24 2.6 A continuum of services working together on cloud . 25 2.6.1 Provisioning an analytics warehouse with IBM dashDB . 26 2.6.2 Data refinement services on-premises. 30 2.6.3 Data refinement services on the cloud. 30 2.6.4 Hybrid clouds: Data refinement across on/off–premises. 31 Chapter 3. -

Drugs and Acid Dissociation Constants Ionisation of Drug Molecules Most Drugs Ionise in Aqueous Solution.1 They Are Weak Acids Or Weak Bases

Drugs and acid dissociation constants Ionisation of drug molecules Most drugs ionise in aqueous solution.1 They are weak acids or weak bases. Those that are weak acids ionise in water to give acidic solutions while those that are weak bases ionise to give basic solutions. Drug molecules that are weak acids Drug molecules that are weak bases where, HA = acid (the drug molecule) where, B = base (the drug molecule) H2O = base H2O = acid A− = conjugate base (the drug anion) OH− = conjugate base (the drug anion) + + H3O = conjugate acid BH = conjugate acid Acid dissociation constant, Ka For a drug molecule that is a weak acid The equilibrium constant for this ionisation is given by the equation + − where [H3O ], [A ], [HA] and [H2O] are the concentrations at equilibrium. In a dilute solution the concentration of water is to all intents and purposes constant. So the equation is simplified to: where Ka is the acid dissociation constant for the weak acid + + Also, H3O is often written simply as H and the equation for Ka is usually written as: Values for Ka are extremely small and, therefore, pKa values are given (similar to the reason pH is used rather than [H+]. The relationship between pKa and pH is given by the Henderson–Hasselbalch equation: or This relationship is important when determining pKa values from pH measurements. Base dissociation constant, Kb For a drug molecule that is a weak base: 1 Ionisation of drug molecules. 1 Following the same logic as for deriving Ka, base dissociation constant, Kb, is given by: and Ionisation of water Water ionises very slightly. -

Database Software Market: Billy Fitzsimmons +1 312 364 5112

Equity Research Technology, Media, & Communications | Enterprise and Cloud Infrastructure March 22, 2019 Industry Report Jason Ader +1 617 235 7519 [email protected] Database Software Market: Billy Fitzsimmons +1 312 364 5112 The Long-Awaited Shake-up [email protected] Naji +1 212 245 6508 [email protected] Please refer to important disclosures on pages 70 and 71. Analyst certification is on page 70. William Blair or an affiliate does and seeks to do business with companies covered in its research reports. As a result, investors should be aware that the firm may have a conflict of interest that could affect the objectivity of this report. This report is not intended to provide personal investment advice. The opinions and recommendations here- in do not take into account individual client circumstances, objectives, or needs and are not intended as recommen- dations of particular securities, financial instruments, or strategies to particular clients. The recipient of this report must make its own independent decisions regarding any securities or financial instruments mentioned herein. William Blair Contents Key Findings ......................................................................................................................3 Introduction .......................................................................................................................5 Database Market History ...................................................................................................7 Market Definitions -

A Big Data Roadmap for the DB2 Professional

A Big Data Roadmap For the DB2 Professional Sponsored by: align http://www.datavail.com Craig S. Mullins Mullins Consulting, Inc. http://www.MullinsConsulting.com © 2014 Mullins Consulting, Inc. Author This presentation was prepared by: Craig S. Mullins President & Principal Consultant Mullins Consulting, Inc. 15 Coventry Ct Sugar Land, TX 77479 Tel: 281-494-6153 Fax: 281.491.0637 Skype: cs.mullins E-mail: [email protected] http://www.mullinsconsultinginc.com This document is protected under the copyright laws of the United States and other countries as an unpublished work. This document contains information that is proprietary and confidential to Mullins Consulting, Inc., which shall not be disclosed outside or duplicated, used, or disclosed in whole or in part for any purpose other than as approved by Mullins Consulting, Inc. Any use or disclosure in whole or in part of this information without the express written permission of Mullins Consulting, Inc. is prohibited. © 2014 Craig S. Mullins and Mullins Consulting, Inc. (Unpublished). All rights reserved. © 2014 Mullins Consulting, Inc. 2 Agenda Uncover the roadmap to Big Data… the terminology and technology used, use cases, and trends. • Gain a working knowledge and definition of Big Data (beyond the simple three V's definition) • Break down and understand the often confusing terminology within the realm of Big Data (e.g. polyglot persistence) • Examine the four predominant NoSQL database systems used in Big Data implementations (graph, key/value, column, and document) • Learn some of the major differences between Big Data/NoSQL implementations vis-a-vis traditional transaction processing • Discover the primary use cases for Big Data and NoSQL versus relational databases © 2014 Mullins Consulting, Inc.