Linking Genes in Locuslink/Entrez Gene to Medline Citations with Lingpipe∗

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Hyaluronidase PH20 (SPAM1) Rabbit Polyclonal Antibody – TA337855

OriGene Technologies, Inc. 9620 Medical Center Drive, Ste 200 Rockville, MD 20850, US Phone: +1-888-267-4436 [email protected] EU: [email protected] CN: [email protected] Product datasheet for TA337855 Hyaluronidase PH20 (SPAM1) Rabbit Polyclonal Antibody Product data: Product Type: Primary Antibodies Applications: WB Recommended Dilution: WB Reactivity: Human Host: Rabbit Isotype: IgG Clonality: Polyclonal Immunogen: The immunogen for anti-SPAM1 antibody is: synthetic peptide directed towards the C- terminal region of Human SPAM1. Synthetic peptide located within the following region: CYSTLSCKEKADVKDTDAVDVCIADGVCIDAFLKPPMETEEPQIFYNASP Formulation: Liquid. Purified antibody supplied in 1x PBS buffer with 0.09% (w/v) sodium azide and 2% sucrose. Note that this product is shipped as lyophilized powder to China customers. Purification: Affinity Purified Conjugation: Unconjugated Storage: Store at -20°C as received. Stability: Stable for 12 months from date of receipt. Predicted Protein Size: 58 kDa Gene Name: sperm adhesion molecule 1 Database Link: NP_694859 Entrez Gene 6677 Human P38567 This product is to be used for laboratory only. Not for diagnostic or therapeutic use. View online » ©2021 OriGene Technologies, Inc., 9620 Medical Center Drive, Ste 200, Rockville, MD 20850, US 1 / 3 Hyaluronidase PH20 (SPAM1) Rabbit Polyclonal Antibody – TA337855 Background: Hyaluronidase degrades hyaluronic acid, a major structural proteoglycan found in extracellular matrices and basement membranes. Six members of the hyaluronidase family are clustered into two tightly linked groups on chromosome 3p21.3 and 7q31.3. This gene was previously referred to as HYAL1 and HYA1 and has since been assigned the official symbol SPAM1; another family member on chromosome 3p21.3 has been assigned HYAL1. -

Metallothionein Monoclonal Antibody, Clone N11-G

Metallothionein monoclonal antibody, clone N11-G Catalog # : MAB9787 規格 : [ 50 uL ] List All Specification Application Image Product Rabbit monoclonal antibody raised against synthetic peptide of MT1A, Western Blot (Recombinant protein) Description: MT1B, MT1E, MT1F, MT1G, MT1H, MT1IP, MT1L, MT1M, MT2A. Immunogen: A synthetic peptide corresponding to N-terminus of human MT1A, MT1B, MT1E, MT1F, MT1G, MT1H, MT1IP, MT1L, MT1M, MT2A. Host: Rabbit enlarge Reactivity: Human, Mouse Immunoprecipitation Form: Liquid Enzyme-linked Immunoabsorbent Assay Recommend Western Blot (1:1000) Usage: ELISA (1:5000-1:10000) The optimal working dilution should be determined by the end user. Storage Buffer: In 20 mM Tris-HCl, pH 8.0 (10 mg/mL BSA, 0.05% sodium azide) Storage Store at -20°C. Instruction: Note: This product contains sodium azide: a POISONOUS AND HAZARDOUS SUBSTANCE which should be handled by trained staff only. Datasheet: Download Applications Western Blot (Recombinant protein) Western blot analysis of recombinant Metallothionein protein with Metallothionein monoclonal antibody, clone N11-G (Cat # MAB9787). Lane 1: 1 ug. Lane 2: 3 ug. Lane 3: 5 ug. Immunoprecipitation Enzyme-linked Immunoabsorbent Assay ASSP5 MT1A MT1B MT1E MT1F MT1G MT1H MT1M MT1L MT1IP Page 1 of 5 2021/6/2 Gene Information Entrez GeneID: 4489 Protein P04731 (Gene ID : 4489);P07438 (Gene ID : 4490);P04732 (Gene ID : Accession#: 4493);P04733 (Gene ID : 4494);P13640 (Gene ID : 4495);P80294 (Gene ID : 4496);P80295 (Gene ID : 4496);Q8N339 (Gene ID : 4499);Q86YX0 (Gene ID : 4490);Q86YX5 -

CD56+ T-Cells in Relation to Cytomegalovirus in Healthy Subjects and Kidney Transplant Patients

CD56+ T-cells in Relation to Cytomegalovirus in Healthy Subjects and Kidney Transplant Patients Institute of Infection and Global Health Department of Clinical Infection, Microbiology and Immunology Thesis submitted in accordance with the requirements of the University of Liverpool for the degree of Doctor in Philosophy by Mazen Mohammed Almehmadi December 2014 - 1 - Abstract Human T cells expressing CD56 are capable of tumour cell lysis following activation with interleukin-2 but their role in viral immunity has been less well studied. The work described in this thesis aimed to investigate CD56+ T-cells in relation to cytomegalovirus infection in healthy subjects and kidney transplant patients (KTPs). Proportions of CD56+ T cells were found to be highly significantly increased in healthy cytomegalovirus-seropositive (CMV+) compared to cytomegalovirus-seronegative (CMV-) subjects (8.38% ± 0.33 versus 3.29%± 0.33; P < 0.0001). In donor CMV-/recipient CMV- (D-/R-)- KTPs levels of CD56+ T cells were 1.9% ±0.35 versus 5.42% ±1.01 in D+/R- patients and 5.11% ±0.69 in R+ patients (P 0.0247 and < 0.0001 respectively). CD56+ T cells in both healthy CMV+ subjects and KTPs expressed markers of effector memory- RA T-cells (TEMRA) while in healthy CMV- subjects and D-/R- KTPs the phenotype was predominantly that of naïve T-cells. Other surface markers, CD8, CD4, CD58, CD57, CD94 and NKG2C were expressed by a significantly higher proportion of CD56+ T-cells in healthy CMV+ than CMV- subjects. Functional studies showed levels of pro-inflammatory cytokines IFN-γ and TNF-α, as well as granzyme B and CD107a were significantly higher in CD56+ T-cells from CMV+ than CMV- subjects following stimulation with CMV antigens. -

Investigation of Structural Properties of Methylated Human Promoter Regions in Terms of Dna Helical Rise

INVESTIGATION OF STRUCTURAL PROPERTIES OF METHYLATED HUMAN PROMOTER REGIONS IN TERMS OF DNA HELICAL RISE A THESIS SUBMITTED TO THE GRADUATE SCHOOL OF INFORMATICS OF MIDDLE EAST TECHNICAL UNIVERSITY BY BURCU YALDIZ IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE IN BIOINFORMATICS AUGUST 2014 INVESTIGATION OF STRUCTURAL PROPERTIES OF METHYLATED HUMAN PROMOTER REGIONS IN TERMS OF DNA HELICAL RISE submitted by Burcu YALDIZ in partial fulfillment of the requirements for the degree of Master of Science, Bioinformatics Program, Middle East Technical University by, Prof. Dr. Nazife Baykal _____________________ Director, Informatics Institute Assist. Prof. Dr. Yeşim Aydın Son _____________________ Head of Department, Health Informatics, METU Assist. Prof. Dr. Yeşim Aydın Son Supervisor, Health Informatics, METU _____________________ Examining Committee Members: Assoc. Prof. Dr. Tolga Can _____________________ METU, CENG Assist. Prof. Dr. Yeşim Aydın Son _____________________ METU, Health Informatics Assist. Prof. Dr. Aybar Can Acar _____________________ METU, Health Informatics Assist. Prof. Dr. Özlen Konu _____________________ Bilkent University, Molecular Biology and Genetics Assoc. Prof. Dr. Çağdaş D. Son _____________________ METU, Biology Date: 27.08.2014 I hereby declare that all information in this document has been obtained and presented in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all material and results that are not original to this work. Name, Last name : Burcu Yaldız Signature : iii ABSTRACT INVESTIGATION OF STRUCTURAL PROPERTIES OF METHYLATED HUMAN PROMOTER REGIONS IN TERMS OF DNA HELICAL RISE Yaldız, Burcu M.Sc. Bioinformatics Program Advisor: Assist. Prof. Dr. Yeşim Aydın Son August 2014, 60 pages The infamous double helix structure of DNA was assumed to be a rigid, uniformly observed structure throughout the genomic DNA. -

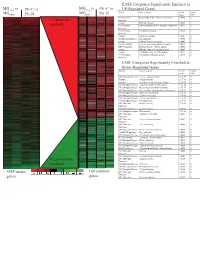

Supplement 1 Microarray Studies

EASE Categories Significantly Enriched in vs MG vs vs MGC4-2 Pt1-C vs C4-2 Pt1-C UP-Regulated Genes MG System Gene Category EASE Global MGRWV Pt1-N RWV Pt1-N Score FDR GO Molecular Extracellular matrix cellular construction 0.0008 0 110 genes up- Function Interpro EGF-like domain 0.0009 0 regulated GO Molecular Oxidoreductase activity\ acting on single dono 0.0015 0 Function GO Molecular Calcium ion binding 0.0018 0 Function Interpro Laminin-G domain 0.0025 0 GO Biological Process Cell Adhesion 0.0045 0 Interpro Collagen Triple helix repeat 0.0047 0 KEGG pathway Complement and coagulation cascades 0.0053 0 KEGG pathway Immune System – Homo sapiens 0.0053 0 Interpro Fibrillar collagen C-terminal domain 0.0062 0 Interpro Calcium-binding EGF-like domain 0.0077 0 GO Molecular Cell adhesion molecule activity 0.0105 0 Function EASE Categories Significantly Enriched in Down-Regulated Genes System Gene Category EASE Global Score FDR GO Biological Process Copper ion homeostasis 2.5E-09 0 Interpro Metallothionein 6.1E-08 0 Interpro Vertebrate metallothionein, Family 1 6.1E-08 0 GO Biological Process Transition metal ion homeostasis 8.5E-08 0 GO Biological Process Heavy metal sensitivity/resistance 1.9E-07 0 GO Biological Process Di-, tri-valent inorganic cation homeostasis 6.3E-07 0 GO Biological Process Metal ion homeostasis 6.3E-07 0 GO Biological Process Cation homeostasis 2.1E-06 0 GO Biological Process Cell ion homeostasis 2.1E-06 0 GO Biological Process Ion homeostasis 2.1E-06 0 GO Molecular Helicase activity 2.3E-06 0 Function GO Biological -

Emerging Roles of Metallothioneins in Beta Cell Pathophysiology: Beyond and Above Metal Homeostasis and Antioxidant Response

biology Review Emerging Roles of Metallothioneins in Beta Cell Pathophysiology: Beyond and above Metal Homeostasis and Antioxidant Response Mohammed Bensellam 1,* , D. Ross Laybutt 2,3 and Jean-Christophe Jonas 1 1 Pôle D’endocrinologie, Diabète et Nutrition, Institut de Recherche Expérimentale et Clinique, Université Catholique de Louvain, B-1200 Brussels, Belgium; [email protected] 2 Garvan Institute of Medical Research, Sydney, NSW 2010, Australia; [email protected] 3 St Vincent’s Clinical School, UNSW Sydney, Sydney, NSW 2010, Australia * Correspondence: [email protected]; Tel.: +32-2764-9586 Simple Summary: Defective insulin secretion by pancreatic beta cells is key for the development of type 2 diabetes but the precise mechanisms involved are poorly understood. Metallothioneins are metal binding proteins whose precise biological roles have not been fully characterized. Available evidence indicated that Metallothioneins are protective cellular effectors involved in heavy metal detoxification, metal ion homeostasis and antioxidant defense. This concept has however been challenged by emerging evidence in different medical research fields revealing novel negative roles of Metallothioneins, including in the context of diabetes. In this review, we gather and analyze the available knowledge regarding the complex roles of Metallothioneins in pancreatic beta cell biology and insulin secretion. We comprehensively analyze the evidence showing positive effects Citation: Bensellam, M.; Laybutt, of Metallothioneins on beta cell function and survival as well as the emerging evidence revealing D.R.; Jonas, J.-C. Emerging Roles of negative effects and discuss the possible underlying mechanisms. We expose in parallel findings Metallothioneins in Beta Cell from other medical research fields and underscore unsettled questions. -

Role of Phytochemicals in Colon Cancer Prevention: a Nutrigenomics Approach

Role of phytochemicals in colon cancer prevention: a nutrigenomics approach Marjan J van Erk Promotor: Prof. Dr. P.J. van Bladeren Hoogleraar in de Toxicokinetiek en Biotransformatie Wageningen Universiteit Co-promotoren: Dr. Ir. J.M.M.J.G. Aarts Universitair Docent, Sectie Toxicologie Wageningen Universiteit Dr. Ir. B. van Ommen Senior Research Fellow Nutritional Systems Biology TNO Voeding, Zeist Promotiecommissie: Prof. Dr. P. Dolara University of Florence, Italy Prof. Dr. J.A.M. Leunissen Wageningen Universiteit Prof. Dr. J.C. Mathers University of Newcastle, United Kingdom Prof. Dr. M. Müller Wageningen Universiteit Dit onderzoek is uitgevoerd binnen de onderzoekschool VLAG Role of phytochemicals in colon cancer prevention: a nutrigenomics approach Marjan Jolanda van Erk Proefschrift ter verkrijging van graad van doctor op gezag van de rector magnificus van Wageningen Universiteit, Prof.Dr.Ir. L. Speelman, in het openbaar te verdedigen op vrijdag 1 oktober 2004 des namiddags te vier uur in de Aula Title Role of phytochemicals in colon cancer prevention: a nutrigenomics approach Author Marjan Jolanda van Erk Thesis Wageningen University, Wageningen, the Netherlands (2004) with abstract, with references, with summary in Dutch ISBN 90-8504-085-X ABSTRACT Role of phytochemicals in colon cancer prevention: a nutrigenomics approach Specific food compounds, especially from fruits and vegetables, may protect against development of colon cancer. In this thesis effects and mechanisms of various phytochemicals in relation to colon cancer prevention were studied through application of large-scale gene expression profiling. Expression measurement of thousands of genes can yield a more complete and in-depth insight into the mode of action of the compounds. -

Identifying Host Genetic Factors Controlling Susceptibility to Blood-Stage Malaria in Mice

Identifying host genetic factors controlling susceptibility to blood-stage malaria in mice Aurélie Laroque Department of Biochemistry McGill University Montreal, Quebec, Canada December 2016 A thesis submitted to McGill University in partial fulfilment of the requirements of the degree of Doctor of Philosophy. © Aurélie Laroque, 2016 ABSTRACT This thesis examines genetic factors controlling host response to blood stage malaria in mice. We first phenotyped 25 inbred strains for resistance/susceptibility to Plasmodium chabaudi chabaudi AS infection. A broad spectrum of responses was observed, which suggests rich genetic diversity among different mouse strains in response to malaria. A F2 intercross was generated between susceptible SM/J and resistant C57BL/6 mice and the progeny was phenotyped for susceptibility to P. chabaudi chabaudi AS infection. A whole genome scan revealed the Char1 locus as a key regulator of parasite density. Using a haplotype mapping approach, we reduced the locus to a 0.4Mb conserved interval that segregates with resistance/susceptibility to infection for the search of positional candidate genes. In addition, I pursued the work on the Char10 locus that was previously identified in [AcB62xCBA/PK]F2 animals (LOD=10.8, 95% Bayesian CI=50.7-75Mb). Pyruvate kinase deficiency was found to protect mice and humans against malaria. However, AcB62 mice are susceptible to P. chabaudi infection despite carrying the protective PklrI90N mutation. We characterized the Char10 locus and showed that it modulates the severity of the pyruvate deficiency phenotype by regulating erythroid responses. We created 4 congenic lines carrying different portions of the Char10 interval and demonstrated that Char10 is found within a maximal 4Mb interval. -

HYAL3 (E-11): Sc-377430

SANTA CRUZ BIOTECHNOLOGY, INC. HYAL3 (E-11): sc-377430 BACKGROUND APPLICATIONS Hyaluronidases (HAases or HYALs) are a family of lysosomal enzymes that are HYAL3 (E-11) is recommended for detection of HYAL3 of human origin by crucial for the spread of bacterial infections and of toxins present in a vari- Western Blotting (starting dilution 1:100, dilution range 1:100-1:1000), ety of venoms. HYALs may also be involved in the progression of cancer. In immunoprecipitation [1-2 µg per 100-500 µg of total protein (1 ml of cell humans, six HYAL proteins have been identified. HYAL proteins use hydroly- lysate)], immunofluorescence (starting dilution 1:50, dilution range 1:50- sis to degrade hyaluronic acid (HA), which is present in body fluids, tissues, 1:500), immunohistochemistry (including paraffin-embedded sections) and the extracellular matrix of vertebrate tissues. HA keeps tissues hydrated, (starting dilution 1:50, dilution range 1:50-1:500) and solid phase ELISA maintains osmotic balance, and promotes cell proliferation, differentiation, (starting dilution 1:30, dilution range 1:30-1:3000). and metastasis. HA is also an important structural component of cartilage Suitable for use as control antibody for HYAL3 siRNA (h): sc-60826, HYAL3 and acts as a lubricant in joints. HYAL3 is a 417-amino acid protein that is shRNA Plasmid (h): sc-60826-SH and HYAL3 shRNA (h) Lentiviral Particles: highly expressed in testis and bone marrow, but has relatively low expression sc-60826-V. in all other tissues. Unlike HYAL 1 and HYAL2, HYAL3 is an unlikely tumor supressor candidate, given the lack of detected mutations in its gene. -

Hyaluronidase PH20 (SPAM1) (NM 003117) Human Mass Spec Standard – PH305378 | Origene

OriGene Technologies, Inc. 9620 Medical Center Drive, Ste 200 Rockville, MD 20850, US Phone: +1-888-267-4436 [email protected] EU: [email protected] CN: [email protected] Product datasheet for PH305378 Hyaluronidase PH20 (SPAM1) (NM_003117) Human Mass Spec Standard Product data: Product Type: Mass Spec Standards Description: SPAM1 MS Standard C13 and N15-labeled recombinant protein (NP_003108) Species: Human Expression Host: HEK293 Expression cDNA Clone RC205378 or AA Sequence: Predicted MW: 58.2 kDa Protein Sequence: >RC205378 representing NM_003117 Red=Cloning site Green=Tags(s) MGVLKFKHIFFRSFVKSSGVSQIVFTFLLIPCCLTLNFRAPPVIPNVPFLWAWNAPSEFCLGKFDEPLDM SLFSFIGSPRINATGQGVTIFYVDRLGYYPYIDSITGVTVNGGIPQKISLQDHLDKAKKDITFYMPVDNL GMAVIDWEEWRPTWARNWKPKDVYKNRSIELVQQQNVQLSLTEATEKAKQEFEKAGKDFLVETIKLGKLL RPNHLWGYYLFPDCYNHHYKKPGYNGSCFNVEIKRNDDLSWLWNESTALYPSIYLNTQQSPVAATLYVRN RVREAIRVSKIPDAKSPLPVFAYTRIVFTDQVLKFLSQDELVYTFGETVALGASGIVIWGTLSIMRSMKS CLLLDNYMETILNPYIINVTLAAKMCSQVLCQEQGVCIRKNWNSSDYLHLNPDNFAIQLEKGGKFTVRGK PTLEDLEQFSEKFYCSCYSTLSCKEKADVKDTDAVDVCIADGVCIDAFLKPPMETEEPQIFYNASPSTLS ATMFIWRLEVWDQGISRIGFF TRTRPLEQKLISEEDLAANDILDYKDDDDKV Tag: C-Myc/DDK Purity: > 80% as determined by SDS-PAGE and Coomassie blue staining Concentration: 50 ug/ml as determined by BCA Labeling Method: Labeled with [U- 13C6, 15N4]-L-Arginine and [U- 13C6, 15N2]-L-Lysine Buffer: 100 mM glycine, 25 mM Tris-HCl, pH 7.3. Store at -80°C. Avoid repeated freeze-thaw cycles. Stable for 3 months from receipt of products under proper storage and handling conditions. RefSeq: -

Molecular Signatures of Maturing Dendritic Cells

Jin et al. Journal of Translational Medicine 2010, 8:4 http://www.translational-medicine.com/content/8/1/4 RESEARCH Open Access Molecular signatures of maturing dendritic cells: implications for testing the quality of dendritic cell therapies Ping Jin1*†, Tae Hee Han1,2†, Jiaqiang Ren1, Stefanie Saunders1, Ena Wang1, Francesco M Marincola1, David F Stroncek1 Abstract Background: Dendritic cells (DCs) are often produced by granulocyte-macrophage colony-stimulating factor (GM- CSF) and interleukin-4 (IL-4) stimulation of monocytes. To improve the effectiveness of DC adoptive immune cancer therapy, many different agents have been used to mature DCs. We analyzed the kinetics of DC maturation by lipopolysaccharide (LPS) and interferon-g (IFN-g) induction in order to characterize the usefulness of mature DCs (mDCs) for immune therapy and to identify biomarkers for assessing the quality of mDCs. Methods: Peripheral blood mononuclear cells were collected from 6 healthy subjects by apheresis, monocytes were isolated by elutriation, and immature DCs (iDCs) were produced by 3 days of culture with GM-CSF and IL-4. The iDCs were sampled after 4, 8 and 24 hours in culture with LPS and IFN-g and were then assessed by flow cytometry, ELISA, and global gene and microRNA (miRNA) expression analysis. Results: After 24 hours of LPS and IFN-g stimulation, DC surface expression of CD80, CD83, CD86, and HLA Class II antigens were up-regulated. Th1 attractant genes such as CXCL9, CXCL10, CXCL11 and CCL5 were up-regulated during maturation but not Treg attractants such as CCL22 and CXCL12. The expression of classical mDC biomarker genes CD83, CCR7, CCL5, CCL8, SOD2, MT2A, OASL, GBP1 and HES4 were up-regulated throughout maturation while MTIB, MTIE, MTIG, MTIH, GADD45A and LAMP3 were only up-regulated late in maturation. -

HYAL1LUCA-1, a Candidate Tumor Suppressor Gene on Chromosome 3P21.3, Is Inactivated in Head and Neck Squamous Cell Carcinomas by Aberrant Splicing of Pre-Mrna

Oncogene (2000) 19, 870 ± 878 ã 2000 Macmillan Publishers Ltd All rights reserved 0950 ± 9232/00 $15.00 www.nature.com/onc HYAL1LUCA-1, a candidate tumor suppressor gene on chromosome 3p21.3, is inactivated in head and neck squamous cell carcinomas by aberrant splicing of pre-mRNA Gregory I Frost1,3, Gayatry Mohapatra2, Tim M Wong1, Antonei Benjamin Cso ka1, Joe W Gray2 and Robert Stern*,1 1Department of Pathology, School of Medicine, University of California, San Francisco, California, CA 94143, USA; 2Cancer Genetics Program, UCSF Cancer Center, University of California, San Francisco, California, CA 94115, USA The hyaluronidase ®rst isolated from human plasma, genes in the process of carcinogenesis (Sager, 1997; Hyal-1, is expressed in many somatic tissues. The Hyal- Baylin et al., 1998). Nevertheless, functionally 1 gene, HYAL1, also known as LUCA-1, maps to inactivating point mutations are generally viewed as chromosome 3p21.3 within a candidate tumor suppressor the critical `smoking gun' when de®ning a novel gene locus de®ned by homozygous deletions and by TSG. functional tumor suppressor activity. Hemizygosity in We recently mapped the HYAL1 gene to human this region occurs in many malignancies, including chromosome 3p21.3 (Cso ka et al., 1998), con®rming its squamous cell carcinomas of the head and neck. We identity with LUCA-1, a candidate tumor suppressor have investigated whether cell lines derived from such gene frequently deleted in small cell lung carcinomas malignancies expressed Hyal-1 activity, using normal (SCLC) (Wei et al., 1996). The HYAL1 gene resides human keratinocytes as controls. Hyal-1 enzyme activity within a commonly deleted region of 3p21.3 where a and protein were absent or markedly reduced in six of potentially informative 30 kb homozygous deletion has seven carcinoma cell lines examined.