Tactile Precision, Natural Movement and Haptic Feedback in 3D Virtual Spaces

Recommended publications

Studio Showcase

Contacts: Holly Rockwood, EA Corporate Communications, 650-628-7323, [email protected]; Tricia Gugler, EA Investor Relations, 650-628-7327, [email protected]

EA SPOTLIGHTS SLATE OF NEW TITLES AND INITIATIVES AT ANNUAL SUMMER SHOWCASE EVENT

REDWOOD CITY, Calif., August 14, 2008 -- Following an award-winning presence at E3 in July, Electronic Arts Inc. (NASDAQ: ERTS) today unveiled new games that will entertain the core and reach for more, scheduled to launch this holiday and in 2009. The new games presented on stage at a press conference during EA’s annual Studio Showcase include The Godfather® II, Need for Speed™ Undercover, SCRABBLE on the iPhone™ featuring WiFi play capability, and a brand new property, Henry Hatsworth in the Puzzling Adventure. EA Partners also announced publishing agreements with two of the world’s most creative independent studios, Epic Games and Grasshopper Manufacture.

“Today’s event is a key inflection point that shows the industry the breadth and depth of EA’s portfolio,” said Jeff Karp, Senior Vice President and General Manager of North American Publishing for Electronic Arts. “We continue to raise the bar with each opportunity to show new titles throughout the summer and fall line up of global industry events. It’s been exciting to see consumer and critical reaction to our expansive slate, and we look forward to receiving feedback with the debut of today’s new titles.”

The new titles and relationships unveiled on stage at today’s Studio Showcase press conference include:

• Need for Speed Undercover – Need for Speed Undercover takes the franchise back to its roots and re-introduces break-neck cop chases, the world’s hottest cars and spectacular highway battles. -

Game Console Rating

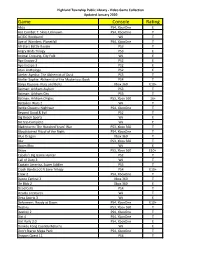

Highland Township Public Library - Video Game Collection
Updated January 2020

| Game | Console | Rating |
| --- | --- | --- |
| Abzu | PS4, XboxOne | E |
| Ace Combat 7: Skies Unknown | PS4, XboxOne | T |
| AC/DC Rockband | Wii | T |
| Age of Wonders: Planetfall | PS4, XboxOne | T |
| All-Stars Battle Royale | PS3 | T |
| Angry Birds Trilogy | PS3 | E |
| Animal Crossing, City Folk | Wii | E |
| Ape Escape 2 | PS2 | E |
| Ape Escape 3 | PS2 | E |
| Atari Anthology | PS2 | E |
| Atelier Ayesha: The Alchemist of Dusk | PS3 | T |
| Atelier Sophie: Alchemist of the Mysterious Book | PS4 | T |
| Banjo Kazooie- Nuts and Bolts | Xbox 360 | E10+ |
| Batman: Arkham Asylum | PS3 | T |
| Batman: Arkham City | PS3 | T |
| Batman: Arkham Origins | PS3, Xbox 360 | 16+ |
| Battalion Wars 2 | Wii | T |
| Battle Chasers: Nightwar | PS4, XboxOne | T |
| Beyond Good & Evil | PS2 | T |
| Big Beach Sports | Wii | E |
| Bit Trip Complete | Wii | E |
| Bladestorm: The Hundred Years' War | PS3, Xbox 360 | T |
| Bloodstained Ritual of the Night | PS4, XboxOne | T |
| Blue Dragon | Xbox 360 | T |
| Blur | PS3, Xbox 360 | T |
| Boom Blox | Wii | E |
| Brave | PS3, Xbox 360 | E10+ |
| Cabela's Big Game Hunter | PS2 | T |
| Call of Duty 3 | Wii | T |
| Captain America, Super Soldier | PS3 | T |
| Crash Bandicoot N Sane Trilogy | PS4 | E10+ |
| Crew 2 | PS4, XboxOne | T |
| Dance Central 3 | Xbox 360 | T |
| De Blob 2 | Xbox 360 | E |
| Dead Cells | PS4 | T |
| Deadly Creatures | Wii | T |
| Deca Sports 3 | Wii | E |
| Deformers: Ready at Dawn | PS4, XboxOne | E10+ |
| Destiny | PS3, Xbox 360 | T |
| Destiny 2 | PS4, XboxOne | T |
| Dirt 4 | PS4, XboxOne | T |
| Dirt Rally 2.0 | PS4, XboxOne | E |
| Donkey Kong Country Returns | Wii | E |
| Don't Starve Mega Pack | PS4, XboxOne | T |
| Dragon Quest 11 | PS4 | T |
| Dragon Quest Builders | PS4 | E10+ |

Dragon -

Createworld 2011 Proceedings [PDF]

CreateWorld 2011 Proceedings
CreateWorld11 Conference, 28 November - 30 November 2011, Griffith University, Brisbane, Queensland, Australia

Editors: Michael Docherty (Queensland University of Technology), Matt Hitchcock (Griffith University, Qld Conservatorium)
Web Content Editor: Andrew Jeffrey
http://www.auc.edu.au/Create+World+2011
ISBN: 978-0-947209-38-4

© Copyright 2011, Apple University Consortium Australia, and individual authors. Apart from any use as permitted under the Copyright Act 1968, no part may be reproduced by any process without the written permission of the copyright holders.

Contents

• Robert Burrell, Queensland Conservatorium, Griffith University: Zoomusicology, Live Performance and DAW. [email protected]
• Dean Chircop, Griffith Film School (Film and Screen Media Productions): The Digital Cinematography Revolution & 35mm Sensors: Arriflex Alexa, Sony PMW-F3, Panasonic AF100, Sony HDCAM HDW-750 and the Canon 5D DSLR. [email protected]
• Kim Cunio, Queensland Conservatorium Griffith University: ISHQ: A collaborative film and music project – art music and image as an installation, joint art as boundary crossing. [email protected]; Louise Harvey, -

Createworld 2011

CreateWorld 2011 Pre-publication Proceedings
CreateWorld11 Conference, 28 November - 30 November 2011, Griffith University, Brisbane, Queensland, Australia

Editors: Michael Docherty (Queensland University of Technology), Matt Hitchcock (Griffith University, Qld Conservatorium)
Web Content Editor: Andrew Jeffrey
http://www.auc.edu.au/Create+World+2011

© Copyright 2011, Apple University Consortium Australia, and individual authors. Apart from any use as permitted under the Copyright Act 1968, no part may be reproduced by any process without the written permission of the copyright holders.

Contents: Submitted Papers

• Robert Burrell, Queensland Conservatorium, Griffith University: Zoomusicology, Live Performance and DAW. [email protected]. Presentation: 12:15 pm to 1:00 pm, Tuesday 29th. Session: Technology in the Performing Arts, S07 1.23 (Graduate Centre).
• Dean Chircop, Griffith Film School (Film and Screen Media Productions): The Digital Cinematography Revolution & 35mm Sensors: Arriflex Alexa, Sony PMW-F3, Panasonic AF100, Sony HDCAM HDW-750 and the Canon 5D DSLR. [email protected]. Presentation: 12:35 pm to 1:20 pm, Wednesday 30th. Session: Film and Animation, Griffith Film School Cinema.
• Kim Cunio, Queensland Conservatorium Griffith University: ISHQ: A collaborative film and music project – art music and image as an installation, joint art as boundary crossing. [email protected]; Louise Harvey, [email protected]. Presentation: 12:35 pm to 1:20 pm, Wednesday 30th. Session: Film and Animation, Griffith Film School Cinema. -

Electronic Games in the Newspaper Field (Elektroniska spel i tidningsfältet)

Department for Studies of Social Change and Culture (Institutionen för studier av samhällsutveckling och kultur, ISAK), LiU Norrköping. Electronic games in the newspaper field: a study of reviewers' attitudes toward computer and video games. Daniel Ahlin, Martin Padu, Andreas Petersson. Master's thesis from the programme Kultur Samhälle Mediegestaltning (Culture, Society, Media Production), Linköpings universitet, LiU Norrköping, 601 74 NORRKÖPING. ISRN: LIU-ISAK/KSM-A -- 09/17 -- SE. Supervisor: Jonas Ramsten.

Keywords: video games, electronic games, reviews, media, games, statistics, tables, Level, Superplay, Aftonbladet, Expressen, Dagens Nyheter, Svenska Dagbladet, Sweden, EU, PEGI, review scores, Bourdieu, metaphysics, text analysis, games magazine, evening newspaper, daily newspaper, news, computer games, reviewers, the gaming world, Nintendo, Microsoft, Sega, Sony, Atari, Ubisoft, game manufacturers, game developers, children's games, youth games, adult games, world war, fighting, GTA.

Abstract in English. Electronic games in the field of newspapers and magazines – a study of the critics’ way of looking at and writing about computer and video games. This paper considers the roles of critics, newspapers and magazines in the process of describing computer games and video games as either technical objects or products intended for entertainment. The making and “using” of computer games and videogames originates in small groups of people possessing a lot of knowledge in computers, during a time when these kinds of devices were very expensive. But now, the gaming culture has grown and almost anyone in our society can own and play a video game. For that reason, one could ask the questions “are the games and the people who play them still parts of a ‘technical culture’?” and “do we need some kind of prior knowledge to fully understand the videogame critics?” The critics represent “the official idea” of what a videogame is, how it works and if it is worth playing. -

[Full PDF] How to Manual on Skate 3

How To Manual On Skate 3 Ps3

Get the latest Skate 2 cheats, codes, unlockables, hints, Easter eggs, glitches, tips, tricks, hacks, downloads, trophies, guides, FAQs, walkthroughs, and more for PlayStation 3 (PS3). CheatCodes has all you need to win every game you play! Use the above links or scroll down to see all the PlayStation 3 cheats we have available for Skate 2.

Download Skate 3 Ps3. Posted on 10 April, 2021 by games. Skate 3 is the third installment in the Skate series of video games, developed by EA Black Box and published by Electronic Arts. Skate 2 is the peak of the series: it's the first in the series to let you get off your skateboard and walk around the environment if necessary, and it has been updated to support custom soundtracks (PS3 version; the Xbox 360 always could) by going to the PlayStation menu and selecting music from the OS.

Mint Disc Playstation 3 Ps3 Skate. Skate 1 I First Game Free Postage. AU $17.24. Mint Disc Xbox 360 Skate 3 Works on Xbox One Free Postage Inc Manual. AU $30.50.

Unlocks: New band t-shirt available. Film: Sight Unseen - Stay in a No-Skate Zone - Get 2000 points - Nose Manual to Fliptrick to Nose Manual. I did this at the lakeside area no-skate zone. Film: Ghetto Blasters - Do 3 grinds - Do 15 ft powerslide - 720 total deg spin. I did this at the lakeside area, doing the powerslide up and back down the loopy thing. -

1 Tricia Gugler

Tricia Gugler: Welcome to our first quarter fiscal 2009 earnings call. Today on the call we have John Riccitiello, our Chief Executive Officer; Eric Brown, our Chief Financial Officer; John Pleasants, our Chief Operating Officer; and Peter Moore, our President of EA SPORTS. Before we begin, I’d like to remind you that you may find copies of our SEC filings, our earnings release and a replay of this webcast on our web site at investor.ea.com. Shortly after the call we will post a copy of our prepared remarks on our website.

Throughout this call we will present both GAAP and non-GAAP financial measures. Non-GAAP measures exclude the following items:

• amortization of intangibles,
• stock-based compensation,
• acquired in-process technology,
• restructuring charges,
• certain litigation expenses,
• losses on strategic investments,
• the impact of the change in deferred net revenue related to certain packaged goods and digital content.

In addition, starting with its fiscal 2009 results, the Company began to apply a fixed, long-term projected tax rate of 28% to determine its non-GAAP results. Prior to fiscal 2009, the Company’s non-GAAP financial results were determined by excluding the specific income tax effects associated with the non-GAAP items and the impact of certain one-time income tax adjustments. Our earnings release provides a reconciliation of our GAAP to non-GAAP measures. These non-GAAP measures are not intended to be considered in isolation from – a substitute for – or superior to – our GAAP results – and we encourage investors to consider all measures before making an investment decision. -

2014-07 EFF Gaming Exemption Comment

Before the U.S. COPYRIGHT OFFICE, LIBRARY OF CONGRESS

In the Matter of Exemption to Prohibition on Circumvention of Copyright Protection Systems for Access Control Technologies, Docket No. 2014-07

Comments of the Electronic Frontier Foundation

1. Commenter Information

Electronic Frontier Foundation Kendra Albert Mitch Stoltz (203) 424-0382 Corynne McSherry [email protected] Kit Walsh 815 Eddy St San Francisco, CA 94109 (415) 436-9333 [email protected]

The Electronic Frontier Foundation (EFF) is a member-supported, nonprofit public interest organization devoted to maintaining the traditional balance that copyright law strikes between the interests of copyright owners and the interests of the public. Founded in 1990, EFF represents over 25,000 dues-paying members, including consumers, hobbyists, artists, writers, computer programmers, entrepreneurs, students, teachers, and researchers, who are united in their reliance on a balanced copyright system that ensures adequate incentives for creative work while facilitating innovation and broad access to information in the digital age. In filing these comments, EFF represents the interests of gaming communities, archivists, and researchers who seek to preserve the functionality of video games abandoned by their manufacturer.

2. Proposed Class Addressed

Proposed Class 23: Abandoned Software—video games requiring server communication. Literary works in the form of computer programs, where circumvention is undertaken for the purpose of restoring access to single-player or multiplayer video gaming on consoles, personal computers or personal handheld gaming devices when the developer and its agents have ceased to support such gaming.

We ask the Librarian to grant an exemption to the ban on circumventing access controls applied to copyrighted works, 17 U.S.C. -

EA Unveils Eclectic Soundtracks for Skate It and Skate 2

EA Unveils Eclectic Soundtracks for Skate It and Skate 2

Track List Boasts Songs From Some of the Biggest and Most Influential Artists of All-Time Including Black Sabbath, Public Enemy, The Clash and Wu-Tang Clan

REDWOOD CITY, Calif., Oct 23, 2008 (BUSINESS WIRE) -- Black Box, an Electronic Arts Inc. (NASDAQ:ERTS) studio, today revealed the soundtracks that will power the award-winning Skate It and Skate 2. These two skateboarding games bring the sport's incredible tricks, epic moves and high energy to gamers with all of the fun -- and none of the bruises! The 52 featured songs are from some of music's most influential and celebrated artists including Black Sabbath, Public Enemy, Judas Priest and Wu-Tang Clan.

"The soundtracks for Skate It and Skate 2 builds upon last year's critically acclaimed Skate soundtrack," said Chris Parry, Associate Producer. "The songs reflect the culture of skateboarding, with some tracks picked by the pros themselves, and others coming straight out of iconic skate videos. Skateboarding today is an eclectic mix of people and influences and this is true of where we took this year's soundtracks. Look for some old school soul and funk, a few rock anthems, some good beats and a lot of classic punk!"

"Skateboarding culture is like no other culture in the world and with that comes a unique sound of its own," said Steve Schnur, worldwide executive of music and marketing. "Our goal was to create two soundtracks that bring together classic acts and explosive new bands while fearlessly crossing all genres. -

Microsoft Xbox 360

Microsoft Xbox 360
Last Updated on October 1, 2021

| Title | Publisher | Qty | Box | Man | Comments |
| --- | --- | --- | --- | --- | --- |
| 007 Legends | Activision | | | | |
| 007: Quantum of Solace | Activision | | | | |
| 007: Quantum of Solace: Collector's Edition | Activision | | | | |
| 007: Quantum of Solace: Best Buy T-shirt | Activision | | | | |
| 2006 FIFA World Cup | Electronic Arts | | | | |
| 2010 FIFA World Cup South Africa | Electronic Arts | | | | |
| 2014 FIFA World Cup Brazil | Electronic Arts | | | | |
| 2K Essentials Collection | 2K | | | | |
| 2K Rogues and Outlaws Collection | Take-Two Interactive Software | | | | |
| 2K12 Sports Combo Pack | 2K Sports | | | | |
| 2K13 Sports Combo Pack | 2K Sports | | | | |
| 50 Cent: Blood on the Sand | THQ | | | | |
| AC/DC Live: Rock Band Track Pack | Electronic Arts | | | | |
| Ace Combat 6: Fires of Liberation | Namco Bandai Games America | | | | |
| Ace Combat 6: Fires of Liberation: Limited Edition Ace Edge Flightstick Bundle | Namco Bandai Games America | | | | |
| Ace Combat 6: Fires of Liberation: Platinum Hits | Namco Bandai Games America | | | | |
| Ace Combat: Assault Horizon | Namco | | | | |
| Ace Combat: Assault Horizon: Walmart Exclusive | Namco | | | | |
| Adidas miCoach | 505 Games | | | | |
| Adrenalin Misfits | Konami | | | | |
| Adventure Time: Explore the Dungeon Because I DON'T KNOW | D3Publisher | | | | |
| Adventure Time: The Secret of the Nameless Kingdom | Little Orbit | | | | |
| Adventures of Tintin, The: The Game | Ubisoft | | | | |
| Afro Samurai | Namco Bandai Games | | | | |
| Air Conflicts: Secret Wars | Kalypso Media USA | | | | |
| Air Conflicts: Vietnam | bitComposer Games | | | | |
| Akai Katana | Rising Star Games | | | | |
| Alan Wake: Limited Collector's Edition | Microsoft | | | | |
| Alan Wake | Microsoft | | | | |
| Alice: Madness Returns | Electronic Arts | | | | |
| Alien: Isolation: Nostromo Edition | Sega | | | | |
| Aliens vs Predator: Hunter Edition | Sega | | | | |
| Aliens vs Predator | Sega | | | | |
| Aliens: Colonial Marines | Sega | | | | |

-

The BG News April 11, 2008

Bowling Green State University, ScholarWorks@BGSU. BG News (Student Newspaper), University Publications. 4-11-2008. The BG News April 11, 2008. Bowling Green State University.

Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news

Recommended Citation: Bowling Green State University, "The BG News April 11, 2008" (2008). BG News (Student Newspaper). 7915. https://scholarworks.bgsu.edu/bg-news/7915

This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU.

THE BG NEWS. ESTABLISHED 1920. A daily independent student press serving the campus and surrounding community. Friday, April 11, 2008. Volume 101, Issue 157. WWW.BGNEWS.COM

T-shirts that deserve a double-take: A T-shirt project works to bring attention to violence committed against Wood County women.

A crisis off [...] Among other college transitions, a student's [...] religion can also be called into question. By Kate Snyder, Reporter. When coming to college, the obvious changes include dorm life, new classes, a new town and different food. [...] than just somebody telling them it's good for them. And everybody practices faith differently. Some flourish by joining organizations and get-

WORKING WITH THE BUGS: Natural Resources Specialist Cinda Stutzman tends to the hummingbird and butterfly habitat located in Wintergarden Park. A wooded area [...]

Losing a loyal best friend: Columnist Ally Blankartz chronicles the last few days of her dog, Millie, who went -

Ubisoft® Reports Third-Quarter 2008-09 Sales

Ubisoft® reports third-quarter 2008-09 sales

Third-quarter sales up 13% to €508 million. Update on the games release schedule. Full-year 2008-09 targets adjusted. Initial targets for 2009-10.

Paris, January 22, 2009 – Today, Ubisoft reported its sales for the third fiscal quarter ended December 31, 2008.

Sales

Sales for the third quarter of 2008-09 came to €508 million, up 12.9%, or 17.0% at constant exchange rates, compared with the €450 million recorded for the same period of 2007-08. For the first nine months of fiscal 2008-09 sales totaled €852 million versus €711 million in the corresponding prior-year period, representing an increase of 19.8%, or 24.8% at constant exchange rates.

Third-quarter sales came in slightly higher than the guidance of approximately €500 million issued when Ubisoft released its sales figures for the second quarter of 2008-09. This performance was primarily attributable to:

• The diversity and quality of Ubisoft’s games line-up for the holiday period, with a targeted offering for each type of consumer.
• A solid showing from franchise titles launched during the quarter, particularly Far Cry® 2 and Rayman Raving Rabbids® TV Party, which generated sell-in sales of 2.9 million and 1.5 million units respectively and offset the impact of a slower take-off for Prince of Persia® (2.2 million units).
• The creation of a new multi-million units selling franchise – Shaun White Snowboarding – which was the quarter’s number two sports game in the United States and recorded 2.3 million units sell-in worldwide.