Createworld 2011 Proceedings [PDF]

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Studio Showcase

Contacts: Holly Rockwood Tricia Gugler EA Corporate Communications EA Investor Relations 650-628-7323 650-628-7327 [email protected] [email protected] EA SPOTLIGHTS SLATE OF NEW TITLES AND INITIATIVES AT ANNUAL SUMMER SHOWCASE EVENT REDWOOD CITY, Calif., August 14, 2008 -- Following an award-winning presence at E3 in July, Electronic Arts Inc. (NASDAQ: ERTS) today unveiled new games that will entertain the core and reach for more, scheduled to launch this holiday and in 2009. The new games presented on stage at a press conference during EA’s annual Studio Showcase include The Godfather® II, Need for Speed™ Undercover, SCRABBLE on the iPhone™ featuring WiFi play capability, and a brand new property, Henry Hatsworth in the Puzzling Adventure. EA Partners also announced publishing agreements with two of the world’s most creative independent studios, Epic Games and Grasshopper Manufacture. “Today’s event is a key inflection point that shows the industry the breadth and depth of EA’s portfolio,” said Jeff Karp, Senior Vice President and General Manager of North American Publishing for Electronic Arts. “We continue to raise the bar with each opportunity to show new titles throughout the summer and fall line up of global industry events. It’s been exciting to see consumer and critical reaction to our expansive slate, and we look forward to receiving feedback with the debut of today’s new titles.” The new titles and relationships unveiled on stage at today’s Studio Showcase press conference include: • Need for Speed Undercover – Need for Speed Undercover takes the franchise back to its roots and re-introduces break-neck cop chases, the world’s hottest cars and spectacular highway battles.

A Culture of Recording: Christopher Raeburn and the Decca Record Company

A Culture of Recording: Christopher Raeburn and the Decca Record Company Sally Elizabeth Drew A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy The University of Sheffield Faculty of Arts and Humanities Department of Music This work was supported by the Arts & Humanities Research Council September 2018 Abstract This thesis examines the working culture of the Decca Record Company, and how group interaction and individual agency have made an impact on the production of music recordings. Founded in London in 1929, Decca built a global reputation as a pioneer of sound recording with access to the world’s leading musicians. With its roots in manufacturing and experimental wartime engineering, the company developed a peerless classical music catalogue that showcased technological innovation alongside artistic accomplishment. This investigation focuses specifically on the contribution of the recording producer at Decca in creating this legacy, as can be illustrated by the career of Christopher Raeburn, the company’s most prolific producer and specialist in opera and vocal repertoire. It is the first study to examine Raeburn’s archive, and is supported with unpublished memoirs, private papers and recorded interviews with colleagues, collaborators and artists. Using these sources, the thesis considers the history and functions of the staff producer within Decca’s wider operational structure in parallel with the personal aspirations of the individual in exerting control, choice and authority on the process and product of recording. Having been recruited to Decca by John Culshaw in 1957, Raeburn’s fifty-year career spanned seminal moments of the company’s artistic and commercial lifecycle: from assisting in exploiting the dramatic potential of stereo technology in Culshaw’s Ring during the 1960s to his serving as audio producer for the 1990 The Three Tenors Concert international phenomenon.

Antarctica: Music, Sounds and Cultural Connections

Antarctica Music, sounds and cultural connections Edited by Bernadette Hince, Rupert Summerson and Arnan Wiesel Published by ANU Press The Australian National University Acton ACT 2601, Australia Email: [email protected] This title is also available online at http://press.anu.edu.au National Library of Australia Cataloguing-in-Publication entry Title: Antarctica - music, sounds and cultural connections / edited by Bernadette Hince, Rupert Summerson, Arnan Wiesel. ISBN: 9781925022285 (paperback) 9781925022292 (ebook) Subjects: Australasian Antarctic Expedition (1911-1914)--Centennial celebrations, etc. Music festivals--Australian Capital Territory--Canberra. Antarctica--Discovery and exploration--Australian--Congresses. Antarctica--Songs and music--Congresses. Other Creators/Contributors: Hince, B. (Bernadette), editor. Summerson, Rupert, editor. Wiesel, Arnan, editor. Australian National University School of Music. Antarctica - music, sounds and cultural connections (2011 : Australian National University). Dewey Number: 780.789471 All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying or otherwise, without the prior permission of the publisher. Cover design and layout by ANU Press Cover photo: Moonrise over Fram Bank, Antarctica. Photographer: Steve Nicol © Printed by Griffin Press This edition © 2015 ANU Press

Contents

- Preface: Music and Antarctica (Arnan Wiesel), p. ix
- Introduction: Listening to Antarctica (Tom Griffiths), p. 1
- Mawson’s musings and Morse code: Antarctic silence at the end of the ‘Heroic Era’, and how it was lost (Mark Pharaoh), p. 15
- Thulia: a Tale of the Antarctic (1843): The earliest Antarctic poem and its musical setting (Elizabeth Truswell), p. 23
- Nankyoku no kyoku: The cultural life of the Shirase Antarctic Expedition 1910–12

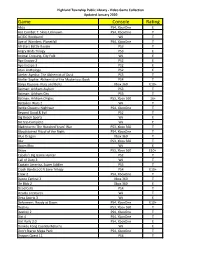

Game Console Rating

Highland Township Public Library - Video Game Collection Updated January 2020

| Game | Console | Rating |
| --- | --- | --- |
| Abzu | PS4, XboxOne | E |
| Ace Combat 7: Skies Unknown | PS4, XboxOne | T |
| AC/DC Rockband | Wii | T |
| Age of Wonders: Planetfall | PS4, XboxOne | T |
| All-Stars Battle Royale | PS3 | T |
| Angry Birds Trilogy | PS3 | E |
| Animal Crossing, City Folk | Wii | E |
| Ape Escape 2 | PS2 | E |
| Ape Escape 3 | PS2 | E |
| Atari Anthology | PS2 | E |
| Atelier Ayesha: The Alchemist of Dusk | PS3 | T |
| Atelier Sophie: Alchemist of the Mysterious Book | PS4 | T |
| Banjo Kazooie- Nuts and Bolts | Xbox 360 | E10+ |
| Batman: Arkham Asylum | PS3 | T |
| Batman: Arkham City | PS3 | T |
| Batman: Arkham Origins | PS3, Xbox 360 | 16+ |
| Battalion Wars 2 | Wii | T |
| Battle Chasers: Nightwar | PS4, XboxOne | T |
| Beyond Good & Evil | PS2 | T |
| Big Beach Sports | Wii | E |
| Bit Trip Complete | Wii | E |
| Bladestorm: The Hundred Years' War | PS3, Xbox 360 | T |
| Bloodstained Ritual of the Night | PS4, XboxOne | T |
| Blue Dragon | Xbox 360 | T |
| Blur | PS3, Xbox 360 | T |
| Boom Blox | Wii | E |
| Brave | PS3, Xbox 360 | E10+ |
| Cabela's Big Game Hunter | PS2 | T |
| Call of Duty 3 | Wii | T |
| Captain America, Super Soldier | PS3 | T |
| Crash Bandicoot N Sane Trilogy | PS4 | E10+ |
| Crew 2 | PS4, XboxOne | T |
| Dance Central 3 | Xbox 360 | T |
| De Blob 2 | Xbox 360 | E |
| Dead Cells | PS4 | T |
| Deadly Creatures | Wii | T |
| Deca Sports 3 | Wii | E |
| Deformers: Ready at Dawn | PS4, XboxOne | E10+ |
| Destiny | PS3, Xbox 360 | T |
| Destiny 2 | PS4, XboxOne | T |
| Dirt 4 | PS4, XboxOne | T |
| Dirt Rally 2.0 | PS4, XboxOne | E |
| Donkey Kong Country Returns | Wii | E |
| Don't Starve Mega Pack | PS4, XboxOne | T |
| Dragon Quest 11 | PS4 | T |
| Dragon Quest Builders | PS4 | E10+ |
| Dragon | | |

Reel-To-Real: Intimate Audio Epistolarity During the Vietnam War Dissertation Presented in Partial Fulfillment of the Requireme

Reel-to-Real: Intimate Audio Epistolarity During the Vietnam War Dissertation Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Matthew Alan Campbell, B.A. Graduate Program in Music The Ohio State University 2019 Dissertation Committee Ryan T. Skinner, Advisor Danielle Fosler-Lussier Barry Shank Copyrighted by Matthew Alan Campbell 2019 Abstract For members of the United States Armed Forces, communicating with one’s loved ones has taken many forms, employing every available medium from the telegraph to Twitter. My project examines one particular mode of exchange—“audio letters”—during one of the US military’s most trying and traumatic periods, the Vietnam War. By making possible the transmission of the embodied voice, experiential soundscapes, and personalized popular culture to zones generally restricted to purely written or typed correspondence, these recordings enabled forms of romantic, platonic, and familial intimacy beyond that of the written word. More specifically, I will examine the impact of war and its sustained separations on the creative and improvisational use of prosthetic culture, technologies that allow human beings to extend and manipulate aspects of their person beyond their own bodies. Reel-to-reel was part of a constellation of amateur recording technologies, including Super 8mm film, Polaroid photography, and the Kodak slide carousel, which, for the first time, allowed average Americans the ability to capture, reify, and share their life experiences in multiple modalities, resulting in the construction of a set of media-inflected subjectivities (at home) and intimate intersubjectivities developed across spatiotemporal divides.

Econ Board Wants More Info

Lake City Reporter | LAKECITYREPORTER.COM | 1A | WEEKEND EDITION, FRIDAY & SATURDAY, OCTOBER 12 & 13, 2012 | YOUR COMMUNITY NEWSPAPER SINCE 1874 | 75¢

EVENTS CENTER PROPOSAL: Econ board wants more info. Possible Ellisville location prompts questions. By TONY BRITT [email protected] The Columbia County Economic Development Department board of directors is interested in the county’s proposed events center, but wants more

‘A lot of pink’ (photo: JASON MATTHEW WALKER/Lake City Reporter) Lake City resident Marcelle Bedenbaugh is in the market for a pink flamingo after attending the Standing up to Breast Cancer luncheon Thursday. ‘I’ve had breast cancer two times. I enjoyed the chemo the first time I said what the heck, I’ll do it again,’ Bedenbaugh joked.

Oct. 12: Classic car parade. On Friday, Oct. 12 from 6 to 9 p.m. American Hometown Veteran Assist Inc. will host a cruise in and classic car parade at Hardees on U.S. 90. All of the proceeds benefit local veterans.

Oct. 13: Monument rededication. Please join the Sons of Confederate Veterans and the United Daughters of the Confederacy on Saturday, Oct. 13 at 11 a.m. for the Rededication of the Confederate Monument, which was originally dedicated 100 years ago.

Zumba charity: Zumba to benefit local breast cancer awareness Oct. 13 from 5:30 to 7 p.m. at the Teen Town Recreation Building, 533 NW Desoto St. The Zumbathon Charity Event will benefit the Suwannee River Breast Cancer Awareness Assn.

Createworld 2011

CreateWorld 2011 Pre-publication Proceedings CreateWorld11 Conference 28 November - 30 November 2011 Griffith University, Brisbane Queensland Australia Editors Michael Docherty :: Queensland University of Technology Matt Hitchcock :: Griffith University, Qld Conservatorium Web Content Editor Andrew Jeffrey http://www.auc.edu.au/Create+World+2011 © Copyright 2011, Apple University Consortium Australia, and individual authors. Apart from any use as permitted under the Copyright Act 1968, no part may be reproduced by any process without the written permission of the copyright holders.

Contents: Submitted Papers

- Robert Burrell, Queensland Conservatorium, Griffith University. Zoomusicology, Live Performance and DAW (p. 1). [email protected] Presentation Date 12:15 pm to 1:00 pm Tuesday 29th. Session: Technology in the Performing Arts, S07 1.23 (Graduate Centre).
- Dean Chircop, Griffith Film School (Film and Screen Media Productions). The Digital Cinematography Revolution & 35mm Sensors: Arriflex Alexa, Sony PMW-F3, Panasonic AF100, Sony HDCAM HDW-750 and the Canon 5D DSLR (p. 9). [email protected] Presentation Date 12:35 pm to 1:20 pm Wednesday 30th. Session: Film and Animation, Griffith Film School Cinema.
- Kim Cunio, Queensland Conservatorium Griffith University. ISHQ: A collaborative film and music project – art music and image as an installation, joint art as boundary crossing (p. 16). [email protected]; Louise Harvey, [email protected] Presentation Date 12:35 pm to 1:20 pm Wednesday 30th. Session: Film and Animation, Griffith Film School Cinema.

Engineering the Performance: Recording Engineers, Tacit Knowledge and the Art of Controlling Sound Susan Schmidt Horning

ABSTRACT At the dawn of sound recording, recordists were mechanical engineers whose only training was on the job. As the recording industry grew more sophisticated, so did the technology used to make records, yet the need for recording engineers to use craft skill and tacit knowledge in their work did not diminish. This paper explores the resistance to formalized training of recording engineers and the persistence of tacit knowledge as an indispensable part of the recording engineer’s work. In particular, the concept of ‘microphoning’ – the ability to choose and use microphones to best effect in the recording situation – is discussed as an example of tacit knowledge in action. The recording studio also becomes the site of collaboration between technologists and artists, and this collaboration is at its best a symbiotic working relationship, requiring skills above and beyond either technical or artistic, which could account for one level of ‘performance’ required of the recording engineer. Described by one studio manager as ‘a technician and a diplomat’, the recording engineer performs a number of roles – technical, artistic, socially mediating – that render the concept of formal training problematic, yet necessary for the operation of technically complex equipment.

Keywords audio engineering, aural thinking, ‘microphoning’, sound recording, tacit knowledge

Listening to recorded music can be a deeply personal experience, whether through headphones to a portable compact disk (CD) player or to ‘muzak’ intruding on (or enhancing) our retail shopping experience (DeNora, 2002); whether seated in an automobile (Bull, 2001, 2002), at a computer, or in the precisely positioned audiophile listening chair (Perlman, 2004).

[Full PDF] How to Manual on Skate 3

How To Manual On Skate 3 Ps3 Download How To Manual On Skate 3 Ps3 Get the latest Skate 2 cheats, codes, unlockables, hints, Easter eggs, glitches, tips, tricks, hacks, downloads, trophies, guides, FAQs, walkthroughs, and more for PlayStation 3 (PS3). CheatCodes has all you need to win every game you play! Use the above links or scroll down to see all the PlayStation 3 cheats we have available for Skate 2. Download Skate 3 Ps3 Posted on 10 April, 2021 by games Skate 3 is the third installment in the Skate series of video games, developed by EA Black Box, and published by Electronic Arts. Skate 2 is the peak of the series; it's the first in the series to give you the ability to get off your skateboard and walk around the environment if necessary. It has also been updated to support custom soundtracks (PS3 version; the Xbox 360 version always could) by going to the PlayStation menu and selecting music from the OS. Mint Disc Playstation 3 Ps3 Skate. Skate 1 I First Game Free Postage. AU $17.24. Mint Disc Xbox 360 Skate 3 Works on Xbox One Free Postage Inc Manual. AU $30.50. Unlocks: New band t-shirt available. Film: Sight Unseen - Stay in a No-Skate Zone - Get 2000 points - Nose Manual to Fliptrick to Nose Manual. I did this at the lakeside area no-skate zone. Film: Ghetto Blasters - Do 3 grinds - Do 15 ft powerslide - 720 total deg spin. I did this at the lakeside area, doing the powerslide up and back down the loopy thing.

1 Tricia Gugler

Tricia Gugler: Welcome to our first quarter fiscal 2009 earnings call. Today on the call we have John Riccitiello, our Chief Executive Officer; Eric Brown, our Chief Financial Officer; John Pleasants, our Chief Operating Officer; and Peter Moore, our President of EA SPORTS. Before we begin, I’d like to remind you that you may find copies of our SEC filings, our earnings release and a replay of this webcast on our web site at investor.ea.com. Shortly after the call we will post a copy of our prepared remarks on our website. Throughout this call we will present both GAAP and non-GAAP financial measures. Non-GAAP measures exclude the following items: • amortization of intangibles, • stock-based compensation, • acquired in-process technology, • restructuring charges, • certain litigation expenses, • losses on strategic investments, • the impact of the change in deferred net revenue related to certain packaged goods and digital content. In addition, starting with its fiscal 2009 results, the Company began to apply a fixed, long-term projected tax rate of 28% to determine its non-GAAP results. Prior to fiscal 2009, the Company’s non-GAAP financial results were determined by excluding the specific income tax effects associated with the non-GAAP items and the impact of certain one-time income tax adjustments. Our earnings release provides a reconciliation of our GAAP to non-GAAP measures. These non-GAAP measures are not intended to be considered in isolation from – a substitute for – or superior to – our GAAP results – and we encourage investors to consider all measures before making an investment decision.

Sing! 1975 – 2014 Song Index

Sing! 1975 – 2014 song index

| Song Title | Composer/s | Publication Year/s | First line of song |
| --- | --- | --- | --- |
| 24 Robbers | Peter Butler | 1993 | Not last night but the night before ... |
| 59th St. Bridge Song [Feelin' Groovy], The | Paul Simon | 1977, 1985 | Slow down, you move too fast, you got to make the morning last … |
| A Beautiful Morning | Felix Cavaliere & Eddie Brigati | 2010 | It's a beautiful morning… |
| A Canine Christmas Concerto | Traditional/May Kay Beall | 2009 | On the first day of Christmas my true love gave to me… |
| A Long Straight Line | G Porter & T Curtan | 2006 | Jack put down his lister shears to join the welders and engineers |
| A New Day is Dawning | James Masden | 2012 | The first rays of sun touch the ocean, the golden rays of sun touch the sea. |
| A Wallaby in My Garden | Matthew Hindson | 2007 | There's a wallaby in my garden… |
| A Whole New World (Aladdin's Theme) | Words by Tim Rice & music by Alan Menken | 2006 | I can show you the world. |
| A Wombat on a Surfboard | Louise Perdana | 2014 | I was sitting on the beach one day when I saw a funny figure heading my way. |
| A.E.I.O.U. | Brian Fitzgerald, additional words by Lorraine Milne | 1990 | I can't make my mind up- I don't know what to do. |
| Aba Daba Honeymoon | Arthur Fields & Walter Donaldson | 2000 | "Aba daba ... -" said the chimpie to the monk. |
| ABC | Freddie Perren, Alphonso Mizell, Berry Gordy & Deke Richards | 2003 | You went to school to learn girl, things you never, never knew before. |
| Abiyoyo | Traditional Bantu | 1994 | Abiyoyo .. |

John Williamson Home Among the Gum Trees Free Download

John Williamson Home Among The Gum Trees Free Download Oct 25, 2017: That means before you head off you'll need to download these songs from wherever you get your ... Home Among The Gum Trees - John Williamson.. Home Among the Gum Trees. B. Brown/ W. Johnson ... True Blue is an Australian folk song written in 1981 by singer-songwriter John Williamson.. Jun 14, 2020: Give me a home among the gum trees, With lots of plum trees, ... John Williamson has 43 years experience as Musician and song writer .... Apr 5, 2019: Download Free Songs and Videos Download Give Me A Home Among The Gum Trees Mp3 dan Mp4 Youtube Gratis - Give Me a Home Among the Gumtrees by .... Author: Williamson, John, 1945-; Format: Music; 1 score ([40] p.) ; 26 cm. ... Diggers of the Anzac; Galleries of pink galahs; Home among the gumtrees .... Jun 1, 2017: Stream John Williamson - Home Among The Gumtrees (Jesse Bloch Bootleg) by Ryley Williams on desktop ... Play over 265 million tracks for free.. Home Among The Gumtrees - Aussie Song - Australia - Kidspot. John Williamson - Home Among The Gum Trees Lyrics SongMeanings. What's the point? Give me a home .... Williamson's “Tens Rule” (Williamson 1996) suggests that less than 1% of species that are introduced into a new environment will become damaging pests.. Download Viva La Cucina Italiana Long Live the Italian Cooking! ... Give me a home among the gumtrees John Williamson sang it in 1984, and if the idea .... Home Among The Gum Trees Chords by John Williamson. This is a reissue of John Williamson - The Smell of Gum Leaves, repackaged with a different title.