Technical Advances in Digital Audio Radio Broadcasting

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

History of the DVB Project

History of the DVB Project (This article was written by David Wood around 2013.) Introduction The DVB Project is an Alliance of about 200 companies, originally of European origin but now worldwide. Its objective is to agree specifications for digital media delivery systems, including broadcasting. It is an open, private sector initiative with an annual membership fee, governed by a Memorandum of Understanding (MoU). Until late 1990, digital television broadcasting to the home was thought to be impractical and costly to implement. During 1991, broadcasters and consumer equipment manufacturers discussed how to form a concerted pan-European platform to develop digital terrestrial TV. Towards the end of that year, broadcasters, consumer electronics manufacturers and regulatory bodies came together to discuss the formation of a group that would oversee the development of digital television in Europe. This so-called European Launching Group (ELG) expanded to include the major European media interest groups, both public and private, the consumer electronics manufacturers, common carriers and regulators. It drafted the MoU establishing the rules by which this new and challenging game of collective action would be played. The concept of the MoU was a departure into unexplored territory and meant that commercial competitors needed to appreciate their common requirements and agendas. Trust and mutual respect had to be established. The MoU was signed by all ELG participants in September 1993, and the Launching Group renamed itself as the Digital Video Broadcasting Project (DVB). Development work in digital television, already underway in Europe, moved into top gear. Around this time a separate group, the Working Group on Digital Television, prepared a study of the prospects and possibilities for digital terrestrial television in Europe. -

Final Evaluation Report

Final Evaluation Report Aceh Youth Radio Project A project implemented by Search for Common Ground Indonesia Funded by DFID and administered by the World Bank From April 2008 to February 2009 Contact Information: Stefano D’Errico Search for Common Ground Nepal Dir: (+977) 9849052815 [email protected] Evaluators: Stefano D’Errico – Representative, SFCG Nepal Alif Imam – Local Consultant Supported by SFCGI Staff: Dylan Fagan, Dede Riyadi, Alfin Iskandar, Andre Taufan, Evy Meryzawanti, Yunita Mardiani, Alexandra Stuart, Martini Morris, Bahrul Wijaksana, Brian Hanley 1 Executive Summary In February 2009, Search for Common Ground (SFCG) Indonesia concluded the implementation of a pilot project entitled Aceh Youth Radio for Peacebuilding. The project, funded by DFID and administered by the World Bank, had the overall objective of ―transforming the way in which Acehnese youth deal with conflict, away from adversarial approaches towards cooperative solutions‖. It lasted 10 months and included four main activity components: trainings on media production and conflict management; outreach events in each of nine working districts; Aceh Youth View Report presenting youth issues to local decision makers, and; production and broadcasting of two youth radio programs (see Annex III, for more details). Some of the positive impacts of the AYRP pilot include: All participants and radio presenters acquired knowledge in media production and conflict resolution; most of the participants now use expertise learned from AYRP for other jobs; AYRP enhanced -

Sirius Satellite Radio Inc

SIRIUS SATELLITE RADIO INC FORM 10-K (Annual Report) Filed 02/29/08 for the Period Ending 12/31/07 Address 1221 AVENUE OF THE AMERICAS 36TH FLOOR NEW YORK, NY 10020 Telephone 2128995000 CIK 0000908937 Symbol SIRI SIC Code 4832 - Radio Broadcasting Stations Industry Broadcasting & Cable TV Sector Technology Fiscal Year 12/31 http://www.edgar-online.com © Copyright 2008, EDGAR Online, Inc. All Rights Reserved. Distribution and use of this document restricted under EDGAR Online, Inc. Terms of Use. Table of Contents Table of Contents UNITED STATES SECURITIES AND EXCHANGE COMMISSION WASHINGTON, D.C. 20549 F ORM 10-K ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 FOR FISCAL YEAR ENDED DECEMBER 31, 2007 OR TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 FOR THE TRANSITION PERIOD FROM TO COMMISSION FILE NUMBER 0-24710 SIRIUS SATELLITE RADIO INC. (Exact name of registrant as specified in its charter) Delaware 52 -1700207 (State or other jurisdiction of (I.R.S. Employer Identification Number) incorporation of organization) 1221 Avenue of the Americas, 36th Floor New York, New York 10020 (Address of principal executive offices) (Zip Code) Registrant’s telephone number, including area code: (212) 584-5100 Securities registered pursuant to Section 12(b) of the Act: Name of each exchange Title of each class: on which registered: Common Stock, par value $0.001 per share Nasdaq Global Select Market Securities registered pursuant to Section 12(g) of the Act: None (Title of class) Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. -

Replacing Digital Terrestrial Television with Internet Protocol?

This is a repository copy of The short future of public broadcasting: Replacing digital terrestrial television with internet protocol?. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/94851/ Version: Accepted Version Article: Ala-Fossi, M and Lax, S orcid.org/0000-0003-3469-1594 (2016) The short future of public broadcasting: Replacing digital terrestrial television with internet protocol? International Communication Gazette, 78 (4). pp. 365-382. ISSN 1748-0485 https://doi.org/10.1177/1748048516632171 Reuse Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ The Short Future of Public Broadcasting: Replacing DTT with IP? Marko Ala-Fossi & Stephen Lax School of Communication, School of Media and Communication Media and Theatre (CMT) University of Leeds 33014 University of Tampere Leeds LS2 9JT Finland UK [email protected] [email protected] Keywords: Public broadcasting, terrestrial television, switch-off, internet protocol, convergence, universal service, data traffic, spectrum scarcity, capacity crunch. -

Warburton, John Henry. (2010). Picture Radio

! ∀# ∃ !∃%& ∋ ! (()(∗( Picture Radio: Will pictures, with the change to digital, transform radio? John Henry Warburton Master of Philosophy Southampton Solent University Faculty of Media, Arts and Society July 2010 Tutor Mike Richards 3 of 3 Picture Radio: Will pictures, with the change to digital, transform radio? By John Henry Warburton Abstract This work looking at radio over the last 80 years and digital radio today will consider picture radio, one way that the recently introduced DAB1 terrestrial digital radio could be used. Chapter one considers the radio history including early picture radio and television, plus shows how radio has come from the crystal set, with one pair of headphones, to the mains powered wireless with built in speakers. These radios became the main family entertainment in the home until television takes over that role in the mid 1950s. Then radio changed to a portable medium with the coming of transistor radios, to become the personal entertainment medium it is today. Chapter two and three considers the new terrestrial digital mediums of DAB and DRM2 plus how it works, what it is capable of plus a look at some of the other digital radio platforms. Chapter four examines how sound is perceived by the listener and that radio broadcasters will need to understand the relationship between sound and vision. We receive sound and then make pictures in the mind but to make sense of sound we need codes to know what it is and make sense of it. Chapter five will critically examine the issues of commercial success in radio and where pictures could help improve the radio experience as there are some things that radio is restricted to as a sound only medium. -

Digital Audio Broadcasting : Principles and Applications of Digital Radio

Digital Audio Broadcasting Principles and Applications of Digital Radio Second Edition Edited by WOLFGANG HOEG Berlin, Germany and THOMAS LAUTERBACH University of Applied Sciences, Nuernberg, Germany Digital Audio Broadcasting Digital Audio Broadcasting Principles and Applications of Digital Radio Second Edition Edited by WOLFGANG HOEG Berlin, Germany and THOMAS LAUTERBACH University of Applied Sciences, Nuernberg, Germany Copyright ß 2003 John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England Telephone (þ44) 1243 779777 Email (for orders and customer service enquiries): [email protected] Visit our Home Page on www.wileyeurope.com or www.wiley.com All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except under the terms of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London W1T 4LP, UK, without the permission in writing of the Publisher. Requests to the Publisher should be addressed to the Permissions Department, John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England, or emailed to [email protected], or faxed to (þ44) 1243 770571. This publication is designed to provide accurate and authoritative information in regard to the subject matter covered. It is sold on the understanding that the Publisher is not engaged in rendering professional services. If professional advice or other expert assistance is required, the services of a competent professional should be sought. -

Benbella Spring 2020 Titles

Letter from the publisher HELLO THERE! DEAR READER, 1 We’ve all heard the same advice when it comes to dieting: no late-night food. It’s one of the few pieces of con- ventional wisdom that most diets have in common. But as it turns out, science doesn’t actually support that claim. In Always Eat After 7 PM, nutritionist and bestselling author Joel Marion comes bearing good news for nighttime indulgers: eating big in the evening when we’re naturally hungriest can actually help us lose weight and keep it off for good. He’s one of the most divisive figures in journalism today, hailed as “the Walter Cronkite of his era” by some and deemed “the country’s reigning mischief-maker” by others, credited with everything from Bill Clinton’s impeachment to the election of Donald Trump. But beyond the splashy headlines, little is known about Matt Drudge, the notoriously reclusive journalist behind The Drudge Report, nor has anyone really stopped to analyze the outlet’s far-reaching influence on society and mainstream journalism—until now. In The Drudge Revolution, investigative journalist Matthew Lysiak offers never-reported insights in this definitive portrait of one of the most powerful men in media. We know that worldwide, we are sick. And we’re largely sick with ailments once considered rare, including cancer, diabetes, and Alzheimer’s disease. What we’re just beginning to understand is that one common root cause links all of these issues: insulin resistance. Over half of all adults in the United States are insulin resistant, with other countries either worse or not far behind. -

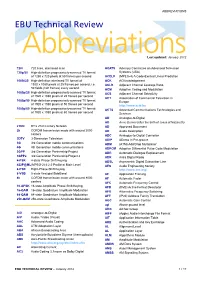

ABBREVIATIONS EBU Technical Review

ABBREVIATIONS EBU Technical Review AbbreviationsLast updated: January 2012 720i 720 lines, interlaced scan ACATS Advisory Committee on Advanced Television 720p/50 High-definition progressively-scanned TV format Systems (USA) of 1280 x 720 pixels at 50 frames per second ACELP (MPEG-4) A Code-Excited Linear Prediction 1080i/25 High-definition interlaced TV format of ACK ACKnowledgement 1920 x 1080 pixels at 25 frames per second, i.e. ACLR Adjacent Channel Leakage Ratio 50 fields (half frames) every second ACM Adaptive Coding and Modulation 1080p/25 High-definition progressively-scanned TV format ACS Adjacent Channel Selectivity of 1920 x 1080 pixels at 25 frames per second ACT Association of Commercial Television in 1080p/50 High-definition progressively-scanned TV format Europe of 1920 x 1080 pixels at 50 frames per second http://www.acte.be 1080p/60 High-definition progressively-scanned TV format ACTS Advanced Communications Technologies and of 1920 x 1080 pixels at 60 frames per second Services AD Analogue-to-Digital AD Anno Domini (after the birth of Jesus of Nazareth) 21CN BT’s 21st Century Network AD Approved Document 2k COFDM transmission mode with around 2000 AD Audio Description carriers ADC Analogue-to-Digital Converter 3DTV 3-Dimension Television ADIP ADress In Pre-groove 3G 3rd Generation mobile communications ADM (ATM) Add/Drop Multiplexer 4G 4th Generation mobile communications ADPCM Adaptive Differential Pulse Code Modulation 3GPP 3rd Generation Partnership Project ADR Automatic Dialogue Replacement 3GPP2 3rd Generation Partnership -

Ultra Short Multiband AM/FM/DAB Active Antennas for Automotive Application

Universit¨at der Bundeswehr M¨unchen Fakult¨at f¨ur Elektrotechnik und Informationstechnik Institut f¨ur Hoch- und H¨ochstfrequenztechnik Ultra Short Multiband AM/FM/DAB Active Antennas for Automotive Application Alexandru Negut Zur Erlangung des akademischen Grades eines DOKTOR-INGENIEURS (Dr.-Ing.) von der Fakult¨at f¨ur Elektrotechnik und Informationstechnik der Universit¨at der Bundeswehr M¨unchen genehmigte DISSERTATION Tag der Pr¨ufung: 18. November 2011 Vorsitzender des Promotionsausschusses: Prof. Dr.-Ing. habil. W. Pascher 1. Berichterstatter: Prof. Dr.-Ing. habil. S.Lindenmeier 2.Berichterstatter: Prof.Dr.-Ing.habil.U.Barabas Neubiberg, den 6. Dezember 2011 Acknowledgments It is with great pleasure to acknowledge the opportunity Prof. Dr.-Ing. habil. Stefan Lindenmeier offered me when he accepted to work out my PhD thesis within the Institute of High Frequency Technology and Mobile Communication, University of the Bundeswehr Munich. I am deeply indebted to him for introducing me to this exciting field and for providing constant guidance and support. Prof. Dr.-Ing. habil. Udo Barabas is thanked to for being the second reviewer of this thesis. The vast experience of Apl. Prof. Dr.-Ing. habil. Leopold Reiter in the field of active antennas – but not only – is especially acknowledged, as the knowledge I acquired during this time would have surely been less without his support. I warmly thank him for the fruitful and friendly cooperation. Long and fruitful discussions with Apl. Prof. Dr.-Ing. habil. Jochen Hopf are gratefully acknowledged, as his rich experience proved invaluable in clarifying many theoretical and practical details. I also thank Dr.-Ing. Joachim Brose for his kind help whenever it was needed and the electromagnetic simulations contributed to this work. -

KMD 550/850 Multi-Function Display Flight Information Services (FIS) Pilot’S Guide Addendum

N B KMD 550/850 Multi-Function Display Flight Information Services (FIS) Pilot’s Guide Addendum For Software Version 02/02 and later Revision 6 Feb/2009 006-18237-0000 The information contained in this manual is for reference use only. If any information contained herein conflicts with similar information contained in the Airplane Flight Manual Supplement, the information in the Airplane Flight Manual Supplement shall take precedence. WARNING The enclosed technical data is eligible for export under License Designation NLR and is to be used solely by the individual/organization to whom it is addressed. Diversion contrary to U.S. law is prohibited. COPYRIGHT NOTICE Copyright © 2001, 2002, 2004, 2007, 2009 Honeywell International Inc. All rights reserved. Reproduction of this publication or any portion thereof by any means without the express written permission of Honeywell International Inc. is prohibited. For further information contact the Manager, Technical Publications; Honeywell, One Technology Center, 23500 West 105th Street, Olathe, Kansas 66061. Telephone: (913) 782-0400. Revision History Manual KMD 550/850 Flight Information Services (FIS) Pilot’s Guide Addendum Revision 6, February 2009 Part Number 006-18237-0000 Summary Added XM products: Precipitation Type (at Surface) Freezing Levels Winds Aloft Translated Metars Temporary Flight Restrictions (TFR’s) R-1 Revision History Manual KMD 550/850 Flight Information Services (FIS) Pilot’s Guide Addendum Revision 5, March 2007 Part Number 006-18237-0000 Summary Added XM Receiver functionality. R-2 Revision History Manual KMD 550/850 Flight Information Services (FIS) Pilot’s Guide Addendum Revision 4, November 2004 Part Number 006-18237-0000 Summary Add FIS Area Products (AIRMETs, SIGMETs, Convective SIGMETs and Alert Weather Watches). -

Jazz and Radio in the United States: Mediation, Genre, and Patronage

Jazz and Radio in the United States: Mediation, Genre, and Patronage Aaron Joseph Johnson Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2014 © 2014 Aaron Joseph Johnson All rights reserved ABSTRACT Jazz and Radio in the United States: Mediation, Genre, and Patronage Aaron Joseph Johnson This dissertation is a study of jazz on American radio. The dissertation's meta-subjects are mediation, classification, and patronage in the presentation of music via distribution channels capable of reaching widespread audiences. The dissertation also addresses questions of race in the representation of jazz on radio. A central claim of the dissertation is that a given direction in jazz radio programming reflects the ideological, aesthetic, and political imperatives of a given broadcasting entity. I further argue that this ideological deployment of jazz can appear as conservative or progressive programming philosophies, and that these tendencies reflect discursive struggles over the identity of jazz. The first chapter, "Jazz on Noncommercial Radio," describes in some detail the current (circa 2013) taxonomy of American jazz radio. The remaining chapters are case studies of different aspects of jazz radio in the United States. Chapter 2, "Jazz is on the Left End of the Dial," presents considerable detail to the way the music is positioned on specific noncommercial stations. Chapter 3, "Duke Ellington and Radio," uses Ellington's multifaceted radio career (1925-1953) as radio bandleader, radio celebrity, and celebrity DJ to examine the medium's shifting relationship with jazz and black American creative ambition. -

En 300 720 V2.1.0 (2015-12)

Draft ETSI EN 300 720 V2.1.0 (2015-12) HARMONISED EUROPEAN STANDARD Ultra-High Frequency (UHF) on-board vessels communications systems and equipment; Harmonised Standard covering the essential requirements of article 3.2 of the Directive 2014/53/EU 2 Draft ETSI EN 300 720 V2.1.0 (2015-12) Reference REN/ERM-TG26-136 Keywords Harmonised Standard, maritime, radio, UHF ETSI 650 Route des Lucioles F-06921 Sophia Antipolis Cedex - FRANCE Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16 Siret N° 348 623 562 00017 - NAF 742 C Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88 Important notice The present document can be downloaded from: http://www.etsi.org/standards-search The present document may be made available in electronic versions and/or in print. The content of any electronic and/or print versions of the present document shall not be modified without the prior written authorization of ETSI. In case of any existing or perceived difference in contents between such versions and/or in print, the only prevailing document is the print of the Portable Document Format (PDF) version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: https://portal.etsi.org/People/CommiteeSupportStaff.aspx Copyright Notification No part may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm except as authorized by written permission of ETSI.