Measures of Skewness

Recommended publications

Lecture 22: Bivariate Normal Distribution

Statistics 104, Colin Rundel, Lecture 22: Bivariate Normal Distribution, April 11, 2012. 6.5 Conditional Distributions.

General Bivariate Normal. Let $Z_1, Z_2 \sim N(0, 1)$, which we will use to build a general bivariate normal distribution.

$$f(z_1, z_2) = \frac{1}{2\pi} \exp\left[-\frac{1}{2}\left(z_1^2 + z_2^2\right)\right]$$

We want to transform these unit normal distributions to have the following arbitrary parameters: $\mu_X, \mu_Y, \sigma_X, \sigma_Y, \rho$.

$$X = \sigma_X Z_1 + \mu_X$$
$$Y = \sigma_Y\left[\rho Z_1 + \sqrt{1 - \rho^2}\, Z_2\right] + \mu_Y$$

General Bivariate Normal - Marginals. First, let's examine the marginal distributions of $X$ and $Y$:

$$X = \sigma_X Z_1 + \mu_X = \sigma_X N(0, 1) + \mu_X = N(\mu_X, \sigma_X^2)$$

$$Y = \sigma_Y\left[\rho Z_1 + \sqrt{1 - \rho^2}\, Z_2\right] + \mu_Y = \sigma_Y\left[\rho N(0, 1) + \sqrt{1 - \rho^2}\, N(0, 1)\right] + \mu_Y = \sigma_Y\left[N(0, \rho^2) + N(0, 1 - \rho^2)\right] + \mu_Y = \sigma_Y N(0, 1) + \mu_Y = N(\mu_Y, \sigma_Y^2)$$

General Bivariate Normal - Cov/Corr. Second, we can find $\mathrm{Cov}(X, Y)$ and $\rho(X, Y)$:

$$\mathrm{Cov}(X, Y) = E\left[(X - E(X))(Y - E(Y))\right]$$
$$= E\left[(\sigma_X Z_1 + \mu_X - \mu_X)\left(\sigma_Y\left[\rho Z_1 + \sqrt{1 - \rho^2}\, Z_2\right] + \mu_Y - \mu_Y\right)\right]$$
$$= E\left[(\sigma_X Z_1)\left(\sigma_Y\left[\rho Z_1 + \sqrt{1 - \rho^2}\, Z_2\right]\right)\right]$$
$$= \sigma_X \sigma_Y E\left[\rho Z_1^2 + \sqrt{1 - \rho^2}\, Z_1 Z_2\right]$$
$$= \sigma_X \sigma_Y \rho\, E[Z_1^2] = \sigma_X \sigma_Y \rho$$

$$\rho(X, Y) = \frac{\mathrm{Cov}(X, Y)}{\sigma_X \sigma_Y} = \rho$$

General Bivariate Normal - RNG. Consequently, if we want to generate a Bivariate Normal random variable

Multivariate Change of Variables. Let $X_1, \ldots, X_n$ have a continuous joint distribution with pdf $f$ defined of $S$.
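
The construction of X and Y from two unit normals can be sketched in Python. This is only an illustration under stated assumptions: the function names, the seed and the parameter values (1.0, -2.0, 2.0, 0.5, 0.7) are hypothetical and do not come from the lecture, and Z1 and Z2 are drawn independently.

```python
import random
from math import sqrt
from statistics import fmean


def bivariate_normal(mu_x, mu_y, sigma_x, sigma_y, rho, rng):
    """Draw one (X, Y) pair from two independent standard normals Z1, Z2."""
    z1 = rng.gauss(0.0, 1.0)
    z2 = rng.gauss(0.0, 1.0)
    x = sigma_x * z1 + mu_x
    y = sigma_y * (rho * z1 + sqrt(1.0 - rho ** 2) * z2) + mu_y
    return x, y


def sample_correlation(pairs):
    """Pearson correlation of a list of (x, y) pairs."""
    xs, ys = zip(*pairs)
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


rng = random.Random(104)  # arbitrary seed, chosen only for reproducibility
# Hypothetical parameters: mu_X = 1, mu_Y = -2, sigma_X = 2, sigma_Y = 0.5, rho = 0.7
pairs = [bivariate_normal(1.0, -2.0, 2.0, 0.5, 0.7, rng) for _ in range(50_000)]
print("sample correlation:", round(sample_correlation(pairs), 3))
```

With a large sample, the printed correlation should sit close to the chosen ρ, which is what the Cov/Corr derivation predicts.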

Applied Biostatistics: Mean and Standard Deviation

Health Sciences M.Sc. Programme, Applied Biostatistics: Mean and Standard Deviation.

The mean. The median is not the only measure of central value for a distribution. Another is the arithmetic mean or average, usually referred to simply as the mean. This is found by taking the sum of the observations and dividing by their number. The mean is often denoted by a little bar over the symbol for the variable, e.g. $\bar{x}$. The sample mean has much nicer mathematical properties than the median and is thus more useful for the comparison methods described later. The median is a very useful descriptive statistic, but not much used for other purposes.

Median, mean and skewness. The sum of the 57 FEV1s is 231.51 and hence the mean is 231.51/57 = 4.06. This is very close to the median, 4.1, so the median is within 1% of the mean. This is not so for the triglyceride data. The median triglyceride is 0.46 but the mean is 0.51, which is higher. The median is 10% away from the mean. If the distribution is symmetrical the sample mean and median will be about the same, but in a skew distribution they will not. If the distribution is skew to the right, as for serum triglyceride, the mean will be greater; if it is skew to the left, the median will be greater. This is because the values in the tails affect the mean but not the median. Figure 1 shows the positions of the mean and median on the histogram of triglyceride.
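
The relationship between mean, median and the direction of skew can be checked with a short Python sketch. The raw FEV1 and triglyceride observations are not reproduced in the excerpt, so the two samples below are hypothetical values invented for illustration; only the FEV1 sum and count come from the text.

```python
from statistics import fmean, median


def mean_and_median(values):
    """Return the mean, the median, and their gap as a percentage of the mean."""
    m = fmean(values)
    med = median(values)
    gap_pct = 100.0 * (m - med) / m
    return m, med, gap_pct


# Hypothetical right-skewed sample: most values are small, a few are large.
right_skewed = [0.2, 0.3, 0.3, 0.4, 0.4, 0.5, 0.5, 0.6, 0.9, 1.6]
# Mirror image of the same sample, which is skewed to the left.
left_skewed = [2.0 - v for v in right_skewed]

for label, sample in (("right-skewed", right_skewed), ("left-skewed", left_skewed)):
    m, med, gap = mean_and_median(sample)
    print(f"{label}: mean={m:.2f} median={med:.2f} gap={gap:+.1f}% of mean")

# The FEV1 mean quoted in the text, from its sum and count.
print("FEV1 mean:", round(231.51 / 57, 2))
```

A positive gap means the mean exceeds the median (right skew), and a negative gap means the median is larger (left skew).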

1. How Different Is the T Distribution from the Normal?

Statistics 101–106, Lecture 7 (20 October 98), © David Pollard.

Read M&M §7.1 and §7.2, ignoring starred parts. Reread M&M §3.2. The effects of estimated variances on normal approximations. t-distributions. Comparison of two means: pooling of estimates of variances, or paired observations.

In Lecture 6, when discussing comparison of two Binomial proportions, I was content to estimate unknown variances when calculating statistics that were to be treated as approximately normally distributed. You might have worried about the effect of variability of the estimate. W. S. Gosset (“Student”) considered a similar problem in a very famous 1908 paper, where the role of Student’s t-distribution was first recognized. Gosset discovered that the effect of estimated variances could be described exactly in a simplified problem where $n$ independent observations $X_1, \ldots, X_n$ are taken from a normal distribution, $N(\mu, \sigma)$. The sample mean, $\bar{X} = (X_1 + \ldots + X_n)/n$, has a $N(\mu, \sigma/\sqrt{n})$ distribution. The random variable

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$$

has a standard normal distribution. If we estimate $\sigma^2$ by the sample variance, $s^2 = \sum_i (X_i - \bar{X})^2/(n - 1)$, then the resulting statistic,

$$T = \frac{\bar{X} - \mu}{s/\sqrt{n}}$$

no longer has a normal distribution. It has a t-distribution on $n - 1$ degrees of freedom.

Remark. I have written $T$, instead of the $t$ used by M&M page 505. I find it causes confusion that $t$ refers to both the name of the statistic and the name of its distribution.

As you will soon see, the estimation of the variance has the effect of spreading out the distribution a little beyond what it would be if $\sigma$ were used.
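
The spreading effect of estimating the variance can be seen in a small simulation. The sketch below is illustrative only: the function names, seed, sample sizes and the cutoff 1.96 (the usual two-sided 5% point of the standard normal) are assumptions made here, not part of the lecture.

```python
import random
import statistics
from math import sqrt


def t_statistic(sample, mu):
    """T = (sample mean - mu) / (s / sqrt(n)), with s the sample standard deviation."""
    n = len(sample)
    xbar = statistics.fmean(sample)
    s = statistics.stdev(sample)  # divides by n - 1
    return (xbar - mu) / (s / sqrt(n))


def tail_fraction(n, reps=20_000, cutoff=1.96, seed=0):
    """Fraction of simulated T values with |T| > cutoff for N(0, 1) samples of size n."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if abs(t_statistic(sample, 0.0)) > cutoff:
            hits += 1
    return hits / reps


small = tail_fraction(5)   # T has 4 degrees of freedom
large = tail_fraction(50)  # T has 49 degrees of freedom
print(f"P(|T| > 1.96), n=5:  {small:.3f}")
print(f"P(|T| > 1.96), n=50: {large:.3f}")
```

If T were standard normal, both fractions would be near 0.05. The small-sample fraction comes out noticeably larger, and the gap shrinks as n grows.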

Fan Chart: Methodology and Its Application to Inflation Forecasting in India

RBI Working Paper Series, W P S (DEPR): 5 / 2011. Fan Chart: Methodology and its Application to Inflation Forecasting in India. Nivedita Banerjee and Abhiman Das, Department of Economic and Policy Research, May 2011.

The Reserve Bank of India (RBI) introduced the RBI Working Papers series in May 2011. These papers present research in progress of the staff members of RBI and are disseminated to elicit comments and further debate. The views expressed in these papers are those of the authors and not those of RBI. Comments and observations may please be forwarded to the authors. Citation and use of such papers should take into account their provisional character. Copyright: Reserve Bank of India 2011.

Abstract. Ever since the Bank of England first published its inflation forecasts in February 1996, the Fan Chart has been an integral part of inflation reports of central banks around the world. The Fan Chart is basically used to improve presentation: to focus attention on the whole of the forecast distribution, rather than on small changes to the central projection. However, the forecast distribution is a technical concept originating from the statistical sampling distribution. In this paper, we have presented the technical details underlying the derivation of the Fan Chart used in representing the uncertainty in inflation forecasts. The uncertainty occurs because of the inter-play of the macro-economic variables affecting inflation. The uncertainty in the macro-economic variables is based on their historical standard deviation of the forecast errors, but we also allow these to be subjectively adjusted.

Characteristics and Statistics of Digital Remote Sensing Imagery (1)

Characteristics and statistics of digital remote sensing imagery (1)

Digital Image
• With raster data structure, each image is treated as an array of values of the pixels.
• Image data is organized as rows and columns (or lines and pixels), starting from the upper left corner of the image.
• Each pixel (picture element) is treated as a separate unit.

Statistics of Digital Images Help:
• Look at the frequency of occurrence of individual brightness values in the image displayed;
• View individual pixel brightness values at specific locations or within a geographic area;
• Compute univariate descriptive statistics to determine if there are unusual anomalies in the image data; and
• Compute multivariate statistics to determine the amount of between-band correlation (e.g., to identify redundancy).

Statistics of Digital Images
It is necessary to calculate fundamental univariate and multivariate statistics of the multispectral remote sensor data. This involves identification and calculation of
– maximum and minimum value
– the range, mean, standard deviation
– between-band variance-covariance matrix
– correlation matrix, and
– frequencies of brightness values
The results of the above can be used to produce histograms. Such statistics provide information necessary for processing and analyzing remote sensing data.

A “population” is an infinite or finite set of elements. A “sample” is a subset of the elements taken from a population used to make inferences about certain characteristics of the population (e.g., training signatures).

Large samples drawn randomly from natural populations usually produce a symmetrical frequency distribution. Most values are clustered around the central value, and the frequency of occurrence declines away from this central point.
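
The univariate and between-band statistics listed above can be sketched as follows. The two bands and their brightness values are hypothetical, invented only for illustration. A real image would hold far more pixels and would normally be processed with an array library rather than plain lists.

```python
from collections import Counter
from math import sqrt
from statistics import fmean

# Hypothetical brightness values (0-255) for the same six pixels in two bands.
bands = {
    "band1": [52, 60, 61, 75, 80, 92],
    "band2": [48, 55, 63, 70, 85, 90],
}


def covariance(xs, ys):
    """Sample covariance (divides by n - 1); covariance(xs, xs) is the variance."""
    mx, my = fmean(xs), fmean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def univariate(xs):
    """Minimum, maximum, range, mean and standard deviation of one band."""
    return {
        "min": min(xs),
        "max": max(xs),
        "range": max(xs) - min(xs),
        "mean": fmean(xs),
        "sd": sqrt(covariance(xs, xs)),
    }


names = list(bands)
# Between-band variance-covariance matrix and the matching correlation matrix.
cov = [[covariance(bands[a], bands[b]) for b in names] for a in names]
corr = [
    [cov[i][j] / sqrt(cov[i][i] * cov[j][j]) for j in range(len(names))]
    for i in range(len(names))
]
# Frequency of each brightness value in band 1 (the raw material for a histogram).
frequencies = Counter(bands["band1"])

for name in names:
    print(name, univariate(bands[name]))
print("covariance matrix:", cov)
print("correlation matrix:", corr)
```

An off-diagonal correlation close to 1 would suggest that the two bands carry largely redundant information.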

Linear Regression

eesc BC 3017 statistics notes

LINEAR REGRESSION

Systematic variation in the true value

Up to now, we have been thinking about measurement as sampling of values from an ensemble of all possible outcomes in order to estimate the true value (which would, according to our previous discussion, be well approximated by the mean of a very large sample). Given a sample of outcomes, we have sometimes checked the hypothesis that it is a random sample from some ensemble of outcomes, by plotting the data points against some other variable, such as ordinal position. Under the hypothesis of random selection, no clear trend should appear. However, the contrary case, where one finds a clear trend, is very important. A clear trend can be a discovery, rather than a nuisance! Whether it is a discovery or a nuisance (or both) depends on what one finds out about the reasons underlying the trend. In either case one must be prepared to deal with trends in analyzing data.

Figure 2.1 (a) shows a plot of (hypothetical) data in which there is a very clear trend. The y axis scales concentration of coliform bacteria sampled from rivers in various regions (units are colonies per liter). The x axis is a hypothetical index of regional urbanization, ranging from 1 to 10. The hypothetical data consist of 6 different measurements at each level of urbanization. The mean of each set of 6 measurements gives a rough estimate of the true value for coliform bacteria concentration for rivers in a region with that urbanization level. The jagged dark line drawn on the graph connects these estimates of true value and makes the trend quite clear: more extensive urbanization is associated with higher true values of bacteria concentration.

Finding Basic Statistics Using Minitab

Finding Basic Statistics Using Minitab

1. Put your data values in one of the columns of the Minitab worksheet.
2. Add a variable name in the gray box just above the data values.
3. Click on “Stat”, then click on “Basic Statistics”, and then click on “Display Descriptive Statistics”.
4. Choose the variable you want the basic statistics for and click on “Select”.
5. Click on the “Statistics” box and then check the box next to each statistic you want to see, and uncheck the boxes next to those you do not want to see.
6. Click on “OK” in that window and click on “OK” in the next window.
7. The values of all the statistics you selected will appear in the Session window.

Example (Navidi & Monk, Elementary Statistics, 2nd edition, #31 p. 143, 1st 4 columns): This data gives the number of tribal casinos in a sample of 16 states.

3 7 14 114 2 3 7 8 26 4 3 14 70 3 21 1

Open Minitab and enter the data under C1. The table below shows a portion of the entered data.

| Row | C1 (Casinos) |
|-----|--------------|
| 1   | 3            |
| 2   | 7            |
| 3   | 14           |
| 4   | 114          |
| 5   | 2            |
| 6   | 3            |
| 7   | 7            |
| 8   | 8            |
| 9   | 26           |
| 10  | 4            |

Now click on “Stat” and then choose “Basic Statistics” and “Display Descriptive Statistics”. Click in the box under “Variables:”, choose C1 from the window at left, and then click on the “Select” button. Next click on the “Statistics” button and choose which statistics you want to find. We will usually be interested in the following statistics: mean, standard error of the mean, standard deviation, minimum, maximum, range, first quartile, median, third quartile, interquartile range, mode, and skewness.
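
For readers without Minitab, the same list of statistics can be approximated in Python for the casino data. This is a sketch, not a reproduction of Minitab's output: the function names are hypothetical, the quartiles use the default "exclusive" method of `statistics.quantiles`, and the skewness uses the adjusted coefficient n / ((n - 1)(n - 2)) · Σ((x − mean)/s)³. Whether these conventions match Minitab's exactly is an assumption.

```python
from math import sqrt
from statistics import fmean, median, mode, quantiles, stdev

# Number of tribal casinos in the sample of 16 states, as listed in the example.
casinos = [3, 7, 14, 114, 2, 3, 7, 8, 26, 4, 3, 14, 70, 3, 21, 1]


def adjusted_skewness(values):
    """Adjusted sample skewness: n / ((n-1)(n-2)) * sum(((x - mean) / s) ** 3)."""
    n = len(values)
    m = fmean(values)
    s = stdev(values)
    return n / ((n - 1) * (n - 2)) * sum(((v - m) / s) ** 3 for v in values)


def describe(values):
    """Collect the descriptive statistics named in the Minitab walkthrough."""
    q1, _, q3 = quantiles(values, n=4)  # default method is "exclusive"
    s = stdev(values)
    return {
        "mean": fmean(values),
        "se_mean": s / sqrt(len(values)),
        "stdev": s,
        "min": min(values),
        "max": max(values),
        "range": max(values) - min(values),
        "q1": q1,
        "median": median(values),
        "q3": q3,
        "iqr": q3 - q1,
        "mode": mode(values),
        "skewness": adjusted_skewness(values),
    }


for name, value in describe(casinos).items():
    print(f"{name:>9}: {value}")
```

The mean (18.75) is far above the median (7) and the skewness is positive, which is consistent with a strongly right-skewed sample driven by the two large values, 70 and 114.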

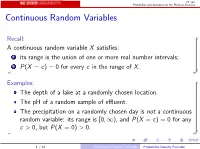

Continuous Random Variables

ST 380 Probability and Statistics for the Physical Sciences. Continuous Random Variables.

Recall: A continuous random variable X satisfies:
1. its range is the union of one or more real number intervals;
2. P(X = c) = 0 for every c in the range of X.

Examples: The depth of a lake at a randomly chosen location. The pH of a random sample of effluent. The precipitation on a randomly chosen day is not a continuous random variable: its range is $[0, \infty)$, and P(X = c) = 0 for any c > 0, but P(X = 0) > 0.

Probability Density Function. Discretized Data. Suppose that we measure the depth of the lake, but round the depth off to some unit. The rounded value Y is a discrete random variable; we can display its probability mass function as a bar graph, because each mass actually represents an interval of values of X. In R: `source("discretize.R")`, `discretize(0.5)`.

As the rounding unit becomes smaller, the bar graph more accurately represents the continuous distribution: `discretize(0.25)`, `discretize(0.1)`. When the rounding unit is very small, the bar graph approximates a smooth function: `discretize(0.01)`, `plot(f, from = 1, to = 5)`.

The probability that X is between two values a and b, P(a ≤ X ≤ b), can be approximated by P(a ≤ Y ≤ b).
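
The approximation of P(a ≤ X ≤ b) by P(a ≤ Y ≤ b) can be illustrated in Python. The contents of `discretize.R` and the lake-depth density are not shown in the excerpt, so the sketch below assumes a hypothetical normal depth distribution (mean 3, standard deviation 0.5). The function name `prob_rounded` is likewise made up for this example.

```python
import math
from statistics import NormalDist

# Hypothetical depth distribution; the lecture's actual density f is not given.
depth = NormalDist(mu=3.0, sigma=0.5)


def prob_rounded(a, b, unit, dist=depth):
    """P(a <= Y <= b), where Y is X rounded to the nearest multiple of `unit`.

    Each mass P(Y = y) equals the probability that X falls in
    [y - unit/2, y + unit/2], the interval of values that round to y.
    """
    k_lo = math.ceil(a / unit - 1e-9)   # small tolerance guards against float error
    k_hi = math.floor(b / unit + 1e-9)
    total = 0.0
    for k in range(k_lo, k_hi + 1):
        y = k * unit
        total += dist.cdf(y + unit / 2) - dist.cdf(y - unit / 2)
    return total


exact = depth.cdf(3.5) - depth.cdf(2.5)
for unit in (0.5, 0.25, 0.1, 0.01):
    approx = prob_rounded(2.5, 3.5, unit)
    print(f"unit={unit:<5} P(a<=Y<=b)={approx:.4f}  P(a<=X<=b)={exact:.4f}")
```

As the rounding unit shrinks, the discrete probability approaches the continuous one, mirroring the sequence of `discretize(...)` calls in the slides.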

The Central Limit Theorem (Review)

Introduction to Confidence Intervals: Solutions. STAT-UB.0103: Statistics for Business Control and Regression Models.

The Central Limit Theorem (Review)

1. You draw a random sample of size n = 64 from a population with mean µ = 50 and standard deviation σ = 16. From this, you compute the sample mean, $\bar{X}$.

(a) What are the expectation and standard deviation of $\bar{X}$?
Solution: $E[\bar{X}] = \mu = 50$; $\mathrm{sd}[\bar{X}] = \sigma/\sqrt{n} = 16/\sqrt{64} = 2$.

(b) Approximately what is the probability that the sample mean is above 54?
Solution: The sample mean has expectation 50 and standard deviation 2. By the central limit theorem, the sample mean is approximately normally distributed. Thus, by the empirical rule, there is roughly a 2.5% chance of being above 54 (2 standard deviations above the mean).

(c) Do you need any additional assumptions for part (b) to be true?
Solution: No. Since the sample size is large (n ≥ 30), the central limit theorem applies.

2. You draw a random sample of size n = 16 from a population with mean µ = 100 and standard deviation σ = 20. From this, you compute the sample mean, $\bar{X}$.

(a) What are the expectation and standard deviation of $\bar{X}$?
Solution: $E[\bar{X}] = \mu = 100$; $\mathrm{sd}[\bar{X}] = \sigma/\sqrt{n} = 20/\sqrt{16} = 5$.

(b) Approximately what is the probability that the sample mean is between 95 and 105?
Solution: The sample mean has expectation 100 and standard deviation 5. If it is approximately normal, then we can use the empirical rule to say that there is a 68% chance of being between 95 and 105 (within one standard deviation of its expectation).
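
The two solutions can be checked numerically with Python's standard library. The helper name `sample_mean_dist` is hypothetical. The sketch treats the sample mean as exactly normal, which is the approximation the solutions rely on (and, for n = 16, an extra assumption about the population).

```python
from math import sqrt
from statistics import NormalDist


def sample_mean_dist(mu, sigma, n):
    """Normal approximation to the sample mean: N(mu, sigma / sqrt(n))."""
    return NormalDist(mu, sigma / sqrt(n))


# Problem 1: n = 64, mu = 50, sigma = 16.
xbar1 = sample_mean_dist(50, 16, 64)
p_above_54 = 1 - xbar1.cdf(54)

# Problem 2: n = 16, mu = 100, sigma = 20.
xbar2 = sample_mean_dist(100, 20, 16)
p_between = xbar2.cdf(105) - xbar2.cdf(95)

print("sd of sample mean (problem 1):", xbar1.stdev)
print("P(sample mean > 54):", round(p_above_54, 4))
print("sd of sample mean (problem 2):", xbar2.stdev)
print("P(95 < sample mean < 105):", round(p_between, 4))
```

The normal-curve values (about 0.0228 and 0.6827) are slightly more precise than the empirical-rule figures of 2.5% and 68% quoted in the solutions, but they tell the same story.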

Calculating Variance and Standard Deviation

VARIANCE AND STANDARD DEVIATION

Recall that the range is the difference between the upper and lower limits of the data. While this is important, it does have one major disadvantage. It does not describe the variation among the variables. For instance, both of these sets of data have the same range, yet their values are definitely different.

90, 90, 90, 98, 90 (Range = 8)
1, 6, 8, 1, 9, 5 (Range = 8)

To better describe the variation, we will introduce two other measures of variation: variance and standard deviation (the variance is the square of the standard deviation). These measures tell us how much the actual values differ from the mean. The larger the standard deviation, the more spread out the values. The smaller the standard deviation, the less spread out the values. This measure is particularly helpful to teachers as they try to find whether their students’ scores on a certain test are closely related to the class average.

To find the standard deviation of a set of values:
a. Find the mean of the data
b. Find the difference (deviation) between each of the scores and the mean
c. Square each deviation
d. Sum the squares
e. Dividing by one less than the number of values, find the “mean” of this sum (the variance*)
f. Find the square root of the variance (the standard deviation)

*Note: In some books, the variance is found by dividing by n. In statistics it is more useful to divide by n - 1.

EXAMPLE Find the variance and standard deviation of the following scores on an exam: 92, 95, 85, 80, 75, 50

SOLUTION First we find the mean of the data:

$$\text{Mean} = \frac{92 + 95 + 85 + 80 + 75 + 50}{6} = \frac{477}{6} = 79.5$$

Then we find the difference between each score and the mean (deviation).
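
Steps a to f can be followed line by line in a short Python sketch using the exam scores from the example. The function name `sample_variance` is chosen here for illustration only.

```python
from math import sqrt

scores = [92, 95, 85, 80, 75, 50]


def sample_variance(values):
    """Follow steps a-e: mean, deviations, squares, sum, divide by n - 1."""
    mean = sum(values) / len(values)           # a. mean of the data
    deviations = [v - mean for v in values]    # b. deviation of each score
    squares = [d ** 2 for d in deviations]     # c. square each deviation
    total = sum(squares)                       # d. sum the squares
    return total / (len(values) - 1)           # e. divide by one less than n


variance = sample_variance(scores)
std_dev = sqrt(variance)                       # f. square root of the variance

print("mean:", sum(scores) / len(scores))
print("variance:", variance)
print("standard deviation:", round(std_dev, 2))
```

For these scores the squared deviations sum to 1317.5, so the variance is 1317.5 / 5 = 263.5 and the standard deviation is about 16.23.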

Binomial and Normal Distributions

Probability distributions

Introduction

What is a probability? If I perform n experiments and a particular event occurs on r occasions, the “relative frequency” of this event is simply r/n. This is an experimental observation that gives us an estimate of the probability; there will be some random variation above and below the actual probability. If I do a large number of experiments, the relative frequency gets closer to the probability. We define the probability as meaning the limit of the relative frequency as the number of experiments tends to infinity. Usually we can calculate the probability from theoretical considerations (number of beads in a bag, number of faces of a dice, tree diagram, any other kind of statistical model).

What is a random variable? A random variable (r.v.) is the value that might be obtained from some kind of experiment or measurement process in which there is some random uncertainty. A discrete r.v. takes a finite number of possible values with distinct steps between them. A continuous r.v. takes an infinite number of values which vary smoothly. We talk not of the probability of getting a particular value but of the probability that a value lies between certain limits. Random variables are given names which start with a capital letter. An r.v. is a numerical value (e.g. a head is not a value but the number of heads in 10 throws is an r.v.). E.g. the score when throwing a dice (discrete); the air temperature at a random time and date (continuous); the age of a cat chosen at random.
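
The idea that the relative frequency r/n settles towards the probability can be illustrated with a simulated die. The event (throwing a six), the seed and the numbers of throws below are arbitrary choices made for this sketch.

```python
import random


def relative_frequency(n, rng):
    """Throw a fair six-sided die n times and return r / n for the event 'a six'."""
    r = sum(1 for _ in range(n) if rng.randint(1, 6) == 6)
    return r / n


rng = random.Random(2024)  # arbitrary seed, used only for reproducibility
for n in (10, 100, 10_000, 100_000):
    print(f"n={n:>6}  relative frequency={relative_frequency(n, rng):.4f}")
print(f"theoretical probability={1 / 6:.4f}")
```

Small runs can land well away from 1/6, while the long runs should sit close to it, which is the behaviour the definition of probability as a limit describes.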

On Median and Quartile Sets of Ordered Random Variables*

ISSN 2590-9770. The Art of Discrete and Applied Mathematics 3 (2020) #P2.08. https://doi.org/10.26493/2590-9770.1326.9fd (Also available at http://adam-journal.eu)

On median and quartile sets of ordered random variables*

Iztok Banič, Faculty of Natural Sciences and Mathematics, University of Maribor, Koroška 160, SI-2000 Maribor, Slovenia, and Institute of Mathematics, Physics and Mechanics, Jadranska 19, SI-1000 Ljubljana, Slovenia, and Andrej Marušič Institute, University of Primorska, Muzejski trg 2, SI-6000 Koper, Slovenia

Janez Žerovnik, Faculty of Mechanical Engineering, University of Ljubljana, Aškerčeva 6, SI-1000 Ljubljana, Slovenia, and Institute of Mathematics, Physics and Mechanics, Jadranska 19, SI-1000 Ljubljana, Slovenia

Received 30 November 2018, accepted 13 August 2019, published online 21 August 2020

Abstract. We give new results about the set of all medians, the set of all first quartiles and the set of all third quartiles of a finite dataset. We also give new and interesting results about relationships between these sets. We also use these results to provide an elementary correctness proof of the Langford’s doubling method.

Keywords: Statistics, probability, median, first quartile, third quartile, median set, first quartile set, third quartile set.

Math. Subj. Class. (2020): 62-07, 60E05, 60-08, 60A05, 62A01

*This work was supported in part by the Slovenian Research Agency (grants J1-8155, N1-0071, P2-0248, and J1-1693). This work is licensed under https://creativecommons.org/licenses/by/4.0/

1 Introduction. Quantiles play a fundamental role in statistics: they are the critical values used in hypothesis testing and interval estimation. Often they are the characteristics of distributions we usually wish to estimate. The use of quantiles as primary measure of performance has