Lecture Notes 5)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Chapter 6 Continuous Random Variables and Probability

EF 507 Quantitative Methods for Economics and Finance, Fall 2019. Chapter 6: Continuous Random Variables and Probability Distributions.

Probability Distributions. Discrete probability distributions (Ch. 5): binomial, hypergeometric, Poisson. Continuous probability distributions (Ch. 6): uniform, normal, exponential.

Continuous Probability Distributions. A continuous random variable is a variable that can assume any value in an interval, for example: thickness of an item; time required to complete a task; temperature of a solution; height in inches. These can potentially take on any value, depending only on the ability to measure accurately.

Cumulative Distribution Function. The cumulative distribution function, $F(x)$, for a continuous random variable $X$ expresses the probability that $X$ does not exceed the value $x$: $F(x) = P(X \le x)$. Let $a$ and $b$ be two possible values of $X$, with $a < b$. The probability that $X$ lies between $a$ and $b$ is $P(a < X < b) = F(b) - F(a)$.

Probability Density Function. The probability density function, $f(x)$, of random variable $X$ has the following properties:

1. $f(x) > 0$ for all values of $x$.
2. The area under the probability density function $f(x)$ over all values of the random variable $X$ is equal to 1.0.
3. The probability that $X$ lies between two values is the area under the density function graph between the two values.
4. The cumulative distribution function $F(x_0)$ is the area under the probability density function $f(x)$ from the minimum $x$ value up to $x_0$: $F(x_0) = \int_{x_m}^{x_0} f(x)\,dx$, where -
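The relationship between the density and the cumulative distribution function described in this excerpt can be checked numerically. The sketch below is only an illustration: the exponential density with rate 1, the midpoint-rule integrator, and the names `pdf`, `integrate`, and `cdf` are assumptions made here, not part of the quoted slides.

```python
import math

def pdf(x, lam=1.0):
    """Exponential density; chosen purely as an illustrative f(x) (an assumption)."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

def integrate(f, lo, hi, n=100_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))

def cdf(x0, x_min=0.0):
    """F(x0): area under the density from the minimum x value up to x0."""
    return integrate(pdf, x_min, x0)

a, b = 0.5, 2.0
area_between = integrate(pdf, a, b)      # property 3: area between a and b
diff_of_cdfs = cdf(b) - cdf(a)           # P(a < X < b) = F(b) - F(a)
total_area = integrate(pdf, 0.0, 50.0)   # property 2: total area is (almost exactly) 1

print(area_between, diff_of_cdfs, total_area)
```

Under these assumptions the two ways of computing P(a < X < b) agree up to the integration error, and the total area is close to 1.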

Skewed Double Exponential Distribution and Its Stochastic Representation

EUROPEAN JOURNAL OF PURE AND APPLIED MATHEMATICS Vol. 2, No. 1, 2009, (1-20) ISSN 1307-5543 – www.ejpam.com. Skewed Double Exponential Distribution and Its Stochastic Representation. Keshav Jagannathan, Arjun K. Gupta ∗, and Truc T. Nguyen. Coastal Carolina University, Conway, South Carolina, U.S.A.; Bowling Green State University, Bowling Green, Ohio, U.S.A. (∗ Corresponding author: A. Gupta. © 2009 EJPAM. All rights reserved.)

Abstract. Definitions of the skewed double exponential (SDE) distribution in terms of a mixture of double exponential distributions as well as in terms of a scaled product of a c.d.f. and a p.d.f. of double exponential random variable are proposed. Its basic properties are studied. Multi-parameter versions of the skewed double exponential distribution are also given. Characterization of the SDE family of distributions and stochastic representation of the SDE distribution are derived.

AMS subject classifications: Primary 62E10, Secondary 62E15. Key words: Symmetric distributions, Skew distributions, Stochastic representation, Linear combination of random variables, Characterizations, Skew Normal distribution.

1. Introduction. The double exponential distribution was first published as Laplace’s first law of error in the year 1774 and stated that the frequency of an error could be expressed as an exponential function of the numerical magnitude of the error, disregarding sign. This distribution comes up as a model in many statistical problems. It is also considered in robustness studies, which suggests that it provides a model with different characteristics -

1 One Parameter Exponential Families

1 One parameter exponential families

The world of exponential families bridges the gap between the Gaussian family and general distributions. Many properties of Gaussians carry through to exponential families in a fairly precise sense.

• In the Gaussian world, there are exact small sample distributional results (i.e. $t$, $F$, $\chi^2$).
• In the exponential family world, there are approximate distributional results (i.e. deviance tests).
• In the general setting, we can only appeal to asymptotics.

A one-parameter exponential family, $\mathcal{F}$, is a one-parameter family of distributions of the form $P_\eta(dx) = \exp(\eta \cdot t(x) - \Lambda(\eta))\, P_0(dx)$ for some probability measure $P_0$. The parameter $\eta$ is called the natural or canonical parameter and the function $\Lambda$ is called the cumulant generating function, and is simply the normalization needed to make $f_\eta(x) = \frac{dP_\eta}{dP_0}(x) = \exp(\eta \cdot t(x) - \Lambda(\eta))$ a proper probability density. The random variable $t(X)$ is the sufficient statistic of the exponential family. Note that $P_0$ does not have to be a distribution on $\mathbb{R}$, but these are of course the simplest examples.

1.0.1 A first example: Gaussian with linear sufficient statistic

Consider the standard normal distribution $P_0(A) = \int_A \frac{e^{-z^2/2}}{\sqrt{2\pi}}\, dz$ and let $t(x) = x$. Then, the exponential family is $P_\eta(dx) \propto \frac{e^{\eta \cdot x - x^2/2}}{\sqrt{2\pi}}\, dx$ and we see that $\Lambda(\eta) = \eta^2/2$.

    import numpy as np
    import matplotlib.pyplot as plt

    # Plot the cumulant generating function Lambda(eta) = eta^2 / 2
    eta = np.linspace(-2, 2, 101)
    CGF = eta**2 / 2.
    plt.plot(eta, CGF)
    A = plt.gca()
    A.set_xlabel(r'$\eta$', size=20)
    A.set_ylabel(r'$\Lambda(\eta)$', size=20)
    f = plt.gcf()

Thus, the exponential family in this setting is the collection $\mathcal{F} = \{N(\eta, 1) : \eta \in \mathbb{R}\}$.

1.0.2 Normal with quadratic sufficient statistic on $\mathbb{R}^d$

As a second example, take $P_0 = N(0, I_{d \times d})$, i.e. -
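For the Gaussian example with $t(x) = x$ in this excerpt, the claim $\Lambda(\eta) = \eta^2/2$ can be checked by computing the normalizing integral directly. The following is a minimal sketch using only the standard library; the helper names `std_normal_pdf` and `cgf_numeric`, the integration range, and the grid size are assumptions made for illustration.

```python
import math

def std_normal_pdf(z):
    """Density of the reference measure P0 = N(0, 1)."""
    return math.exp(-z * z / 2.0) / math.sqrt(2.0 * math.pi)

def cgf_numeric(eta, lo=-12.0, hi=12.0, n=20_000):
    """Lambda(eta) = log of the integral of exp(eta * t(x)) dP0(x), with t(x) = x.

    The integral is approximated with the midpoint rule on [lo, hi].
    """
    h = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * h
        total += math.exp(eta * x) * std_normal_pdf(x)
    return math.log(h * total)

etas = [-2.0, -1.0, 0.0, 0.5, 2.0]
# Largest deviation between the numerical value and the closed form eta^2 / 2
max_gap = max(abs(cgf_numeric(e) - e * e / 2.0) for e in etas)
print(max_gap)
```

The gap should be negligible, which is consistent with $\Lambda$ being exactly the normalization that makes $f_\eta$ integrate to one against $P_0$.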

Fan Chart: Methodology and Its Application to Inflation Forecasting in India

W P S (DEPR) : 5 / 2011 RBI WORKING PAPER SERIES Fan Chart: Methodology and its Application to Inflation Forecasting in India Nivedita Banerjee and Abhiman Das DEPARTMENT OF ECONOMIC AND POLICY RESEARCH MAY 2011 The Reserve Bank of India (RBI) introduced the RBI Working Papers series in May 2011. These papers present research in progress of the staff members of RBI and are disseminated to elicit comments and further debate. The views expressed in these papers are those of authors and not that of RBI. Comments and observations may please be forwarded to authors. Citation and use of such papers should take into account its provisional character. Copyright: Reserve Bank of India 2011 Fan Chart: Methodology and its Application to Inflation Forecasting in India Nivedita Banerjee 1 (Email) and Abhiman Das (Email) Abstract Ever since Bank of England first published its inflation forecasts in February 1996, the Fan Chart has been an integral part of inflation reports of central banks around the world. The Fan Chart is basically used to improve presentation: to focus attention on the whole of the forecast distribution, rather than on small changes to the central projection. However, forecast distribution is a technical concept originated from the statistical sampling distribution. In this paper, we have presented the technical details underlying the derivation of Fan Chart used in representing the uncertainty in inflation forecasts. The uncertainty occurs because of the inter-play of the macro-economic variables affecting inflation. The uncertainty in the macro-economic variables is based on their historical standard deviation of the forecast errors, but we also allow these to be subjectively adjusted. -

Basic Econometrics / Statistics Statistical Distributions: Normal, T, Chi-Sq, & F

Basic Econometrics / Statistics. Statistical Distributions: Normal, T, Chi-Sq, & F. Course: Basic Econometrics: HC43 / Statistics, B.A. Hons Economics, Semester IV / Semester III, Delhi University. Course Instructor: Siddharth Rathore, Assistant Professor, Economics Department, Gargi College.

APPENDIX C: SOME IMPORTANT PROBABILITY DISTRIBUTIONS. In Appendix B we noted that a random variable (r.v.) can be described by a few characteristics, or moments, of its probability function (PDF or PMF), such as the expected value and variance. This, however, presumes that we know the PDF of that r.v., which is a tall order since there are all kinds of random variables. In practice, however, some random variables occur so frequently that statisticians have determined their PDFs and documented their properties. For our purpose, we will consider only those PDFs that are of direct interest to us. But keep in mind that there are several other PDFs that statisticians have studied which can be found in any standard statistics textbook. In this appendix we will discuss the following four probability distributions: 1. The normal distribution 2. The t distribution 3. The chi-square ($\chi^2$) distribution 4. The F distribution. These probability distributions are important in their own right, but for our purposes they are especially important because they help us to find out the probability distributions of estimators (or statistics), such as the sample mean and sample variance. Recall that estimators are random variables. Equipped with that knowledge, we will be able to draw inferences about their true population values. -

Finding Basic Statistics Using Minitab 1. Put Your Data Values in One of the Columns of the Minitab Worksheet

Finding Basic Statistics Using Minitab

1. Put your data values in one of the columns of the Minitab worksheet.
2. Add a variable name in the gray box just above the data values.
3. Click on “Stat”, then click on “Basic Statistics”, and then click on “Display Descriptive Statistics”.
4. Choose the variable you want the basic statistics for and click on “Select”.
5. Click on the “Statistics” box and then check the box next to each statistic you want to see, and uncheck the boxes next to those you do not want to see.
6. Click on “OK” in that window and click on “OK” in the next window.
7. The values of all the statistics you selected will appear in the Session window.

Example (Navidi & Monk, Elementary Statistics, 2nd edition, #31 p. 143, 1st 4 columns): This data gives the number of tribal casinos in a sample of 16 states.

3 7 14 114 2 3 7 8 26 4 3 14 70 3 21 1

Open Minitab and enter the data under C1. The table below shows a portion of the entered data.

| Row | C1 (Casinos) |
|---|---|
| 1 | 3 |
| 2 | 7 |
| 3 | 14 |
| 4 | 114 |
| 5 | 2 |
| 6 | 3 |
| 7 | 7 |
| 8 | 8 |
| 9 | 26 |
| 10 | 4 |

Now click on “Stat” and then choose “Basic Statistics” and “Display Descriptive Statistics”. Click in the box under “Variables:”, choose C1 from the window at left, and then click on the “Select” button. Next click on the “Statistics” button and choose which statistics you want to find. We will usually be interested in the following statistics: mean, standard error of the mean, standard deviation, minimum, maximum, range, first quartile, median, third quartile, interquartile range, mode, and skewness. -
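As a cross-check outside Minitab, several of the listed statistics can be computed for the casino data with Python's standard `statistics` module. This is only a sketch: the variable names are made up here, and quartiles, interquartile range, and skewness are left out because quartile conventions vary between software packages, so the values might not match Minitab's.

```python
import statistics

# Number of tribal casinos in the sample of 16 states (data from the example)
casinos = [3, 7, 14, 114, 2, 3, 7, 8, 26, 4, 3, 14, 70, 3, 21, 1]

n = len(casinos)
mean = statistics.mean(casinos)
stdev = statistics.stdev(casinos)      # sample standard deviation (divides by n - 1)
se_mean = stdev / n ** 0.5             # standard error of the mean
minimum, maximum = min(casinos), max(casinos)
data_range = maximum - minimum
median = statistics.median(casinos)
mode = statistics.mode(casinos)

print(n, mean, median, mode, minimum, maximum, data_range)
print(round(stdev, 3), round(se_mean, 3))
```

The mean (18.75) is far above the median (7) because of the two large values 70 and 114, which is the kind of right skew the skewness statistic is meant to flag.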

Lecture 2 — September 24 2.1 Recap 2.2 Exponential Families

STATS 300A: Theory of Statistics, Fall 2015. Lecture 2, September 24. Lecturer: Lester Mackey. Scribe: Stephen Bates and Andy Tsao.

2.1 Recap. Last time, we set out on a quest to develop optimal inference procedures and, along the way, encountered an important pair of assertions: not all data is relevant, and irrelevant data can only increase risk and hence impair performance. This led us to introduce a notion of lossless data compression (sufficiency): $T$ is sufficient for $\mathcal{P}$ with $X \sim P_\theta \in \mathcal{P}$ if $X \mid T(X)$ is independent of $\theta$. How far can we take this idea? At what point does compression impair performance? These are questions of optimal data reduction. While we will develop general answers to these questions in this lecture and the next, we can often say much more in the context of specific modeling choices. With this in mind, let's consider an especially important class of models known as the exponential family models.

2.2 Exponential Families. Definition 1. The model $\{P_\theta : \theta \in \Omega\}$ forms an $s$-dimensional exponential family if each $P_\theta$ has density of the form:

$$p(x; \theta) = \exp\left(\sum_{i=1}^{s} \eta_i(\theta) T_i(x) - B(\theta)\right) h(x)$$

• $\eta_i(\theta) \in \mathbb{R}$ are called the natural parameters.
• $T_i(x) \in \mathbb{R}$ are its sufficient statistics, which follows from NFFC.
• $B(\theta)$ is the log-partition function because it is the logarithm of a normalization factor: $B(\theta) = \log\left(\int \exp\left(\sum_{i=1}^{s} \eta_i(\theta) T_i(x)\right) h(x)\, d\mu(x)\right) \in \mathbb{R}$
• $h(x) \in \mathbb{R}$: base measure. -
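To make Definition 1 concrete, the sketch below writes one familiar model in the form $\exp(\eta(\theta) T(x) - B(\theta))\, h(x)$. The Poisson family is an illustrative choice made here (it is not taken from the quoted notes): with $s = 1$, $\eta(\theta) = \log\theta$, $T(x) = x$, $B(\theta) = \theta$, and $h(x) = 1/x!$ with respect to counting measure. The function names are hypothetical.

```python
import math

def poisson_pmf(x, lam):
    """Textbook Poisson probability mass function."""
    return lam ** x * math.exp(-lam) / math.factorial(x)

def exp_family_density(x, theta):
    """Same pmf written as exp(eta(theta) * T(x) - B(theta)) * h(x)."""
    eta = math.log(theta)            # natural parameter eta(theta)
    T = x                            # sufficient statistic T(x)
    B = theta                        # log-partition function B(theta)
    h = 1.0 / math.factorial(x)      # h(x), density w.r.t. counting measure
    return math.exp(eta * T - B) * h

lam = 3.5
max_gap = max(abs(poisson_pmf(x, lam) - exp_family_density(x, lam)) for x in range(30))

# B(theta) as the log of the normalization factor: log sum_x exp(eta * x) h(x)
log_partition = math.log(
    sum(math.exp(math.log(lam) * x) / math.factorial(x) for x in range(60))
)
print(max_gap, log_partition)
```

Here the integral against $\mu$ in the definition of $B(\theta)$ becomes a sum over $x = 0, 1, 2, \ldots$, truncated at 60 terms, and it recovers $B(\theta) = \theta$ to high accuracy.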

Statistical Inference

GU4204: Statistical Inference. Bodhisattva Sen, Columbia University. February 27, 2020.

Contents

1 Introduction (1.1 Statistical Inference: Motivation; 1.2 Recap: Some results from probability; 1.3 Back to Example 1.1; 1.4 Delta method; 1.5 Back to Example 1.1)

2 Statistical Inference: Estimation (2.1 Statistical model; 2.2 Method of Moments estimators)

3 Method of Maximum Likelihood (3.1 Properties of MLEs: 3.1.1 Invariance, 3.1.2 Consistency; 3.2 Computational methods for approximating MLEs: 3.2.1 Newton's Method, 3.2.2 The EM Algorithm)

4 Principles of estimation (4.1 Mean squared error; 4.2 Comparing estimators; 4.3 Unbiased estimators; 4.4 Sufficient Statistics)

5 Bayesian paradigm (5.1 Prior distribution; 5.2 Posterior distribution; 5.3 Bayes Estimators; 5.4 Sampling from a normal distribution)

6 The sampling distribution of a statistic (6.1 The gamma and the $\chi^2$ distributions: 6.1.1 The gamma distribution, 6.1.2 The Chi-squared distribution; 6.2 Sampling from a normal population; 6.3 The t-distribution)

7 Confidence intervals

8 The (Cramer-Rao) Information Inequality

9 Large Sample Properties of the MLE

10 Hypothesis Testing (10.1 Principles of Hypothesis Testing; 10.2 Critical regions and test statistics; 10.3 Power function and types of error; 10.4 Significance level -

Likelihood-Based Inference

Likelihood-based inference. Patrick Breheny, September 29. Survival Data Analysis (BIOS 7210). Outline: The score statistic (univariate, multivariate); Inference; Exponential distribution example.

Introduction. In previous lectures, we constructed and plotted likelihoods and used them informally to comment on likely values of parameters. Our goal for today is to make this more rigorous, in terms of quantifying coverage and type I error rates for various likelihood-based approaches to inference. With the exception of extremely simple cases such as the two-sample exponential model, exact derivation of these quantities is typically unattainable for survival models, and we must rely on asymptotic likelihood arguments.

The score statistic. Likelihoods are typically easier to work with on the log scale (where products become sums); furthermore, since it is only relative comparisons that matter with likelihoods, it is more meaningful to work with derivatives than the likelihood itself. Thus, we often work with the derivative of the log-likelihood, which is known as the score, and often denoted $U$:

$$U_X(\theta) = \frac{d}{d\theta} \ell(\theta \mid X)$$

Note that $U$ is a random variable, as it depends on $X$, and that $U$ is a function of $\theta$. For independent observations, the score of the entire sample is the sum of the scores for the individual observations:

$$U = \sum_i U_i$$

-
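The definition of the score and its additivity over independent observations can be illustrated with a small numerical sketch. The setting below is an assumption made for illustration, not the lecture's own example: an uncensored exponential sample with rate `lam`, log-likelihood $\ell(\lambda) = \sum_i (\log\lambda - \lambda x_i)$, and made-up data values.

```python
import math

def log_lik(lam, xs):
    """Log-likelihood of an uncensored exponential sample with rate lam."""
    return sum(math.log(lam) - lam * x for x in xs)

def score_i(lam, x):
    """Score contribution of one observation: d/d(lam) of (log lam - lam * x)."""
    return 1.0 / lam - x

def score(lam, xs):
    """Score of the whole sample: the sum of the individual scores."""
    return sum(score_i(lam, x) for x in xs)

xs = [0.8, 2.1, 0.3, 1.7, 0.9]   # hypothetical observations
lam = 0.7

# Central finite difference of the log-likelihood, to compare with the score
eps = 1e-6
numeric_derivative = (log_lik(lam + eps, xs) - log_lik(lam - eps, xs)) / (2 * eps)

# The score is zero at the maximum likelihood estimate n / sum(x)
lam_hat = len(xs) / sum(xs)

print(score(lam, xs), numeric_derivative, score(lam_hat, xs))
```

In this sketch the analytic score matches the numerical derivative, and it vanishes at `lam_hat`, which is what makes the score useful for locating the maximum of the likelihood.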

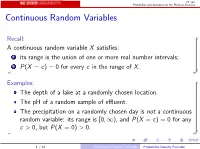

Continuous Random Variables

ST 380 Probability and Statistics for the Physical Sciences. Continuous Random Variables.

Recall: A continuous random variable $X$ satisfies: (1) its range is the union of one or more real number intervals; (2) $P(X = c) = 0$ for every $c$ in the range of $X$. Examples: the depth of a lake at a randomly chosen location; the pH of a random sample of effluent. The precipitation on a randomly chosen day is not a continuous random variable: its range is $[0, \infty)$, and $P(X = c) = 0$ for any $c > 0$, but $P(X = 0) > 0$.

Probability Density Function: Discretized Data. Suppose that we measure the depth of the lake, but round the depth off to some unit. The rounded value $Y$ is a discrete random variable; we can display its probability mass function as a bar graph, because each mass actually represents an interval of values of $X$. In R: source("discretize.R"); discretize(0.5). As the rounding unit becomes smaller, the bar graph more accurately represents the continuous distribution: discretize(0.25); discretize(0.1). When the rounding unit is very small, the bar graph approximates a smooth function: discretize(0.01); plot(f, from = 1, to = 5). The probability that $X$ is between two values $a$ and $b$, $P(a \le X \le b)$, can be approximated by $P(a \le Y \le b)$. -
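The rounding argument can be sketched numerically. The contents of `discretize.R` and the lake-depth density are not shown in the excerpt, so everything below is an assumption: a normal distribution with mean 3 and standard deviation 0.5 stands in for $X$, and `pmf_rounded` and `prob_y_between` are hypothetical helpers.

```python
import math

def cdf(x, mu=3.0, sigma=0.5):
    """CDF of the stand-in continuous variable X (a normal; an assumption)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def pmf_rounded(unit, lo=0.0, hi=6.0):
    """PMF of Y = X rounded to the nearest multiple of `unit`.

    The mass at grid point k * unit is the probability that X falls in the
    interval of width `unit` centred on that point. Keys are the integers k.
    """
    k_lo, k_hi = round(lo / unit), round(hi / unit)
    return {k: cdf((k + 0.5) * unit) - cdf((k - 0.5) * unit)
            for k in range(k_lo, k_hi + 1)}

def prob_y_between(a, b, unit):
    """P(a <= Y <= b): total mass on grid points inside [a, b]."""
    tol = 1e-9  # guards against floating-point error in k * unit
    return sum(p for k, p in pmf_rounded(unit).items()
               if a - tol <= k * unit <= b + tol)

a, b = 2.5, 3.5
exact = cdf(b) - cdf(a)  # P(a <= X <= b)
errors = {unit: abs(prob_y_between(a, b, unit) - exact)
          for unit in (0.5, 0.25, 0.1, 0.01)}
for unit, err in errors.items():
    print(unit, round(err, 4))
```

Under these assumptions the approximation error shrinks as the rounding unit decreases, because the bars at the two endpoints each contribute an extra half-unit of width.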

Chapter 10 “Some Continuous Distributions”.Pdf

CHAPTER 10 Some continuous distributions

10.1. Examples of continuous random variables

We look at some other continuous random variables besides normals.

Uniform distribution. A continuous random variable has uniform distribution if its density is $f(x) = 1/(b - a)$ if $a \le x \le b$ and 0 otherwise. For a random variable $X$ with uniform distribution its expectation is

$$EX = \frac{1}{b - a} \int_a^b x\, dx = \frac{a + b}{2}.$$

Exponential distribution. A continuous random variable has exponential distribution with parameter $\lambda > 0$ if its density is $f(x) = \lambda e^{-\lambda x}$ if $x \ge 0$ and 0 otherwise. Suppose $X$ is a random variable with an exponential distribution with parameter $\lambda$. Then we have

$$P(X > a) = \int_a^\infty \lambda e^{-\lambda x}\, dx = e^{-\lambda a}, \qquad (10.1.1)$$

$$F_X(a) = 1 - P(X > a) = 1 - e^{-\lambda a},$$

and we can use integration by parts to see that $EX = 1/\lambda$, $\operatorname{Var} X = 1/\lambda^2$. Examples where an exponential random variable is a good model are the length of a telephone call, the length of time before someone arrives at a bank, and the length of time before a light bulb burns out.

Exponentials are memoryless, that is, $P(X > s + t \mid X > t) = P(X > s)$, or given that the light bulb has burned 5 hours, the probability it will burn 2 more hours is the same as the probability a new light bulb will burn 2 hours. Here is how we can prove this:

$$P(X > s + t \mid X > t) = \frac{P(X > s + t)}{P(X > t)} = \frac{e^{-\lambda(s + t)}}{e^{-\lambda t}} = e^{-\lambda s} = P(X > s),$$

where we used Equation (10.1.1) for $a = t$ and $a = s + t$. -
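The memoryless property and the moment formulas in this excerpt can be checked with a few lines of code. The sketch below is illustrative only: the rate `lam = 0.1` per hour is a made-up value for the light bulb example, and the midpoint-rule integrator stands in for the integration by parts mentioned in the text.

```python
import math

def survival(a, lam):
    """P(X > a) = exp(-lam * a) for a >= 0, as in Equation (10.1.1)."""
    return math.exp(-lam * a)

def conditional_survival(s, t, lam):
    """P(X > s + t | X > t) = P(X > s + t) / P(X > t)."""
    return survival(s + t, lam) / survival(t, lam)

def midpoint(f, lo, hi, n=200_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))

lam = 0.1                            # hypothetical failure rate per hour
already_burned, extra = 5.0, 2.0     # the light bulb example: 5 hours, 2 more

p_used_bulb = conditional_survival(extra, already_burned, lam)
p_new_bulb = survival(extra, lam)

def density(x):
    return lam * math.exp(-lam * x)

# Numerical moments; the upper limit 400 makes the neglected tail negligible
mean = midpoint(lambda x: x * density(x), 0.0, 400.0)
second_moment = midpoint(lambda x: x * x * density(x), 0.0, 400.0)
variance = second_moment - mean ** 2

print(p_used_bulb, p_new_bulb, mean, variance)
```

Both probabilities equal $e^{-\lambda s}$, and the numerical mean and variance agree with $1/\lambda$ and $1/\lambda^2$ up to integration error.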

Random Variables and Applications

Random Variables and Applications. OPRE 6301.

Random Variables. As noted earlier, variability is omnipresent in the business world. To model variability probabilistically, we need the concept of a random variable. A random variable is a numerically valued variable which takes on different values with given probabilities. Examples: the return on an investment in a one-year period; the price of an equity; the number of customers entering a store; the sales volume of a store on a particular day; the turnover rate at your organization next year.

Types of Random Variables. Discrete Random Variable: one that takes on a countable number of possible values, e.g., total of roll of two dice: 2, 3, ..., 12; number of desktops sold: 0, 1, ...; customer count: 0, 1, .... Continuous Random Variable: one that takes on an uncountable number of possible values, e.g., interest rate: 3.25%, 6.125%, ...; task completion time: a nonnegative value; price of a stock: a nonnegative value. Basic Concept: Integer or rational numbers are discrete, while real numbers are continuous.

Probability Distributions. “Randomness” of a random variable is described by a probability distribution. Informally, the probability distribution specifies the probability or likelihood for a random variable to assume a particular value. Formally, let $X$ be a random variable and let $x$ be a possible value of $X$. Then, we have two cases. Discrete: the probability mass function of $X$ specifies $P(x) \equiv P(X = x)$ for all possible values of $x$. Continuous: the probability density function of $X$ is a function $f(x)$ that is such that $f(x) \cdot h \approx P(x < X \le x + h)$ for small positive $h$.
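The approximation $f(x) \cdot h \approx P(x < X \le x + h)$ can be seen numerically by shrinking $h$. The sketch below assumes a standard normal $X$ purely for illustration; the evaluation point `x = 0.7` and the helper names are arbitrary choices.

```python
import math

def pdf(x):
    """Standard normal density (an illustrative choice of f)."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)

def cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

x = 0.7
relative_gaps = {}
for h in (0.5, 0.1, 0.01, 0.001):
    exact = cdf(x + h) - cdf(x)      # P(x < X <= x + h)
    approx = pdf(x) * h              # f(x) * h
    relative_gaps[h] = abs(approx - exact) / exact

for h, gap in relative_gaps.items():
    print(h, gap)
```

The relative gap falls roughly in proportion to $h$, which is the sense in which the density describes probability per unit length near $x$.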