SITAC Consortium

Recommended publications

Guidelines for Securing Radio Frequency Identification (RFID) Systems

NIST Special Publication 800-98. Guidelines for Securing Radio Frequency Identification (RFID) Systems: Recommendations of the National Institute of Standards and Technology. Tom Karygiannis, Bernard Eydt, Greg Barber, Lynn Bunn, Ted Phillips. Computer Security. Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899-8930. April 2007. US Department of Commerce: Carlos M. Gutierrez, Secretary. Technology Administration: Robert C. Cresanti, Under Secretary of Commerce for Technology. National Institute of Standards and Technology: William Jeffrey, Director.

Reports on Computer Systems Technology. The Information Technology Laboratory (ITL) at the National Institute of Standards and Technology (NIST) promotes the US economy and public welfare by providing technical leadership for the nation’s measurement and standards infrastructure. ITL develops tests, test methods, reference data, proof of concept implementations, and technical analysis to advance the development and productive use of information technology. ITL’s responsibilities include the development of technical, physical, administrative, and management standards and guidelines for the cost-effective security and privacy of sensitive unclassified information in Federal computer systems. Special Publication 800-series documents report on ITL’s research, guidelines, and outreach efforts in computer security and its collaborative activities with industry, government, and academic organizations. National Institute of Standards and Technology Special Publication 800-98 Natl.

Final Thesis

Università degli Studi di Torino, Faculty of Economics, Degree Course in Business Economics and Management. Degree thesis: Innovative Technological Devices for Better Consumer Information in the Agri-Food Sector: A Study at the Salone del Gusto 2010. Supervisor: Prof. Giovanni Peira. Co-supervisors: Prof. Luigi Bollani, Dott. Sergio Arnoldi. Candidate: Andrea Gino Sferrazza. Academic year 2010/2011.

Acknowledgements. For the writing of this work I thank: - Prof. Giovanni Peira of the Department of Commodity Sciences, for following and helping me throughout this work, providing part of the study material, pointing me to several people who were crucial to its successful completion, and for his complete availability during these months of research; - Prof. Luigi Bollani of the "Diego de Castro" Department of Statistics and Applied Mathematics, for his fundamental contribution to the design and processing of the questionnaire administered during the Salone del Gusto 2010; - Dott. Sergio Arnoldi, coordinator of the Agri-Food Promotion Area of the Turin Chamber of Commerce, who made valuable time and resources available for this research project, always with great professionalism and competence; - Dott. Alessandro Bonadonna of the Department of Commodity Sciences, for providing valuable ideas for the discussion; - all those who supported me in drafting and processing the questionnaire, making it possible to reach the set objectives within the planned time frame.

Special thanks. My first thanks go to my mother, the only person who has truly accompanied me at every moment of my studies. Without her, I could not have reached this important milestone. I share this result with you.

3 Security Threats for RFID Systems

FIDIS: Future of Identity in the Information Society. Title: “D3.7 A Structured Collection on Information and Literature on Technological and Usability Aspects of Radio Frequency Identification (RFID)”. Author: WP3. Editors: Martin Meints (ICPP). Reviewers: Jozef Vyskoc (VaF), Sandra Steinbrecher (TUD). Identifier: D3.7. Type: [Template]. Version: 1.0. Date: Monday, 04 June 2007. Status: [Deliverable]. Class: [Public]. File: fidis-wp3-del3.7.literature_RFID.doc

Summary. This deliverable introduces and describes the physical properties of RFID, the types of RFID systems based on those physical properties, and operational aspects of RFID systems. It gives an overview of currently known security threats for RFID systems, countermeasures, and related cost aspects, followed by a brief overview of current areas of application for RFID. To shed light on the status quo and development trends in the private sector, the results of a study carried out in Germany in 2004 and 2005 are summarised. This is followed by an overview of relevant RFID standards. The deliverable also includes a bibliography of relevant RFID literature, which is published in the bibliographic system at http://www.fidis.net/interactive/rfid-bibliography/

Copyright © 2004-07 by the FIDIS consortium - EC Contract No. 507512. The FIDIS NoE receives research funding from the Community’s Sixth Framework Program. Copyright Notice: This document may not be copied, reproduced, or modified in whole or in part for any purpose without written permission from the FIDIS Consortium.

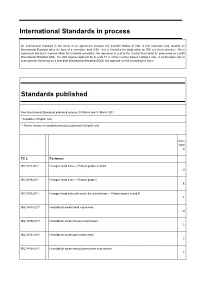

ISO Update March 2011

International Standards in process

An International Standard is the result of an agreement between the member bodies of ISO. A first important step towards an International Standard takes the form of a committee draft (CD), which is circulated for study within an ISO technical committee. When consensus has been reached within the technical committee, the document is sent to the Central Secretariat for processing as a draft International Standard (DIS). The DIS requires approval by at least 75 % of the member bodies casting a vote. A confirmation vote is subsequently carried out on a final draft International Standard (FDIS), the approval criteria remaining the same.

Standards published

New International Standards published between 01 March and 31 March 2011 (* available in English only; ** French version of standard previously published in English only). The letter in parentheses after each title is the price group.

TC 2 Fasteners
- ISO 4014:2011 Hexagon head bolts — Product grades A and B (G)
- ISO 4016:2011 Hexagon head bolts — Product grade C (E)
- ISO 8765:2011 Hexagon head bolts with metric fine pitch thread — Product grades A and B (F)
- ISO 14579:2011 Hexalobular socket head cap screws (D)
- ISO 14580:2011 Hexalobular socket cheese head screws (C)
- ISO 14583:2011 Hexalobular socket pan head screws (C)
- ISO 14584:2011 Hexalobular socket raised countersunk head screws (C)
- ISO 10510:2011 Tapping screw and washer assemblies with plain washers (B)

TC 4 Rolling bearings
- ISO 15:2011 Rolling bearings — Radial bearings — Boundary dimensions, general plan (K)

TC 17 Steel
- ISO 10893-1:2011 Non-destructive testing of steel

A Hybrid Traceability Technology Selection Approach for Sustainable Food Supply Chains

Sustainability, Article. A Hybrid Traceability Technology Selection Approach for Sustainable Food Supply Chains. Samantha Islam 1,*, Louise Manning 2 and Jonathan M. Cullen 1. 1 Energy, Fluids and Turbomachinery Division, Department of Engineering, University of Cambridge, Cambridge CB2 1PZ, UK; [email protected] 2 School of Agriculture, Food and the Environment, Royal Agricultural University, Gloucestershire GL7 6JS, UK; [email protected] * Correspondence: [email protected]

Abstract: Traceability technologies have great potential to improve sustainable performance in cold food supply chains by reducing food loss. In existing approaches, traceability technologies are selected either intuitively or through a random approach that neither considers the trade-off between multiple cost–benefit technology criteria nor systematically translates user requirements for traceability systems into the selection process. This paper presents a hybrid approach combining the fuzzy Analytic Hierarchy Process (AHP) and Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) with integer linear programming to select the optimum traceability technologies for improving sustainable performance in cold food supply chains. The proposed methodology is applied in four case studies utilising data collected from literature and expert interviews. The proposed approach can assist decision-makers, e.g., food business operators and technology companies, to identify what combination of technologies best suits a given food supply chain scenario and reduces food loss at minimum cost.

Keywords: cold food chain; traceability technology; technology selection; fuzzy AHP; fuzzy TOPSIS; integer linear programming

Citation: Islam, S.; Manning, L.; Cullen, J.M. A Hybrid Traceability Technology Selection Approach for Sustainable Food Supply Chains. Sustainability 2021, 13, 9385.

RFID for the Company Soluciones G Cuatro del Ecuador Cia

Escuela Politécnica Nacional, Faculty of Electrical and Electronic Engineering. Design of an Access Control System Using RFID Identification Technology for the Company Soluciones G Cuatro del Ecuador Cía. Ltda. Project submitted prior to obtaining the degree of Engineer in Electronics and Information Networks. Pablo Walter Pupiales Angamarca [email protected] Director: Ing. Nelson Avila [email protected] Quito, July 2009.

Declaration. I, Pablo Walter Pupiales Angamarca, declare under oath that the work described here is my own; that it has not previously been submitted for any degree or professional qualification; and that I have consulted the bibliographic references included in this document. Through this declaration I assign my intellectual property rights in this work to the Escuela Politécnica Nacional, as established by the Intellectual Property Law, its Regulations, and the institutional rules in force. Pablo Pupiales A.

Certification. I certify that this work was carried out by Pablo Walter Pupiales Angamarca under my supervision. Ing. Nelson Avila, Project Director.

Dedication. To my beloved parents Teodoro and Rosa, who have supported me unconditionally in everything and have always followed my successes and failures, my joys and sorrows; to them, who have always set me a good example of self-improvement, I dedicate this important triumph with all my love. To you, Consuelo, my sister and second mother, who have always looked after me: I love you very much. To my other brothers Alberto, Serafín, Segundo and Edwin, who have been an example of self-improvement and a guide for me.

Reflective-Physically Unclonable Function Based System for Anti

Reflective-Physically Unclonable Function based System for Anti-Counterfeiting. Dissertation approved by the Faculty of Electrical Engineering and Information Technology of the Karlsruhe Institute of Technology (KIT) for the academic degree of Doktor-Ingenieur, by M.Sc. Harsha Umesh Babu, born in Bangalore. Date of oral examination: 10 December 2013. Main referee: Prof. Dr. rer. nat. Wilhelm Stork. Co-referee: Prof. Dr. rer. nat. Cornelius Neumann. Karlsruhe, January 20, 2014.

To my friends and family.

Declaration. I hereby declare that I wrote my doctoral dissertation on my own and that I have followed the regulations relating to good scientific practice of the Karlsruhe Institute of Technology (KIT) in its latest form. I have not used any unacknowledged sources or means and I have marked all references I used literally or by content. This work has not previously been presented in an identical or similar form to any other university or examination board. Karlsruhe, 12 November 2013. Harsha Umesh Babu

Acknowledgments. Although this appears at the beginning of the document, I would like to emphasize that it was written at the very end of this wonderful journey. The simple reason I put off writing this section is that I do not know how to appropriately express my gratitude to all the people who have helped me reach this stage in life. I do not want to sound dutiful in acknowledging their help and influence while at the same time inadvertently forgetting to mention names. I would like to express my sincere thanks to Prof. Wilhelm Stork and my master thesis supervisor Prof. K.-D.

TR 187 020 V1.1.1 (2011-05) Technical Report

ETSI TR 187 020 V1.1.1 (2011-05) Technical Report. Radio Frequency Identification (RFID); Coordinated ESO response to Phase 1 of EU Mandate M436.

Reference: DTR/TISPAN-07044. Keywords: privacy, RFID, security. ETSI, 650 Route des Lucioles, F-06921 Sophia Antipolis Cedex - FRANCE. Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Non-profit association registered at the Sous-Préfecture de Grasse (06) N° 7803/88.

Important notice. Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: http://portal.etsi.org/chaircor/ETSI_support.asp

Copyright Notification. No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media.

New NEN Standards

NEN: New standards. Overview of new publications of the Nederlands Normalisatie-Instituut, including European standards, for the period 3 September 2003 to 7 October 2003. Prices for standards originating from ETSI in the 'Telecommunications' section are available on request. The standards can be ordered from NEN-Klantenservice (customer service), tel. 015-2690391. They can also be ordered via the Internet (www.nen.nl). Questions can be addressed to NEN-Klantenservice, tel. 015-2690391. Postal address: postbus 5059, 2600 GB Delft.

Construction
- NEN-EN 312:2003 – Particleboards; Specifications.
- NEN-EN 1304:2003 Draft – Clay roofing tiles and fittings; Definitions and product specifications. (en). Comments due by 2003-12-24. Price € 23,85.
- NEN-EN 1337-10:2003 – Bearings for structural and civil engineering applications; Part 10: Inspection and maintenance. (en). Price € 40,28.
- NEN-EN 1744-4:2003 2nd Draft – Tests for chemical properties of aggregates; Part 4: Determination of the water susceptibility of fillers for bituminous mixtures. (en). Comments due by 2003-11-21. Price € 19,08.
- NEN-EN 12101-4:2003 2nd Draft – Smoke and heat control systems; Part 4: Composition of systems for smoke and heat control
- …lersystemen; Design, installation and maintenance. (en). Price € 111,83.
- NEN-EN 13036-4:2003 – Surface characteristics of road and airfield pavements; Test methods; Part 4: Method for measuring the skid resistance of a surface; The pendulum test. (en). Price € 50,35.
- NEN-EN 13036-7:2003 – Surface characteristics of road and airfield pavements; Test methods; Part 7: Measurement of the evenness of a pavement surface with a straightedge. (en). Price € 40,28.
- NEN-EN 13363-1:2003 – Solar protection devices combined with glazing; Calculation of solar and light transmittance; Part 1:

Merlin Partner User Guide

Merlin Partner User Guide. Merlin - Version 1.18. Enterprise Europe Network. Merlin User Guide - updated 28 April 2015.

Table of contents

1. Introduction: About Merlin; About this guide; Accessing Merlin; Merlin Logout; Browser Optimisation; What if I need help?; Icon Legend; Navigating Merlin; Exporting your Content; The Dashboards; Using the Filters; Clear Filters; Working with Technology, Market and NACE Keywords; When should I use different levels of keywords?; How do I select keywords in Merlin?; Where can I find a current list of Technology, Market and NACE keywords?; Who to Contact; Feedback

2. Change History: New features; Changes that impact your work; Features under development

3. Managing Profiles: Profile Workflow Overview; Profile creation; Internal Review Process; External Review Process; Blocking Scores; Enterprise Europe Network Profile expiry date; My Profiles Dashboard; Create a new profile; Review a profile; Edit a Draft profile; My Expired Profiles Dashboard; Modifying profile deadlines; Delete a profile; Archive a profile; Change your external reviewer status; Update your external reviewer information; Resign as an external reviewer; Reassign Profiles

4. Disseminating Profiles: Profile Search - Intranet; Intranet Simple Search; Intranet Advanced Search; Profile Search - Internet; Export Profiles; Export a Single Profile; Export All Profiles From Search Results; Merlin POD Web Service

5. Managing Clients and Queries

Tesis T1375ec.Pdf

Dedication. I dedicate this work to the fundamental pillar of my life, my mother, who instilled values in me and showed me that in this life one must fight and enjoy each day, allowing me to become an ever better person. To my father, whose memory has encouraged me to keep going along this path. To my sisters, who have encouraged and supported me in pursuing my dreams, giving me unconditional support and making me smile in the most difficult moments of my life. Víctor David Vasco Cabrera

Acknowledgements. I thank my Supreme Father for blessing me with the strength to continue learning from life. I thank my mother for supporting me throughout my studies, for showing me that whatever problems one has, what matters is what is learned in solving them, and for her example of constant struggle and self-improvement. I thank my sisters for giving me the strength, encouragement and example to finish and to fight for the goals I set. Víctor David Vasco Cabrera

Index: Universidad Técnica de Ambato; Tutor's Approval; Authorship; Copyright; Approval of the Examining Committee; Dedication