Copyrighted Material

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Chapter 1: Computer Abstractions and Technology 1.1 – 1.4: Introduction, Great Ideas, Moore’s Law, Abstraction, Computer Components, and Program Execution

Chapter 1: Computer Abstractions and Technology 1.1 – 1.4: Introduction, great ideas, Moore’s law, abstraction, computer components, and program execution ITSC 3181 Introduction to Computer Architecture https://passlab.github.io/ITSC3181/ Department of Computer Science Yonghong Yan [email protected] https://passlab.github.io/yanyh/ Lectures for Chapter 1 and C Basics Computer Abstractions and Technology ☛• Lecture 01: Chapter 1 – 1.1 – 1.4: Introduction, great ideas, Moore’s law, abstraction, computer components, and program execution • Lecture 02 - 03: C Basics; Compilation, Assembly, Linking and Program Execution • Lecture 03 - 04: Chapter 1 – 1.6 – 1.7: Performance, power and technology trends • Lecture 04 - 05: Memory and Binary Systems • Lecture 05: – 1.8 - 1.9: Multiprocessing and benchmarking § 1.1 Introduction 1.1 The Computer Revolution • Progress in computer technology – Underpinned by Moore’s Law • Every two years, circuit density (and, roughly, clock frequency and performance) doubles • Makes novel applications feasible – Computers in automobiles – Cell phones – Human genome project – World Wide Web – Search Engines • Computers are pervasive Generation Of Computers https://solarrenovate.com/the-evolution-of-computers/ New School Computer Classes of Computers • Personal computers (PC) --> computers are PCs today – General purpose, variety of software – Subject to cost/performance tradeoff • Server computers – Network based – High capacity, performance, reliability – Range from small servers to building sized Classes of Computers -

2200, Canmath 201.Qxd

An Introduction to the Computer Age Computers are changing our world. The invention of the internal combustion engine and the harnessing of electricity have had a profound effect on the way society operates. The widespread use of the computer is having a similar and perhaps even greater impact on our society. In only decades, computers have shrunk from mammoth, room-filling machines that only the highly educated could operate to tiny devices held in the palm of the hand that the average person can use after a small amount of training. Amazingly enough, as the size has decreased, the power has increased and prices have plummeted. How does the computer age affect the Christian? What is the history behind the computer? What are the fundamental parts of a computer and how do they work together? What are the uses, advantages, and limitations of computers? Do I need a computer? This LightUnit and those following it will begin to answer some of these questions for you. Section 1 Computer Background Any study must be based on definitions about the subject. If definitions are not understood, there is little hope that much can be learned about the subject. The goal of the first section of this LightUnit is to provide a basis for the rest of the course by defining computer and many terms associated with computers. ... very specialized field, new words have come into being, and many common words have acquired new definitions. Some of the words may already be familiar to you, but in the context of computers, they may take on a different meaning. Therefore, do not assume you know the definition even if the word is -

The History of Computers: Part II

The History Of Computers: Part II You will learn about the computers of the 20th century and the people behind those machines. Some of the clipart images come from the sites: •http://www.clipartheaven.com/ •http://www.horton-szar.net/ •http://www.shootpetoet.be •www.dpw.wau.nl/pv/temp/clipart/screenbeans.htm James Tam Categories Of 20th Century Computers •The mechanical monsters of the twentieth century - The machines of Konrad Zuse - The Bell telephone models - Howard Aiken and the Harvard computers •The computers of the electronic revolution - The ABC - The ENIAC - The Colossus machines of Bletchley Park •The first modern (stored program/memory) computers - The Manchester machine - The EDSAC - The EDVAC The Mechanical Monsters •Performed calculations using moving mechanical parts rather than using electronics Images from the History of Computing Technology by Michael R. Williams The Mechanical Monsters •Many were used to solve equations that were either impossible or very time consuming to solve analytically. •Often, conducting experiments was also impractical. The Mechanical Monsters •Konrad Zuse - Z1 – Z4 •George Stibitz - Bell relay based computers Model I - VI •Howard Aiken - Harvard Mark I - IV The First Set Of Mechanical Monsters Were Created By Konrad Zuse •Developed a series of mechanical calculating machines (Z1, Z2, Z3, Z4). •Motivated by the need to perform complex calculations because current approaches were unsatisfactory. The Z1 •It was entirely mechanical From the History of Computing Technology by Michael R. -

Cpu Performance

LECTURE 1 Introduction CLASSES OF COMPUTERS • When we think of a “computer”, most of us might first think of our laptop or maybe one of the desktop machines frequently used in the Majors’ Lab. Computers, however, are used for a wide variety of applications, each of which has a unique set of design considerations. Although computers in general share a core set of technologies, the implementation and use of these technologies varies with the chosen application. In general, there are three classes of applications to consider: desktop computers, servers, and embedded computers. CLASSES OF COMPUTERS • Desktop Computers (or Personal Computers) • Emphasize good performance for a single user at relatively low cost. • Mostly execute third-party software. • Servers • Emphasize great performance for a few complex applications. • Or emphasize reliable performance for many users at once. • Greater computing, storage, or network capacity than personal computers. • Embedded Computers • Largest class and most diverse. • Usually specifically manufactured to run a single application reliably. • Stringent limitations on cost and power. PERSONAL MOBILE DEVICES • A newer class of computers, Personal Mobile Devices (PMDs), has quickly become a more numerous alternative to PCs. PMDs, including small general-purpose devices such as tablets and smartphones, generally have the same design requirements as PCs with much more stringent efficiency requirements (to preserve battery life and reduce heat emission). Despite the various ways in which computational technology can be applied, the core concepts of the architecture of a computer are the same. Throughout the semester, try to test yourself by imagining how these core concepts might be tailored to meet the needs of a particular domain of computing. -

Open PDF in New Window

DIGITAL COMPUTER NEWSLETTER. OFFICE OF NAVAL RESEARCH • MATHEMATICAL SCIENCES DIVISION Vol. 9, No. 2, April 1957 Editors: Gordon D. Goldstein, Albrecht J. Neumann
TABLE OF CONTENTS
COMPUTERS, U. S. A.
1. Air Force Armament Center, ARDC, Eglin AFB, Florida
2. Air Force Cambridge Research Center, Bedford, Mass.
3. Autonetics, RECOMP, Downey, Calif.
4. Corps of Engineers, U. S. Army
5. IBM 709, New York, New York
6. Lincoln Laboratory TX-2, M.I.T., Lexington, Mass.
7. Litton Industries 20 and 40 DDA, Beverly Hills, Calif.
8. Naval Air Test Center, Naval Air Station, Patuxent River, Maryland
9. National Cash Register Co. NC 304, Dayton, Ohio
10. Naval Air Missile Test Center, RAYDAC, Point Mugu, Calif.
11. New York Naval Shipyard, Brooklyn, New York
12. Philco, TRANSAC, Philadelphia, Penna.
13. Western Reserve Univ., Cleveland, Ohio
COMPUTING CENTERS
1. Univ. of California, Radiation Lab., Livermore, Calif.
2. Univ. of California, SWAC, Los Angeles, Calif.
3. Electronic Associates, Inc., Princeton Computation Center, Princeton, New Jersey
4. Franklin Institute Laboratories, Computing Center, Philadelphia, Penna.
5. George Washington Univ., Logistics Research Project, Washington, D. C.
6. M.I.T., WHIRLWIND I, Cambridge, Mass.
7. National Bureau of Standards, Applied Mathematics Div., Washington, D.C.
8. Naval Proving Ground, Naval Ordnance Computation Center, Dahlgren, Virginia
9. Ramo Wooldridge Corp., Digital Computing Center, Los Angeles, Calif. -

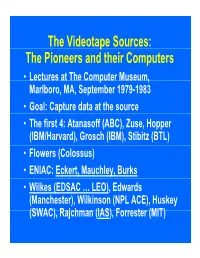

The Pioneers and Their Computers

The Videotape Sources: The Pioneers and their Computers • Lectures at The Computer Museum, Marlboro, MA, September 1979-1983 • Goal: Capture data at the source • The first 4: Atanasoff (ABC), Zuse, Hopper (IBM/Harvard), Grosch (IBM), Stibitz (BTL) • Flowers (Colossus) • ENIAC: Eckert, Mauchly, Burks • Wilkes (EDSAC … LEO), Edwards (Manchester), Wilkinson (NPL ACE), Huskey (SWAC), Rajchman (IAS), Forrester (MIT) What did it feel like then? • What were the computers? • Why did their inventors build them? • What materials (technology) did they build from? • What were their speed and memory size specs? • How did they work? • How were they used or programmed? • What were they used for? • What did each contribute to future computing? • What were the by-products? and alumni/ae? The “classic” five boxes of a stored program digital computer: Memory (M), Input (I), Output (O), Central Control (CC), Central Arithmetic (CA) How was programming done before programming languages and O/Ss? • ENIAC was programmed by routing control pulse cables forming the “program counter” • Clippinger and von Neumann made “function codes” for the tables of ENIAC • Kilburn at Manchester ran the first 17 word program • Wilkes, Wheeler, and Gill wrote the first book on programming, reprinted by Babbage Institute Series • Parallel versus Serial • Pre-programming languages and operating systems • Big idea: compatibility for program investment – EDSAC was transferred to Leo – The IAS Computers built at Universities [Figure: Time Line of First Computers, 1935 to 1955, with rows for BTL, Zuse, Atanasoff, IBM ASCC and SSEC, CPC, ENIAC, EDVAC, UNIVAC I, IAS, Colossus, Manchester, Ferranti, EDSAC, Leo, ACE, DEUCE, Whirlwind, SEAC & SWAC] ENIAC Project Time Line & Descendants IBM 701, Philco S2000, ERA.. -

History of Computers, Chronological Record of Events – Particularly in the Area of Technological Development – Will Be Explained

Computer Organization 1. Introduction STUDY MATERIALS ON COMPUTER ORGANIZATION (As per the curriculum of Third semester B.Sc. Electronics of Mahatma Gandhi University) Compiled by Sam Kollannore U., Lecturer in Electronics, M.E.S. College, Marampally 1. INTRODUCTION 1.1 GENERATION OF COMPUTERS The first electronic computer was designed and built at the University of Pennsylvania based on vacuum tube technology. Vacuum tubes were used to perform logic operations and to store data. Computers have been divided into five generations according to the development of the technologies used to fabricate the processors, memories and I/O units. I Generation: 1945 – 55; II Generation: 1955 – 65; III Generation: 1965 – 75; IV Generation: 1975 – 89; V Generation: 1989 to present. First Generation (ENIAC – Electronic Numerical Integrator And Computer; EDSAC – Electronic Delay Storage Automatic Calculator; EDVAC – Electronic Discrete Variable Automatic Computer; UNIVAC – Universal Automatic Computer; IBM 701): Vacuum tubes were used; basic arithmetic operations took a few milliseconds. Bulky. Consumed more power with limited performance. High cost. Assembly language was used to prepare programs; these were translated into machine level language for execution. Mercury delay line memories and electrostatic memories were used. Fixed point arithmetic was used. 100 to 1000 fold increase in speed relative to the earlier mechanical and relay based electromechanical technology. Punched cards and paper tape were used to feed programs and data and to get results. Magnetic tape / magnetic drum were used as secondary memory. Mainly used for scientific computations. Second Generation (Manufacturers – IBM 7030, Digital Equipment Corporation’s PDP 1/5/8, Honeywell 400): Transistors were used in place of vacuum tubes. -

Computer Architecture ٤٠/٠٦/٢٦

Computer Architecture ٤٠/٠٦/٢٦ Computer Architecture Faculty Of Computers And Information Technology Second Term 2018 - 2019 Dr. Khaled Kh. Sharaf Computer Architecture Topic 1. Introduction 2. Performance Metrics I 3. Memory Hierarchy 4. MIPS Instruction Set Architecture (ISA) 5. Introduction to Logic Circuit Design 6. Instruction Level Parallelism 7. Multicore Architecture 8. GPU Architecture Textbook Patterson & Hennessy (2011 or 2013), “Computer Organization and Design: The Hardware/Software Interface”, revised 4th or 5th edition. ISBN: 978-0-12-374750-1 1. Introduction • Machine structures: layers of abstraction • Eight great ideas Konrad Zuse’s Z3 electro-mechanical computer (1941, Germany). Turing complete, though conditional jumps were missing. Colossus (UK, 1943) was the world’s first totally electronic programmable computing device. But not Turing complete. Harvard Mark I – IBM ASCC (1944, US). Electro-mechanical computer (no conditional jumps and not Turing complete). It could store 72 numbers, each 23 decimal digits long. It could do three additions or subtractions in a second. A multiplication took six seconds, a division took 15.3 seconds, and a logarithm or a trigonometric function took over one minute. A loop was accomplished by joining the end of the paper tape containing the program back to the beginning of the tape (literally creating a loop). Electronic Numerical Integrator And Computer (ENIAC). The first general-purpose, electronic computer. It was a Turing-complete, digital computer capable of being reprogrammed and was running at 5,000 cycles per second for operations on the 10-digit numbers. -

1. Types of Computers Contents

1. Types of Computers
Contents
1 Classes of computers
1.1 Classes by size
1.1.1 Microcomputers (personal computers)
1.1.2 Minicomputers (midrange computers)
1.1.3 Mainframe computers
1.1.4 Supercomputers
1.2 Classes by function
1.2.1 Servers
1.2.2 Workstations
1.2.3 Information appliances
1.2.4 Embedded computers
1.3 See also
1.4 References
1.5 External links
2 List of computer size categories
2.1 Supercomputers
2.2 Mainframe computers
2.3 Minicomputers
2.4 Microcomputers
2.5 Mobile computers
2.6 Others
2.7 Distinctive marks
2.8 Categories
2.9 See also
2.10 References -

ECE 252 / CPS 220 Advanced Computer Architecture I Lecture 1

ECE 552 / CPS 550 Advanced Computer Architecture I Lecture 2 History, Instruction Set Architectures Benjamin Lee Electrical and Computer Engineering Duke University www.duke.edu/~bcl15 www.duke.edu/~bcl15/class/class_ece252fall12.html ECE 552 Administrivia 11 September – Homework #1 Due Assignment on web page. Teams of 2-3. Submit soft copies to Sakai. Use Piazza for questions. 11 September – Class Discussion Do not wait until the day before! 1. Hill et al. “Classic machines: Technology, implementation, and economics” 2. Moore. “Cramming more components onto integrated circuits” 3. Radin. “The 801 minicomputer” 4. Patterson et al. “The case for the reduced instruction set computer” 5. Colwell et al. “Instruction sets and beyond: Computers, complexity, controversy” A Bit of History Historical Narrative - Helps understand why ideas arose - Helps illustrate the design process Technology Trends - Future technologies may be as constrained as older ones Learning from History - Those who ignore history are doomed to repeat it - Every mistake made in mainframe design was also made in minicomputers, then microcomputers, where next? Charles Babbage Charles Babbage (1791-1871) - Lucasian Professor of Mathematics - Cambridge University, 1827-1839 Contributions - Difference Engine 1823 - Analytical Engine 1833 Approach - mathematical tables (astronomy), nautical tables (navy) - any continuous function can be approximated by polynomial - mechanical gears and simple calculators Difference Engine -

History in the Computing Curriculum

Report: History in the Computing Curriculum. IFIP TC 3 / TC 9 Joint Task Group: John Impagliazzo (Task Group Chair), Hofstra University; Martin Campbell-Kelly, University of Warwick; Gordon Davies, Open University; John A. N. Lee, Virginia Tech; Michael R. Williams, University of Calgary. Prepublication Copy 1998 October. Copyright © 1998, 1999 Institute of Electrical and Electronics Engineers. This report is scheduled to appear in an upcoming issue of the Annals of the History of Computing. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by sending an email request to <[email protected]>.
CONTENTS
1. INTRODUCTION
2. GOALS AND OBJECTIVES
3. OVERVIEW OF THIS REPORT
4. BACKGROUND
4.1 Overview of Curriculum Recommendations
4.2 History Status
5. NEED FOR HISTORY CONTENT
5.1 The Student Perspective
5.2 The Professional Perspective
6. CURRICULUM CONTENT
6.1 Establishing a Knowledge Base
6.2 Methods of Presentation
6.2.1 The Period Method
6.2.2 Other Methods
6.3 Depth of Knowledge
6.4 Clusters
7. IMPLEMENTATION OF A BASIC CURRICULUM
9. RESOURCES
9.1 Textbooks and General Works
9.2 Monographs and Articles
9.3 Electronic Information
9.4 Videos, Simulators, and Other Resources
10. EXTENDED TOPICS
10.1 Advanced Courses
10.2 Projects
11. CONCLUSIONS
12. FUTURE DEVELOPMENTS
ACKNOWLEDGMENTS
REFERENCES
PUBLIC ACCESS
APPENDIX A. -

Pioneercomputertimeline2.Pdf

[Figure: Memory Size versus Computation Speed for various calculators and computers. Generations legend: manual, mechanical, electromechanical, electronic-vacuum tube, transistor, integrated circuit, large scale integrated circuit]
CONTENTS A Companion to the Pioneer Computer Timeline: Introduction; Bell Telephone Laboratories Model 1 Complex Calculator; Zuse Z1, Z3; ABC, Atanasoff Berry Computer; IBM ASCC (Harvard Mark I)
THE COMPUTER MUSEUM The Computer Museum is a non-profit, public, charitable foundation dedicated to preserving and exhibiting an industry-wide, broad-based collection of the history of information processing. Computer history is interpreted through exhibits, publications, videotapes, lectures, educational programs, and other programs. The Museum archives both artifacts and documentation and makes the materials available for scholarly use. The Computer Museum is open to the public Sunday through Friday from 1:00 to 6:00 pm. There is no charge for admission. The Museum's lecture hall and reception facilities are available for rent on a prearranged basis. For information call
BOARD OF DIRECTORS Kenneth H. Olsen, Chairman, Digital Equipment Corporation; Charles W. Bachman, Cullinane Database Systems; C. Gordon Bell, Digital Equipment Corporation; Gwen Bell, The Computer Museum; Harvey D. Cragon, Texas Instruments; Robert Everett, The Mitre Corporation; C.