Effects, Signal Processors & Noise Reduction Dynamic Processors

Recommended publications

Carlton Barrett

Volume 36, Number 8 • Cover photo by Adrian Boot © Fifty-Six Hope Road Music, Ltd

CONTENTS

CARLTON BARRETT: The songs of Bob Marley and the Wailers spoke a passionate message of political and social justice in a world of grinding inequality. But it took a powerful engine to deliver the message, to help people to believe and find hope. That engine was the beat of the drummer known to his many admirers as “Field Marshal.”

WILLIE STEWART: He spent decades turning global audiences on to the magic of Third World’s reggae rhythms. These days his focus is decidedly more grassroots. But his passion is as infectious as ever.

STEVE NISBETT: He barely knew what to do with a reggae groove when he started his climb to the top of the pops with Steel Pulse. He must have been a fast learner, though, because it wouldn’t be long before the man known as Grizzly would become one of British reggae’s most identifiable figures.

JAMAICAN DRUMMING: THE EVOLUTION OF A STYLE: Jamaican drumming expert and 2012 MD Pro Panelist Gil Sharone schools us on the history and techniques of the -

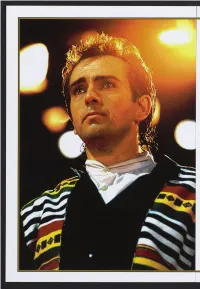

Peter Gabriel by Will Hermes

Peter Gabriel, by Will Hermes. The multifaceted performer has spent nearly forty years as a solo artist and music innovator. There have been many Peter Gabriels: the prog rocker who steered the cosmos-minded genre toward Earth; the semi-new waver more focused on empathic storytelling and musical innovation than fashion or attitude; the Top Forty hitmaker ambivalent about the spotlight; the global activist whose Real World label and collaborations introduced pivotal non-Western acts to new audiences; the elder statesman inspiring a new generation of singer-songwriters. So his second induction into the Rock and Roll Hall of Fame, as a solo act this time (his first, in 2010, was as cofounder of the prog-pop juggernaut Genesis), seems wholly earned. Yet it surprised him, especially since he was a no-show that first time: The ceremony took place two days before the notorious perfectionist began a major tour. “I would’ve gone last time if I had not been about to perform,” Gabriel (b. February 13, 1950) explained. “I just thought, ‘I can’t go. We’d given ourselves very little rehearsal time.’” “It’s a huge honor,” he said of his inclusions, noting that among numerous Grammys and other awards, this one is distinguished “because it’s more for your body of work than a specific project.” That body of work began with Genesis, named after the young British band declined the moniker of “Gabriel’s Angels.” They produced their first single (“The Silent Sun”) in the winter of 1968, when Gabriel was 17. -

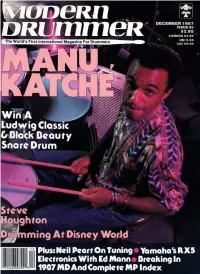

December 1987

VOLUME 11, NUMBER 12, ISSUE 98. Cover photo by Jaeger Kotos.

MANU KATCHE: One of the highlights of Peter Gabriel's recent So album and tour was French drummer Manu Katche, who has gone on to record with such artists as Sting, Joni Mitchell, and Robbie Robertson. He tells of his background in France, and explains why Peter Gabriel is so important to him. By Connie Fisher.

DRUMMING AT DISNEY WORLD: When it comes to employment opportunities, you have to consider Disney World in Florida, where 45 to 50 drummers are working at any given time. We spoke to several of them about their working conditions and the many styles of music that are represented there. By Rick Van Horn.

STEVE HOUGHTON: He's known for his big band work with Woody Herman, small-group playing with Scott Henderson, and his teaching at P.I.T. -

EDUCATION. IN THE STUDIO: Drumheads And Recording, by Craig Krampf. SHOW DRUMMERS' SEMINAR: Get Involved, by Vincent Dee. KEYBOARD PERCUSSION: In Search Of Time, by Dave Samuels. THE MACHINE SHOP: New Sounds For Your Old Machines, by Norman Weinberg. ROCK PERSPECTIVES: Ringo Starr: The Later Years, by Kenny Aronoff. ELECTRONIC INSIGHTS: Percussive Sound Sources And Synthesis, by Ed Mann. TAKING CARE OF BUSINESS: Breaking In, by Karen Ervin Pershing. ROCK 'N' JAZZ CLINIC: Two-Surface Riding: Part 2, by Rod Morgenstein. BASICS: Thoughts On Tom Tuning, by Neil Peart. TRACKING: Studio Chart Interpretation, by Hank Jaramillo. DRUM SOLOIST: Three Solo Intros, by Bobby Cleall. JAZZ DRUMMERS' WORKSHOP: Fast And Slow Tempos, by Peter Erskine. CONCEPTS: Drummers Are Special People, by Roy Burns.

EQUIPMENT. SHOP TALK -

HUGH PADGHAM: He Helped to Define the Sound of the 1980s, and Kept Right on Innovating, by Howard Massey

JUNE 2010 ISSUE MMUSICMAG.COM PRODUCER Capitol Studios

HUGH PADGHAM: He helped to define the sound of the 1980s, and kept right on innovating. By Howard Massey.

IT WAS THE DRUM BREAK HEARD ’ROUND THE WORLD. Phil Collins’ 1981 debut solo single, “In the Air Tonight,” quietly simmered for a full three minutes and 40 seconds, then erupted without warning into 10 thunderous notes on Collins’ tom-toms that air drummers have been joyously pounding out ever since.

The enormous gated-reverb drum sound that would help to define the sound of the 1980s was in large part the creation of Hugh Padgham (discovered while engineering Peter Gabriel’s third album), and it loudly announced the arrival of a major production talent. Padgham’s career had started at London’s Advision Studios, where he served as tea boy (the British equivalent to a runner). He moved to Lansdowne Studios in the mid-’70s and received formal training, quickly rising through the ranks to chief engineer.

... Police. A couple of years later, the Police brought Padgham on board to co-produce their massive hit album Ghost in the Machine. From there, Padgham went on to helm albums by Paul McCartney, David Bowie, Melissa Etheridge, Genesis and many other artists, as well as several Sting solo albums. Along the way, he has won four Grammys in four different categories, including Producer of the Year.

Padgham’s ultra-clean signature sound had a major impact on the shift from the close-miked sounds of the ’70s to the open, ambient sounds of the ’90s and beyond. “I often spend more time pruning down than adding things,” he says. “Doing so can often require a musician to learn or evolve an altogether different part to -

Effects and Signal Processors

CHAPTER 10: Effects and Signal Processors

With effects, your mix sounds more like a real “production” and less like a bland home recording. You might simulate a concert hall with reverb. Put a guitar in space with stereo chorus. Make a kick drum punchy by adding compression. Used on all pop-music records, effects can enhance plain tracks by adding spaciousness and excitement. They are essential if you want to produce a commercial sound. But many jazz, folk, and classical groups sound fine without any effects.

This chapter describes the most popular signal processors and effects, and suggests how to use them. Effects are available both as hardware and software (called plug-ins). To add a hardware effect to a track, you feed the track’s signal from your mixer’s aux send to an effects device, or signal processor (Figure 10.1). It modifies the signal in a controlled way. Then the modified signal returns to your mixer, where it blends with the dry, unprocessed signal.

SOFTWARE EFFECTS (PLUG-INS)

Most recording programs include plug-ins: software effects that you control on your computer screen. Each effect is an algorithm (small program) that runs either in your computer’s CPU or in a DSP card. Some plug-ins come already installed with the recording software; others you can download or purchase on CD, then install on your hard drive. Each plug-in becomes part of your recording program (called the host), and can be called up from within the host.

FIGURE 10.1 Eventide H8000FW Multichannel Effects System, an example of a signal processor. -
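The aux send and return routing described in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `aux_send_mix` and `echo`, the `send_level` and `return_level` parameters, and the toy echo effect are hypothetical and do not come from the book.

```python
def echo(signal, delay_samples=2):
    """Toy effect (hypothetical): returns only the delayed, 100% wet signal."""
    return [signal[i - delay_samples] if i >= delay_samples else 0.0
            for i in range(len(signal))]


def aux_send_mix(dry, effect, send_level=1.0, return_level=0.5):
    """Feed a copy of the dry track to an effect, then blend the return with it."""
    # Aux send: a scaled copy of the track goes to the signal processor.
    sent = [send_level * sample for sample in dry]
    # The processor modifies the signal in a controlled way.
    wet = effect(sent)
    # Effects return: the processed signal is summed with the untouched dry signal.
    return [d + return_level * w for d, w in zip(dry, wet)]


if __name__ == "__main__":
    impulse = [1.0, 0.0, 0.0, 0.0]
    print(aux_send_mix(impulse, echo))
```

Because the effect sits on a send rather than in line with the track, it outputs only the processed sound, and the dry signal passes through the mixer unchanged.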

Mixing Secrets for the Small Studio

Mixing Secrets for the Small Studio. Mike Senior. Amsterdam • Boston • Heidelberg • London • New York • Oxford • Paris • San Diego • San Francisco • Singapore • Sydney • Tokyo. Focal Press is an imprint of Elsevier, 30 Corporate Drive, Suite 400, Burlington, MA 01803, USA; The Boulevard, Langford Lane, Kidlington, Oxford, OX5 1GB, UK. © 2011 Mike Senior. Published by Elsevier Inc. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Details on how to seek permission, further information about the Publisher’s permissions policies and our arrangements with organizations such as the Copyright Clearance Center and the Copyright Licensing Agency, can be found at our website: www.elsevier.com/permissions. This book and the individual contributions contained in it are protected under copyright by the Publisher (other than as may be noted herein). Notices: Knowledge and best practice in this field are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary. Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments described herein. In using such information or methods they should be mindful of their own safety and the safety of others, including parties for whom they have a professional responsibility.
To the fullest extent of the law, neither the Publisher nor the authors, contributors, or editors, assume any liability for any injury and/or damage to persons or property as a matter of products liability, negligence or otherwise, or from any use or operation of any methods, products, instructions, or ideas contained in the material herein. -

Downloading Technologies and Software, Namely in Order to Paint a Picture of What Kind of Music Industry Youtube Would Inherit

Distribution Agreement

In presenting this thesis or dissertation as a partial fulfillment of the requirements for an advanced degree from Emory University, I hereby grant to Emory University and its agents the non-exclusive license to archive, make accessible, and display my thesis or dissertation in whole or in part in all forms of media, now or hereafter known, including display on the world wide web. I understand that I may select some access restrictions as part of the online submission of this thesis or dissertation. I retain all ownership rights to the copyright of the thesis or dissertation. I also retain the right to use in future works (such as articles or books) all or part of this thesis or dissertation.

Signature: Brian A. Smith. Date.

All Access: YouTube, the History of the Music Video, and its Contemporary Renaissance. By Brian A. Smith. Doctor of Philosophy, Comparative Literature. John Johnston, Ph.D., Advisor. Deepika Bahri, Ph.D., Committee Member. Geoffrey Bennington, Ph.D., Committee Member. Accepted: Lisa A. Tedesco, Ph.D., Dean of the James T. Laney School of Graduate Studies. Date.

All Access: YouTube, the History of the Music Video, and Its Contemporary Renaissance. By Brian A. Smith, B.A., Appalachian State University, 2003. Advisor: John Johnston, Ph.D. An abstract of a dissertation submitted to the Faculty of the James T. Laney School of Graduate Studies of Emory University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Comparative Literature, 2014.

Abstract. All Access: YouTube, the History of the Music Video, and Its Contemporary Renaissance, by Brian A. -

Come Closer Acousmatic Intimacy in Popular Music Sound

Emil Kraugerud. Come Closer: Acousmatic Intimacy in Popular Music Sound. A dissertation submitted in partial fulfilment of the requirements for the degree of PhD, Department of Musicology, Faculty of Humanities, University of Oslo, 2020.

Contents

Acknowledgements

1. Introduction: Aim of the Dissertation and Research Questions; Clarification of Terms; Knowledge Aims; Analyzing Recorded Popular Music Sound; Interviews and Their Role in Hermeneutic Research; Outline of the Dissertation

2. Perceptions of Physical Proximity: Intimacy as Interpersonal Proximity; Mediated Intimacy; Portrayed Intimacy; Conclusion

3. Acousmatic Intimacy as Relation: Intimate Awareness and Interaction; Recorded Song Persona; Authenticity; The “Realism” of Virtual Performances; The Double Paradox of Acousmatic Intimacy; Domestic Intimate Space; Metaphorical Intimate Space; (Positive and) Negative Notions of Acousmatic Intimacy; Conclusion

4. Technological Facilitation of Acousmatic Intimacy: The Microphone and its Intimate Affordances; Magnetic Tape and Multitrack Recording: Reduced Noise and Increased Control; Dynamic Range Processors: Controlling Intimate Proximity; Reverb and the Construction of Intimate Space; Stereo Recording and Pseudo-Stereo Techniques: Exaggerated Presence; Noise as Signifier of Silence; Hyperintimacy: Intimacy Beyond Intimacy; Conclusion

5. Soft, Intense, and Close: Background; Intimacy, Closeness, and Smallness; What Happened Is What You Hear: Siv Jakobsen’s “To Leave You”; Hyperintimacy by Contrast: Jordan Mackampa’s “Teardrops in a Hurricane”; Conclusion

6. Constructing Relational Closeness: Background; Intimacy as Perceived Relational Closeness; Portrayed Intimacy and Human Imperfection: David Byrne’s “My Love Is You”; Intimacy in Failing Technology: Bumcello’s “Je Ne Sais Quality”; Conclusion

7. -

November 1999

Volume 23, Number 11. Cover photo by Paul La Raia.

BRANFORD MARSALIS'S JEFF "TAIN" WATTS: A new solo disc proves this amazing skinsman has a world of ideas up his sleeve. By Ken Micallef.

MD's GUIDE TO DRUMSET TUNING: It's a lot more important than you think, and with our pro tips, probably a lot easier, too! By Rich Watson.

SANTANA'S RODNEY HOLMES: Even Santana's rhythmic wonderland can't contain this monster. By T. Bruce Wittet.

UPDATE: Tom Petty's Steve Ferrone; Ricky Martin's Jonathan Joseph; Ed Toth of Vertical Horizon; Steven Drozd of The Flaming Lips; Brett Michaels' Brett Chassen.

SUICIDAL TENDENCIES' BROOKS WACKERMAN: What's a nice guy like you doing in a band like this? By Matt Peiken.

REFLECTIONS: VINNIE COLAIUTA: The distinguished Mr. C shares some deep thoughts on drumming's giants. By Robyn Flans.

ARTIST ON TRACK: ART BLAKEY, PART 2: Wherein the jazz giant draws the maps so many would follow. By Mark Griffith.

UP & COMING: BYRON McMACKIN OF PENNYWISE: Pushin' punk's parameters. By Matt Schild.

education. STRICTLY TECHNIQUE: Musical Accents, Part 3, by Ted Bonar and Ed Breckenfeld. ROCK 'N' JAZZ CLINIC: Implied Metric Modulation, by Steve Smith. ROCK PERSPECTIVES: The Double Bass Challenge, by Ken Vogel. PERCUSSION TODAY: Street Beats, by Robin Tolleson. THE MUSICAL DRUMMER: The Benefits Of Learning A Second Instrument, by Ted Bonar.

equipment. NEW AND NOTABLE. PRODUCT CLOSE-UP.

departments. AN EDITOR'S OVERVIEW: Learning To Schmooze, by William F. Miller. READERS' PLATFORM. ASK A PRO: Graham Lear, Vinnie Paul, and Ian Paice. IT'S QUESTIONABLE. ON THE MOVE. CRITIQUE. INDUSTRY HAPPENINGS: New Orleans Jazz & Heritage Festival and more, including Taking The Stage. DRUM MARKET: Including Vintage Showcase. INSIDE TRACK: Dave Mattacks, by T. -

Childhood and First Successes

CONTENTS

1 Introduction
2 Childhood and first successes: 2.1 Family and adolescence; 2.2 Acting; 2.3 Music; 2.4 First successes
3 The GENESIS era: 3.1 Collins as drummer; 3.2 Collins as frontman; 3.3 Collins as a budding songwriter
4 Solo career: 4.1 Collins as composer and producer; 4.2 Live Aid; 4.3 Last projects and departure from GENESIS
5 The turn of the millennium: 5.1 Big band and another album; 5.2 Collaboration with the Walt Disney company
6 Health problems and work up to 2017
7 Conclusion

1 INTRODUCTION

This thesis focuses on the life and work of Phil Collins, the singer, songwriter, producer, and drummer of the band Genesis. It provides information about his musical and acting career, his work, and how he influenced the popular music of the 1980s and the music of today. Depending on the availability of printed and internet sources, I will try to connect these topics and form them into a single whole, in particular by treating certain events in his private life that had a considerable impact on his work. I will also deal with his time in the band GENESIS, his solo career, and the overall impact of this figure on the modern pop music scene. The thesis is divided into five chapters. Each contains subchapters that focus on the given content in more detail. The aim of this thesis is to acquaint the public with the personality of Phil Collins and to offer a chance to understand the true intent of his actions and work, because he is one of the most interesting figures in popular music. “My name is Phil Collins and I am a drummer, and I already know that I am not immortal. -

The Spiritual Beliefs of Chart-Topping Rock Stars, in Their Lives

ROCK & HOLY ROLLERS: THE SPIRITUAL BELIEFS OF CHART-TOPPING ROCK STARS, IN THEIR LIVES AND LYRICS, by Geoffrey D. Falk © 2006. Downloaded from www.holybooks.com

CONTENTS

Introduction
Chapter 1 Across the Universe (The Beatles)
Chapter 2 Beliefs, They Are A-Changin’ (Bob Dylan)
Chapter 3 Sympathy for the Devil (The Rolling Stones)
Chapter 4 White Light Fantasy (The Kinks)
Chapter 5 Meher, Can You Hear Me? (The Who)
Chapter 6 Bad Vibrations (The Beach Boys)
Chapter 7 White Rabbit Habit (Jefferson Airplane)
Chapter 8 A Saucerful of Sant Mat (Pink Floyd)
Chapter 9 Jerry and the Spinners (The Grateful Dead)
Chapter 10 Voodoo Child (Jimi Hendrix)
Chapter 11 The Doors of Perception (The Doors)
Chapter 12 Astral Years (Van Morrison)
Chapter 13 Timothy Leary’s Dead (The Moody Blues)
Chapter 14 Stairway to Heaven (Led Zeppelin)
Chapter 15 Station to Station -

Dynamic Range Processing and Digital Effects

Dynamic Range Compression

Compression is a reduction of the dynamic range of a signal, meaning that the ratio of the loudest to the softest levels of a signal is reduced. This is accomplished using an amplifier with variable gain that can be controlled by the amplitude of the input signal: when the input exceeds a preset threshold level, the gain is reduced. The figure below is a device input/output graph that shows the basic adjustable parameters found on compressors:

[Figure: compressor input/output graph with the 1:1 line, threshold, and hard knee marked]

Gain is the ratio of the output to the input, the slope of the line. For inputs above the threshold level, the ratio of a change in input level to the resulting change in output level is known as the compression ratio (the inverse of the slope above the threshold). The knee is the point at which the gain changes. The gain can change all at once (“hard knee,” as in the figure above) or gradually with increasing input level (“soft knee”). Since this change is non-linear, it can result in distortion, which sounds slightly different depending on whether the gain changes smoothly or instantaneously.

The time required for the gain to change after a signal crosses the threshold is referred to as the attack time, and the time it takes to return to the original gain when the signal drops back below the threshold is the release time. Generally, the attack is fast and the release is slow to prevent audible artifacts of the gain change. However, too short an attack time reduces the transient onset of a sound and noticeably alters its character: sometimes this may be desirable, but often it is not.
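The threshold, ratio, knee, attack, and release parameters described above can be illustrated with a minimal Python sketch. It is a sketch under stated assumptions, not a description of any particular compressor: the function names, the default values, the quadratic soft-knee curve, the one-pole attack/release smoothing, and the per-sample peak level detection are all choices made here for illustration and are not taken from the source.

```python
import math


def static_gain_db(level_db, threshold_db=-20.0, ratio=4.0, knee_db=0.0):
    """Gain change in dB (0 or negative) for a given input level in dB."""
    over = level_db - threshold_db          # how far the input is above threshold
    slope = 1.0 / ratio - 1.0               # dB of gain change per dB above threshold
    if knee_db > 0.0 and abs(over) <= knee_db / 2.0:
        # Soft knee (assumed quadratic blend): the gain bends gradually around the threshold.
        return slope * (over + knee_db / 2.0) ** 2 / (2.0 * knee_db)
    if over <= 0.0:
        return 0.0                          # below threshold: unity gain, the 1:1 line
    return slope * over                     # above threshold: output rises 1 dB per `ratio` dB


def compress(samples, sample_rate, threshold_db=-20.0, ratio=4.0,
             knee_db=0.0, attack_s=0.005, release_s=0.100):
    """Apply the static curve with attack/release smoothing of the gain."""
    attack_coeff = math.exp(-1.0 / (attack_s * sample_rate))
    release_coeff = math.exp(-1.0 / (release_s * sample_rate))
    gain_db = 0.0
    out = []
    for x in samples:
        # Simplification: the level is the instantaneous sample magnitude.
        level_db = 20.0 * math.log10(max(abs(x), 1e-9))
        target_db = static_gain_db(level_db, threshold_db, ratio, knee_db)
        # Gain moving downward uses the attack time; recovering uses the release time.
        coeff = attack_coeff if target_db < gain_db else release_coeff
        gain_db = coeff * gain_db + (1.0 - coeff) * target_db
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out


if __name__ == "__main__":
    # An input 10 dB over a -20 dB threshold at 4:1 is turned down by 7.5 dB,
    # so the output ends up only 2.5 dB over the threshold.
    print(static_gain_db(-10.0))
```

With a 4:1 ratio, every 4 dB of input above the threshold yields 1 dB of output above it. Raising `knee_db` spreads the onset of gain reduction over a range of levels around the threshold, and lengthening `attack_s` lets more of a sound's transient onset pass before the gain drops.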