U.S. Department of Education's Implementation and Oversight Of

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


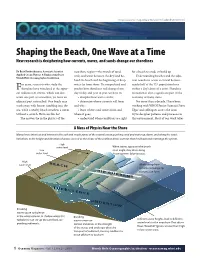

Shaping the Beach, One Wave at a Time New Research Is Deciphering How Currents, Waves, and Sands Change Our Shorelines

http://oceanusmag.whoi.edu/v43n1/raubenheimer.html Shaping the Beach, One Wave at a Time: New research is deciphering how currents, waves, and sands change our shorelines. By Britt Raubenheimer, Associate Scientist, Applied Ocean Physics & Engineering Dept., Woods Hole Oceanographic Institution. For years, scientists who study the shoreline have wondered at the apparent fickleness of storms, which can devastate one part of a coastline, yet leave an adjacent part untouched. One beach may wash away, with houses tumbling into the sea, while a nearby beach weathers a storm without a scratch. How can this be? The answers lie in the physics of the nearshore region: the stretch of sand, rock, and water between the dry land behind the beach and the beginning of deep water far from shore. To comprehend and predict how shorelines will change from day to day and year to year, we have to: • decipher how waves evolve; • determine where currents will form and why; • learn where sand comes from and where it goes; • understand when conditions are right for a beach to erode or build up. Understanding beaches and the adjacent nearshore ocean is critical because nearly half of the U.S. population lives within a day’s drive of a coast. Shoreline recreation is also a significant part of the economy of many states. For more than a decade, I have been working with WHOI Senior Scientist Steve Elgar and colleagues across the country to decipher patterns and processes in this environment. Most of our work takes [Figure caption] A Mess of Physics Near the Shore: Many forces intersect and interact in the surf and swash zones of the coastal ocean, pushing sand and water up, down, and along the coast.

Secondment Plan

Secondment Plan. Capacity Building in Asia for Resilience Education Project (CABARET) WP6. De La Salle University. Environmental Hydraulics Institute of the University of Cantabria (IHC). 2017-2020. LIST OF ACRONYMS: ADPC - Asian Disaster Preparedness Center; CABARET - Capacity Building in Asia for Resilience Education; CCA - Climate Change Adaptation; DRRM - Disaster Risk Reduction and Management; EU - European Union; FSLGA - Federation of Sri Lankan Local Government Authorities; HEI - Higher Education Institution; IHC - Environmental Hydraulics Institute of the University of Cantabria; IOC/UNESCO - Intergovernmental Oceanographic Commission United Nations Educational Scientific and Cultural Organization; MHEWS - Multi-Hazard Early Warning System; MOA - Memorandum of Agreement; MOU - Memorandum of Understanding; SEA - Socio-Economic Actors. Table of Contents: 1 INTRODUCTION AND OBJECTIVE; 1.1 Rationale; 1.2 Objective; 2 METHODOLOGY; 3 PARTNERSHIP STRATEGY AT THE REGIONAL LEVEL

Beachcombers Field Guide

Beachcombers Field Guide. Acknowledgements: The Beachcombers Field Guide has been made possible through funding from Coastwest and the Western Australian Planning Commission, and the Department of Fisheries, Government of Western Australia. The project would not have been possible without our community partners – Friends of Marmion Marine Park and Padbury Senior High School. Special thanks to Sue Morrison, Jane Fromont, Andrew Hosie and Shirley Slack-Smith from the Western Australian Museum and John Huisman for editing the field guide. The Beachcombers Field Guide is an easy to use identification tool that describes some of the more common items you may find while beachcombing. For easy reference, items are split into four simple groups: • Chordates (mainly vertebrates – animals with a backbone); • Invertebrates (animals without a backbone); • Seagrasses and algae; and • Unusual finds! Chordates and invertebrates are then split into their relevant phylum and class. Phyla include: • Chordata (e.g. fish) • Porifera (sponges) • Bryozoa (e.g. lace corals) • Mollusca (e.g. snails) • Cnidaria (e.g. sea jellies) • Arthropoda (e.g. crabs) • Annelida (e.g. tube worms) • Echinodermata (e.g. sea stars) Beachcombing Basics • Wear sun protective clothing, including a hat and sunscreen. • Take a bottle of water – it can get hot out in the sun! • Take a hand lens or magnifying glass for closer inspection. • Be careful when picking items up – you never know what could be hiding inside, or what might sting you! • Help the environment and take any rubbish safely home with you – recycle or place it in the bin. • Take your camera to help you to capture memories of your finds.

FOOD SECURITY RESEARCH PROJECT

FOOD SECURITY RESEARCH PROJECT RECOMMENDATIONS ON SAMPLE DESIGN FOR POST-HARVEST SURVEYS IN ZAMBIA BASED ON THE 2000 CENSUS By David J. Megill WORKING PAPER No. 11 FOOD SECURITY RESEARCH PROJECT LUSAKA, ZAMBIA February 2004 (Downloadable at: http://www.aec.msu.edu/agecon/fs2/zambia/index.htm ) ACKNOWLEDGMENTS The Food Security Research Project is a collaboration between the Agricultural Consultative Forum (ACF), the Ministry of Agriculture, Food and Fisheries (MAFF), and Michigan State University’s Department of Agricultural Economics (MSU). We wish to acknowledge the financial and substantive support of the United States Agency for International Development (USAID) in Lusaka. Research support from the Global Bureau, Office Agriculture and Food Security, and the Africa Bureau, Office of Sustainable Development at USAID/Washington also made it possible for MSU researchers to contribute to this work. This study has been made possible thanks to the contributions of a number of people and organizations. In particular, the Agriculture and Environment Division, Central Statistical Office (CSO), and the Database Management Unit, Ministry of Agriculture and Cooperatives (MACO), who provided assistance in analyzing and utilizing 2000 Census data. MACO and CSO also actively participated in technical discussions that took place during the sample design process, providing the opportunity for the author, D.J. Megill (sampling consultant for FSRP), to refine the methodologies proposed in FSRP Working Paper No. 2 and present a working version of the new sampling methodology.

Coastal Morphology Report Southwold to Benacre Denes (Suffolk)

Coastal Morphology Report Southwold to Benacre Denes (Suffolk) RP016/S/2010 March 2010 We are the Environment Agency. We protect and improve the environment and make it a better place for people and wildlife. We operate at the place where environmental change has its greatest impact on people’s lives. We reduce the risks to people and properties from flooding; make sure there is enough water for people and wildlife; protect and improve air, land and water quality and apply the environmental standards within which industry can operate. Acting to reduce climate change and helping people and wildlife adapt to its consequences are at the heart of all that we do. We cannot do this alone. We work closely with a wide range of partners including government, business, local authorities, other agencies, civil society groups and the communities we serve. Published by: Shoreline Management Group, Environment Agency, Kingfisher House, Goldhay Way, Orton Goldhay, Peterborough PE2 5ZR Email: [email protected] www.environment-agency.gov.uk © Environment Agency 2010 All rights reserved. This document may be reproduced with prior permission of the Environment Agency. Further copies of this report are available from our publications catalogue: http://publications.environment-agency.gov.uk or our National Customer Contact Centre: T: 03708 506506 Email: [email protected]. Cliffs at Eastern Bavents Photo: Environment Agency Glossary Accretion The accumulation of sediment

Seychelles Coastal Management Plan 2019–2024 Mahé Island, Seychelles

Ministry of Environment, Energy and Climate Change Seychelles Coastal Management Plan 2019–2024 Mahé Island, Seychelles. Photo: 35007 © 2019 International Bank for Reconstruction and Development / The World Bank 1818 H Street NW Washington DC 20433 Telephone: 202-473-1000 Internet: www.worldbank.org This work is a product of the staff of The World Bank with the Ministry of Environment, Energy and Climate Change of Seychelles. The findings, interpretations, and conclusions expressed in this work do not necessarily reflect the views of The World Bank, its Board of Executive Directors or the governments they represent, and the European Union. In addition, the European Union is not responsible for any use that may be made of the information contained therein. The World Bank does not guarantee the accuracy of the data included in this work. The boundaries, colors, denominations, and other information shown on any map in this work do not imply any judgment on the part of The World Bank concerning the legal status of any territory or the endorsement or acceptance of such boundaries. Rights and Permissions The material in this work is subject to copyright. Because The World Bank encourages dissemination of its knowledge, this work may be reproduced, in whole or in part, for noncommercial purposes as long as full attribution to this work is given. Any queries on rights and licenses, including subsidiary rights, should be addressed to World Bank Publications, The World Bank Group, 1818 H Street NW, Washington, DC 20433, USA; fax: 202-522-2625; e-mail: [email protected].

Tool for the Identification and Assessment Of

Ocean & Coastal Management 113 (2015) 8–17 Contents lists available at ScienceDirect Ocean & Coastal Management journal homepage: www.elsevier.com/locate/ocecoaman Tool for the identification and assessment of Environmental Aspects in Ports (TEAP) Martí Puig a, Chris Wooldridge b, Joaquim Casal a, Rosa Mari Darbra c,* a Center for Technological Risk Studies (CERTEC), Department of Chemical Engineering, Universitat Politècnica de Catalunya · BarcelonaTech, Diagonal 647, 08028 Barcelona, Catalonia, Spain b School of Earth and Ocean Sciences, Cardiff University, Main Building, Park Place, Cardiff CF10 3AT, United Kingdom c Group on Techniques for Separating and Treating Industrial Waste (SETRI), Department of Chemical Engineering, Universitat Politècnica de Catalunya · BarcelonaTech, Diagonal 647, 08028 Barcelona, Catalonia, Spain Article info. Article history: Received 23 January 2015; Received in revised form 26 March 2015; Accepted 7 May 2015; Available online 19 May 2015. Keywords: Significant environmental aspects. Abstract: A new tool to assist port authorities in identifying aspects and in assessing their significance (TEAP) has been developed. The present research demonstrates that although there is a high percentage of European ports that have already identified their Significant Environmental Aspects (SEA), most of these ports do not use any standardized method. This suggests that some of the procedures used may not necessarily be science-based, systematic in approach or appropriate for the purpose of implementing effective environmental management. For the port sector as a whole, where the free-exchange of environmental information and experience is an established policy of the European Sea Ports Organization's (ESPO) and the EcoPorts Network, developing a tool to assist ports in identifying SEAs can be very useful.

Innovative Tool for Individualized Environmental Indicators

Deliverable 3.3: Innovative tool for individualized environmental indicators. DOCUMENT ID: PORTOPIA|D|3.3|DT|2016.12.30|V|01; DUE DATE OF DELIVERABLE: 2016-12-30; ACTUAL SUBMISSION DATE: 29-11-2016; DISSEMINATION LEVEL: CO (Confidential, only for the members of the consortium, including Commission Services). AUTHORSHIP: Author(s): Puig, M., Pla, A., Seguí, X., Wooldridge, C., Darbra, RM.; Beneficiary Partner: UPC; Issue Date: 31/12/2016; Revision: 14/12/2016; Status: Final; Contributors: Puig, M., Pla, A., Seguí, X., Wooldridge, C., Darbra, RM.; Pages: 114; Figures: 11; Tables: 50; Appendixes: 8. SIGNATURES: Author(s); Coordinator: M.Dooms. Disclaimer: The information contained in this report is subject to change without notice and should not be construed as a commitment by any members of the PORTOPIA Consortium or the authors. In the event of any software or algorithms being described in this report, the PORTOPIA Consortium assumes no responsibility for the use or inability to use any of its software or algorithms. The information is provided without any warranty of any kind and the PORTOPIA Consortium expressly disclaims all implied warranties, including but not limited to the implied warranties of merchantability and fitness for a particular use. (c) COPYRIGHT 2013 The PORTOPIA Consortium. This document may be copied and reproduced without written permission from the PORTOPIA Consortium. Acknowledgement of the authors of the document shall be clearly referenced. All rights reserved. 1 Introduction

Coastal Change in the Pacific Islands

COASTAL CHANGE IN THE PACIFIC ISLANDS VOLUME ONE: A Guide to Support Community Understanding of Coastal Erosion and Flooding Issues. This document is made possible by the generous support of the Australian Government funded community based adaptation project led by The Nature Conservancy (TNC), Secretariat of the Pacific Community (SPC), the Coping with Climate Change in the Pacific Island Region project funded by the German Federal Ministry for Economic Cooperation and Development (BMZ), and United States Agency for International Development (USAID). Suggested Citation: Gombos, M., Ramsay, D., Webb, A., Marra, J., Atkinson, S., & Gorong, B. (Eds.). (2014). Coastal Change in the Pacific Islands, Volume One: A Guide to Support Community Understanding of Coastal Erosion and Flooding Issues. Pohnpei, Federated States of Micronesia: Micronesia Conservation Trust. Front cover photos by Arthur Webb, Doug Ramsay, Meghan Gombos, and Arthur Webb (top left to right); and Doug Ramsay (center). Back cover photos by Fenno Brunken (top) and Doug Ramsay (bottom). Table of Contents: Acknowledgements; About This Guide; Purpose; Audience

Antimicrobial Resistance Module for Population-Based Surveys

Antimicrobial Resistance Module for Population-Based Surveys 2008 Rational Pharmaceutical Management Plus Center for Pharmaceutical Management Management Sciences for Health 4301 N. Fairfax Drive, Suite 400 Arlington, VA 22203 USA Phone: 703.524.6575 Fax: 703.524.7898 E-mail: [email protected] Macro International, Inc. 11705 Beltsville Drive, Suite 300 Calverton, MD 20705 Phone: 301.572.0200 Fax: 301.572.0999 E-mail: [email protected] TABLE OF CONTENTS: Module Description; Tabulation Plan; Questionnaire; Data Collector’s Guide; Zambia Pretest of AMR Module 2007. This report was made possible through support provided by the U.S. Agency for International Development, under the terms of cooperative agreement number HRN-A-00-00-00016-00. The opinions expressed herein are those of the author(s) and do not necessarily reflect the views of the U.S. Agency for International Development. Antimicrobial Resistance Module for Population-based Surveys The Antimicrobial Resistance (AMR) Module

000-2012-18Th Table of Contents, International Seminar Proceedings.Hwp

NAMES OF THE SEA BETWEEN KOREA AND JAPAN Based on Jason Hubbard’s forthcoming Iaponiae Insulae (2012) on Western printed maps of Japan up to 1800¹. Ferjan Ormeling, Utrecht University. Introduction: In the recent past, both in the Republic of Korea² and in Japan³, inventories were published of printed maps showing Korea and Japan and the sea in-between, with sea-names in sympathy with the relevant position of the respective nations. The underlying principle was that the case for the selection of a specific name would be strengthened if it could be proved that that name was predominantly used in different periods in the past. The number of cases in which a specific name version was found on different maps or in different atlas editions was used as an argument to prove a case. In all these examples of toponymical analyses of historical maps, only the fact that a specific name was printed in a specific sea area was registered (the name location), and the names were not put into context with other names on the map (name hierarchy), nor were different views on the meaning of sea names (directional names, territorial waters, etc.) taken into account. Finally, the influence of the map frame on the incorporation of specific sea names was not taken into consideration either. More important still is the fact that the occurrence of specific name versions on specific maps as such does not prove which name versions were predominantly used at a specific time. If maps were to be the only source to derive the dominant name versions from, then their circulation numbers or print runs would have to be taken into account as well.

Skill Tests of Three-Dimensional Tidal Currents in a Global Ocean Model: A

JOURNAL OF GEOPHYSICAL RESEARCH, VOL. 117, C08014, doi:10.1029/2011JC007617, 2012 Skill tests of three-dimensional tidal currents in a global ocean model: A look at the North Atlantic Patrick G. Timko,1,2,3 Brian K. Arbic,2 James G. Richman,4 Robert B. Scott,5,6 E. Joseph Metzger,4 and Alan J. Wallcraft4 Received 22 September 2011; revised 22 June 2012; accepted 26 June 2012; published 9 August 2012. [1] We present and apply several skill tests to assess tidal currents generated within a global ocean model compared to an archive of current meter records spanning 40 years. Within the North Atlantic we have identified over 1800 velocity records available for comparison to model output. The skill tests identify those regions where tidal ellipse parameters (semi-major/minor axis, inclination and Greenwich phase) fall within 95% confidence intervals derived from observations. Additional skill tests examine the vertical and horizontal structure of the tidal currents using the correlation and root mean square error between the observations and model. The skill tests account for grid misrepresentation within the model by comparing model values in a local 9-point neighborhood. Both the three-dimensional structure of model tidal currents and barotropic model tidal currents are compared to the altimetry based barotropic model TPXO 7.2. Our results indicate that the non-data assimilative ocean model performance is comparable to TPXO 7.2. The semi-major axes of the semi-diurnal constituents lie within the 95% confidence intervals between 40% and 60% of the time in some regions of the North Atlantic.