Opinion Dynamics with Confirmation Bias

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Paranoid – Suspicious; Argumentative; Paranoid; Continually on The

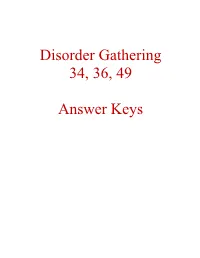

Disorder Gathering 34, 36, 49 Answer Keys ANSWER KEY, Disorder Gathering 34 1. Avital Agoraphobia – 2. Ewelina Alcoholism – 3. Martyna Anorexia – 4. Clarissa Bipolar Personality Disorder – 5. Lysette Bulimia – 6. Kev, Annabelle Co-Dependent Relationship – 7. Archer Cognitive Distortions / all-or-nothing thinking (Splitting) – 8. Josephine Cognitive Distortions / Mental Filter – 9. Mendel Cognitive Distortions / Disqualifying the Positive – 10. Melvira Cognitive Disorder / Labeling and Mislabeling – 11. Liat Cognitive Disorder / Personalization – 12. Noa Cognitive Disorder / Narcissistic Rage – 13. Regev Delusional Disorder – 14. Connor Dependent Relationship – 15. Moira Dissociative Amnesia / Psychogenic Amnesia – (*Jason Bourne character) 16. Eylam Dissociative Fugue / Psychogenic Fugue – 17. Amit Dissociative Identity Disorder / Multiple Personality Disorder – 18. Liam Echolalia – 19. Dax Factitious Disorder – 20. Lorna Neurotic Fear of the Future – 21. Ciaran Ganser Syndrome – 22. Jean-Pierre Korsakoff’s Syndrome – 23. Ivor Neurotic Paranoia – 24. Tucker Persecutory Delusions / Querulant Delusions – 25. Lewis Post-Traumatic Stress Disorder – 26. Abdul Proprioception – 27. Alisa Repressed Memories – 28. Kirk Schizophrenia – 29. Trevor Self-Victimization – 30. Jerome Shame-based Personality – 31. Aimee Stockholm Syndrome – 32. Delphine Taijin kyofusho (Japanese culture-specific syndrome) – 33. Lyndon Tourette’s Syndrome – 34. Adar Social phobias – ANSWER KEY, Disorder Gathering 36 Adjustment Disorder – BERKELEY Apotemnophilia -

A Theoretical Exploration of Altruistic Action As an Adaptive Intervention

Smith ScholarWorks Theses, Dissertations, and Projects 2008 Dissonance, development and doing the right thing : a theoretical exploration of altruistic action as an adaptive intervention Christopher L. Woodman Smith College Follow this and additional works at: https://scholarworks.smith.edu/theses Part of the Social and Behavioral Sciences Commons Recommended Citation Woodman, Christopher L., "Dissonance, development and doing the right thing : a theoretical exploration of altruistic action as an adaptive intervention" (2008). Masters Thesis, Smith College, Northampton, MA. https://scholarworks.smith.edu/theses/439 This Masters Thesis has been accepted for inclusion in Theses, Dissertations, and Projects by an authorized administrator of Smith ScholarWorks. For more information, please contact [email protected]. Christopher L. Woodman Dissonance, Development, and Doing the Right Thing: A Theoretical Exploration of Altruistic Action as an Adaptive Intervention ABSTRACT This theoretical exploration was undertaken to give consideration to the phenomenon of altruistic action as a potential focus for therapeutic intervention strategies. The very nature of altruism carries with it a fundamentally paradoxical and discrepant conundrum because of the opposing forces that it activates within us; inclinations to put the welfare of others ahead of self-interest are not experienced by the inner self as sound survival planning, though this has historically been a point of contention. Internal and external discrepancies cause psychological dissonance -

Consumers' Behavioural Intentions After Experiencing Deception Or

CORE Metadata, citation and similar papers at core.ac.uk Provided by Plymouth Electronic Archive and Research Library Wilkins, S., Beckenuyte, C. and Butt, M. M. (2016), Consumers’ behavioural intentions after experiencing deception or cognitive dissonance caused by deceptive packaging, package downsizing or slack filling. European Journal of Marketing, 50(1/2), 213-235. Consumers’ behavioural intentions after experiencing deception or cognitive dissonance caused by deceptive packaging, package downsizing or slack filling Stephen Wilkins Graduate School of Management, Plymouth University, Plymouth, UK Carina Beckenuyte Fontys International Business School, Fontys University of Applied Sciences, Venlo, The Netherlands Muhammad Mohsin Butt Curtin Business School, Curtin University Sarawak Campus, Miri, Sarawak, Malaysia Abstract Purpose – The purpose of this study is to discover the extent to which consumers are aware of air filling in food packaging, the extent to which deceptive packaging and slack filling – which often result from package downsizing – lead to cognitive dissonance, and the extent to which feelings of cognitive dissonance and being deceived lead consumers to engage in negative post purchase behaviours. Design/methodology/approach – The study analysed respondents’ reactions to a series of images of a specific product. The sample consisted of consumers of FMCG products in the UK. Five photographs served as the stimulus material. The first picture showed a well-known brand of premium chocolate in its packaging and then four further pictures each showed a plate with a different amount of chocolate on it, which represented different possible levels of package fill. 
Findings – Consumer expectations of pack fill were positively related to consumers’ post purchase dissonance, and higher dissonance was negatively related to repurchase intentions and positively related to both intended visible and non-visible negative post purchase behaviours, such as switching brand and telling friends to avoid the product. -

Lecture Misinformation

Quote of the Day: “A lie will go round the world while truth is pulling its boots on.” -- Baptist preacher Charles H. Spurgeon, 1859
Please fill out the course evaluations: https://uw.iasystem.org/survey/233006
Questions on the final paper
Readings for next time
Today’s class: misinformation and conspiracy theories
Some definitions of fake news:
• any piece of information Donald Trump dislikes
more seriously:
• “a type of yellow journalism or propaganda that consists of deliberate disinformation or hoaxes spread via traditional news media (print and broadcast) or online social media.”
disinformation: “false information which is intended to mislead, especially propaganda issued by a government organization to a rival power or the media”
misinformation: “false or inaccurate information, especially that which is deliberately intended to deceive”
Some findings of recent research on fake news, disinformation, and misinformation
• False news stories are 70% more likely to be retweeted than true news stories. The false ones get people’s attention (by design).
• Some people inadvertently spread fake news by saying it’s false and linking to it.
• Much of the fake news from the 2016 election originated in small-time operators in Macedonia trying to make money (get clicks, sell advertising).
• However, Russian intelligence agencies were also active (Kate Starbird’s research). The agencies created fake Black Lives Matter activists and Blue Lives Matter activists, among other profiles.
A quick guide to spotting fake news, from the Freedom Forum Institute: https://www.freedomforuminstitute.org/first-amendment-center/primers/fake-news-primer/
Fact checking sites are also essential for identifying fake news. -

Conspiracy Theory Beliefs: Measurement and the Role of Perceived Lack Of

Conspiracy theory beliefs: measurement and the role of perceived lack of control Ana Stojanov A thesis submitted for the degree of Doctor of Philosophy at the University of Otago, Dunedin, New Zealand November, 2019 Abstract Despite conspiracy theory beliefs’ potential to lead to negative outcomes, psychologists have only relatively recently taken a strong interest in their measurement and underlying mechanisms. In this thesis I test a particularly common motivational claim about the origin of conspiracy theory beliefs: that they are driven by threats to personal control. Arguing that previous experimental studies have used inconsistent and potentially confounded measures of conspiracy beliefs, I first developed and validated a new Conspiracy Mentality Scale, and then used it to test the control hypothesis in six systematic and well-powered studies. Little evidence for the hypothesis was found in these studies, or in a subsequent meta-analysis of all experimental evidence on the subject, although the latter indicated that specific measures of conspiracies are more likely to change in response to control manipulations than are generic or abstract measures. Finally, I examine how perceived lack of control relates to conspiracy beliefs in two very different naturalistic settings, both of which are likely to threaten individuals’ feelings of control: a political crisis over Macedonia’s name change, and a series of tornadoes in North America. In the first, I found that participants who had opposed the name change reported stronger conspiracy beliefs than those who had supported it. In the second, participants who had been more seriously affected by the tornadoes reported decreased control, which in turn predicted their conspiracy beliefs, but only for threat-related claims. -

What Is the Function of Confirmation Bias?

Erkenntnis https://doi.org/10.1007/s10670-020-00252-1 ORIGINAL RESEARCH What Is the Function of Confirmation Bias? Uwe Peters Received: 7 May 2019 / Accepted: 27 March 2020 © The Author(s) 2020 Abstract Confirmation bias is one of the most widely discussed epistemically problematic cognitions, challenging reliable belief formation and the correction of inaccurate views. Given its problematic nature, it remains unclear why the bias evolved and is still with us today. To offer an explanation, several philosophers and scientists have argued that the bias is in fact adaptive. I critically discuss three recent proposals of this kind before developing a novel alternative, what I call the ‘reality-matching account’. According to the account, confirmation bias evolved because it helps us influence people and social structures so that they come to match our beliefs about them. This can result in significant developmental and epistemic benefits for us and other people, ensuring that over time we don’t become epistemically disconnected from social reality but can navigate it more easily. While that might not be the only evolved function of confirmation bias, it is an important one that has so far been neglected in the theorizing on the bias. In recent years, confirmation bias (or ‘myside bias’), that is, people’s tendency to search for information that supports their beliefs and ignore or distort data contradicting them (Nickerson 1998; Myers and DeWall 2015: 357), has frequently been discussed in the media, the sciences, and philosophy. The bias has, for example, been mentioned in debates on the spread of “fake news” (Stibel 2018), on the “replication crisis” in the sciences (Ball 2017; Lilienfeld 2017), the impact of cognitive diversity in philosophy (Peters 2019a; Peters et al. -

Cognitive Dissonance Approach

Explaining Preferences from Behavior: A Cognitive Dissonance Approach Avidit Acharya, Stanford University Matthew Blackwell, Harvard University Maya Sen, Harvard University The standard approach in positive political theory posits that action choices are the consequences of preferences. Social psychology, in particular cognitive dissonance theory, suggests the opposite: preferences may themselves be affected by action choices. We present a framework that applies this idea to three models of political choice: (1) one in which partisanship emerges naturally in a two-party system despite policy being multidimensional, (2) one in which interactions with people who express different views can lead to empathetic changes in political positions, and (3) one in which ethnic or racial hostility increases after acts of violence. These examples demonstrate how incorporating the insights of social psychology can expand the scope of formalization in political science. What are the origins of interethnic hostility? How do young people become lifelong Republicans or Democrats? What causes people to change deeply held political preferences? These questions are the bedrock of many inquiries within political science. Numerous articles and books study the determinants of racism, partisanship, and preference change. Throughout, a theme linking these seemingly disparate literatures is the formation and evolution ... Our framework builds on an insight originating in social psychology with the work of Festinger (1957) that suggests that actions could affect preferences through cognitive dissonance. One key aspect of cognitive dissonance theory is that individuals experience a mental discomfort after taking actions that appear to be in conflict with their starting preferences. To minimize or avoid this discomfort, they change their preferences to more closely align with their actions. -

Your Journey Is Loading. Scroll Ahead to Continue

Your journey is loading. Scroll ahead to continue. amizade.org • @AmizadeGSL Misinformation and Disinformation in the time of COVID-19 | VSL powered by Amizade Welcome! It is with great pleasure that Amizade welcomes you to what will be a week of learning around the abundance of misinformation and disinformation in both traditional media and social media. We are so excited to share this unique opportunity with you during what is a challenging time in human history. We have more access to information and knowledge today than at any point in human history. However, in our increasingly hyper-partisan world, it has become more difficult to find useful and accurate information and distinguish between what is true and false. There are several reasons for this. Social media has given everyone in the world, if they so desire, a platform to spread information throughout their social networks. Many websites, claiming to be valid sources of news, use salacious headlines in order to get clicks and advertising dollars. Many “legitimate” news outlets skew research and data to fit their audiences’ political beliefs. Finally, there are truly bad actors, intentionally spreading false information, in order to sow unrest and further divide people. It seems that as we practice social distancing, connecting with the world has become more important than ever before. At the same time, it is exceedingly important to be aware of the information that you are consuming and sharing so that you are a part of the solution to the ongoing flood of false information. This program’s goal is to do just that: to connect you with the tools and resources you need to push back when you come across incorrect or intentionally misleading information, to investigate your own beliefs and biases, and to provide you with the tools to become a steward of good information. -

Cognitive Dissonance Evidence from Children and Monkeys Louisa C

PSYCHOLOGICAL SCIENCE Research Article The Origins of Cognitive Dissonance: Evidence From Children and Monkeys Louisa C. Egan, Laurie R. Santos, and Paul Bloom Yale University ABSTRACT: In a study exploring the origins of cognitive dissonance, preschoolers and capuchins were given a choice between two equally preferred alternatives (two different stickers and two differently colored M&M’s, respectively). On the basis of previous research with adults, this choice was thought to cause dissonance because it conflicted with subjects’ belief that the two options were equally valuable. We therefore expected subjects to change their attitude toward the unchosen alternative, deeming it less valuable. We then presented subjects with a choice between the unchosen option and an option that was originally as attractive as both options in the first choice. Both groups preferred the novel over the unchosen option in this experimental condition, but not in a control condition in which they did not take part in the first decision. ... of psychology, including attitudes and prejudice (e.g., Leippe & Eisenstadt, 1994), moral cognition (e.g., Tsang, 2002), decision making (e.g., Akerlof & Dickens, 1982), happiness (e.g., Lyubomirsky & Ross, 1999), and therapy (Axsom, 1989). Unfortunately, despite long-standing interest in cognitive dissonance, there is still little understanding of its origins, both developmentally over the life course and evolutionarily as the product of human phylogenetic history. Does cognitive-dissonance reduction begin to take hold only after much experience with the aversive consequences of dissonant cognitions, or does it begin earlier in development? Similarly, are humans unique in their drive to avoid dissonant cognitions, or is this process older evolutionarily, perhaps shared with nonhuman primate species? To date, little research has investigated whether children or -

Teaching Aid 4: Challenging Conspiracy Theories

Challenging Conspiracy Theories Teaching Aid 4
1. Increasing Knowledge about Jews and Judaism
2. Overcoming Unconscious Biases
3. Addressing Anti-Semitic Stereotypes and Prejudice
4. Challenging Conspiracy Theories
5. Teaching about Anti-Semitism through Holocaust Education
6. Addressing Holocaust Denial, Distortion and Trivialization
7. Anti-Semitism and National Memory Discourse
8. Dealing with Anti-Semitic Incidents
9. Dealing with Online Anti-Semitism
10. Anti-Semitism and the Situation in the Middle East
What is a conspiracy theory? “A belief that some covert but influential organization is responsible for an unexplained event.” SOURCE: Concise Oxford English Dictionary, ninth edition
Challenging Conspiracy Theories
The world is full of challenging complexities, one of which is being able to identify fact from fiction. People are inundated with information from family, friends, community and online sources. Political, economic, cultural and other forces shape the narratives we are exposed to daily, and hidden ...
Such explanatory models reject accepted narratives, and official explanations are sometimes regarded as further evidence of the conspiracy. Conspiracy theories build on distrust of established institutions and processes, and often implicate groups that are associated with nega- ...
... of conspiracy theories presents teachers with a challenge: to guide students to identify, confront and refute such theories.
This teaching aid will look at how conspiracy theories function, how they may relate to anti-Semitism, and outline -

Confirmation Bias

CONFIRMATION BIAS PATRICK BARRY* ABSTRACT Supreme Court confirmation hearings are vapid. Supreme Court confirmation hearings are pointless. Supreme Court confirmation hearings are harmful to a citizenry already cynical about government. Sentiments like these have been around for decades and are bound to resurface each time a new nomination is made. This essay, however, takes a different view. It argues that Supreme Court confirmation hearings are a valuable form of cultural expression, one that provides a unique record of, as the theater critic Martin Esslin might say, a nation thinking about itself in public. The theatre is a place where a nation thinks in public in front of itself. – Martin Esslin1 The Supreme Court confirmation process – once a largely behind-the-scenes affair – has lately moved front-and-center onto the public stage. – Laurence H. Tribe2 INTRODUCTION That Supreme Court confirmation hearings are televised unsettles some legal commentators. Constitutional law scholar Geoffrey Stone, for example, worries that publicly performed hearings encourage grandstanding; knowing their constituents will be watching, senators unhelpfully repeat questions they think the nominee will try to evade – the goal being to make the nominee look bad and themselves look good.3 Stone even suggests the country might be * Clinical Assistant Professor, University of Michigan Law School. © 2017. I thank for their helpful comments and edits Enoch Brater, Martha Jones, Eva Foti-Pagan, Sidonie Smith, and James Boyd White. I am also indebted to Alexis Bailey and Hannah Hoffman for their wonderful work as research assistants. 1. MARTIN ESSLIN, AN ANATOMY OF DRAMA 101 (1977). 2. Laurence H. Tribe, Foreword to PAUL SIMON, ADVICE AND CONSENT: CLARENCE THOMAS, ROBERT BORK AND THE INTRIGUING HISTORY OF THE SUPREME COURT’S NOMINATION BATTLES 13 (1992). -

Self-Perception: An Alternative Interpretation of Cognitive Dissonance Phenomena

Psychological Review 1967, Vol. 74, No. 3, 183-200 SELF-PERCEPTION: AN ALTERNATIVE INTERPRETATION OF COGNITIVE DISSONANCE PHENOMENA DARYL J. BEM Carnegie Institute of Technology A theory of self-perception is proposed to provide an alternative interpretation for several of the major phenomena embraced by Festinger's theory of cognitive dissonance and to explicate some of the secondary patterns of data that have appeared in dissonance experiments. It is suggested that the attitude statements which comprise the major dependent variables in dissonance experiments may be regarded as interpersonal judgments in which the observer and the observed happen to be the same individual and that it is unnecessary to postulate an aversive motivational drive toward consistency to account for the attitude change phenomena observed. Supporting experiments are presented, and metatheoretical contrasts between the "radical" behavioral approach utilized and the phenomenological approach typified by dissonance theory are discussed. If a person holds two cognitions that are inconsistent with one another, he will experience the pressure of an aversive motivational state called cognitive dissonance, a pressure which he will seek to remove, among other ways, by altering one of the two "dissonant" cognitions. This proposition is the heart of Festinger's (1957) theory of cognitive dissonance, a theory which ... ducted within the framework of dissonance theory; and, in the 5 years since the appearance of their book, every major social-psychological journal has averaged at least one article per issue probing some prediction "derived" from the basic propositions of dissonance theory. In popularity, even the empirical law of effect now appears to be running a poor second.