Digital Image Processing (CS/ECE 545) Lecture 9: Color Images (Part 2) & Introduction to Spectral Techniques (Fourier Transform, DFT, DCT)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Models

Color Models, Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
  – Additive (RGB) or subtractive (CMYK)
  – Separation of luminance and chromaticity
  – Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l’Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• The irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should always end up at the same point)

Color Gamut
• The range of colors that a display device can represent

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is ‘bloody red’ in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|-----------|---------|----------------|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
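The slide on converting between RGB and CMY does not reproduce the formula in this excerpt. The sketch below assumes the usual textbook relation for normalized channels, where each subtractive primary is the complement of the additive primary it absorbs (C = 1 − R, M = 1 − G, Y = 1 − B). The function names and the simple gray-component extraction for K are illustrative choices, not taken from the slides.

```python
def rgb_to_cmy(r, g, b):
    """Convert normalized RGB (each in [0, 1]) to CMY by complementing each channel."""
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy: complement each channel again."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


def cmy_to_cmyk(c, m, y):
    """Move the gray component shared by C, M, and Y into K.

    Assumption: naive gray-component replacement; real print workflows
    use device profiles rather than this formula.
    """
    k = min(c, m, y)
    if k >= 1.0:
        # Pure black: no colored ink is needed.
        return (0.0, 0.0, 0.0, 1.0)
    scale = 1.0 - k
    return ((c - k) / scale, (m - k) / scale, (y - k) / scale, k)


if __name__ == "__main__":
    # Pure red absorbs nothing in cyan's band, so C = 0 and M = Y = 1.
    print(rgb_to_cmy(1.0, 0.0, 0.0))
    print(cmy_to_cmyk(1.0, 1.0, 1.0))
```

This agrees with the dye table: cyan ink absorbs red, so a color with full red content needs no cyan.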

An Improved SPSIM Index for Image Quality Assessment

Symmetry, Article: An Improved SPSIM Index for Image Quality Assessment

Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus

Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.) * Correspondence: [email protected]; Tel.: +48-32-2371066

Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.

Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures

Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518

1. Introduction

Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

What Resolution Should Your Images Be?

What Resolution Should Your Images Be?

The best way to determine the optimum resolution is to think about the final use of your images. For publication you’ll need the highest resolution, for desktop printing lower, and for web or classroom use, lower still. The following table is a general guide; detailed explanations follow.

| Use | Pixel Size | Resolution | Preferred File Format | Approx. File Size |
|-----|------------|------------|-----------------------|-------------------|
| Projected in class | About 1024 pixels wide for a horizontal image; or 768 pixels high for a vertical one | 102 DPI | JPEG | 300–600 K |
| Web site | About 400–600 pixels wide for a large image; 100–200 for a thumbnail image | 72 DPI | JPEG | 20–200 K |
| Printed in a book or art magazine | Multiply intended print size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1800 x 1200 pixels. | 300 DPI | EPS or TIFF | 6–10 MB |
| Printed on a laserwriter | Multiply intended print size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1200 x 800 pixels. | 200 DPI | EPS or TIFF | 2–3 MB |

Digital Camera Photos

Digital cameras have a range of preset resolutions which vary from camera to camera.

| Designation | Resolution | Max. image size at 300 DPI | Printable size on a color printer |
|-------------|------------|----------------------------|-----------------------------------|
| 4 Megapixels | 2272 x 1704 pixels | 7.5” x 5.7” | 12” x 9” |
| 3 Megapixels | 2048 x 1536 pixels | 6.8” x 5” | 11” x 8.5” |
| 2 Megapixels | 1600 x 1200 pixels | 5.3” x 4” | 6” x 4” |
| 1 Megapixel | 1024 x 768 pixels | 3.5” x 2.5” | 5” x 3 |

If you can, you generally want to shoot larger than you need, then sharpen the image and reduce its size in Photoshop.
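The rule "multiply intended print size by resolution" is simple arithmetic, and a short sketch makes it easy to check the table entries. The function names below are illustrative, not from the guide.

```python
def pixels_for_print(width_in, height_in, dpi):
    """Pixel dimensions needed to print at the given size (inches) and resolution."""
    return (round(width_in * dpi), round(height_in * dpi))


def print_size_at(width_px, height_px, dpi):
    """Largest print size in inches that an image supports at the given resolution."""
    return (width_px / dpi, height_px / dpi)


if __name__ == "__main__":
    # The guide's 6" x 4" example at book and laser-printer resolutions.
    print(pixels_for_print(6, 4, 300))
    print(pixels_for_print(6, 4, 200))
    # A 4-megapixel frame (2272 x 1704) at 300 DPI.
    print(print_size_at(2272, 1704, 300))
```

Running the inverse calculation on the 4-megapixel row gives roughly 7.6” x 5.7”, which agrees with the table's rounded 7.5” x 5.7”.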

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space

A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model. In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept

Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.

Camera Raw Workflows

RAW WORKFLOWS: FROM CAMERA TO POST

Copyright 2007, Jason Rodriguez, Silicon Imaging, Inc.

Introduction
• What is a RAW file format, and what cameras shoot to these formats?
• How does working with RAW file-format cameras change the way I shoot?
• What changes are happening inside the camera I need to be aware of, and what happens when I go into post?
• What are the available post paths? Is there just one, or are there many ways to reach my end goals?
• What post tools support RAW file format workflows?
• How do RAW codecs like CineForm RAW enable me to work faster and with more efficiency?

What is a RAW file?
• In simplest terms, it is the native digital data off the sensor's A/D converter with no further destructive DSP processing applied
• It is derived from a photometrically linear data source, or can be reconstructed to produce data that directly correspond to the light that was captured by the sensor at the time of exposure (i.e., LOG->Lin reverse LUT)
• Photometrically linear: a 1:1 relationship between photons and digital values, so doubling of light means doubling of the digitally encoded value
• In a film analogy, it would be termed a “digital negative” because it is a latent representation of the light that was captured by the sensor (up to the limit of the full-well capacity of the sensor)
• “RAW” cameras include Thomson Viper, Arri D-20, Dalsa Evolution 4K, Silicon Imaging SI-2K, Red One, Vision Research Phantom, noXHD, Reel-Stream
• “Quasi-RAW” cameras include the Panavision Genesis

In-Camera Processing
• Most non-RAW cameras on the market record to 8-bit YUV formats

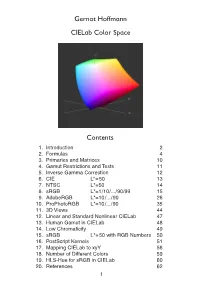

CIELab Color Space

Gernot Hoffmann, CIELab Color Space

Contents
1. Introduction
2. Formulas
3. Primaries and Matrices
4. Gamut Restrictions and Tests
5. Inverse Gamma Correction
6. CIE L*=50
7. NTSC L*=50
8. sRGB L*=1/10/.../90/99
9. AdobeRGB L*=10/.../90
10. ProPhotoRGB L*=10/.../90
11. 3D Views
12. Linear and Standard Nonlinear CIELab
13. Human Gamut in CIELab
14. Low Chromaticity
15. sRGB L*=50 with RGB Numbers
16. PostScript Kernels
17. Mapping CIELab to xyY
18. Number of Different Colors
19. HLS-Hue for sRGB in CIELab
20. References

1.1 Introduction

CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X,Y,Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*,a*,b*. The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces. The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*,a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
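Two statements in this introduction translate directly into arithmetic: the xy chromaticity is a projection of XYZ that discards luminance, and deltaE is a Euclidean distance between two (L*, a*, b*) triples. The sketch below assumes the standard projection x = X/(X+Y+Z), y = Y/(X+Y+Z); the function names are illustrative and do not come from Hoffmann's document.

```python
import math


def xyz_to_xy(X, Y, Z):
    """Project tristimulus values onto the xy chromaticity plane."""
    total = X + Y + Z
    if total == 0:
        raise ValueError("chromaticity is undefined for X = Y = Z = 0")
    return (X / total, Y / total)


def delta_e(lab1, lab2):
    """Color difference as the Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(lab1, lab2)


if __name__ == "__main__":
    # Scaling XYZ changes the luminance but leaves the chromaticity (nearly)
    # unchanged, which is why "the luminance is missing" from the xy diagram.
    print(xyz_to_xy(76.04, 80.0, 87.12))
    print(xyz_to_xy(38.02, 40.0, 43.56))
    print(delta_e((50.0, 0.0, 0.0), (50.0, 3.0, 4.0)))
```

Later refinements of deltaE weight the terms differently; the plain Euclidean form shown here is the one the introduction describes.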

Invention of Digital Photograph

Invention of Digital photograph

Digital photography uses cameras containing arrays of electronic photodetectors to capture images focused by a lens, as opposed to an exposure on photographic film. The captured images are digitized and stored as a computer file ready for further digital processing, viewing, electronic publishing, or digital printing. Until the advent of such technology, photographs were made by exposing light sensitive photographic film and paper, which was processed in liquid chemical solutions to develop and stabilize the image. Digital photographs are typically created solely by computer-based photoelectric and mechanical techniques, without wet bath chemical processing. The first consumer digital cameras were marketed in the late 1990s.[1] Professionals gravitated to digital slowly, and were won over when their professional work required using digital files to fulfill the demands of employers and/or clients, for faster turnaround than conventional methods would allow.[2] Starting around 2000, digital cameras were incorporated in cell phones and in the following years, cell phone cameras became widespread, particularly due to their connectivity to social media websites and email. Since 2010, the digital point-and-shoot and DSLR formats have also seen competition from the mirrorless digital camera format, which typically provides better image quality than the point-and-shoot or cell phone formats but comes in a smaller size and shape than the typical DSLR. Many mirrorless cameras accept interchangeable lenses and have advanced features through an electronic viewfinder, which replaces the through-the-lens finder image of the SLR format. While digital photography has only relatively recently become mainstream, the late 20th century saw many small developments leading to its creation.

Preparing Images for Delivery

TECHNICAL PAPER: Preparing Images for Delivery

TABLE OF CONTENTS
• How to prepare RGB files for CMYK
• Soft proofing and gamut warning
• Final image sizing
• Image sharpening
• Converting to CMYK
• What about providing RGB files?
• The proof
• Marking your territory
• File formats for delivery
• Check list for file delivery
• Additional resources

So, you’ve done a great job for your client. You’ve created a nice image that you both agree meets the requirements of the layout. Now what do you do? You deliver it (so you can bill it!). But, in this digital age, how you prepare an image for delivery can make or break the final reproduction. Guess who will get the blame if the image’s reproduction is less than satisfactory? Do you even need to guess?

What should photographers do to ensure that their images reproduce well in print? Take some precautions and learn the lingo so you can communicate, because a lack of crystal-clear communication is at the root of most every problem on press.

It should be no surprise that knowing what the client needs is a requirement of professional photographers. But does that mean a photographer in the digital age must become a prepress expert? Kind of, if only to know exactly what to supply your clients.

There are two perfectly legitimate approaches to the problem of supplying digital files for reproduction. One approach is to supply RGB files, and the other is to take responsibility for supplying CMYK files. Either approach is valid, each with positives and negatives.

Dalmatian's Definitions

THE BLACK & WHITE PAPERS: DALMATIAN’S DEFINITIONS

8 BIT: Bit depth determines the number of possible colors or tones that can be assigned to each pixel. In 8 bit files, 1 of 256 tones is assigned to each pixel. 8-bit JPEG is the default setting for most cameras; whenever possible, set the camera to RAW capture for the best image capture possible.

16 BIT: In 16 bit files, 1 of 65,536 tones is assigned to each pixel. 16 bit files have the greatest range of tonalities and a more realistic interpretation of continuous tone. Note the increase in tonalities from 8 bit to 16 bit. If your aim is the quality of the image, then 16 bit is a must.

ADOBE 1998 COLOR SPACE: Adobe ‘98 is the preferred RGB color space; it places the captured, and therefore printable, colors within larger parameters, rendering approximately 50% of the visible color space of the human eye. Whenever possible, change the camera’s default sRGB setting to Adobe 1998 in order to increase available color capture. When an image is captured in sRGB, it is best to leave the color space unchanged so color rendering is unaffected.

ARCHIVAL: Archival materials are those with a neutral pH balance that will not degrade over time and are resistant to UV fading.
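The tone counts quoted for 8 bit and 16 bit files follow from the number of distinct values an n-bit integer can hold, 2^n. A minimal sketch, assuming the counts are per channel (the glossary does not say so explicitly) and using an illustrative function name:

```python
def tones(bits):
    """Number of distinct levels representable with the given bit depth."""
    return 2 ** bits


if __name__ == "__main__":
    print(tones(8))                # levels in an 8 bit file
    print(tones(16))               # levels in a 16 bit file
    print(tones(16) // tones(8))   # how many 16 bit levels fall within one 8 bit step
```

Each 8 bit step spans 256 of the 16 bit levels, which is the "increase in tonalities" the definition points to.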

Specification of sRGB

How to interpret the sRGB color space (specified in IEC 61966-2-1) for ICC profiles

A. Key sRGB color space specifications (see IEC 61966-2-1 https://webstore.iec.ch/publication/6168 for more information).

1. Chromaticity co-ordinates of primaries: R: x = 0.64, y = 0.33, z = 0.03; G: x = 0.30, y = 0.60, z = 0.10; B: x = 0.15, y = 0.06, z = 0.79. Note: These are defined in ITU-R BT.709 (the television standard for HDTV capture).

2. Reference display ‘Gamma’: Approximately 2.2 (see precise specification of color component transfer function below).

3. Reference display white point chromaticity: x = 0.3127, y = 0.3290, z = 0.3583 (equivalent to the chromaticity of CIE Illuminant D65).

4. Reference display white point luminance: 80 cd/m² (includes veiling glare). Note: The reference display white point tristimulus values are: Xabs = 76.04, Yabs = 80, Zabs = 87.12.

5. Reference veiling glare luminance: 0.2 cd/m² (this is the reference viewer-observed black point luminance). Note: The reference viewer-observed black point tristimulus values are assumed to be: Xabs = 0.1901, Yabs = 0.2, Zabs = 0.2178. These values are not specified in IEC 61966-2-1, and are an additional interpretation provided in this document.

6. Tristimulus value normalization: The CIE 1931 XYZ values are scaled from 0.0 to 1.0. Note: The following scaling equations can be used. These equations are not provided in IEC 61966-2-1, and are an additional interpretation provided in this document.

$$X_N = \frac{76.04\,(X_{abs} - 0.1901)}{80\,(76.04 - 0.1901)} = 0.0125313\,(X_{abs} - 0.1901)$$

$$Y_N = \frac{Y_{abs} - 0.2}{80 - 0.2} = 0.0125313\,(Y_{abs} - 0.2)$$

$$Z_N = \frac{87.12\,(Z_{abs} - 0.2178)}{80\,(87.12 - 0.2178)} = 0.0125313\,(Z_{abs} - 0.2178)$$
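The normalization in item 6 subtracts the black point, divides by the white-minus-black range, and scales by the white point relative to its luminance, which reduces to the common factor 0.0125313 for all three channels. A sketch under those assumptions, using only the white and black point values listed in items 4 and 5 (the function and constant names are illustrative):

```python
# Reference display white and viewer-observed black tristimulus values
# from items 4 and 5 above.
WHITE_ABS = (76.04, 80.0, 87.12)
BLACK_ABS = (0.1901, 0.2, 0.2178)


def normalize_xyz(x_abs, y_abs, z_abs):
    """Scale absolute XYZ so that black maps to 0 and white luminance Y maps to 1."""
    y_white = WHITE_ABS[1]
    normalized = []
    for value, white, black in zip((x_abs, y_abs, z_abs), WHITE_ABS, BLACK_ABS):
        # (white / y_white) keeps the white point's chromaticity after scaling.
        normalized.append((white / y_white) * (value - black) / (white - black))
    return tuple(normalized)


if __name__ == "__main__":
    print(normalize_xyz(*BLACK_ABS))  # all components are zero at the black point
    print(normalize_xyz(*WHITE_ABS))  # Y is 1 at the white point
```

At the white point only Y reaches 1; X and Z come out near 0.9505 and 1.089, so the chromaticity of the white point is preserved.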

Computational RYB Color Model and Its Applications

IIEEJ Transactions on Image Electronics and Visual Computing Vol.5 No.2 (2017), Special Issue on Application-Based Image Processing Technologies

Computational RYB Color Model and its Applications

Junichi SUGITA† (Member), Tokiichiro TAKAHASHI†† (Member)
†Tokyo Healthcare University, ††Tokyo Denki University/UEI Research

<Summary> The red-yellow-blue (RYB) color model is a subtractive model based on pigment color mixing and is widely used in art education. In the RYB color model, red, yellow, and blue are defined as the primary colors. In this study, we apply this model to computers by formulating a conversion between the red-green-blue (RGB) and RYB color spaces. In addition, we present a class of compositing methods in the RYB color space. Moreover, we prescribe the appropriate uses of these compositing methods in different situations. By using RYB color compositing, paint-like compositing can be easily achieved. We also verified the effectiveness of our proposed method by using several experiments and demonstrated its application on the basis of RYB color compositing.

Keywords: RYB, RGB, CMY(K), color model, color space, color compositing

1. Introduction

Most people have had the experience of creating an arbitrary color by mixing different color pigments on a palette or a canvas. The red-yellow-blue (RYB) color model proposed [...] human perception system and computer displays, most computer applications use the red-green-blue (RGB) color model 3); however, this model is not comprehensible for many people who are not trained in the RGB color model because of its use of additive color mixing. As shown in Fig.

Yasser Syed & Chris Seeger Comcast/NBCU

Usage of Video Signaling Code Points for Automating UHD and HD Production-to-Distribution Workflows

Yasser Syed & Chris Seeger, Comcast/NBCU (Comcast TPX, VIPER Architecture)

Simpler Times - Delivering to TVs
• SD - HD Conversions
• Resolution, Standard Dynamic Range and 601/709 Color Spaces
• 4:3 - 16:9 Conversions
• 4:2:0 - 8-bit YUV video
[Figure labels: 720, 486, 601; HD, 1920, 1080, 1080i, 709]

What is UHD / 4K, HFR, HDR, WCG?
[Figure: higher resolution (4K), "more pixels"; higher frame rate (60p), "faster pixels"; high dynamic range (brighter and darker pixels) and wide color gamut (more colorful pixels), "better pixels"; enabled by Dolby Vision]

Volume of Scripted Workflows is Growing
Not considering:
• Live Events (news/sports)
• Localized events but with wider distributions
• User-generated content

More Formats to Distribute to More Devices
[Figure labels: Standard Definition, Broadcast/Cable, IPTV, WiFi, DVDs/Files]
• More display devices: TVs, Tablets, Mobile Phones, Laptops
• More display formats: SD, HD, HDR, 4K, 8K, 10-bit, 8-bit, 4:2:2, 4:2:0
• More distribution paths: Broadcast/Cable, IPTV, WiFi, Laptops
• Integration/Compositing at receiving device

Signal Normalization
• Compositing, grading, editing depends on proper signal normalization of all source files (i.e. Still Graphics & Titling, Bugs, Tickers, Lower-Thirds, weather graphics, etc.)
• ALL content must be moved into a single color volume space.
• Transformation from different colourspaces (BT.601, BT.709, BT.2020) and transfer functions (Gamma 2.4, PQ, HLG)
• Correct signaling allows automation of conversion settings.
[Figure: "Automated logic for conversion in". Labels: SDR BT.709; HLG BT.2100; PQ BT.2100; Requires Conversion; Native; Normalized Compositing Timeline/Switcher/Transcoder - PQ-BT.2100; Convert; Native]
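The last slide's point, that correct signaling allows conversion settings to be chosen automatically, can be illustrated with a small lookup. This is a hypothetical sketch, not the presenters' implementation: the label strings mirror the slide's figure (SDR/BT.709, HLG/BT.2100, PQ/BT.2100), while `TARGET`, `conversion_plan`, and the returned strings are invented for illustration. Real workflows would read numeric code points from the stream metadata.

```python
# Hypothetical normalization target, taken from the slide's
# "Normalized Compositing ... PQ-BT.2100" label.
TARGET = ("PQ", "BT.2100")


def conversion_plan(transfer, standard):
    """Decide whether a source needs conversion before normalized compositing.

    `transfer` and `standard` are the signaled labels of the source,
    e.g. ("SDR", "BT.709"); the label vocabulary is an assumption.
    """
    if (transfer, standard) == TARGET:
        return "native"
    return f"convert {transfer}/{standard} to {TARGET[0]}/{TARGET[1]}"


if __name__ == "__main__":
    for source in [("SDR", "BT.709"), ("HLG", "BT.2100"), ("PQ", "BT.2100")]:
        print(source, "->", conversion_plan(*source))
```

The design point is that the decision depends only on the signaled metadata, so a mislabeled source would be routed incorrectly, which is why the slide stresses correct signaling.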