Game System Architecture Zachary Kruse & Kevin Davison History of Gaming Consoles

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Nova College-Wide Course Content Summary Ite 170 - Multimedia Software (3 Cr.)

Revised 8/2012 NOVA COLLEGE-WIDE COURSE CONTENT SUMMARY ITE 170 - MULTIMEDIA SOFTWARE (3 CR.) Course Description Explores technical fundamentals of creating multimedia projects with related hardware and software. Students will learn to manage resources required for multimedia production and evaluation and techniques for selection of graphics and multimedia software. Lecture 3 hours per week. General Purpose In this course students will learn the concepts, design and implementation of multimedia for the web. Students will create professional websites which incorporate images, sound, video and animation. The materials created will correspond to standards for Web accessibility and copyright issues. Course Prerequisites/Corequisites Prerequisite: ITE 115 Course Objectives Upon completion of this course, the student will be able to: a) Plan and organize a multimedia web site b) Understand the design concepts for creating a multimedia website c) Use a web authoring tool to create a multimedia web site d) Understand the design concepts related to creating and using graphics for the web e) Use graphics software to create and edit images for the web f) Understand the design concepts related to creating and using animation, audio and video for the web g) Use animation software to create and edit animations for the web h) Use software tools to publish and maintain a multimedia web site Major Topics to be Included Plan and organize a multimedia web site User characteristics and their effect upon design considerations Principles of good design -

A History of Video Game Consoles Introduction the First Generation

A History of Video Game Consoles By Terry Amick – Gerald Long – James Schell – Gregory Shehan Introduction Today video games are a multibillion dollar industry. They are in practically all American households. They are a major driving force in electronic innovation and development. Though, you would hardly guess this from their modest beginning. The first video games were played on mainframe computers in the 1950s through the 1960s (Winter, n.d.). Arcade games would be the first glimpse for the general public of video games. Magnavox would produce the first home video game console featuring the popular arcade game Pong for the 1972 Christmas Season, released as Tele-Games Pong (Ellis, n.d.). The First Generation Magnavox Odyssey Rushed into production the original game did not even have a microprocessor. Games were selected by using toggle switches. At first sales were poor because people mistakenly believed you needed a Magnavox TV to play the game (GameSpy, n.d., para. 11). By 1975 annual sales had reached 300,000 units (Gamester81, 2012). Other manufacturers copied Pong and began producing their own game consoles, which promptly got them sued for copyright infringement (Barton, & Loguidice, n.d.). The Second Generation Atari 2600 Atari released the 2600 in 1977. Although not the first, the Atari 2600 popularized the use of a microprocessor and game cartridges in video game consoles. The original device had an 8-bit 1.19MHz 6507 microprocessor (“The Atari”, n.d.), two joy sticks, a paddle controller, and two game cartridges. Combat and Pac Man were included with the console. In 2007 the Atari 2600 was inducted into the National Toy Hall of Fame (“National Toy”, n.d.). -

Image Compression, Comparison Between Discrete Cosine Transform and Fast Fourier Transform and the Problems Associated with DCT

Image Compression, Comparison between Discrete Cosine Transform and Fast Fourier Transform and the problems associated with DCT Imdad Ali Ismaili 1, Sander Ali Khowaja 2, Waseem Javed Soomro 3 1Institute of Information and Communication Technology, University of Sindh, Jamshoro, Sindh, Pakistan 2Institute of Information and Communication Technology, University of Sindh, Jamshoro, Sindh, Pakistan Abstract - The research article focuses on the Image Compression techniques such as Discrete Cosine Transform (DCT) and Fast Fourier Transform (FFT). These techniques are chosen because of their vast use in image processing field, JPEG (Joint Photographic Experts Group) is one of the examples of compression technique which uses DCT. The Research compares the two compression techniques based on DCT and FFT and compare their results using MATLAB software, Graphical User Interface (GUI). These results are based on two compression techniques with different rates of compression i.e. Compression rates are 90%, 60%, 30% and 5%. The technique allows compressing any picture format to JPG format.

much higher than the calculations mentioned above; this increases the bandwidth requirement of the channel which is very costly. This is the challenging part for the researchers to transmit these digital signals through limited bandwidth communication channel, most of the times the way is found to overcome this obstacle but sometimes it is impossible to send these digital signals in its raw form. Though there has been a revolution in the increased capacity and decreased cost of storage over the past years but the requirement of data storage and data processing applications is growing explosively to outpace this achievement.[8] -

Network Requirements for High-Speed Real-Time Multimedia

NETWORK REQUIREMENTS FOR HIGH-SPEED REAL-TIME MULTIMEDIA DATA STREAMS Andrei Sukhov1), Prasad Calyam2), Warren Daly3), Alexander Iliin4) 1) Laboratory of Network Technologies, Samara Academy of Transport Engineering, Samara, Russia 2) Department of Electrical and Computer Engineering, Ohio State University, Ohio USA 3) HEANet Ltd, Dublin, Ireland 4) Russian Institute for Public Network, Moscow, Russia Abstract High-speed real-time multimedia applications such as Videoconferencing and HDTV have already become popular in many end-user communities. Developing better network protocols and systems that support such applications requires a sound understanding of the voice and video traffic characteristics and factors that ultimately affect end-user perception of audiovisual quality. In this paper we attempt to propose an analytical model for the various network factors whose interdependencies need to be modeled for characterizing end-user perception of audiovisual quality for high-speed real-time multimedia data streams. Towards validating our theory presented in this paper, we describe our initial results and future plans that consist of conducting a series of experiments and potential modifications to our proposed model. As the portion of middle and high-speed RTP (Real-Time Protocols) applications in the total communication over the Internet grows, it is important to understand the behavior of such traffic over the TCP/IP networks. The impetuous growth of traffic volumes and the rapid advances in the technologies of new-generation networks like IPv6 have made it possible for use of such high-speed applications, but with new demands on the network to deliver superior Quality of Service (QoS) to these applications. 
Our research focuses on the area of quality criteria for middle and high-speed network applications like • Video Conferencing / Streaming • Grid applications • P2P applications Audio- and Videoconferencing are two major multimedia communications that are defined by various international standards such as H.320, H.323 and SIP. -

Multimedia in Teacher Education: Perceptions & Uses

Journal of Education and Practice www.iiste.org ISSN 2222-1735 (Paper) ISSN 2222-288X (Online) Vol 3, No 1, 2012 Multimedia in Teacher Education: Perceptions & Uses Gourav Mahajan* Sri Sai College of Education, Badhani, Pathankot,Punjab, India * E-mail of the corresponding author: [email protected] Abstract Educational systems around the world are under increasing pressure to use the new technologies to teach students the knowledge and skills they need in the 21st century. Education is at the confluence of powerful and rapidly shifting educational, technological and political forces that will shape the structure of educational systems across the globe for the remainder of this century. Many countries are engaged in a number of efforts to effect changes in the teaching/learning process to prepare students for an information and technology based society. Multimedia provide an array of powerful tools that may help in transforming the present isolated, teacher-centred and text-bound classrooms into rich, student-focused, interactive knowledge environments. The schools must embrace the new technologies and appropriate multimedia approach for learning. They must also move toward the goal of transforming the traditional paradigm of learning. Teacher education institutions may either assume a leadership role in the transformation of education or be left behind in the swirl of rapid technological change. For education to reap the full benefits of multimedia in learning, it is essential that pre-service and in-service teachers have basic skills and competencies required for using multimedia. -

Video Games: Changing the Way We Think of Home Entertainment

Rochester Institute of Technology RIT Scholar Works Theses 2005 Video games: Changing the way we think of home entertainment Eri Shulga Follow this and additional works at: https://scholarworks.rit.edu/theses Recommended Citation Shulga, Eri, "Video games: Changing the way we think of home entertainment" (2005). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. Video Games: Changing The Way We Think Of Home Entertainment by Eri Shulga Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Information Technology Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences Copyright 2005 Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences Master of Science in Information Technology Thesis Approval Form Student Name: Eri Shulga Thesis Title: Video Games: Changing the Way We Think of Home Entertainment Thesis Committee Name Signature Date Evelyn Rozanski, Ph.D Evelyn Rozanski Chair Prof. Andy Phelps Andrew Phelps Committee Member Anne Haake, Ph.D Anne R. Haake Committee Member Thesis Reproduction Permission Form Rochester Institute of Technology B. Thomas Golisano College of Computing and Information Sciences Master of Science in Information Technology Video Games: Changing the Way We Think Of Home Entertainment I, Eri Shulga, hereby grant permission to the Wallace Library of the Rochester Institute of Technology to reproduce my thesis in whole or in part. -

History of Computer Game Design Our Collaboration Will Build on Projects That We Two Have Been Engaged in for Some Time

How They Got Game: The History and Culture of Interactive Simulations and Video Games http://www.stanford.edu/dept/HPS/VideoGameProposal/ Principal Investigators Tim Lenoir, History of Science, email: [email protected] Henry Lowood, Stanford University Libraries, email: [email protected] Research and Development Team Carlos Seligo, ATS Freshman Sophomore Programs, email: [email protected] Carlos co-designed the Word and the World sites. His Blade Runner site is one of the most innovative interactive educational sites on the web Rosemary Rogers, Program Administrator, HPS, email: [email protected] Rosemary has designed the Mousesite, the HPS sites, and the Sloan Science and Technology in the Making sites. She has won numerous awards for her website designs. Undergraduate Interns Zachary Pogue, sophomore, email: [email protected] Zach is part of the DMZ design team that won the VPUE website design contract. He has extensive experience in interactive website design John Eric Fu, senior, email: [email protected] As a Presidential Scholar John has completed an outstanding research project comparing British and American video game products and culture. Since his early teens he has been a video game reviewer for Macworld. Grad Students Casey Alt, History of Science and Film Studies, email: [email protected] Casey has worked with Scott Bukatman, Pamela Lee, and Lenoir. He is working on Japanese Manga film and has extensive media experience. Yorgos Panzaris, History of Science, email: [email protected] Yorgos has a master's degree in educational technologies from the MIT MediaLab and has extensive experience with databases and online design. He has worked with Lenoir on several recent projects. -

Lecture 11 : Discrete Cosine Transform Moving Into the Frequency Domain

Lecture 11: Discrete Cosine Transform

Moving into the Frequency Domain. Frequency domains can be obtained through the transformation from one (time or spatial) domain to the other (frequency) via the Fourier Transform (FT) (see Lecture 3), used in MPEG Audio, or the Discrete Cosine Transform (DCT) (new), the heart of JPEG and MPEG Video, MPEG Audio. Note: We mention some image (and video) examples in this section with DCT (in particular) but also the FT is commonly applied to filter multimedia data. External Link: MIT OCW 8.03 Lecture 11 Fourier Analysis Video

Recap: Fourier Transform. The tool which converts a spatial (real space) description of audio/image data into one in terms of its frequency components is called the Fourier transform. The new version is usually referred to as the Fourier space description of the data. We then essentially process the data: e.g. for filtering, basically this means attenuating or setting certain frequencies to zero. We then need to convert data back to real audio/imagery to use in our applications. The corresponding inverse transformation which turns a Fourier space description back into a real space one is called the inverse Fourier transform.

What do Frequencies Mean in an Image? Large values at high frequency components mean the data is changing rapidly on a short distance scale. E.g.: a page of small font text, brick wall, vegetation. Large low frequency components then the large scale features of the picture are more important. E.g. a single fairly simple object which occupies most of the image.

The Road to Compression. How do we achieve compression? Low pass filter: ignore high frequency noise components. Only store lower frequency components. High pass filter: spot gradual changes. If changes are too low/slow, eye does not respond so ignore?

Low Pass Image Compression Example. MATLAB demo, dctdemo.m, (uses DCT) to: load an image; low pass filter in frequency (DCT) space; tune compression via a single slider value n to select coefficients; inverse DCT, subtract input and filtered image to see compression artefacts. -
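The low pass idea in the excerpt above (transform, keep only the first n coefficients, inverse transform, compare with the input) can be sketched in a few lines. This is not the lecture's dctdemo.m: it is a one-dimensional illustration in pure Python, and the function names `dct`, `idct` and `low_pass` as well as the parameter `keep` (standing in for the slider value n) are assumptions made for this sketch.

```python
import math

def dct(x):
    """Orthonormal DCT-II of a 1-D sequence of numbers."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * s)
    return out

def idct(c):
    """Inverse of dct() above (orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        s = c[0] * math.sqrt(1.0 / n)
        s += sum(
            c[k] * math.sqrt(2.0 / n) * math.cos(math.pi * (i + 0.5) * k / n)
            for k in range(1, n)
        )
        out.append(s)
    return out

def low_pass(x, keep):
    """Zero every DCT coefficient with index >= keep, then transform back."""
    coeffs = dct(x)
    kept = [c if k < keep else 0.0 for k, c in enumerate(coeffs)]
    return idct(kept)

if __name__ == "__main__":
    signal = [1.0, 2.0, 3.0, 4.0, 4.0, 3.0, 2.0, 1.0]
    filtered = low_pass(signal, 3)
    # The difference between input and output is the compression artefact.
    print([round(a - b, 3) for a, b in zip(signal, filtered)])
```

Keeping all coefficients reproduces the input, and keeping only the first one reduces the signal to its mean; an image version would apply the same transform along rows and then columns of each block.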

Image Compression Using Discrete Cosine Transform Method

Qusay Kanaan Kadhim, International Journal of Computer Science and Mobile Computing, Vol.5 Issue.9, September- 2016, pg. 186-192 Available Online at www.ijcsmc.com International Journal of Computer Science and Mobile Computing A Monthly Journal of Computer Science and Information Technology ISSN 2320–088X IMPACT FACTOR: 5.258 IJCSMC, Vol. 5, Issue. 9, September 2016, pg.186 – 192 Image Compression Using Discrete Cosine Transform Method Qusay Kanaan Kadhim Al-Yarmook University College / Computer Science Department, Iraq [email protected] ABSTRACT: The processing of digital images took a wide importance in the knowledge field in the last decades ago due to the rapid development in the communication techniques and the need to find and develop methods assist in enhancing and exploiting the image information. The field of digital images compression becomes an important field of digital images processing fields due to the need to exploit the available storage space as much as possible and reduce the time required to transmit the image. Baseline JPEG Standard technique is used in compression of images with 8-bit color depth. Basically, this scheme consists of seven operations which are the sampling, the partitioning, the transform, the quantization, the entropy coding and Huffman coding. First, the sampling process is used to reduce the size of the image and the number bits required to represent it. Next, the partitioning process is applied to the image to get (8×8) image block. Then, the discrete cosine transform is used to transform the image block data from spatial domain to frequency domain to make the data easy to process. -
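Two of the steps named in the abstract above, partitioning into 8×8 blocks and quantization, are simple enough to illustrate directly. The sketch below is a hedged illustration only, not the paper's implementation: the function names are hypothetical, the image is a plain list of rows whose dimensions are assumed to be multiples of the block size, and a single uniform step size stands in for the per-coefficient quantization table that baseline JPEG applies to DCT coefficients.

```python
def partition_blocks(image, size=8):
    """Split a 2-D list of pixel rows into size x size blocks, row-major order.

    Assumes (for this sketch) that both dimensions are multiples of `size`.
    """
    height, width = len(image), len(image[0])
    if height % size or width % size:
        raise ValueError("image dimensions must be multiples of the block size")
    blocks = []
    for top in range(0, height, size):
        for left in range(0, width, size):
            blocks.append([row[left:left + size] for row in image[top:top + size]])
    return blocks

def quantize(block, step):
    """Uniform quantization: divide by the step and round to an integer."""
    return [[round(value / step) for value in row] for row in block]

def dequantize(block, step):
    """Approximate reconstruction: multiply the integers back by the step."""
    return [[value * step for value in row] for row in block]

if __name__ == "__main__":
    # Hypothetical 16x16 test image whose pixel value encodes its position.
    image = [[r * 16 + c for c in range(16)] for r in range(16)]
    blocks = partition_blocks(image)
    print(len(blocks), "blocks of", len(blocks[0]), "x", len(blocks[0][0]))
```

Quantization is the lossy step: `dequantize(quantize(b, step), step)` only recovers each value to within half a step, which is where the size reduction comes from.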

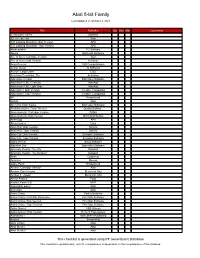

Atari 8-Bit Family

Atari 8-bit Family Last Updated on October 2, 2021

| Title | Publisher | Qty | Box | Man | Comments |
|---|---|---|---|---|---|
| 221B Baker Street | Datasoft | | | | |
| 3D Tic-Tac-Toe | Atari | | | | |
| 747 Landing Simulator: Disk Version | APX | | | | |
| 747 Landing Simulator: Tape Version | APX | | | | |
| Abracadabra | TG Software | | | | |
| Abuse | Softsmith Software | | | | |
| Ace of Aces: Cartridge Version | Atari | | | | |
| Ace of Aces: Disk Version | Accolade | | | | |
| Acey-Deucey | L&S Computerware | | | | |
| Action Quest | JV Software | | | | |
| Action!: Large Label | OSS | | | | |
| Activision Decathlon, The | Activision | | | | |
| Adventure Creator | Spinnaker Software | | | | |
| Adventure II XE: Charcoal | AtariAge | | | | |
| Adventure II XE: Light Gray | AtariAge | | | | |
| Adventure!: Disk Version | Creative Computing | | | | |
| Adventure!: Tape Version | Creative Computing | | | | |
| AE | Broderbund | | | | |
| Airball | Atari | | | | |
| Alf in the Color Caves | Spinnaker Software | | | | |
| Ali Baba and the Forty Thieves | Quality Software | | | | |
| Alien Ambush: Cartridge Version | DANA | | | | |
| Alien Ambush: Disk Version | Micro Distributors | | | | |
| Alien Egg | APX | | | | |
| Alien Garden | Epyx | | | | |
| Alien Hell: Disk Version | Syncro | | | | |
| Alien Hell: Tape Version | Syncro | | | | |
| Alley Cat: Disk Version | Synapse Software | | | | |
| Alley Cat: Tape Version | Synapse Software | | | | |
| Alpha Shield | Sirius Software | | | | |
| Alphabet Zoo | Spinnaker Software | | | | |
| Alternate Reality: The City | Datasoft | | | | |
| Alternate Reality: The Dungeon | Datasoft | | | | |
| Ankh | Datamost | | | | |
| Anteater | Romox | | | | |
| Apple Panic | Broderbund | | | | |
| Archon: Cartridge Version | Atari | | | | |
| Archon: Disk Version | Electronic Arts | | | | |
| Archon II - Adept | Electronic Arts | | | | |
| Armor Assault | Epyx | | | | |
| Assault Force 3-D | MPP | | | | |
| Assembler Editor | Atari | | | | |
| Asteroids | Atari | | | | |
| Astro Chase | Parker Brothers | | | | |
| Astro Chase: First Star Rerelease | First Star Software | | | | |
| Astro Chase: Disk Version | First Star Software | | | | |
| Astro Chase: Tape Version | First Star Software | | | | |
| Astro-Grover | CBS Games | | | | |
| Astro-Grover: Disk Version | Hi-Tech Expressions | | | | |
| Astronomy I | Main Street Publishing | | | | |
| Asylum | ScreenPlay | | | | |
| Atari LOGO | Atari | | | | |
| Atari Music I | Atari | | | | |
| Atari Music II | Atari | | | | |

This checklist is generated using RF Generation's Database. This checklist is updated daily, and its completeness is dependent on the completeness of the database. -

Openbsd Gaming Resource

OPENBSD GAMING RESOURCE A continually updated resource for playing video games on OpenBSD. Mr. Satterly Updated August 7, 2021 P11U17A3B8

Title: OpenBSD Gaming Resource
Author: Mr. Satterly
Publisher: Mr. Satterly
Date: Updated August 7, 2021
Copyright: Creative Commons Zero 1.0 Universal
Email: [email protected]
Website: https://MrSatterly.com/

Contents
1 Introduction
2 Ways to play the games
  2.1 Base system
  2.2 Ports/Editors
  2.3 Ports/Emulators
    Arcade emulation
    Computer emulation
    Game console emulation
    Operating system emulation
  2.4 Ports/Games
    Game engines
    Interactive fiction
  2.5 Ports/Math
  2.6 Ports/Net
  2.7 Ports/Shells
  2.8 Ports/WWW
3 Notable games
  3.1 Free games
    A-I
    J-R
    S-Z
  3.2 Non-free games
4 Getting the games
  4.1 Games
5 Former ways to play games
6 What next?
Appendices
A Clones, models, and variants
Index

1 Introduction I use this document to help organize my thoughts, files, and links on how to play games on OpenBSD. It helps me to remember what I have gone through while finding new games. The biggest reason to read or at least skim this document is because how can you search for something you do not know exists? I will show you ways to play games, what free and non-free games are available, and give links to help you get started on downloading them. -

Integrating Formal Verification Into an Advanced Computer Architecture Course

Session 1532 Integrating Formal Verification into an Advanced Computer Architecture Course Miroslav N. Velev [email protected] School of Electrical and Computer Engineering Georgia Institute of Technology, Atlanta, GA 30332, U.S.A. Abstract. The paper presents a sequence of three projects on design and formal verification of pipelined and superscalar processors. The projects were integrated, by means of lectures and preparatory homework exercises, into an existing advanced computer architecture course taught to both undergraduate and graduate students in a way that required them to have no prior knowledge of formal methods. The first project was on design and formal verification of a 5-stage pipelined DLX processor, implementing the six basic instruction types: register-register-ALU, register-immediate-ALU, store, load, jump, and branch. The second project was on extending the processor from project one with ALU exceptions, a return-from-exception instruction, and branch prediction; each of the resulting models was formally verified. The third project was on design and formal verification of a dual-issue superscalar version of the DLX from project one. The preparatory homework problems included an exercise on design and formal verification of a staggered ALU, pipelined in the style of the integer ALUs in the Intel Pentium 4. The processors were described in the high-level hardware description language AbsHDL that allows the students to ignore the bit widths of word-level values and the internal implementations of functional units and memories, while focusing entirely on the logic that controls the pipelined or superscalar execution. 
The formal verification tool flow included the term-level symbolic simulator TLSim, the decision procedure EVC, and an efficient SAT-checker; this tool flow—combined with the same abstraction techniques for defining processors with exceptions and branch prediction, as used in the projects—was applied at Motorola to formally verify a model of the M•CORE processor, and detected bugs.