Statistical Methods for the Analysis of Genomic Data

Total Pages: 16

File Type: PDF, Size: 1020Kb


Recommended publications


1W9c Lichtarge Lab 2006

1w9c: Evolutionary trace report by report maker, June 18, 2010. Pages 1–7.

Contents: 1 Introduction; 2 Chain 1w9cA; 2.1 O14980 overview; 2.2 Multiple sequence alignment for 1w9cA; 2.3 Residue ranking in 1w9cA; 2.4 Top ranking residues in 1w9cA and their position on the structure; 2.4.1 Clustering of residues at 25% coverage; 2.4.2 Overlap with known functional surfaces at 25% coverage; 2.4.3 Possible novel functional surfaces at 25%; 4.3.1 Alistat; 4.3.2 CE; 4.3.3 DSSP; 4.3.4 HSSP; 4.3.5 LaTex; 4.3.6 Muscle; 4.3.7 Pymol; 4.4 Note about ET Viewer; 4.5 Citing this work; 4.6 About report maker; 4.7 Attachments.

1 INTRODUCTION. From the original Protein Data Bank entry (PDB id 1w9c): Title: Proteolytic fragment of crm1 spanning six c-terminal heat repeats. Compound: Mol id: 1; molecule: crm1 protein; chain: a, b; fragment: c-terminal six heat repeats, residues 707-1027; synonym: exportin 1; engineered: yes. Organism, scientific name: Homo sapiens. 1w9c contains a single unique chain 1w9cA (321 residues long) and its homologue 1w9cB.

2 CHAIN 1W9CA. 2.1 O14980 overview. From SwissProt, id O14980, 100% identical to 1w9cA: Description: Exportin-1 (Chromosome region maintenance 1 protein homolog). Organism, scientific name: Homo sapiens (Human). Taxonomy: Eukaryota; Metazoa; Chordata; Craniata; Vertebrata; Euteleostomi; Mammalia; Eutheria; Euarchontoglires; Primates; Catarrhini; Hominidae; Homo. Function: Mediates the nuclear export of cellular proteins (cargoes) bearing a leucine-rich nuclear export signal (NES) and of RNAs.

UNIVERSITY of CALIFORNIA, SAN DIEGO Functional Analysis of Sall4

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Functional analysis of Sall4 in modulating embryonic stem cell fate. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Molecular Pathology by Pei Jen A. Lee. Committee in charge: Professor Steven Briggs, Chair; Professor Geoff Rosenfeld, Co-Chair; Professor Alexander Hoffmann; Professor Randall Johnson; Professor Mark Mercola. 2009. Copyright Pei Jen A. Lee, 2009. All rights reserved. The dissertation of Pei Jen A. Lee is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, San Diego, 2009. Dedicated to my parents, my brother, and my husband for their love and support. Table of Contents: Signature Page; Dedication; Table of Contents; List of Figures; List of Tables; Curriculum vitae; Acknowledgement; Abstract; Chapter 1 Introduction; Chapter 2 Materials and Methods.

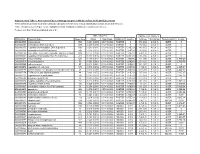

Association of Gene Ontology Categories with Decay Rate for HepG2 Experiments

Supplementary Table 1: Association of Gene Ontology Categories with Decay Rate for HepG2 Experiments. These tables show details for all Gene Ontology categories. Inferences for manual classification scheme shown at the bottom. Those categories used in Figure 1A are highlighted in bold. Standard deviations are shown in parentheses. P-values less than 1E-20 are indicated with a "0".

| GO Number | Category Name | Probe Sets | Decay rate r (hour^-1), Group | Decay rate r (hour^-1), Non-Group | Distribution | p-value | % half-life < 2 hr, In-Group | % half-life < 2 hr, Non-Group | Representation | p-value |
|---|---|---|---|---|---|---|---|---|---|---|
| GO:0006350 | transcription | 1523 | 0.221 (0.009) | 0.127 (0.002) | FASTER | 0 | 13.1 (0.4) | 4.5 (0.1) | OVER | 0 |
| GO:0006351 | transcription, DNA-dependent | 1498 | 0.220 (0.009) | 0.127 (0.002) | FASTER | 0 | 13.0 (0.4) | 4.5 (0.1) | OVER | 0 |
| GO:0006355 | regulation of transcription, DNA-dependent | 1163 | 0.230 (0.011) | 0.128 (0.002) | FASTER | 5.00E-21 | 14.2 (0.5) | 4.6 (0.1) | OVER | 0 |
| GO:0006366 | transcription from Pol II promoter | 845 | 0.225 (0.012) | 0.130 (0.002) | FASTER | 1.88E-14 | 13.0 (0.5) | 4.8 (0.1) | OVER | 0 |
| GO:0006139 | nucleobase, nucleoside, nucleotide and nucleic acid metabolism | 3004 | 0.173 (0.006) | 0.127 (0.002) | FASTER | 1.28E-12 | 8.4 (0.2) | 4.5 (0.1) | OVER | 0 |
| GO:0006357 | regulation of transcription from Pol II promoter | 487 | 0.231 (0.016) | 0.132 (0.002) | FASTER | 6.05E-10 | 13.5 (0.6) | 4.9 (0.1) | OVER | 0 |
| GO:0008283 | cell proliferation | 625 | 0.189 (0.014) | 0.132 (0.002) | FASTER | 1.95E-05 | 10.1 (0.6) | 5.0 (0.1) | OVER | 1.50E-20 |
| GO:0006513 | monoubiquitination | 36 | 0.305 (0.049) | 0.134 (0.002) | FASTER | 2.69E-04 | 25.4 (4.4) | 5.1 (0.1) | OVER | 2.04E-06 |
| GO:0007050 | cell cycle arrest | 57 | 0.311 (0.054) | 0.133 (0.002) | | | | | | |

Modulation of Gene Expression Associated with the Cell Cycle and Tumorigenicity of Glioblastoma Cells by the 18 Kda Translocator Protein (TSPO)

Open Access. Austin Journal of Pharmacology and Therapeutics. Research Article. Modulation of Gene Expression Associated with the Cell Cycle and Tumorigenicity of Glioblastoma Cells by the 18 kDa Translocator Protein (TSPO). Julia Bode1,2#, Leo Veenman3#, Alex Vainshtein3, Wilfried Kugler1,4, Nahum Rosenberg3,5 and Moshe Gavish3*

1Department of Pediatrics, Hematology and Oncology, University Medical Center of the Georg-August-University Goettingen, Germany. 2Schaller Research Group at the University of Heidelberg and the German Cancer Research Center, Division of Molecular Mechanisms of Tumor Invasion, German Cancer Research Center (DKFZ), Germany. 3Department of Molecular Pharmacology, Ruth and Bruce Rappaport Faculty of Medicine, Technion-Israel Institute of Technology, Israel. 4Fluoron City GmbH, Magirus-Deutz-Straße, Neu Ulm

Abstract: After PK 11195 (25 µM) exposure (24 and 48 hours) and permanent TSPO silencing by siRNA to U118MG glioblastoma cells, microarray analysis of gene expression, followed up by validation with real time polymerase chain reaction (RT-PCR), showed effects on gene expression related to the cell cycle. Other affected genes are related to apoptosis, oxidative stress, immune responses, DNA repair, and membrane functions, including adhesion and transport. In relation to post transcriptional and post translational effects, TSPO ligand and TSPO knockdown affected gene expression for many short nucleolar RNAs. Applying a 2-fold cut-off to microarray analysis revealed that 24 and 48 hours of PK 11195 exposure affected respectively 128 and 85 genes that were also affected by TSPO silencing. Taking a 2.5-fold cut-off, only gene expression of v-FOS was found to be modulated by each of these TSPO manipulations.

Role and Regulation of the P53-Homolog P73 in the Transformation of Normal Human Fibroblasts

Role and regulation of the p53-homolog p73 in the transformation of normal human fibroblasts. Dissertation for the attainment of the doctoral degree in natural sciences at the Bavarian Julius-Maximilians-Universität Würzburg, submitted by Lars Hofmann from Aschaffenburg. Würzburg 2007. Submitted on: Members of the doctoral committee: Chair: Prof. Dr. Dr. Martin J. Müller; Reviewer: Prof. Dr. Michael P. Schön; Reviewer: Prof. Dr. Georg Krohne. Date of the doctoral colloquium: Doctoral certificate issued on: Declaration: I hereby declare that I prepared the present work independently and used no aids or sources other than those indicated. This work has not been submitted in the same or a similar form in any other examination procedure. Apart from the degrees documented with the application for admission, I have not previously acquired or sought to acquire any further academic degrees. Würzburg, Lars Hofmann. Content: SUMMARY; ZUSAMMENFASSUNG (German summary); 1. INTRODUCTION; 1.1. Molecular basics of cancer; 1.2. Early research on tumorigenesis; 1.3. Developing

Small GTPase Ran and Ran-Binding Proteins

BioMol Concepts, Vol. 3 (2012), pp. 307–318 • Copyright © by Walter de Gruyter • Berlin • Boston. DOI 10.1515/bmc-2011-0068. Review. Small GTPase Ran and Ran-binding proteins. Masahiro Nagai 1 and Yoshihiro Yoneda 1–3,*

1 Biomolecular Dynamics Laboratory, Department of Frontier Biosciences, Graduate School of Frontier Biosciences, Osaka University, 1-3 Yamada-oka, Suita, Osaka 565-0871, Japan. 2 Department of Biochemistry, Graduate School of Medicine, Osaka University, 2-2 Yamada-oka, Suita, Osaka 565-0871, Japan. 3 Japan Science and Technology Agency, Core Research for Evolutional Science and Technology, Osaka University, 1-3 Yamada-oka, Suita, Osaka 565-0871, Japan. * Corresponding author, e-mail: [email protected]

Abstract: Like many other small GTPases, Ran functions in eukaryotic

highly abundant and strongly conserved GTPase encoding a ∼25 kDa protein primarily located in the nucleus (2). On the one hand, as revealed by a substantial body of work, Ran has been found to have widespread functions since its initial discovery. Like other small GTPases, Ran functions as a molecular switch by binding to either GTP or GDP. However, Ran possesses only weak GTPase activity, and several well-known 'Ran-binding proteins' aid in the regulation of the GTPase cycle. Among such partner molecules, RCC1 was originally identified as a regulator of mitosis in tsBN2, a temperature-sensitive hamster cell line (3); RCC1 mediates the conversion of RanGDP to RanGTP in the nucleus and is mainly associated with chromatin (4) through its interactions with histones H2A and H2B (5). On the other hand, the GTP hydrolysis of Ran is stimulated by the Ran GTPase-activating protein (RanGAP) (6), in conjunction with Ran-binding protein 1 (RanBP1) and/or the large nucleoporin Ran-binding protein 2 (RanBP2, also known as Nup358).

Rabbit Anti-RanBP3/FITC Conjugated Antibody

SunLong Biotech Co., LTD. Tel: 0086-571-56623320. Fax: 0086-571-56623318. E-mail: [email protected]. www.sunlongbiotech.com. Rabbit Anti-RanBP3/FITC Conjugated antibody, SL20113R-FITC.

Product Name: Anti-RanBP3/FITC. Chinese Name: FITC-labeled Ran binding protein 3 antibody (FITC标记的RANBinding protein3抗体). Alias: Ran BP-3; Ran binding protein 3; Ran-binding protein 3; RANB3_HUMAN; RanBP3. Organism Species: Rabbit. Clonality: Polyclonal. React Species: Human, Mouse, Rat, Chicken, Dog, Pig. Applications: ICC=1:50-200, IF=1:50-200; not yet tested in other applications. Optimal dilutions/concentrations should be determined by the end user. Molecular weight: 60kDa. Form: Lyophilized or Liquid. Concentration: 1mg/ml. Immunogen: KLH conjugated synthetic peptide derived from human RanBP3. Isotype: IgG. Purification: affinity purified by Protein A. Storage Buffer: 0.01M TBS (pH7.4) with 1% BSA, 0.03% Proclin300 and 50% Glycerol. Storage: Store at -20 °C for one year. Avoid repeated freeze/thaw cycles. The lyophilized antibody is stable at room temperature for at least one month and for greater than a year when kept at -20 °C. When reconstituted in sterile pH 7.4 0.01M PBS or diluent of antibody the antibody is stable for at least two weeks at 2-4 °C.

Product Detail: Background: Acts as a cofactor for XPO1/CRM1-mediated nuclear export, perhaps as export complex scaffolding protein. Bound to XPO1/CRM1, stabilizes the XPO1/CRM1-cargo interaction. In the absence of Ran-bound GTP prevents binding of XPO1/CRM1 to the nuclear pore complex. Binds to CHC1/RCC1 and increases the guanine nucleotide exchange activity of CHC1/RCC1. Recruits XPO1/CRM1 to CHC1/RCC1 in a Ran-dependent manner.

Activin/Nodal Signalling in Stem Cells Siim Pauklin and Ludovic Vallier*

© 2015. Published by The Company of Biologists Ltd | Development (2015) 142, 607-619 doi:10.1242/dev.091769. REVIEW. Activin/Nodal signalling in stem cells. Siim Pauklin and Ludovic Vallier*

ABSTRACT: Activin/Nodal growth factors control a broad range of biological processes, including early cell fate decisions, organogenesis and adult tissue homeostasis. Here, we provide an overview of the mechanisms by which the Activin/Nodal signalling pathway governs stem cell function in these different stages of development. We describe recent findings that associate Activin/Nodal signalling to pathological conditions, focusing on cancer stem cells in tumorigenesis and its potential as a target for therapies. Moreover, we will discuss future directions and questions that currently remain unanswered on the role of Activin/Nodal signalling in stem cell self-renewal, differentiation and proliferation.

KEY WORDS: Activin, Cell cycle, Differentiation, Nodal, Pluripotency, Stem cells

organisms. Of particular relevance, genetic studies in the mouse have established that Nodal signalling is necessary at the early epiblast stage during implantation, in which the pathway functions to maintain the expression of key pluripotency factors as well as to regulate the differentiation of extra-embryonic tissue. Activins, dimers of different subtypes of Inhibin β, are also expressed in pre-implantation blastocyst but not in the primitive streak (Albano et al., 1993; Feijen et al., 1994). However, genetic studies have shown that Inhibin βs are not necessary for early development in the mouse (Lau et al., 2000; Matzuk, 1995; Matzuk et al., 1995a,b). Combined gradients of Nodal and BMP signalling within the primitive streak control endoderm and mesoderm germ layer specification and also their subsequent patterning whilst blocking neuroectoderm formation (Camus et al., 2006; Mesnard et al., 2006).

Genome-Wide RNAi Analysis of JAK/STAT Signaling Components in Drosophila

Downloaded from genesdev.cshlp.org on August 31, 2016 - Published by Cold Spring Harbor Laboratory Press. Genome-wide RNAi analysis of JAK/STAT signaling components in Drosophila. Gyeong-Hun Baeg,1,2 Rui Zhou,1,2 and Norbert Perrimon1,3. 1Department of Genetics, Howard Hughes Medical Institute, Harvard Medical School, Boston, MA 02115, USA.

The cytokine-activated Janus kinase (JAK)/signal transducer and activator of transcription (STAT) pathway plays an important role in the control of a wide variety of biological processes. When misregulated, JAK/STAT signaling is associated with various human diseases, such as immune disorders and tumorigenesis. To gain insights into the mechanisms by which JAK/STAT signaling participates in these diverse biological responses, we carried out a genome-wide RNA interference (RNAi) screen in cultured Drosophila cells. We identified 121 genes whose double-stranded RNA (dsRNA)-mediated knockdowns affected STAT92E activity. Of the 29 positive regulators, 13 are required for the tyrosine phosphorylation of STAT92E. Furthermore, we found that the Drosophila homologs of RanBP3 and RanBP10 are negative regulators of JAK/STAT signaling through their control of nucleocytoplasmic transport of STAT92E. In addition, we identified a key negative regulator of Drosophila JAK/STAT signaling, protein tyrosine phosphatase PTP61F, and showed that it is a transcriptional target of JAK/STAT signaling, thus revealing a novel negative feedback loop. Our study has uncovered many uncharacterized genes required for different steps of the JAK/STAT signaling pathway.

[Keywords: JAK/STAT signal transduction pathway; Drosophila; RNA interference] Supplemental material is available at http://genesdev.org. Received April 1, 2005; revised version accepted June 20, 2005.

Variation in Protein Coding Genes Identifies Information Flow

bioRxiv preprint doi: https://doi.org/10.1101/679456; this version posted June 21, 2019. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Animal complexity and information flow.

Variation in protein coding genes identifies information flow as a contributor to animal complexity. Jack Dean, Daniela Lopes Cardoso and Colin Sharpe*. Institute of Biological and Biomedical Sciences, School of Biological Science, University of Portsmouth, Portsmouth, UK, PO16 7YH. * Author for correspondence: [email protected]. Orcid numbers: DLC: 0000-0003-2683-1745; CS: 0000-0002-5022-0840.

Abstract: Across the metazoans there is a trend towards greater organismal complexity. How complexity is generated, however, is uncertain. Since C. elegans and humans have approximately the same number of genes, the explanation will depend on how genes are used, rather than their absolute number.

Supporting Information

Supporting Information. Friedman et al. 10.1073/pnas.0812446106. SI Results and Discussion.

General Phenotype of Dicer-PCKO Mice. Dicer-PCKO mice had many defects in addition to inner ear defects. Many of them died around birth, and although they were born at a similar size to their littermate heterozygote siblings, after a few weeks the surviving mutants were smaller than their heterozygote siblings (see Fig. 1A) and exhibited typical defects, which enabled their identification even before genotyping, including typical alopecia (in particular on the nape of the neck), partially closed eyelids [supporting information (SI) Fig. S1 A and C], eye defects, and weakness of the rear legs that were twisted backwards (data not shown). However, while all of the mutant mice tested exhibited similar deafness and stereocilia malformation in inner ear HCs, other defects were variable in their severity.

intronic miR genes in these protein-coding genes. Because in many cases the exact borders of the protein-coding genes are unknown, we searched for miR genes up to 10 kb from the hosting-gene ends. Out of the 488 mouse miR genes included in miRBase release 12.0, 192 mouse miR genes were found as located inside (distance 0) or in the vicinity of the protein-coding genes that are expressed in the P2 cochlear and vestibular SE (Table S2). Some coding genes include huge clusters of miRNAs (e.g., Sfmbt2). Other genes listed in Table S2 as coding genes are actually predicted, as their transcript was detected in cells, but the predicted encoded protein has not been identified yet, and some of them may be noncoding RNAs. Only a single protein-coding gene that is differentially expressed in the cochlear and

RanBP3 Enhances Nuclear Export of Active β-Catenin

JCB: ARTICLE. RanBP3 enhances nuclear export of active β-catenin independently of CRM1. Jolita Hendriksen,1 Francois Fagotto,2 Hella van der Velde,1 Martijn van Schie,3 Jasprien Noordermeer,3 and Maarten Fornerod1. 1Department of Tumor Biology, Netherlands Cancer Institute, 1066 CX Amsterdam, Netherlands. 2Department of Biology, McGill University, Montreal, Quebec, Canada H3A 2T5. 3Department of Molecular Cell Biology, Leiden University Medical Center, 2333 AL Leiden, Netherlands.

β-Catenin is the nuclear effector of the Wnt signaling cascade. The mechanism by which nuclear activity of β-catenin is regulated is not well defined. Therefore, we used the nuclear marker RanGTP to screen for novel nuclear β-catenin binding proteins. We identified a cofactor of chromosome region maintenance 1 (CRM1)–mediated nuclear export, Ran binding protein 3 (RanBP3), as a novel β-catenin–interacting protein that binds directly to β-catenin in a RanGTP-stimulated manner. RanBP3 inhibits β-catenin–mediated transcriptional [...] with β-catenin–induced dorsoventral axis formation. Furthermore, RanBP3 depletion stimulates the Wnt pathway in both human cells and Drosophila melanogaster embryos. In human cells, this is accompanied by an increase of dephosphorylated β-catenin in the nucleus. Conversely, overexpression of RanBP3 leads to a shift of active β-catenin toward the cytoplasm. Modulation of β-catenin activity and localization by RanBP3 is independent of adenomatous polyposis coli protein and CRM1. We conclude that RanBP3 is a direct export enhancer