Ulysses in Cyberspace: Examining the Effectiveness of Design Patterns

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

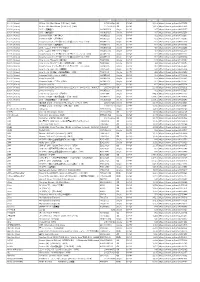

Artist Product Name Catalog Number Genre List Price URL 100% (Korea) RE

| Artist | Product Name | Catalog Number | Genre | List Price | URL |
| --- | --- | --- | --- | --- | --- |
| 100% (Korea) | RE:tro: 6th Mini Album (HIP Ver.)(KOR) | 1072528598 | K-POP | 2,290 | https://tower.jp/item/4875651 |
| 100% (Korea) | RE:tro: 6th Mini Album (NEW Ver.)(KOR) | 1072528759 | K-POP | 2,290 | https://tower.jp/item/4875653 |
| 100% (Korea) | 28℃ (Regular Edition C) | OKCK05028 | K-POP | 1,296 | https://tower.jp/item/4825257 |
| 100% (Korea) | 28℃ (Regular Edition B) | OKCK05027 | K-POP | 1,296 | https://tower.jp/item/4825256 |
| 100% (Korea) | 28℃ (Unit Jacket Edition B) | OKCK05030 | K-POP | 648 | https://tower.jp/item/4825260 |
| 100% (Korea) | 28℃ (Unit Jacket Edition A) | OKCK05029 | K-POP | 648 | https://tower.jp/item/4825259 |
| 100% (Korea) | How to cry (Type-A) (Regular Edition) | TS1P5002 | K-POP | 1,204 | https://tower.jp/item/4415939 |
| 100% (Korea) | How to cry (Type-B) (Regular Edition) | TS1P5003 | K-POP | 1,204 | https://tower.jp/item/4415954 |
| 100% (Korea) | How to cry (Minwoo Edition) (First-Press Limited Edition)(LTD) | TS1P5005 | K-POP | 602 | https://tower.jp/item/4415958 |
| 100% (Korea) | How to cry (Rokhyun Edition) (First-Press Limited Edition)(LTD) | TS1P5006 | K-POP | 602 | https://tower.jp/item/4415970 |
| 100% (Korea) | How to cry (Jonghwan Edition) (First-Press Limited Edition)(LTD) | TS1P5007 | K-POP | 602 | https://tower.jp/item/4415972 |
| 100% (Korea) | How to cry (Chanyong Edition) (First-Press Limited Edition)(LTD) | TS1P5008 | K-POP | 602 | https://tower.jp/item/4415974 |
| 100% (Korea) | How to cry (Hyukjin Edition) (First-Press Limited Edition)(LTD) | TS1P5009 | K-POP | 602 | https://tower.jp/item/4415976 |
| 100% (Korea) | Song for you (A) | OKCK5011 | K-POP | 1,204 | https://tower.jp/item/4655024 |
| 100% (Korea) | Song for you (B) | OKCK5012 | K-POP | 1,204 | https://tower.jp/item/4655026 |
| 100% (Korea) | Song for you (C) | OKCK5013 | K-POP | 1,204 | https://tower.jp/item/4655027 |
| 100% (Korea) | Song for you Member Jacket Edition (Rokhyun)(LTD) | OKCK5015 | K-POP | 602 | https://tower.jp/item/4655029 |

Washington Apple Pi Journal, May 1988

Washington Journal of Washington Apple Pi, Ltd. Volume 10, May 1988, Number 5. Highlights: The Great Apple Lawsuit (pgs 4 & 50); Timeout AppleWorks Enhancements - IIGS; Personal Newsletter & Publish It!; MacNovice: Word Processing - The Next Generation; Views & Reviews: MidiPaint and Performer; Macintosh Virus: Technical Notes. In This Issue: Officers & Staff, Editorial, 3; Personal Newsletter and Publish It!, Ray Settle, 40; President's Corner, Tom Warrick, 4; GameSIG News, Charles Don Hall, 41; Event Queue, General Information, Classifieds, 6; Plan for Use of Tutorial Tapes & Disks, 41; AV-SIG News, Nancy Seferian, 6; The Design Page for W.A.P., Jay Rohr, 42; Commercial Classifieds, Job Mart, 7; MacNovice Column: Word Processing, Ralph J. Begleiter, 47; WAP Calendar, SIG News, 8; Developer's View: The Great Apple Lawsuit, Bill Hole, 50; WAP Hotline, 9; Macintosh Bits & Bytes, Lynn R. Trusal, 52; Q & A, Robert C. Platt & Bruce F. Field, 10; Macinations 3, Robb Wolov, 56; Using -

URL 100% (Korea)

| Artist | Product Name | Order Number | Format | Genre | List Price (excl. tax) | URL |
| --- | --- | --- | --- | --- | --- | --- |
| 100% (Korea) | RE:tro: 6th Mini Album (HIP Ver.)(KOR) | 1072528598 | CD | K-POP | 1,603 | https://tower.jp/item/4875651 |
| 100% (Korea) | RE:tro: 6th Mini Album (NEW Ver.)(KOR) | 1072528759 | CD | K-POP | 1,603 | https://tower.jp/item/4875653 |
| 100% (Korea) | 28℃ (Regular Edition C) | OKCK05028 | Single | K-POP | 907 | https://tower.jp/item/4825257 |
| 100% (Korea) | 28℃ (Regular Edition B) | OKCK05027 | Single | K-POP | 907 | https://tower.jp/item/4825256 |
| 100% (Korea) | Summer Night (Regular Edition C) | OKCK5022 | Single | K-POP | 602 | https://tower.jp/item/4732096 |
| 100% (Korea) | Summer Night (Regular Edition B) | OKCK5021 | Single | K-POP | 602 | https://tower.jp/item/4732095 |
| 100% (Korea) | Song for you Member Jacket Edition (Chanyong)(LTD) | OKCK5017 | Single | K-POP | 301 | https://tower.jp/item/4655033 |
| 100% (Korea) | Summer Night (Regular Edition A) | OKCK5020 | Single | K-POP | 602 | https://tower.jp/item/4732093 |
| 100% (Korea) | 28℃ (Unit Jacket Edition A) | OKCK05029 | Single | K-POP | 454 | https://tower.jp/item/4825259 |
| 100% (Korea) | 28℃ (Unit Jacket Edition B) | OKCK05030 | Single | K-POP | 454 | https://tower.jp/item/4825260 |
| 100% (Korea) | Song for you Member Jacket Edition (Jonghwan)(LTD) | OKCK5016 | Single | K-POP | 301 | https://tower.jp/item/4655032 |
| 100% (Korea) | Song for you Member Jacket Edition (Hyukjin)(LTD) | OKCK5018 | Single | K-POP | 301 | https://tower.jp/item/4655034 |
| 100% (Korea) | How to cry (Type-A) (Regular Edition) | TS1P5002 | Single | K-POP | 843 | https://tower.jp/item/4415939 |
| 100% (Korea) | How to cry (Hyukjin Edition) (First-Press Limited Edition)(LTD) | TS1P5009 | Single | K-POP | 421 | https://tower.jp/item/4415976 |
| 100% (Korea) | Song for you Member Jacket Edition (Rokhyun)(LTD) | OKCK5015 | Single | K-POP | 301 | https://tower.jp/item/4655029 |
| 100% (Korea) | How to cry (Type-B) (Regular Edition) | TS1P5003 | Single | K-POP | 843 | https://tower.jp/item/4415954 |

At the Expense of Others? How the Imperial Mode of Living Prevents a Good Life for All

I.L.A. Kollektiv. AT THE EXPENSE OF OTHERS? How the imperial mode of living prevents a good life for all. Today it feels like everybody is talking about the problems and crises of our times: the climate and resource crisis, Greece’s permanent socio-political crisis or the degrading exploitative practices of the textile industry. Many are aware of the issues, yet little seems to change. Why is this? The concept of the imperial mode of living explains why, in spite of increasing injustices, no long-term alternatives have managed to succeed and a socio-ecological transformation remains out of sight. This text introduces the concept of an imperial mode of living and explains how our current mode of production and living is putting both people and the natural world under strain. We shine a spotlight on various areas of our daily lives, including food, mobility and digitalisation. We also look at socio-ecological alternatives and approaches to establish a good life for everyone – not just a few. The non-profit association Common Future e.V. from Göttingen is active in a number of projects focussing on global justice and socio-ecological business approaches. From April to May , the association organised the I.L.A. Werkstatt (Imperiale Lebensweisen – Ausbeutungsstrukturen im . Jahrhundert/ Imperial Modes of Living – Structures of Exploitation in the st Century). Out of this was born the interdisciplinary I.L.A. Kollektiv, consisting of young researchers and activists. -

Analysing the Changing Trajectory of South Korea's ICT Business

Analysing the Changing Trajectory of South Korea’s ICT Business Environment Nigel Callinan Thesis presented for the award of Doctor of Philosophy Supervisors: Professor Bernadette Andreosso & Dr. Mikael Fernström University of Limerick Submitted to the University of Limerick November 2014 Declaration I hereby certify that this material, which I now submit for assessment on the programme of study leading to the award of Doctor of Philosophy is entirely my own work, that I have exercised reasonable care to ensure that the work is original, and does not to the best of my knowledge breach any law of copyright, and has not been taken from the work of others save and to the extent that such work has been cited and acknowledged within the text of my work. Signed: ___________________________________ I.D No: 10142886 Date: Monday 10th November 2014 2 Abstract This thesis aims to provide a new perspective on the development of South Korea’s Information and Communications Technologies (ICT) Business Environment by taking a cross-disciplinary look at the area. Most studies on this subject have tended to remain within the boundaries of a single discipline. In this study, an interdisciplinary approach is taken to trace more of the paths that have influenced the development. This will provide a better understanding of the area and this insight should make it easier for any prospective organisation hoping to enter the Korean market to be successful. In little over two generations, South Korea has transformed from being one of the poorest countries in the world into a global business leader. Currently, Information Technology products are at the forefront of exports from the country and the world’s largest electronics company hails from a city just south of Seoul. -

THIS ZINE KILLS FASCISTS When Improperly Ingested Letter from the Editor Table of Contents Well That Took a While

Free. Issue 24. THIS ZINE KILLS FASCISTS when improperly ingested. Issue 24, June/Sept 2017. This zine has been published at random intervals for the past ten years. Founders: Jon Twitch, Paul Mutts.

Table of Contents: 1. Cover 2. This Page 3. Letters to the Editor 4. Ash 5. Dead Gakkahs 6. Pseudo & BurnBurnBurn 7. WDI & SHARP 8. Playground

Letter from the Editor. Well that took a while. I’ve concluded it’s hard to motivate myself to write zines while I’m also working at a newspaper. Even if it’s “that” newspaper, it still lets me print a lot of crazy stuff, at least until I start pushing it too far. The last issue was starting to seem a little lazy, as I was recycling content also published in the newspaper. For this issue I wanted to cut down on that, as well as on lengthy interviews. So we have a lot of smaller stories, and all sorts of other quick content. I interviewed a lot of people and sliced up their interviews into pieces here and there. I also filched a lot of content from social media, mainly as PSAs. Think of it more as a social media share/retweet, but on paper; hope no one’s pissed. There’s a page spread on Yuppie Killer memories I solicited way back when Yuppie Killer had their last show. There’s also a similar spread on people’s memories of Hongdae Playground, where you can get a sense of how radically the place has changed, as well as possibly the effect we had on it ourselves. -

How Do Journalists Edit the Content of Press Releases for Publication in Online Newspapers?

UNIVERSITY OF HELSINKI. How do journalists edit the content of press releases for publication in online newspapers? Jukka Leppikangas. Master’s Thesis, English Philology, The Department of Modern Languages, Autumn 2017. Faculty: Faculty of Arts. Department: Department of Modern Languages. Author: Jukka Leppikangas. Title: How do journalists edit the content of press releases for publication in online newspapers? Subject: English Philology. Level: Master’s thesis. Month and year: October 2017. Number of pages: 88. Abstract: The aim of my study is to examine how American and British journalists edit text taken from corporate press releases for use in news articles. It is motivated by the trend of recent years in which the influence of PR has grown while that of the press has weakened. With this study I seek to shed light on the means journalists have for operating in this environment. I focus on the categories and quantities of the linguistic changes made by journalists, using the research method of Henk Pander Maat (2008). Finally, I compare my results with his. Underlying the changes is a genre conflict between the press release and the news article. The press release genre calls for presenting its subject in a positive tone, which does not necessarily suit the more objective style of the news article. Thus the journalist -

Motorola - Enforcement of GPRS Standard Essential Patents

EUROPEAN COMMISSION CASE AT.39985 - MOTOROLA - ENFORCEMENT OF GPRS STANDARD ESSENTIAL PATENTS (Only the English text is authentic) ANTITRUST PROCEDURE Council Regulation (EC) 1/2003 Article 7 Regulation (EC) 1/2003 Date: 29/04/2014 This text is made available for information purposes only. A summary of this decision will be published in all EU languages in the Official Journal of the European Union. Parts of this text have been edited to ensure that confidential information is not disclosed. Those parts are replaced by a non-confidential summary in square brackets or are shown as […]. EUROPEAN COMMISSION Brussels, 29.04.2014 C(2014) 2892 final PUBLIC VERSION COMMISSION DECISION of 29.04.2014 addressed to Motorola Mobility LLC relating to proceedings under Article 102 of the Treaty on the Functioning of the European Union and Article 54 of the EEA Agreement Case AT.39985 - Motorola - Enforcement of GPRS standard essential patents (Only the English text is authentic) TABLE OF CONTENTS 1. Introduction 2. The undertakings concerned 2.1. Motorola 2.2. Apple 2.3. Other undertakings -

Operations for the Executive Suite Opening New Horizons for Current and Future Pharma Leaders

Operations for the Executive Suite: Opening new horizons for current and future pharma leaders. 2012. EDITORS: David Keeling, Ulf Schrader. PHARMACEUTICAL AND MEDICAL PRODUCTS OPERATIONS PRACTICE LEADERSHIP: Global, Europe, Middle East and Africa: Martin Lösch ([email protected]); North America: David Keeling ([email protected]); Asia: Vikas Bhadoria ([email protected]); Senior Knowledge Expert/Practice Manager: Vanya Telpis ([email protected]). For more information please contact [email protected]. Contents. Introduction: Operations for the executive suite (David Keeling, Ulf Schrader). New aspirations and operations models: Plantopia? A mandate for innovation in pharma manufacturing (Andrew Gonce, Ulf Schrader); Apple vs. Intel: What manufacturing model is right for pharma? (David Keeling, Venu Nagali, Vanya Telpis); Biopharmaceuticals success: Why manufacturing and technology strategies matter more than ever (Alberto Santagostino, Marco Ziegler). Developing talent: Changing of the guard: What’s needed from pharma’s next Operations leaders (Wolf-Christian Gerstner, David Keeling); Missing ingredient: Lean leadership on the factory floor (Andrew Gonce, Jeff Holland). Moving from cost to growth: From defense to offense: Leverage operations to increase revenue (Noel Greenberger, Frank Scholz, Vanya Telpis); Beyond the pill: Creating medical value through technology enablement (Jamie Cattell, Sastry Chilukuri, David -

DOCTORAL THESIS Title the Great Age of Design: from Design Thinking to Mental Midwifery and to Design's Impact on Brands Pres

DOCTORAL THESIS Title The Great Age of Design: From Design Thinking to Mental Midwifery and to Design’s Impact on Brands Presented by GÜRSEL ILIPINAR Centre ESADE BUSINESS SCHOOL Research Unit GRECOMAR Department MARKETING Directed by JORDI MONTAÑA MATOSAS ii I am dedicating this thesis to my dear parents: Sebahat and Seyhan ILIPINAR, and to my dear brother: Aydemir ILIPINAR, who have been there for me whenever I needed their affection and support! iii iv Acknowledgements I would like to thank my thesis tutor Professor Jordi Montaña for letting me in to the exciting world of Design, Professor Duane Truex for his intellectual stimulation and moral support, Professor Eduard Bonet for introducing me to the fascinating subject of Rhetoric and Narratives in Management Research, to Professor J.C. Spender for challenging me to amplify my imagination in the field of Organizational Theory, and to Dr. Oriol Iglesias for supporting me in the area of Branding, I would like to thank Professor Núria Agell for serving as president of my dissertation committee and Professor Francesc Soler Parellada and Professor Xavier Ginesta as members of my doctoral thesis committee. Professor Wes Johnston and Professor Naveen Donthu at Georgia State University, Raj Srivastava and Greg Thomas at Emory University, Dr. Toni-Matti Karjalainen at Aalto University’s IDBM Programme and Professor Tore Kristensen at Copenhagen Business School who hosted me in their institutions as a visiting scholar: Thanks for guiding my academic endeavor and inspiring this doctoral dissertation. Special thanks to the ESADE Business School´s Dean’s Office, PhD Programme Office and Vice Dean of Research. -

Elucidation of the Extended Pedigree of 'Honeycrisp' Apple and Genetic

Elucidation of the extended pedigree of ‘Honeycrisp’ apple and genetic architecture of its susceptibility to soft scald and soggy breakdown postharvest fruit disorders and zonal leaf chlorosis disorder A Dissertation SUBMITTED TO THE FACULTY OF THE UNIVERSITY OF MINNESOTA BY Nicholas Philip Howard IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY James J. Luby & James M. Bradeen June, 2017 © Nicholas Philip Howard 2017 Acknowledgements I would like to acknowledge the many people that helped make this dissertation possible. My advisor Dr. Jim Luby provided outstanding guidance and set me up with a rewarding project. My graduate committee members Dr. Jim Bradeen, Dr. Stan Hokanson, Dr. Cindy Tong, Dr. Matthew Clark, and Dr. Rex Bernardo likewise provided excellent guidance and expertise. My project was partially funded by the USDA NIFA project RosBREED, which provided wonderful opportunities to collaborate and learn from great scientists including Dr. Cameron Peace, Dr. Stijn Vanderzande, Dr. Lichun Cai, and particularly Dr. Eric van de Weg, who was instrumental in my understanding and implementation of SNP array data in pedigree-based QTL analysis. Many people in the fruit lab group helped with my research. I want to thank the other graduate students in the fruit lab group, Sarah Kostick, Seth Wannemuehler, Elizabeth Blissett, Ashley Powell, Joshua Anderson, and particularly Soon Li Teh, who was a great collaborator and was helpful in editing the figures in this dissertation. Fruit lab undergrad students and short term workers Baylee Miller, Emmi Klarer, Steve- Scofield Opiyo, Hannah Hauan, and Madeline Leslie all helped with copious amounts of phenotyping and sample processing. -

An Analysis of Defense Program Manager Decision Making in Complex and Chaotic Program Environments

NPS-PM-19-019 ACQUISITION RESEARCH PROGRAM SPONSORED REPORT SERIES Why do Programs Fail? An Analysis of Defense Program Manager Decision Making in Complex and Chaotic Program Environments. December 2018. LCDR James R. Donahue, USN; Capt. Thien N. Duong, USAF; CPT Friday E. Ameh, USA. Thesis Advisors: Dr. Robert F. Mortlock, Lecturer; Raymond D. Jones, Lecturer. Graduate School of Business & Public Policy, Naval Postgraduate School. Approved for public release; distribution is unlimited. Prepared for the Naval Postgraduate School, Monterey, CA 93943. Acquisition Research Program, Graduate School of Business & Public Policy, Naval Postgraduate School. The research presented in this report was supported by the Acquisition Research Program of the Graduate School of Business & Public Policy at the Naval Postgraduate School. To request defense acquisition research, to become a research sponsor, or to print additional copies of reports, please contact any of the staff listed on the Acquisition Research Program website (www.acquisitionresearch.net). ABSTRACT Department of Defense (DoD) program managers’ ability to make effective decisions is critical to a program’s performance. This qualitative study of two DoD program managers sheds light on their decision-making processes in complex and chaotic programmatic environments. Additionally, this study specifically focuses on four aggregate categories: leadership, attitude, bureaucracy, and reputation. These categories have profound influences on the program manager’s ability to process information, make sense of a situation, and make decisions accordingly. By understanding how program managers perceive reality when facing challenges, we could potentially introduce necessary changes, adopt proven practices, and redirect resources toward efforts that would help program managers make more effective decisions.