Fiber-Level On-The-Fly Procedural Textiles

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

2021 Catalog

2021 NEW PRODUCTS G-Power Flip and Punch Spin Bait Designed by Aaron Martens, Walleye anglers across the Midwest have become Gamakatsu has developed the dependent upon the spin style hooks for walleye rigs. new G-Power Heavy Cover Flip The Spin Bait hook can be rigged behind spinner & Punch Hook. A step up from blades, prop blades or used the G-Finesse Heavy Cover alone with just a simple Hook, for serious flipping and bead in front of them. It’s punching with heavy fluorocarbon and braid. The TGW (Tournament unique design incorporates Grade Wire) hook, paired with its welded eye, make this the strongest Gamakatsu swivels that is Heavy Cover hook in Gamakatsu’s G-Series lineup. Ideal for larger baits independent of the hook, giving the hook more freedom to spin while and weights, punching through grass mats and flipping into heavy reducing line twist. The Spin Bait hook features Nano Smooth Coat for timber. G-Power Flip and Punch ideally matches to all types of cover stealth presentations and unsurpassed hook penetration and the bait and able to withstand extreme conditions. Page 26 keeper barbs on the shank hold live and plastic baits on more securely. Page 48 G-Power Stinger Trailer Hook The new G-Power Stinger Trailer Hook Superline Offset Round Bend brilliance comes from Gamakatsu’s famous Gamakatsu’s Superline Offset Round B10S series of fly hooks and the expertise Bend is designed with a heavier of Professional Bass angler Aaron Martens. Superline wire best suited for heavy The Stinger Trailer has a strategically braided and fluorocarbon lines. -

Buffalo Police All Season Jacket

Uniforms, Transit Operators CP82007 Page 1 of 48 Specifications Appendix B ----------------------------------------------------------------------------------------------------------------------------- -------------------- 100. SF MUNI GORE-TEX JACKET & LINER – UNISEX STYLE NUMBER: Flying Cross by Fechheimer Item: 79900GTXA 10 STYLE: Waist length style with 6 snap storm flap overlapping a 2 way zipper. Side vents for access to equipment. Front and back flapped yokes to accommodate drop down panels. Utility shoulder straps. Hide-away hood. OUTER SHELL: 100% Nylon, 3 ply Supplex, 3.7 oz per sq. yd., with water and stain repellency, uncoated. COLOR: Black DROP LINING: Made from waterproof, breathable, windproof 2 layer Gore-Tex. Face fabric: 100% Black Polyester. Membrane layer: bi-component PTFE membrane. ====================================================================================== 101. REMOVABLE INSULATED LINER ENDURANCE INSULATED BASE LAYER JACKET SPECIFICATIONS STYLE NUMBER: Flying Cross by Fechheimer 55100A 10 (Black) The shell fabric shall be 100% Nylon with 37.5 padding insulation, 2.9 oz. per sq. yd. STYLE: The jacket shall be manufactured from new up-to-date pattern with an articulated sleeve, gusseted underarms and mandarin style collar. The jacket shall have two (2) upper napoleon pockets and two (2) lower vertical pockets with hidden zippers. There shall be (2) side zippers with adjustable snap closure. Sleeve cuff has partial Nylon Spandex for a comfort fit. Loops at back sleeve cuff seam and outside neck seam shall coordinate with snap tabs on related outerwear styles (). POCKET LINING SLEEVE LINING: The upper napoleon pocket lining shall extend from the hem to the chest and from the side seam to the front zipper edge. Pocket lining shall consist of a Taffeta material. The lining shall extend across the back yoke and down the top sleeve. -

Please Note: Vendor Must Provide One Sample of a Gold Badge for Sheriff and One Sample of a Silver Badge for Deputy

EL PASO COUNTY SHERIFF'S OFFICE DEPUTY BADGES C.W. Nielsen - Model #S-300 FINISH - Alloy either Alloy S or Alloy G depending on rank. BADGE is 3 inches point to point with floral design in each tip. Badge has a 1/4" dap and is struck from .064 material. LETTERING around badge circle is blue block: Deputy Sheriff (seal) El Paso County Lettering is separated by dot break points. The seal is a full color State of Texas seal 7/8" diameter with red rim, blue center area and white star. Atop the badge and straddling the top two tips is the title banner. It is affixed 1/8" above the top circle of the badge. The title banner is 5/6" wide. The titles are standing Roman letters in a field of blue enamel. All blue enamel colors are #643 blue. Attachments are B.A. Ballou #105 joint #68 catch with 2" foot pin. Gold Badges for the following officers: Sheriff Chief Deputy Commander Detective Lieutenant Sergeant Silver Badge for Deputy Please Note: Vendor must provide one sample of a Gold badge for Sheriff and one sample of a silver badge for Deputy. 5 EL PASO COUNTY SHERIFF’S OFFICE DETENTION OFFICER BADGE Badge Body Specifications: Diameter 72.4 mm or 2.82 inches Thickness 3.5 mm or .13 inches Material Brass Metal Plating TBD Silver for Detention Officer and Gold for Corporal and above Curvature 10 mm convex Fixture Vertical Safety Pin 2” Style Clasp (Welded) Enamel Genuine Cloisonne hard enamel lettering and state seal State Seal 3 D Rank Ribbon Specifications: Dimensions Approximately 50.46 X 18.46 mm TBD upon final artwork Thickness 2mm Materials Brass Plating To match badge body determined by rank Lettering Genuine Cloisonne hard enamel lettering Bureau Ribbon Specifications: Dimensions Approximately 50.46 X 18.46 mm TBD upon final artwork approval Thickness 2 mm Material Brass Plating To match badge body DETENTION BUREAU Lettering Genuine Cloisonne hard enamel lettering All die charges one time only fee as long as there are no changes to the physical design. -

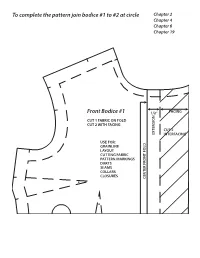

To Complete the Pattern Join Bodice #1 to #2 at Circle Front Bodice #1

To complete the pattern join bodice #1 to #2 at circle Chapter 2 Chapter 4 Chapter 6 Chapter 19 Front Bodice #1 1/2” FACING N CUT 1 FABRIC ON FOLD CUT 2 WITH FACING CUT 2 EXTENSIO INTERFACING USE FOR: GRAINLINE LAYOUT CUTTING FABRIC PATTERN MARKINGS DARTS SEAMS COLLARS CLOSURES CENTER FRONT FOLD To complete the pattern join bodice #1 to #2 at circle TO COMPLETE THE PATTERN JOIN BODICE #1 TO #2 AT CIRCLE STITCH TO MATCHPOINTS FOR DART TUCK FRONT BODICE #2 Front Bodice #2 Chapter 2 Chapter 4 Chapter 6 Chapter 19 STITCH TO MATCHPOINTS FOR DART TUCKS To complete the pattern join bodice #3 to #4 at circle Chapter 2 Chapter 4 Chapter 6 Back Bodice #3 CUT 2 FABRIC USE FOR: CK SEAM GRAINLINE LAYOUT CUTTING FABRIC CK FOLD PATTERN MARKINGS DARTS SEAMS COLLARS CENTER BA CUT HERE FOR CENTER BA To complete the pattern join bodice #3 to #4 at circle STITCH TO MATCHPOINTS FOR DART TUCKS Back Bodice #4 Chapter 2 Chapter 4 Chapter 6 Chapter 4 Chapter 11 Chapter 14 Chapter 17 MATCHPOINT Front Skirt #5 CUT 1 FABRIC USE FOR: V SHAPED SEAM WAISTBAND WAIST FACING BIAS WAIST FINISH CURVED/ALINE HEM BIAS FALSE HEM CENTER FRONT FOLD Chapter 4 Chapter 11 Chapter 14 Front Yoke #6 CUT 1 FABRIC CUT 1 INTERFACING USE FOR: V SHAPE SEAM WAISTBAND WAIST FACING BIAS WAIST FINISH C. F. FOLD C. F. MATCHPOINT Chapter 4 Chapter 6 Chapter 10 Chapter 11 Chapter 14 Chapter 17 MARK DART POINT HERE Back Skirt #7 CUT 2 FABRIC USE FOR: SEAMS ZIPPERS WAISTBAND WAIST FACING BIAS WAIST FINISH CURVED ALINE HEM BIAS FALSE HEM Chapter 4 CUT ON FOLD Chapter 12 Chapter 17 H TC NO WHEN -

FLY-FRONT ZIPPER III OTTOBRE Design® 7/2019 1

FLY-FRONT ZIPPER III OTTOBRE design® 7/2019 1 10 mm CF 1. Serge or zigzag raw curved 2. Understitch seam allowances to facing 3. Construct fly shield: Fold fly shield in 7. Turn pants fronts wrong side 8. Make cardboard template with 9. Stitch bar-tack on fly at the edge of fly facing. Pin and stitch close to seamline. Fold fly facing to wrong side half right sides together and stitch its up and pin free zipper tape to fly pattern piece for fly facing and use it as point where the first line of fly facing to edge of zipper of pants front and press crease along center- bottom edge. Turn fly shield right side facing only. Remove pins from guide when topstitching the fly. topstitching ends and the placket on left pants front right front edge of placket. out and serge or zigzag its raw long right side. Open zipper and second starts. Close zipper. Pin and topstitch facing to sides together. edges together. stitch free zipper tape to fly left pants front from right side in two facing carefully with two parallel Pin and stitch right zipper tape to right stages: First topstitch straight portion rows of stitching. edge of fly shield, both right side up and of facing, flipping fly shield out of the placing top end of zipper teeth 2 cm way and ending topstitching approx. PATTERN PIECES below top edge of fly shield. Use zipper 3 cm above bottom of fly. • left and right pants fronts foot for stitching. • fly shield (underlap under zipper on finished pants) left pants front Then pin fly shield in position and • fly facing (facing on wrong side of placket edge that conceals topstitch curved bottom edge of facing zipper on finished pants) to pants front, catching bottom end of 17 mm fly shield in stitching. -

Flame Resistant Apparel

Flame Resistant Apparel FireWear® Public Safety Pant PA08- 55% FFR™ (Fibrous Flame Retardant Fiber) 45% Cotton Twill Weave • Fabric weight 9.5 oz. • Uniform style with permanent creases • Self-locking brass zipper with NOMEX® zipper tape • Hook and eye closure with french fly • Seven belt loops will take 2” belt • Bottoms of side pockets are reinforced using “X” bartack • Two welted hip pockets; left one has button and loop closure • Two inserted front pockets • Sewn throughout with NOMEX® thread • Outseams are reinforced by topstitching entire length NFPA 70E Arc Rating 7905 Navy 9.5 oz. - 11.5 PPE Category 2 Denim Jeans CJ01-Flame Resistant 100% Cotton FR Denim • Fabric weight 14 oz. • Jean style with scooped front pockets • Two patch hip pockets on back • Right pocket has watch pocket • Seven belt loops will take 2” belt • Brass zipper with Aramid tape • FR pockets • Waistband closes with shank button and buttonhole • Sewn throughout with Aramid thread NFPA 70E Arc Rating 22529 14.0 oz. - 21.0 Blue Denim PPE Category 2 PRICING GUIDE AND CATALOG COMING SOON! 800.901.4784 PinnacleTextile.com NOMEX® EMS Pant PA65- 55% FFR™ (Fibrous Flame Retardant Fiber) 45% Cotton Twill Weave • Fabric weight 9.5 oz. • Hook and eye waist closure with french fly • Large cargo pocket on left leg with flap that closes with two hidden snaps • Fully lined waistband with FR shirt grip • Right leg has EMS scissor pocket • Two patch hip pockets; with hidden snap closures • Bottoms of side pockets are reinforced using “X” bartack • Seven belt loops will take 2” belt • Two inserted front pockets • Sewn throughout with NOMEX® thread • Self-locking brass zipper with NOMEX® zipper tape NFPA 70E Arc Rating 7905 Navy 9.5 oz. -

Monthly Newsletter

MONTHLY NEWSLETTER • THE BRITISH CLUB 189 Suriwongse Road Bangkok Telephone: 234-0247, 234-2592 Chairman: Mr. C. Stewart Vice-Chairman: Mr. A.J. Phillips Hon Treasurer: Mr. R. Barrett OUTPOST No. 6 August 1980 The top of the news this month is that the third Squash court is finally under construction. The project which was a twinkle in the eye of ex-squash chairman John Weymouth and which, during its over 9 months gestation period, was such a controversial issue within the Club is at last taking shape. A milestone round the neck of the Club some would say - but obviously not a moment too soon for those who can't get a court booking at a sociable hour. For those familiar with the recondite mysteries of crypto piscatorial activity on the calcareous watercourses of Hampshire - your prayers are answered - someone else would like to discuss it - see page 10. (Might have to run that ad. a while to get a reply - Ed.) There is no connection with the Reel Club - see page 6 (well I don't think so anyway). On a sadder note we report that a memorial service for Mike Dutton was conducted by Canon Taylor at Christ Church on July 8th, when about 100 friends attended to offer their prayers and respects to the memory of one of the Club's best-known members. Bob Coombes read the lesson and Arthur Hawtin delivered the eulogy. Many members later repaired to the Club for an impromptu wake, which is what Mike would have expected. -

Urban Outfitters Minimum Quality and Construction Standards

URBAN OUTFITTERS MINIMUM QUALITY AND CONSTRUCTION STANDARDS: Urban Outfitters Inc. produces and sells high quality garments and accessories. All vendors manufacturing products for UOI must adhere to “best practice” industry standards regarding all aspects of product development & production. This list does not include all of our standards; however, it does highlight the most important details. Any deviation from these standards must be approved by the Technical Designer prior to the start of bulk production. Urban Outfitters reserves the right to cancel, charge back or enforce repairs on any order if our minimum construction standards are not strictly followed. All fit samples should be made using the approved quality fabrics, trims & wash treatments. If substitute qualities are used to make a fit sample and that sample is then approved for production then the vendor must assume full responsibility for testing & comparing all approved qualities against the substitute qualities to ensure the production garment will have the same feel, function, fit & appearance as the approved fit sample unless otherwise noted. TABLE OF CONTENTS: ADJUSTERS, BARTACKS, BEADS/SEQUINS -

Garment Catalog

DRESS FOR SUCCESS. www.domesticuniform.com ROKAP CONTENTS A Red Kap® term for our no-roll waistband. This garment has enhanced engineering to withstand industrial laundry stress and abrasion. The Shirts 3-9 waistband has a mesh inner lining inside to reduce breakdown in fabric Image 10-12 fibers, giving the pant a permanent crease even after industrial laundering. Dealer Image 12 Pants 13-15 RELAXED FIT Our relaxed fit jeans feature a traditional rise that sits at the natural waist. Outerwear 16-21 Relaxed seat and thigh offer more room for mobility, and the slightly tapered leg fits over work boots. Food Processing 22-24 Flame-resistant 25-27 Hi Visibility 28 Enhanced Visibility 29 WASH CODES Lockers 30 INDUSTRIAL LAUNDRY LIGHT SOIL WASH HOME WASH Emblem Options 31 FLAME-RESISTANT ICON GUIDE ICON GUIDE 1 2HRC 1 PROTECTION3 4 Arc-rated FR shirt andNEW FR pants or FR coverall with a required WOMEN’S COMPANION PIECE AVAILABLE minimum ATPV of 4 cal/cm2. Garments on pages 3-6 featuring this icon have a female companion piece on pages 7-9. 1 3HRC 2 4PROTECTION Arc-rated FR shirtNEW and FR pants or FR coverall with a required minimum ATPV of 8 cal/cm2. TOUCHTEX™ TECHNOLOGY ® Superior color retention, soil release and wickability. NFPA 2112 COMPLIANT Bulwark® Protective Apparel offers flame-resistant protective garments that are certified by Underwriters Laboratories to meet the requirements of NFPA® 2112 Standard on Flame Resistant Garments for Protection of Industrial Personnel Against Flash TOUCHTEX PRO™ Fire, 2012 Edition. NFPA® 2113 Standard on Selection, Care, Use, Same features as Touchtex but with an even softer hand. -

User Manual 3DR Support

™ User Manual 3DR Support Contact 3DR Support for questions and technical help. online: 3dr.com/support email: [email protected] Support hours: Mon-Fri 8 am to 5 pm PST 3D Robotics (3DR) 1608 4th Street, Suite 410 Berkeley, CA 94710 Tel. +1 (858) 225-1414 3dr.com Solo User Manual V8 © 2016 3D Robotics Inc. Solo is a trademark of 3D Robotics, Inc. GoPro, HERO, the GoPro logo, and the GoPro Be a HERO logo are trademarks or registered trademarks of GoPro, Inc. 1 Contents 1 Introduction 1 1.1 System Overview 1 1.2 Aircraft Overview 2 1.3 Controller Overview 3 1.4 Operating Parameters 4 1.5 Autopilot 5 1.6 Propulsion 5 1.7 LED Meanings 5 2 Setup 6 2.1 In the Box - Solo with The Frame 6 2.2 In the Box - Solo with 3-Axis Gimbal 6 2.3 Battery 6 2.4 Controller 8 2.5 Propellers 9 2.6 Camera 10 2.7 Mobile App 11 3 The Solo Gimbal 16 3.1 In the Box 16 3.2 Gimbal Installation 16 3.3 Gimbal Operation 22 4 Safety 25 4.1 Location 25 4.2 Environmental Awareness 25 4.3 Visual Line of Sight 25 4.4 Flight School 26 4.5 Propellers 26 4.6 GPS 26 4.7 Home Position 27 4.8 Altitude Limit 27 4.9 Emergency Procedures 27 4.10 Flight Battery 28 4.11 Controller 28 4.12 Antenna Configuration 29 5 First Flight 30 5.1 Preflight Checklist 30 5.2 Takeoff 30 5.3 Landing 31 5.4 Return Home 32 5.5 In-Flight Data 34 5.6 Joystick Control 35 6 Using the Solo App 38 6.1 App Interface Overview 38 6.2 Smart Shots 44 6.3 Selfie 45 6.4 Cable Cam 47 6.5 Orbit 48 6.6 Follow 50 7 Alerts 52 7.1 Preflight Errors 52 7.2 In-Flight Errors 53 8 Advanced Settings 56 8.1 Advanced Flight Modes -

A History of the French Revolution Through the Lens of Fashion, Culture, and Identity Bithy R

Bucknell University Bucknell Digital Commons Honors Theses Student Theses Spring 2012 The Modernity of la Mode: a History of the French Revolution Through the Lens of Fashion, Culture, and Identity Bithy R. Goodman Bucknell University Follow this and additional works at: https://digitalcommons.bucknell.edu/honors_theses Part of the History Commons Recommended Citation Goodman, Bithy R., "The Modernity of la Mode: a History of the French Revolution Through the Lens of Fashion, Culture, and Identity" (2012). Honors Theses. 123. https://digitalcommons.bucknell.edu/honors_theses/123 This Honors Thesis is brought to you for free and open access by the Student Theses at Bucknell Digital Commons. It has been accepted for inclusion in Honors Theses by an authorized administrator of Bucknell Digital Commons. For more information, please contact [email protected]. Acknowledgments I would like to thank my adviser, David Del Testa, for his dedication to history as a subject and to my pursuits within this vast field. His passion and constant question of “So what?” has inspired me to think critically and passionately. Furthermore, he has helped me to always face the task of history with a sense of humor. Thank you to my secondary advisor and mentor, Paula Davis, who has always encouraged me to develop my own point of view. She has helped me to recognize that my point of view is significant; for, having something to say, in whatever medium, is a creative process. Thank you to the History, Theater, and English Departments, which have jointly given me the confidence to question and provided me a vehicle through which to articulate and answer these questions. -

US EPA, Pesticide Product Label, NO FLY ZONE,08/02/2019

UNITED STATES ENVIRONMENTAL PROTECTION AGENCY WASHINGTON, DC 20460 OFFICE OF CHEMICAL SAFETY AND POLLUTION PREVENTION August 2, 2019 Micah T. Reynolds Regulatory Consultant International Textile Group, Inc. 804 Green Valley Rd., Suite 300 Greensboro, NC 2748 Subject: Notification per PRN 98-10 – Adding alternate statements and company image/logo Product Name: NO FLY ZONE EPA Registration Number: 83588-1 Application Date: 17 April 2019 Decision Number: 550870 Dear Mr. Reynolds: The Agency is in receipt of your Application for Pesticide Notification under Pesticide Registration Notice (PRN) 98-10 for the above referenced product. The Registration Division (RD) has conducted a review of this request for its applicability under PRN 98-10 and finds that the action requested falls within the scope of PRN 98-10. The label submitted with the application has been stamped “Notification” and will be placed in our records. Should you wish to add/retain a reference to the company’s website on your label, then please be aware that the website becomes labeling under the Federal Insecticide Fungicide and Rodenticide Act and is subject to review by the Agency. If the website is false or misleading, the product would be misbranded and unlawful to sell or distribute under FIFRA section 12(a)(1)(E). 40 CFR 156.10(a)(5) list examples of statements EPA may consider false or misleading. In addition, regardless of whether a website is referenced on your product’s label, claims made on the website may not substantially differ from those claims approved through the registration process. Therefore, should the Agency find or if it is brought to our attention that a website contains false or misleading statements or claims substantially differing from the EPA approved registration, the website will be referred to the EPA’s Office of Enforcement and Compliance.