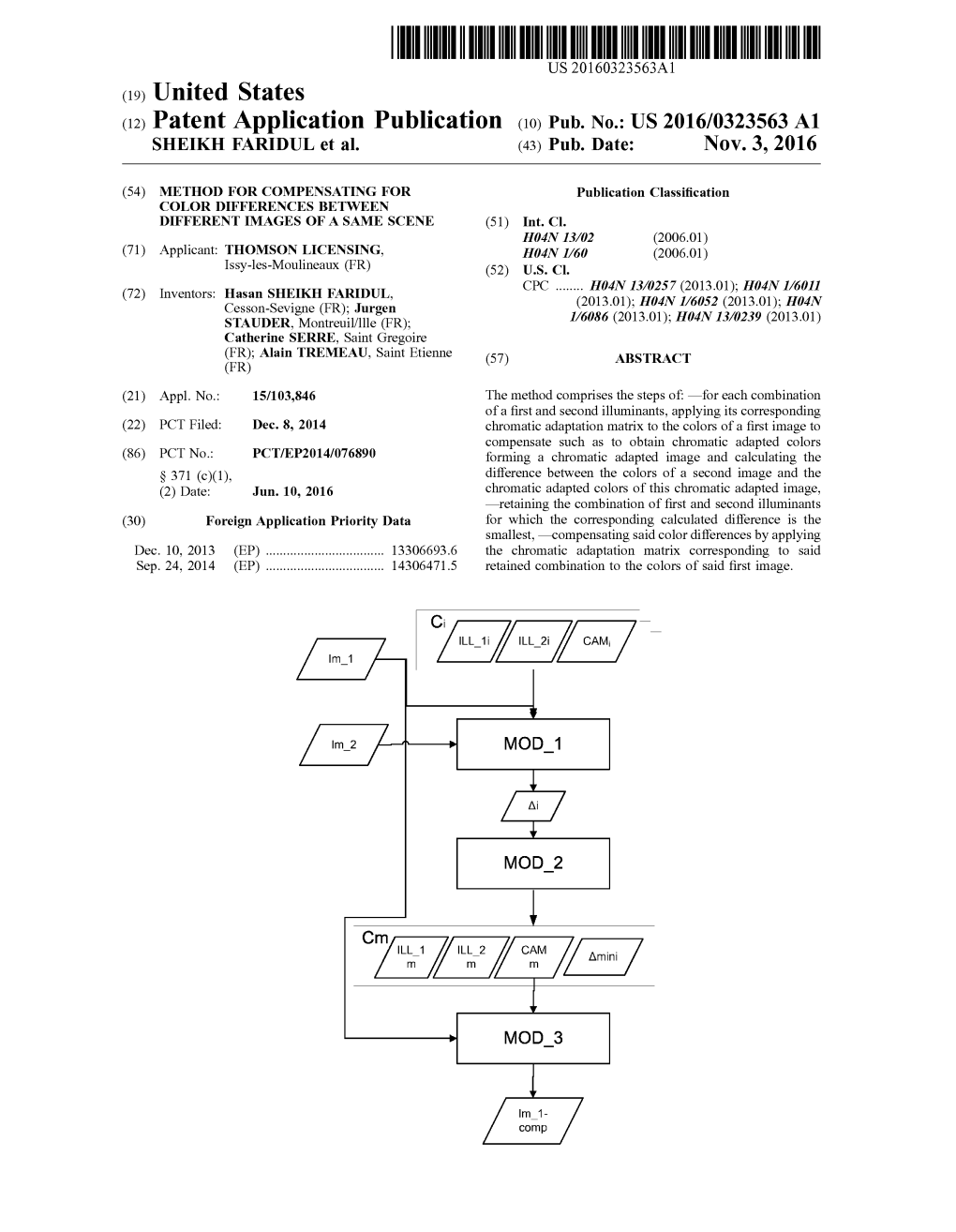

(12) Patent Application Publication (10) Pub. No.: US 2016/0323563 A1 SHEIKH FARIDUL et al.

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Method for Compensating for Color Differences Between Different Images of a Same Scene

(11) EP 3 001 668 A1 (12) EUROPEAN PATENT APPLICATION
(43) Date of publication: 30.03.2016, Bulletin 2016/13
(51) Int Cl.: H04N 1/60 (2006.01)
(21) Application number: 14306471.5
(22) Date of filing: 24.09.2014
(84) Designated Contracting States: AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR. Designated Extension States: BA ME
(71) Applicants: Thomson Licensing, 92130 Issy-les-Moulineaux (FR); CENTRE NATIONAL DE LA RECHERCHE SCIENTIFIQUE -CNRS-, 75794 Paris Cedex 16 (FR); Université Jean Monnet de Saint-Etienne, 42023 Saint-Etienne Cedex 2 (FR)
(72) Inventors: Sheikh Faridul, Hasan, 35576 CESSON-SÉVIGNÉ (FR); Stauder, Jurgen, 35576 CESSON-SÉVIGNÉ (FR); Serré, Catherine, 35576 CESSON-SÉVIGNÉ (FR); Tremeau, Alain, 42000 SAINT-ETIENNE (FR)
(74) Representative: Browaeys, Jean-Philippe, Technicolor, 1, rue Jeanne d’Arc, 92443 Issy-les-Moulineaux (FR)
(54) Method for compensating for color differences between different images of a same scene

(57) The method comprises the steps of:
- for each combination of a first and second illuminants, applying its corresponding chromatic adaptation matrix to the colors of a first image to compensate such as to obtain chromatic adapted colors forming a chromatic adapted image and calculating the difference between the colors of a second image and the chromatic adapted colors of this chromatic adapted image,
- retaining the combination of first and second illuminants for which the corresponding calculated difference is the smallest,
- compensating said color differences by applying the chromatic adaptation matrix corresponding to said retained combination to the colors of said first image.
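The three steps of the abstract (try every illuminant pair, keep the pair with the smallest difference, apply its matrix) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the patent's implementation: the function name `select_illuminant_pair`, the mean squared distance used as the "difference", the requirement that the two color lists are already in correspondence, and the toy diagonal matrices in the usage part are all hypothetical choices.

```python
import numpy as np

def select_illuminant_pair(first_colors, second_colors, cat_matrices):
    """Pick the illuminant pair whose adaptation matrix best maps image 1 to image 2.

    first_colors, second_colors: (N, 3) arrays of corresponding colors.
    cat_matrices: dict mapping (illuminant_1, illuminant_2) -> 3x3 matrix.
    Returns (best_pair, best_error, compensated_first_colors).
    """
    if not cat_matrices:
        raise ValueError("at least one candidate matrix is required")
    first = np.asarray(first_colors, dtype=float)
    second = np.asarray(second_colors, dtype=float)

    best_pair, best_error = None, np.inf
    for pair, matrix in cat_matrices.items():
        # Step 1: adapt the first image's colors with this pair's matrix.
        adapted = first @ np.asarray(matrix, dtype=float).T
        # Assumed difference measure: mean squared Euclidean distance.
        error = float(np.mean(np.sum((second - adapted) ** 2, axis=1)))
        # Step 2: retain the pair with the smallest difference.
        if error < best_error:
            best_pair, best_error = pair, error

    # Step 3: compensate the first image with the retained pair's matrix.
    best_matrix = np.asarray(cat_matrices[best_pair], dtype=float)
    return best_pair, best_error, first @ best_matrix.T


# Usage with made-up placeholder matrices (NOT real chromatic adaptation data).
rng = np.random.default_rng(0)
first = rng.uniform(0.1, 1.0, size=(50, 3))
candidates = {
    ("D65", "A"): np.diag([1.2, 1.0, 0.4]),
    ("D65", "D50"): np.diag([1.02, 1.0, 0.76]),
    ("A", "D65"): np.diag([0.85, 1.0, 2.5]),
}
# Simulate a second image that really was produced by the ("D65", "D50") matrix.
second = first @ candidates[("D65", "D50")].T
pair, err, compensated = select_illuminant_pair(first, second, candidates)
```

In this toy setup the search recovers the pair that generated the second image; with real images the difference measure and the way corresponding colors are obtained would matter a great deal.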

Book of Abstracts of the International Colour Association (AIC) Conference 2020

NATURAL COLOURS - DIGITAL COLOURS Book of Abstracts of the International Colour Association (AIC) Conference 2020 Avignon, France 20, 26-28th November 2020 Sponsored by le Centre Français de la Couleur (CFC) Published by International Colour Association (AIC) This publication includes abstracts of the keynote, oral and poster papers presented in the International Colour Association (AIC) Conference 2020. The theme of the conference was Natural Colours - Digital Colours. The conference, organised by the Centre Français de la Couleur (CFC), was held in Avignon, France on 20, 26-28th November 2020. For the first time, the conference was run both online and onsite because of the sanitary conditions imposed by the COVID-19 pandemic. More information at: www.aic2020.org. © 2020 International Colour Association (AIC) International Colour Association Incorporated PO Box 764 Newtown NSW 2042 Australia www.aic-colour.org All rights reserved. DISCLAIMER Matters of copyright for all images and text associated with the papers within the Proceedings of the International Colour Association (AIC) 2020 and Book of Abstracts are the responsibility of the authors. The AIC does not accept responsibility for any liabilities arising from the publication of any of the submissions. COPYRIGHT Reproduction of this document or parts thereof by any means whatsoever is prohibited without the written permission of the International Colour Association (AIC). All copies of the individual articles remain the intellectual property of the individual authors and/or their

International Journal for Scientific Research & Development

IJSRD - International Journal for Scientific Research & Development | Vol. 1, Issue 12, 2014 | ISSN (online): 2321-0613. Modifying Image Appearance for Improvement in Information Gaining For Colour Blinds. Prof. D.S. Khurge1, Bhagyashree Peswani2. 1Professor, 2ME, 1,2Department of Electronics and Communication, 1,2L.J. Institute of Technology, Ahmedabad.

Abstract: Color blindness is a color perception problem in which the human eye has difficulty distinguishing colors. People who suffer from color blindness face many problems in day-to-day life because much information is conveyed by color representations such as traffic lights and road signs. Daltonization is a procedure for adapting colors in an image or a sequence of images to improve the color perception of a color-deficient viewer. In this paper, we propose a re-coloring algorithm to improve accessibility for color-deficient viewers. In particular, we select protanopia, a type of dichromacy where the patient does not naturally develop “Red”, or long-wavelength, cones in his or her eyes.

Light transmitted by media such as television uses additive color mixing with primary colors of red, green, and blue, each of which stimulates one of the three types of color receptors of the eye with as little stimulation as possible of the other two. This is called the "RGB" color space. Mixtures of light are actually mixtures of these primary colors; they cover a huge part of the human color space and thus produce a large part of human color experiences. That is why color television sets or color computer monitors need to produce mixtures of primary colors. Other primary colors could in principle be used, but the

Application of Contrast Sensitivity Functions in Standard and High Dynamic Range Color Spaces

https://doi.org/10.2352/ISSN.2470-1173.2021.11.HVEI-153 This work is licensed under the Creative Commons Attribution 4.0 International License. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/. Color Threshold Functions: Application of Contrast Sensitivity Functions in Standard and High Dynamic Range Color Spaces. Minjung Kim, Maryam Azimi, and Rafał K. Mantiuk, Department of Computer Science and Technology, University of Cambridge.

Abstract: Contrast sensitivity functions (CSFs) describe the smallest visible contrast across a range of stimulus and viewing parameters. CSFs are useful for imaging and video applications, as contrast thresholds describe the maximum of color reproduction error that is invisible to the human observer. However, existing CSFs are limited. First, they are typically only defined for achromatic contrast. Second, even when they are defined for chromatic contrast, the thresholds are described along the cardinal dimensions of linear opponent color spaces, and therefore are difficult to relate to the dimensions of more commonly used color spaces,

[...] vision science. However, it is not obvious as to how contrast thresholds in DKL would translate to thresholds in other color spaces across their color components due to non-linearities such as PQ encoding. In this work, we adapt our spatio-chromatic CSF [12] to predict color threshold functions (CTFs). CTFs describe detection thresholds in color spaces that are more commonly used in imaging, video, and color science applications, such as sRGB and YCbCr. The spatio-chromatic CSF model from [12] can predict detection thresholds for any point in the color space and for any chromatic and achromatic modulation.

A Novel Technique for Modification of Images for Deuteranopic Viewers

ISSN (Online) 2278-1021, ISSN (Print) 2319 5940. IJARCCE, International Journal of Advanced Research in Computer and Communication Engineering, Vol. 5, Issue 4, April 2016. A Novel Technique for Modification of Images for Deuteranopic Viewers. Jyoti D. Badlani1, Prof. C.N. Deshmukh2. PG Student [Dig Electronics], Dept. of Electronics & Comm. Engineering, PRMIT&R, Badnera, Amravati, Maharashtra, India1; Professor, Dept. of Electronics & Comm. Engineering, PRMIT&R, Badnera, Amravati, Maharashtra, India2.

Abstract: About 8% of men and 0.5% of women in the world are affected by colour vision deficiency (CVD). According to the statistics, there are nearly 200 million colour-blind people in the world. Color-vision-deficient people are liable to miss information that is conveyed by color. People with complete color blindness can only see things in white, gray and black. Insufficient color acuity creates many problems for color-blind people, from daily activities to education. Color vision deficiency is a condition in which the affected individual cannot differentiate between colors as well as individuals without CVD. It is predominantly hereditary, although in some rare cases it is believed to be acquired through neurological injuries. A colour-vision-deficient viewer will miss certain critical information present in an image or video. With the aid of image processing, however, many methods have been developed that modify the image to make it suitable for viewing by a person with CVD. Color adaptation tools modify the colors used in an image to improve the discrimination of colors for individuals with CVD. This paper reviews previous research in this field and follows the advances that have occurred over time.

Dark Image Enhancement Using Perceptual Color Transfer

IEEE Access, vol. 5, 2017, pp. 1-1. Dark image enhancement using perceptual color transfer. Cepeda-Negrete, J., Sanchez-Yanez, RE., Correa-Tome, Fernando E and Lizarraga-Morales, Rocio A. Citation: Cepeda-Negrete, J., Sanchez-Yanez, RE., Correa-Tome, Fernando E and Lizarraga-Morales, Rocio A (2017). Dark image enhancement using perceptual color transfer. IEEE Access, 5 1-1. Stable URL: https://www.aacademica.org/jcepedanegrete/14 This work is licensed under a Creative Commons license. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/deed.es. Acta Académica is a non-profit academic project that is part of the open access initiative. Acta Académica was created to make it easier for researchers around the world to share their academic output. To create a free profile or access other works, visit: http://www.aacademica.org.

Received September 21, 2017, accepted October 13, 2017. Date of publication xxxx 00, 0000, date of current version xxxx 00, 0000. Digital Object Identifier 10.1109/ACCESS.2017.2763898 Dark Image Enhancement Using Perceptual Color Transfer. JONATHAN CEPEDA-NEGRETE1, RAUL E. SANCHEZ-YANEZ2 (Member, IEEE), FERNANDO E. CORREA-TOME2, AND ROCIO A. LIZARRAGA-MORALES3 (Member, IEEE). 1Department of Agricultural Engineering, University of Guanajuato DICIVA, Guanajuato 36500, Mexico. 2Department of Electronics Engineering, University of Guanajuato DICIS, Guanajuato 36500, Mexico. 3Department of Multidisciplinary Studies, University of Guanajuato DICIS, Guanajuato 36500, Mexico. Corresponding author: Raul E. Sanchez-Yanez ([email protected]). The work of J. Cepeda-Negrete was supported by the Mexican National Council on Science and Technology (CONACyT) through the Scholarship 290747 under Grant 388681/254884.

Color Appearance Models Second Edition

Color Appearance Models, Second Edition. Mark D. Fairchild, Munsell Color Science Laboratory, Rochester Institute of Technology, USA. Wiley–IS&T Series in Imaging Science and Technology. Series Editor: Michael A. Kriss, formerly of the Eastman Kodak Research Laboratories and the University of Rochester. The Reproduction of Colour (6th Edition), R. W. G. Hunt; Color Appearance Models (2nd Edition), Mark D. Fairchild. Published in Association with the Society for Imaging Science and Technology.

Copyright © 2005 John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England. Telephone (+44) 1243 779777. This book was previously published by Pearson Education, Inc. Email (for orders and customer service enquiries): [email protected] Visit our Home Page on www.wileyeurope.com or www.wiley.com. All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except under the terms of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London W1T 4LP, UK, without the permission in writing of the Publisher. Requests to the Publisher should be addressed to the Permissions Department, John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England, or emailed to [email protected], or faxed to (+44) 1243 770571. This publication is designed to offer Authors the opportunity to publish accurate and authoritative information in regard to the subject matter covered.

Color Blindness Bartender: an Embodied VR Game Experience

2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). Color Blindness Bartender: An Embodied VR Game Experience. Zhiquan Wang, Huimin Liu, Yucong Pan, Christos Mousas. Department of Computer Graphics Technology, Purdue University, West Lafayette, Indiana, U.S.A.

ABSTRACT: Color blindness is a very common condition, as almost one in ten people have some level of color blindness or visual impairment. However, there are many tasks in daily life that require the abilities of color recognition and visual discrimination. In order to understand the inconvenience that color-blind people experience in daily life, we developed a virtual reality (VR) application that provides the sense of embodiment of a color-blind person. Specifically, we designed a color-based task for users to complete under different types of color blindness in which users make colorful cocktails for customers and need to switch between different color blindness modalities of the application to distinguish different colors. Our application aims to

[...] correction [4]. Many color blindness test applications have been summarized in Plothe [13]. For example, color-vision adaptation methods for digital games have been developed to assist people with color blindness [12].

2.1 Colorblindness Types. Cone cells are responsible for color recognition. There are three types of cones [2], which respond to short, medium, and long wavelengths, respectively, and missing one of the cone types results in one of three different kinds of color blindness: tritanopia, deuteranopia, and protanopia. Tritanopia refers to missing short-wavelength cones, and results in an inability to distinguish the colors of blue and yellow.

Increasing Web Accessibility Through an Assisted Color Specification Interface for Colorblind People

Interaction Design and Architecture(s) Journal - IxD&A, N. 5-6, 2009, pp. 41-48. Increasing Web accessibility through an assisted color specification interface for colorblind people. Antonella Foti, Giuseppe Santucci. Dipartimento di Informatica e Sistemistica, Sapienza Università degli studi di Roma. [email protected] [email protected]

ABSTRACT: Nowadays web accessibility refers mainly to users with severe disabilities, neglecting colorblind people, i.e., people lacking a chromatic dimension at receptor level. As a consequence, a wrong usage of colors in a web site, in terms of red or green, together with blue or yellow, may result in a loss of information. Color models and color selection strategies proposed so far fail to accurately address such issues. This article describes a module of the VisAwis (VISual Accessibility for Web Interfaces) project that, following a compromise between usability and accessibility, allows color blind people to select distinguishable colors taking into account their specific missing receptor.

1. In order to be effective it focuses on a subset of the accessibility issues, dealing with problems associated with hypo-sight and colorblindness. In fact, it is the authors' belief that, in order to address accessibility issues effectively, it is mandatory to focus on a specific class of users at a time, addressing only the problems that are relevant for that class. As an example, while dealing with colorblind people it is crucial to ensure color separation between plain text and hyperlink text; such an activity is totally useless for people impaired by hypo-sight.
2. It defines a set of strategies and metrics to automatically

Computing Chromatic Adaptation

Computing Chromatic Adaptation. Sabine Süsstrunk. A thesis submitted for the Degree of Doctor of Philosophy in the School of Computing Sciences, University of East Anglia, Norwich. July 2005. © This copy of the thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that no quotation from the thesis, nor any information derived therefrom, may be published without the author’s prior written consent.

Abstract: Most of today’s chromatic adaptation transforms (CATs) are based on a modified form of the von Kries chromatic adaptation model, which states that chromatic adaptation is an independent gain regulation of the three photoreceptors in the human visual system. However, modern CATs apply the scaling not in cone space, but use “sharper” sensors, i.e. sensors that have a narrower shape than cones. The recommended transforms currently in use are derived by minimizing perceptual error over experimentally obtained corresponding color data sets. We show that these sensors are still not optimally sharp. Using different computational approaches, we obtain sensors that are even more narrowband. In a first experiment, we derive a CAT by using spectral sharpening on Lam’s corresponding color data set. The resulting Sharp CAT, which minimizes XYZ errors, performs as well as the current most popular CATs when tested on several corresponding color data sets and evaluating perceptual error. Designing a spherical sampling technique, we can indeed show that these CAT sensors are not unique, and that there exist a large number of sensors that perform just as well as CAT02, the chromatic adaptation transform used in CIECAM02 and the ICC color management framework.
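The von Kries idea described in this abstract, an independent gain on each of three sensor channels, applied either in cone space or in a "sharper" sensor space, can be illustrated with a short sketch. The function name `von_kries_cat` is hypothetical. The sensor matrices used here are the widely published Hunt-Pointer-Estevez cone matrix and the linearized Bradford matrix; they stand in for "cone" and "sharpened" sensors and are not the sensors derived in the thesis. The white points are the usual 2-degree XYZ values for D65 and illuminant A.

```python
import numpy as np

# XYZ -> cone-like LMS (Hunt-Pointer-Estevez, equal-energy normalization).
HPE = np.array([
    [0.38971, 0.68898, -0.07868],
    [-0.22981, 1.18340, 0.04641],
    [0.00000, 0.00000, 1.00000],
])

# XYZ -> "sharpened" sensor responses (linearized Bradford matrix).
BRADFORD = np.array([
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
])

def von_kries_cat(sensor, src_white_xyz, dst_white_xyz):
    """Build a 3x3 XYZ-to-XYZ adaptation matrix with per-channel gains.

    The gains are the ratios of the destination and source white responses
    in the chosen sensor space: M = S^-1 @ diag(S w_dst / S w_src) @ S.
    """
    sensor = np.asarray(sensor, dtype=float)
    src = sensor @ np.asarray(src_white_xyz, dtype=float)
    dst = sensor @ np.asarray(dst_white_xyz, dtype=float)
    gains = np.diag(dst / src)  # independent gain per sensor channel
    return np.linalg.inv(sensor) @ gains @ sensor

D65 = np.array([0.95047, 1.00000, 1.08883])
ILLUM_A = np.array([1.09850, 1.00000, 0.35585])

M_cone = von_kries_cat(HPE, D65, ILLUM_A)        # scaling in cone space
M_sharp = von_kries_cat(BRADFORD, D65, ILLUM_A)  # scaling in sharpened space
```

Both matrices map the source white exactly onto the destination white; they differ in how they move every other color, which is the degree of freedom the choice of sensors controls.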

RPI LRC Capturing the Lighting Edge New Color Metrics Mark Fairchild

Color Appearance of Displays, etc. RGC scheduled September 28, 2012 from 6:45 AM to 9:45 AM.

RPI LRC, Capturing the Lighting Edge: New Color Metrics. Oct. 3, 2012. Mark Fairchild, Rochester Institute of Technology, College of Science.

Some Adaptation Demos: Color; Blur (Sharpness); Noise; Chromatic, Blur, and Noise Adaptation.

Outline: Color Appearance Phenomena; Chromatic Adaptation; Metamerism; Color Appearance Models; HDR.

Color Appearance Phenomena: If two stimuli do not match in color appearance when (XYZ)1 = (XYZ)2, then some aspect of the viewing conditions differs. Various color-appearance phenomena describe relationships between changes in viewing conditions and changes in appearance: Bezold-Brücke Hue Shift, Abney Effect, Helmholtz-Kohlrausch Effect, Hunt Effect, Simultaneous Contrast, Crispening, Helson-Judd Effect, Stevens Effect, Bartleson-Breneman Equations, Chromatic Adaptation, Color Constancy, Memory Color, Object Recognition.

Simultaneous Contrast: The background in which a stimulus is presented influences the apparent color of the stimulus. Indicates lateral interactions and adaptation. Background change and the resulting stimulus color-appearance change: darker background, lighter stimulus; lighter background, darker stimulus; red background, green stimulus; green background, red stimulus; yellow background, blue stimulus; blue background, yellow stimulus.

Further slides: Simultaneous Contrast Example (a) (b); Josef Albers; Complex Spatial Interactions.

Hunt Effect: For a constant chromaticity, perceived colorfulness increases with luminance. As luminance increases, stimuli of lower colorimetric purity are required to match a given reference stimulus. Indicates nonlinearities in visual processing. [Figure: corresponding chromaticities across indicated relative changes in luminance (hypothetical data); chromaticity y on the vertical axis, relative luminance levels from 1 to 10000.]

COLOR APPEARANCE MODELS, 2Nd Ed. Table of Contents

COLOR APPEARANCE MODELS, 2nd Ed. Mark D. Fairchild. Table of Contents

Dedication, Table of Contents, Preface, Introduction

Chapter 1 Human Color Vision: 1.1 Optics of the Eye; 1.2 The Retina; 1.3 Visual Signal Processing; 1.4 Mechanisms of Color Vision; 1.5 Spatial and Temporal Properties of Color Vision; 1.6 Color Vision Deficiencies; 1.7 Key Features for Color Appearance Modeling

Chapter 2 Psychophysics: 2.1 Definition of Psychophysics; 2.2 Historical Context; 2.3 Hierarchy of Scales; 2.4 Threshold Techniques; 2.5 Matching Techniques; 2.6 One-Dimensional Scaling; 2.7 Multidimensional Scaling; 2.8 Design of Psychophysical Experiments; 2.9 Importance in Color Appearance Modeling

Chapter 3 Colorimetry: 3.1 Basic and Advanced Colorimetry; 3.2 Why is Color?; 3.3 Light Sources and Illuminants; 3.4 Colored Materials; 3.5 The Visual Response; 3.6 Tristimulus Values and Color-Matching Functions; 3.7 Chromaticity Diagrams; 3.8 CIE Color Spaces; 3.9 Color-Difference Specification; 3.10 The Next Step

Chapter 4 Color-Appearance Terminology: 4.1 Importance of Definitions; 4.2 Color; 4.3 Hue; 4.4 Brightness and Lightness; 4.5 Colorfulness and Chroma; 4.6 Saturation; 4.7 Unrelated and Related Colors; 4.8 Definitions in Equations; 4.9 Brightness-Colorfulness vs. Lightness-Chroma

Chapter 5 Color Order Systems: 5.1 Overview and Requirements; 5.2 The Munsell Book of Color; 5.3 The Swedish Natural Color System (NCS); 5.4 The Colorcurve System; 5.5 Other Color Order Systems; 5.6 Uses of Color Order Systems; 5.7 Color Naming Systems

Chapter 6 Color-Appearance