Image-Based Wheat Fungi Diseases Identification by Deep Learning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Induced Resistance in Wheat

https://doi.org/10.35662/unine-thesis-2819 Induced resistance in wheat A dissertation submitted to the University of Neuchâtel for the degree of Doctor in Naturel Science by Fares BELLAMECHE Thesis direction Prof. Brigitte Mauch-Mani Dr. Fabio Mascher Thesis committee Prof. Brigitte Mauch-Mani, University of Neuchâtel, Switzerland Prof. Daniel Croll, University of Neuchâtel, Switzerland Dr. Fabio Mascher, Agroscope, Changins, Switzerland Prof. Victor Flors, University of Jaume I, Castellon, Spain Defense on the 6th of March 2020 University of Neuchâtel Summary During evolution, plants have developed a variety of chemical and physical defences to protect themselves from stressors. In addition to constitutive defences, plants possess inducible mechanisms that are activated in the presence of the pathogen. Also, plants are capable of enhancing their defensive level once they are properly stimulated with non-pathogenic organisms or chemical stimuli. This phenomenon is called induced resistance (IR) and it was widely reported in studies with dicotyledonous plants. However, mechanisms governing IR in monocots are still poorly investigated. Hence, the aim of this thesis was to study the efficacy of IR to control wheat diseases such leaf rust and Septoria tritici blotch. In this thesis histological and transcriptomic analysis were conducted in order to better understand mechanisms related to IR in monocots and more specifically in wheat plants. Successful use of beneficial rhizobacteria requires their presence and activity at the appropriate level without any harmful effect to host plant. In a first step, the interaction between Pseudomonas protegens CHA0 (CHA0) and wheat was assessed. Our results demonstrated that CHA0 did not affect wheat seed germination and was able to colonize and persist on wheat roots with a beneficial effect on plant growth. -

Image-Based Wheat Fungi Diseases Identification by Deep

plants Article Image-Based Wheat Fungi Diseases Identification by Deep Learning Mikhail A. Genaev 1,2,3, Ekaterina S. Skolotneva 1,2, Elena I. Gultyaeva 4 , Elena A. Orlova 5, Nina P. Bechtold 5 and Dmitry A. Afonnikov 1,2,3,* 1 Institute of Cytology and Genetics, Siberian Branch of the Russian Academy of Sciences, 630090 Novosibirsk, Russia; [email protected] (M.A.G.); [email protected] (E.S.S.) 2 Faculty of Natural Sciences, Novosibirsk State University, 630090 Novosibirsk, Russia 3 Kurchatov Genomics Center, Institute of Cytology and Genetics, Siberian Branch of the Russian Academy of Sciences, 630090 Novosibirsk, Russia 4 All Russian Institute of Plant Protection, Pushkin, 196608 St. Petersburg, Russia; [email protected] 5 Siberian Research Institute of Plant Production and Breeding, Branch of the Institute of Cytology and Genetics, Siberian Branch of Russian Academy of Sciences, 630501 Krasnoobsk, Russia; [email protected] (E.A.O.); [email protected] (N.P.B.) * Correspondence: [email protected]; Tel.: +7-(383)-363-49-63 Abstract: Diseases of cereals caused by pathogenic fungi can significantly reduce crop yields. Many cultures are exposed to them. The disease is difficult to control on a large scale; thus, one of the relevant approaches is the crop field monitoring, which helps to identify the disease at an early stage and take measures to prevent its spread. One of the effective control methods is disease identification based on the analysis of digital images, with the possibility of obtaining them in field conditions, Citation: Genaev, M.A.; Skolotneva, using mobile devices. In this work, we propose a method for the recognition of five fungal diseases E.S.; Gultyaeva, E.I.; Orlova, E.A.; of wheat shoots (leaf rust, stem rust, yellow rust, powdery mildew, and septoria), both separately Bechtold, N.P.; Afonnikov, D.A. -

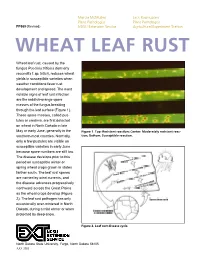

Wheat Leaf Rust

Marcia McMullen Jack Rasmussen Plant Pathologist Plant Pathologist PP589 (Revised) NDSU Extension Service Agricultural Experiment Station WHEAT LEAF RUST Wheat leaf rust, caused by the fungus Puccinia triticina (formerly recondita f. sp. tritici), reduces wheat yields in susceptible varieties when weather conditions favor rust development and spread. The most notable signs of leaf rust infection are the reddish-orange spore masses of the fungus breaking through the leaf surface (Figure 1). These spore masses, called pus- tules or uredinia, are first detected on wheat in North Dakota in late May or early June, generally in the Figure 1. Top: Resistant reaction; Center: Moderately resistant reac- southern-most counties. Normally, tion; Bottom: Susceptible reaction. only a few pustules are visible on susceptible varieties in early June because spore numbers are still low. The disease develops prior to this period on susceptible winter or spring wheat crops grown in states farther south. The leaf rust spores are carried by wind currents, and the disease advances progressively northward across the Great Plains as the wheat crops develop (Figure 2). The leaf rust pathogen has only occasionally over-wintered in North Dakota, during a mild winter or when protected by deep snow. Figure 2. Leaf rust disease cycle. North Dakota State University, Fargo, North Dakota 58105 JULY 2002 The Disease The following factors must be present for wheat leaf rust infection to occur: viable spores; susceptible or moder- ately susceptible wheat plants; moisture on the leaves (six to eight hours of dew); and favorable temperatures (60 to 80 degrees Fahrenheit). Relatively cool nights combined with warm days are excellent conditions for disease development. -

Cereal Rye Section 9 Diseases

SOUTHERN SEPTEMBER 2018 CEREAL RYE SECTION 9 DISEASES TOOLS FOR DIAGNOSING CEREAL DISEASE | ERGOT | TAKE-ALL | RUSTS | YELLOW LEAF SPOT (TAN SPOT) | FUSARIUM: CROWN ROT AND FHB | COMMON ROOT ROT | SMUT | RHIZOCTONIA ROOT ROT | CEREAL FUNGICIDES | DISEASE FOLLOWING EXTREME WEATHER EVENTS SOUTHERN JANUARY 2018 SECTION 9 CEREAL rye Diseases Key messages • Rye has good tolerance to cereal root diseases. • The most important disease of rye is ergot (Claviceps purpurea). It is important to realise that feeding stock with ergot infested grain can result in serious losses. Grain with three ergots per 1,000 kernels can be toxic. 1 • Stem and leaf rusts can usually be seen on cereal rye in most years, but they are only occasionally a serious problem. 2 • All commercial cereal rye varieties have resistance to the current pathotypes of stripe rust. However, the out-crossing nature of the species will mean that under high disease pressure, a proportion of the crop (approaching 15–20% of the plant population) may show evidence of the disease. Other diseases are usually insignificant. • Cereal rye has tolerance to take-all, making it a useful break crop following grassy pastures. 3 • Bevy is a host for the root disease take-all and this should be carefully monitored. 4 General disease management strategies: • Use resistant or partially resistant varieties. • Use disease-free seed. • Use fungicidal seed treatments to kill fungi carried on the seed coat or in the seed. • Have a planned in-crop fungicide regime. • Conduct in-crop disease audits to determine the severity of the disease. This can be used as a tool to determine what crop is grown in what paddock the following year. -

Virulence of Puccinia Triticina on Wheat in Iran

African Journal of Plant Science Vol. 4 (2), pp. 026-031, February 2010 Available online at http://www.academicjournals.org/ajps ISSN 1996-0824 © 2010 Academic Journals Full Length Research Paper Virulence of Puccinia triticina on wheat in Iran S. Elyasi-Gomari Azad Islamic University, Shoushtar Branch, Faculty of Agriculture, Shoushtar, Iran. E-mail: [email protected]. Accepted 12 January, 2010 Wheat leaf rust is controlled mainly by race-specific resistance. To be effective, breeding wheat for resistance to leaf rust requires knowledge of virulence diversity in local populations of the pathogen. Collections of Puccinia triticina were made from rust-infected wheat leaves on the territory of Khuzestan province (south-west) in Iran during 2008 - 2009. In 2009, up to 20 isolates each of the seven most common leaf rust races plus 8 -10 isolates of unnamed races were tested for virulence to 35 near- isogenic wheat lines with different single Lr genes for leaf rust resistance. The lines with Lr9, Lr25, Lr28 and Lr29 gene were resistant to all of the isolates. Few isolates of known races but most isolates of the new, unnamed races were virulent on Lr19. The 35 Lr gene lines were also exposed to mixed race inoculum in field plots to tests effectiveness of their resistance. No leaf rust damage occurred on Lr9, Lr25, Lr28 and Lr29 in the field, and lines with Lr19, Lr16, Lr18, Lr35, Lr36, Lr37 and the combination Lr27 + Lr31 showed less than 15% severity. A total of 500 isolates of P. triticina obtained from five commercial varieties of wheat at two locations in the eastern and northern parts of the Khuzestan region were identified to race using the eight standard leaf rust differential varieties of Johnson and Browder. -

Early Molecular Signatures of Responses of Wheat to Zymoseptoria Tritici in Compatible and Incompatible Interactions

Plant Pathology (2016) Doi: 10.1111/ppa.12633 Early molecular signatures of responses of wheat to Zymoseptoria tritici in compatible and incompatible interactions E. S. Ortona, J. J.Ruddb and J. K. M. Browna* aJohn Innes Centre, Norwich Research Park, Norwich NR4 7UH; and bRothamsted Research, Harpenden AL5 2JQ, UK Zymoseptoria tritici, the causal agent of septoria tritici blotch, a serious foliar disease of wheat, is a necrotrophic pathogen that undergoes a long latent period. Emergence of insensitivity to fungicides, and pesticide reduction policies, mean there is a pressing need to understand septoria and control it through greater varietal resistance. Stb6 and Stb15, the most common qualitative resistance genes in modern wheat cultivars, determine specific resistance to avirulent fun- gal genotypes following a gene-for-gene relationship. This study investigated compatible and incompatible interactions of wheat with Z. tritici using eight combinations of cultivars and isolates, with the aim of identifying molecular responses that could be used as markers for disease resistance during the early, symptomless phase of colonization. The accumulation of TaMPK3 was estimated using western blotting, and the expression of genes implicated in gene-for- gene interactions of plants with a wide range of other pathogens was measured by qRT-PCR during the presymp- tomatic stages of infection. Production of TaMPK3 and expression of most of the genes responded to inoculation with Z. tritici but varied considerably between experimental replicates. However, there was no significant difference between compatible and incompatible interactions in any of the responses tested. These results demonstrate that the molecular biology of the gene-for-gene interaction between wheat and Zymoseptoria is unlike that in many other plant diseases, indicate that environmental conditions may strongly influence early responses of wheat to infection by Z. -

Evaluation of Winter Wheat Germplasm for Resistance To

EVALUATION OF WINTER WHEAT GERMPLASM FOR RESISTANCE TO STRIPE RUST AND LEAF RUST A Thesis Submitted to the Graduate Faculty of the North Dakota State University of Agriculture and Applied Science By ALBERT OKABA KERTHO In Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE Major Department: Plant Pathology August 2014 Fargo, North Dakota North Dakota State University Graduate School Title EVALUATION OF WINTER WHEAT GERMPLASM FOR RESISTANCE TO STRIPE RUST AND LEAF RUST By ALBERT OKABA KERTHO The Supervisory Committee certifies that this disquisition complies with North Dakota State University’s regulations and meets the accepted standards for the degree of MASTER OF SCIENCE SUPERVISORY COMMITTEE: Dr. Maricelis Acevedo Chair Dr. Francois Marais Dr. Liu Zhaohui Dr. Phil McClean Approved: 09/29/2014 Dr. Jack Rasmussen Date Department Chair ABSTRACT Wheat leaf rust, caused by Puccinia triticina (Pt), and wheat stripe rust caused by P. striiformis f. sp. tritici (Pst) are important foliar diseases of wheat (Triticum aestivum L.) worldwide. Breeding for disease resistance is the preferred strategy of managing both diseases. The continued emergence of new races of Pt and Pst requires a constant search for new sources of resistance. Winter wheat accessions were evaluated at seedling stage in the greenhouse with races of Pt and Pst that are predominant in the North Central US. Association mapping approach was performed on landrace accessions to identify new or underutilized sources of resistance to Pt and Pst. The majority of the accessions were susceptible to all the five races of Pt and one race of Pst. Association mapping studies identified 29 and two SNP markers associated with seedling resistance to leaf rust and stripe rust, respectively. -

Achievements and Prospects of Wheat Breeding for Disease Resistance

Czech J. Genet. Plant Breed., 38, 2002 (1): 16–28 Achievements and Prospects of Wheat Breeding for Disease Resistance PAVEL BARTOŠ, VÁCLAV ŠÍP, JANA CHRPOVÁ, JOSEF VACKE, EVA STUCHLÍKOVÁ, VERONIKA BLAŽKOVÁ, JANA ŠÁROVÁ and ALENA HANZALOVÁ Research Institute of Crop Production – Division of Genetics and Plant Breeding, Prague-Ruzyně, Czech Republic Abstract: Achievements and prospects of wheat breeding for disease resistance in the world and in the Czech Republic are reviewed. Attention is paid to rusts, powdery mildew, leaf blotch, glume blotch, tan spot, fusarium head blight, common and dwarf bunt, eyespot, barley yellow dwarf virus on wheat and wheat dwarf virus. Genes for resistance to rusts and powdery mildew in the cultivars registered in the Czech Republic are listed. Promising resistance genes and sources of resistance to the above mentioned diseases are reviewed. Prospects of resistance breeding including application of methods of molecular genetics and development of synthetic hexaploids are outlined. Keywords: wheat; resistance breeding; fungal diseases; virus diseases; Czech Republic The development of disease resistance breeding is (defined by JOHNSON [1981]) attracted attention par- closely linked with the development of genetics, plant ticularly in the last decade when several conferences pathology and the progress of plant breeding methods. were devoted to this aspect of resistance. Finally, recent First crosses of wheat based on Mendels genetic princi- development of molecular biology opened many new ples, aiming to transfer disease resistance, were carried prospects for the resistance breeding. out by BIFFEN (1905). He found monogenic inheritance of yellow rust resistance in wheat. Such inheritance has been later confirmed in other crops and pathogens. -

Characterization of Puccinia Recondita, the Causal Agent of Brown Rust: a Review

AGRICULTURAL SCIENCES DOI: 10.22616/rrd.25.2019.051 CHARACTERIZATION OF PUCCINIA RECONDITA, THE CAUSAL AGENT OF BROWN RUST: A REVIEW Kintija Peksa1,2, Biruta Bankina1 1Latvia University of Life Sciences and Technologies, Latvia 2Institute of Agricultural Resources and Economics, Latvia [email protected] Abstract Brown rust caused by Puccinia recondita is a significant disease in cereal growing areas worldwide. On average, brown rust can cause yield losses from 10% to 40% resulting in economic losses. The disease damages the leaves, stems and glumes of cereals. Classification of the pathogen causing brown rust has undergone several revisions. There are two different opinions about the causal agent causing brown rust on rye and wheat. Some scientists consider that the causal agent of brown rust in wheat (Triticum aestivum L.) and rye (Secale cereale) is included in the broad species of P. recondita defined by Cummins, but other scientists consider that there are two species causing brown rust – P. recondita in rye and P. triticina in wheat. There are many studies about the races of P. recondita in wheat: in North America, 70 races are collected every year; in Canada, 35 races have been found; in Europe, 105 races have been found. Unfortunately, there are no studies about the races of the pathogen of rye. P. recondita is a heteroecious fungus with a complicated life cycle. For successful development, the fungus requires cereals as primary hosts and different alternative hosts, depending on the specialization of the pathogen. Specific studies about the biology, distribution and harmfulness of Puccinia recondita in Latvia are necessary. -

Combating Stem and Leaf Rust of Wheat

IFPRI Discussion Paper 00910 November 2009 Combating Stem and Leaf Rust of Wheat Historical Perspective, Impacts, and Lessons Learned H.J. Dubin John P. Brennan 2020 Vision Initiative This paper has been prepared for the project on Millions Fed: Proven Successes in Agricultural Development (www.ifpri.org/millionsfed) INTERNATIONAL FOOD POLICY RESEARCH INSTITUTE The International Food Policy Research Institute (IFPRI) was established in 1975. IFPRI is one of 15 agricultural research centers that receive principal funding from governments, private foundations, and international and regional organizations, most of which are members of the Consultative Group on International Agricultural Research (CGIAR). FINANCIAL CONTRIBUTORS AND PARTNERS IFPRI’s research, capacity strengthening, and communications work is made possible by its financial contributors and partners. IFPRI receives its principal funding from governments, private foundations, and international and regional organizations, most of which are members of the Consultative Group on International Agricultural Research (CGIAR). IFPRI gratefully acknowledges the generous unrestricted funding from Australia, Canada, China, Finland, France, Germany, India, Ireland, Italy, Japan, Netherlands, Norway, South Africa, Sweden, Switzerland, United Kingdom, United States, and World Bank. MILLIONS FED “Millions Fed: Proven Successes in Agricultural Development” is a project led by IFPRI and its 2020 Vision Initiative to identify interventions in agricultural development that have substantially reduced hunger and poverty; to document evidence about where, when, and why these interventions succeeded; to learn about the key drivers and factors underlying success; and to share lessons to help inform better policy and investment decisions in the future. A total of 20 case studies are included in this project, each one based on a synthesis of the peer- reviewed literature, along with other relevant knowledge, that documents an intervention’s impact on hunger and malnutrition and the pathways to food security. -

Puccinia Triticina (Wheat Leaf Rust) Yuan Chai1, Darren J

Puccinia triticina (Wheat Leaf Rust) Yuan Chai1, Darren J. Kriticos1,2, Jason M. Beddow1, Noboru Ota3, Tania Yonow1,2, and William S. Cuddy4 1 HarvestChoice, InSTePP, University of Minnesota, St. Paul, MN, USA 2 CSIRO, Biosecurity and Agriculture Flagships, Canberra, ACT, Australia 3 CSIRO, Agriculture Flagship, Perth, WA, Australia 4 South Wales Department of Primary Industries, Menangle, NSW, Australia Background Information Introduction Common Names: Among the cereal rust diseases, wheat leaf rust, caused Wheat Leaf Rust, Brown Rust by Puccinia triticina, occurs most commonly and has the widest distribution (Kolmer et al. 2009). Leaf rust pri‐ Scientiic Name: marily infects the leaf blades, causing red‐orange pus‐ Puccinia triticina tules to erupt from the leaves. These pustules contain thousands of urediniospores that can disperse to infect Taxonomy: other plants. Yield losses in excess of 50 percent can re‐ Kingdom: Fungi; Phylum: Basidiomycota; sult if infection occurs early in the crop’s lifecycle (Huerta Class: Pucciniomycetes; Order: Pucciniales; ‐Espino et al. 2011). The frequency and wide geographic Family: Pucciniaceae distribution of leaf rust infections lead some (e.g., Huerta‐ Crop Hosts: Espino et al. 2011; Samborski 1985) to conclude that leaf Wheat (Triticum sp.), barley (Hordeum vulgare), rye rust is responsible for more crop damage worldwide than the other wheat rusts. (Secale cereale), triticale (X Triticosecale) Known Distribution Wheat leaf rust is distributed in all wheat‐growing re‐ gions of the world (Fig. 2). Leaf rust occurred nearly eve‐ ry year in the United States (Fig. 3), Canada and Mexico, causing serious losses in wheat production (Huerta‐ Espino et al. 2011; Roelfs 1989; Singh et al. -

Genomic Mapping of Leaf Rust and Stem Rust Resistance Loci in Durum

GENOMIC MAPPING OF LEAF RUST AND STEM RUST RESISTANCE LOCI IN DURUM WHEAT AND USE OF RAD-GENOTYPE BY SEQUENCING FOR THE STUDY OF POPULATION GENETICS IN PUCCINIA TRITICINA A Dissertation Submitted to the Graduate Faculty of the North Dakota State University of Agriculture and Applied Science By Meriem Aoun In Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY Major Department Plant Pathology November 2016 Fargo, North Dakota North Dakota State University Graduate School Title GENOMIC MAPPING OF LEAF RUST AND STEM RUST RESISTANCE LOCI IN DURUM WHEAT AND USE OF RAD-GENOTYPE BY SEQUENCING FOR THE STUDY OF POPULATION GENETICS IN PUCCINIA TRITICINA By Meriem Aoun The Supervisory Committee certifies that this disquisition complies with North Dakota State University’s regulations and meets the accepted standards for the degree of DOCTOR OF PHILOSOPHY SUPERVISORY COMMITTEE: Dr. Maricelis Acevedo Chair Dr. James A. Kolmer Dr. Elias Elias Dr. Robert Brueggeman Dr. Zhaohui Liu Approved: 11/09/16 Dr. Jack Rasmussen Date Department Chair ABSTRACT Leaf rust, caused by Puccinia triticina Erikss. (Pt), and stem rust, caused by Puccinia graminis f. sp. tritici Erikss. and E. Henn (Pgt), are among the most devastating diseases of durum wheat (Triticum turgidum L. var. durum). This study focused on the identification of Lr and Sr loci using association mapping (AM) and bi-parental population mapping. From the AM conducted on the USDA-National Small Grain Collection (NSGC), 37 loci associated with leaf rust response were identified, of which 14 were previously uncharacterized. Inheritance study and bulked segregant analysis on bi-parental populations developed from eight leaf rust resistance accessions from the USDA-NSGC showed that five of these accessions carry single dominant Lr genes on chromosomes 2B, 4A, 6BS, and 6BL.