Effective Crypto Ransomware Detection Using Hardware

Total pages: 16

File type: PDF, Size: 1020 Kb


Recommended publications


Operating System Boot from Fully Encrypted Device

Masaryk University, Faculty of Informatics. Operating System Boot from Fully Encrypted Device. Bachelor’s Thesis. Daniel Chromik. Brno, Fall 2016. Declaration: Hereby I declare that this paper is my original authorial work, which I have worked out on my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Daniel Chromik. Advisor: Ing. Milan Brož. Acknowledgement: I would like to thank my advisor, Ing. Milan Brož, for his guidance and his patience of a saint. Another round of thanks I would like to send towards my family and friends for their support. Abstract: The goal of this work is to describe existing solutions for booting Linux and Windows from fully encrypted devices with Secure Boot. Before that, though, the early boot process and bootloaders are described. A simple Linux distribution is then set up to boot from a fully encrypted device. Lastly, existing Windows encryption solutions are described. Keywords: boot process, Linux, Windows, disk encryption, GRUB 2, LUKS. Contents: 1 Introduction; 1.1 Thesis goals; 1.2 Thesis structure; 2 Boot Process Description; 2.1 Early Boot Process; 2.2 Firmware interfaces; 2.2.1 BIOS – Basic Input/Output System; 2.2.2 UEFI – Unified Extensible Firmware Interface; 2.3 Partitioning tables; 2.3.1 MBR – Master Boot Record

Taxonomy for Anti-Forensics Techniques & Countermeasures

St. Cloud State University, theRepository at St. Cloud State. Culminating Projects in Information Assurance, Department of Information Systems, 4-2020. Taxonomy for Anti-Forensics Techniques & Countermeasures. Ziada Katamara, [email protected]. Follow this and additional works at: https://repository.stcloudstate.edu/msia_etds. Recommended Citation: Katamara, Ziada, "Taxonomy for Anti-Forensics Techniques & Countermeasures" (2020). Culminating Projects in Information Assurance. 109. https://repository.stcloudstate.edu/msia_etds/109. This Starred Paper is brought to you for free and open access by the Department of Information Systems at theRepository at St. Cloud State. It has been accepted for inclusion in Culminating Projects in Information Assurance by an authorized administrator of theRepository at St. Cloud State. For more information, please contact [email protected]. Taxonomy for Anti-Forensics Techniques and Countermeasures, by Ziada Katamara. A Starred Paper Submitted to the Graduate Faculty of St Cloud State University in Partial Fulfillment of the Requirements for the Degree Master of Science in Information Assurance, June, 2020. Starred Paper Committee: Abdullah Abu Hussein, Chairperson; Lynn A Collen; Balasubramanian Kasi. Abstract: Computer forensic tools are used by forensics investigators to analyze evidence from seized devices collected at a crime scene or from a person, in such a way that the results or findings can be used in a court of law. These tools are important because they help law enforcement personnel solve crimes. Computer criminals are now aware of the forensics tools used; therefore, they use countermeasure techniques to efficiently obstruct the investigation processes.

Winter 2016 E-Newsletter

WINTER 2016 E-NEWSLETTER At Digital Mountain we assist our clients with their e-discovery, computer forensics and cybersecurity needs. With increasing encryption usage and the recent news of the government requesting Apple to provide "backdoor" access to iPhones, we chose to theme this E-Newsletter on the impact data encryption has on attorneys, litigation support professionals and investigators. THE SHIFTING LANDSCAPE OF DATA ENCRYPTION TrueCrypt, a free on-the-fly full disk encryption product, was the primary cross-platform solution for practitioners in the electronic discovery and computer forensics sector. Trusted and widely adopted, TrueCrypt’s flexibility to perform either full disk encryption or encrypt a volume on a hard drive was an attractive feature. When TrueCrypt encrypted a volume, a container was created to add files for encryption. As soon as the drive was unmounted, the data was protected. The ability to add a volume to the original container, where any files or the folder structure could be hidden within an encrypted volume, provided an additional benefit to TrueCrypt users. However, that all changed in May 2014 when the anonymous team that developed TrueCrypt decided to retire support for TrueCrypt. The timing of TrueCrypt’s retirement is most often credited to Microsoft’s ending support of Windows XP. The TrueCrypt team warned users that without support for Windows XP, TrueCrypt was vulnerable. Once support for TrueCrypt stopped, trust continued to erode as independent security audits uncovered specific security flaws. In the wake of TrueCrypt’s demise, people were forced to look for other encryption solutions. TrueCrypt’s website offered instructions for users to migrate to BitLocker, a full disk encryption program available in certain editions of Microsoft operating systems beginning with Windows Vista.

Full-Disk-Encryption Crash-Course

25C3: Full-Disk-Encryption Crash-Course. Everything to hide. Jürgen Pabel, CISSP, Akkaya Consulting GmbH. #0 Abstract Crash course for software based Full-Disk-Encryption. The technical architectures of full-disk-encryption solutions for Microsoft Windows and Linux are described. Technological differences between the two open-source projects TrueCrypt and DiskCryptor are explained. A wish-list with new features for these open-source Full-Disk-Encryption offerings wraps things up. Readers should be familiar with general operating system concepts and have some software development experience. #1 Introduction Software based Full-Disk-Encryption ("FDE") solutions are usually employed to maintain the confidentiality of data stored on mobile computer systems. The established terminology is "data-at-rest protection"; it implies that data is protected against unauthorized access, even if an adversary possesses the storage media. Therefore, some of the most common risks to data confidentiality are addressed: loss and theft of mobile computer systems. The encryption and decryption of data is completely transparent to computer users, which makes Full-Disk-Encryption solutions trivial to use. The only user interaction occurs in the Pre-Boot-Authentication ("PBA") environment, in which the computer user provides the cryptographic key in order to allow for the operating system to be started and data to be accessed. #2 Architecture I: Pre-Boot-Authentication The Pre-Boot-Authentication environment is loaded before the operating system when the computer is powered on. The primary purpose of the Pre-Boot-Authentication is to load the encryption key into memory and to initiate the boot process of the installed operating system.

Advanced Notification of Cyber Threats Against Mamba Ransomware

Advanced Notification of Cyber Threats against Mamba Ransomware. Security Advisory: AE-Advisory 17-42. Criticality: Critical. Advisory Released On: 13 August 2017. Impact: Mamba ransomware fully encrypts the hard drive of an infected machine. Solution: Follow the advice given in the Solution section. Affected Platforms: Windows Operating System (OS). Summary: As the leading trusted secure cyber coordination center in the region, aeCERT has investigated a ransomware that is new to the region, currently named “Mamba Ransomware”. Mamba ransomware uses DiskCryptor to encrypt disks with a very strong full disk encryption method. The attacker uses the psexec utility to gain access to the network and infect the machine with the malware. The malware goes deep into the system to gain access to all services, forces the machine to restart, then starts to encrypt the disk. Advisory Details: Mamba ransomware’s initial distribution is similar to that of other ransomware-type viruses, such as WannaCrypt and Petya. Furthermore, Mamba is used against corporations and organizations. The attacker uses Mamba ransomware to encrypt the hard drive of the victim’s machine. This ransomware uses a legitimate utility called DiskCryptor to achieve full disk encryption of the infected machine at the Master Boot Record level. To have the hard drive decrypted, the victim is asked to contact the attacker. The attackers need to gain access to the company’s network, using the psexec utility to run the ransomware on the victim’s machine. The exploit generates a password for the DiskCryptor utility.

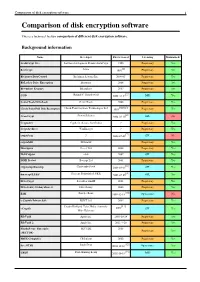

Comparison of Disk Encryption Software

Comparison of disk encryption software. This is a technical feature comparison of different disk encryption software.

Background information

| Name | Developer | First released | Licensing | Maintained? |
|---|---|---|---|---|
| ArchiCrypt Live | Softwaredevelopment Remus ArchiCrypt | 1998 | Proprietary | Yes |
| BestCrypt | Jetico | 1993 [1] | Proprietary | Yes |
| BitArmor DataControl | BitArmor Systems Inc. | 2008-05 | Proprietary | Yes |
| BitLocker Drive Encryption | Microsoft | 2006 | Proprietary | Yes |
| Bloombase Keyparc | Bloombase | 2007 | Proprietary | Yes |
| CGD | Roland C. Dowdeswell | 2002-10-04 [2] | BSD | Yes |
| CenterTools DriveLock | CenterTools | 2008 | Proprietary | Yes |
| Check Point Full Disk Encryption | Check Point Software Technologies Ltd | 1999 [3][4][5] | Proprietary | Yes |
| CrossCrypt | Steven Scherrer | 2004-02-10 [6] | GPL | No |
| Cryptainer | Cypherix (Secure-Soft India) | ? | Proprietary | Yes |
| CryptArchiver | WinEncrypt | ? | Proprietary | Yes |
| cryptoloop | ? | 2003-07-02 [7] | GPL | No |
| cryptoMill | SEAhawk | | Proprietary | Yes |
| Discryptor | Cosect Ltd. | 2008 | Proprietary | Yes |
| DiskCryptor | ntldr | 2007 | GPL | Yes |
| DISK Protect | Becrypt Ltd | 2001 | Proprietary | Yes |
| cryptsetup/dmsetup | Christophe Saout | 2004-03-11 [8] | GPL | Yes |
| dm-crypt/LUKS | Clemens Fruhwirth (LUKS) | 2005-02-05 [9] | GPL | Yes |
| DriveCrypt | SecurStar GmbH | 2001 | Proprietary | Yes |
| DriveSentry GoAnywhere 2 | DriveSentry | 2008 | Proprietary | Yes |
| E4M | Paul Le Roux | 1998-12-18 [10] | Open source | No |
| e-Capsule Private Safe | EISST Ltd. | 2005 | Proprietary | Yes |
| eCryptfs | Dustin Kirkland, Tyler Hicks (formerly Mike Halcrow) | 2005 [11] | GPL | Yes |
| FileVault | Apple Inc. | 2003-10-24 | Proprietary | Yes |
| FileVault 2 | Apple Inc. | 2011-7-20 | Proprietary | |

Disk Encryption

Centre for Research on Cryptography and Security. Disk encryption ... Milan Brož ESET [email protected]@fi.muni.cz October 30, 2014 FDE - Full Disk Encryption ● Transparent encryption on disk level (sectors) FDE (Full Disk Encryption) FVE (Full Volume Encryption) ... + offline protection for notebook, external drives + transparent for filesystem + no user decision later what to encrypt + encryption of hibernation and swap file + key removal = easy data disposal - more users, disk accessible to all of them - key disclosure, complete data leak - sometimes performance problems FDE – layer selection ● userspace (In Linux usually FUSE handled) (not directly in OS kernel) SW ● sw driver - low-level storage/SCSI - virtual device dm-crypt, TrueCrypt, DiskCryptor, BitLocker, ... AES-NI, special chips (mobile), TPM (Trusted Platform Module) ● driver + hw (hw acceleration) ● disk controller Chipset FDE (... external enclosure) HW Many external USB drives with "full hw encryption" HDD FDE Hitachi: BDE (Bulk Data Encryption) ● on-disk special drives Seagate: FDE (Full Disk Encryption) Toshiba: SED (Self-Encrypting Drives) ... Chipset or on-disk FDE ● Encryption algorithm is usually AES-128/256 ... encryption mode is often not specified clearly ● Where is the key stored? ● proprietary formats and protocols ● randomly generated? ● External enclosure ● Encryption often present (even if password is empty) => cannot access data without enclosure HW board ● Recovery: you need the same board / firmware ● Weak hw part: connectors ● Full system configuration ● laptop with FDE support in BIOS / trusted boot ● Vendor lock-in ● FIPS 140-2 (mandatory US standard, not complete evaluation of security!) Chipset FDE examples ● Encryption on external disk controller board + standard SATA drive ● USB3 external disk enclosure ● AES-256 encryption http://imgs.xkcd.com/comics/security.png

ICT-Entr 2010

COMMUNITY SURVEY ON ICT USAGE AND E-COMMERCE IN ENTERPRISES 2010 General outline of the survey Sampling unit: Enterprise. Scope / Target Population: Economic activity: Enterprises classified in the following categories of NACE Rev. 2: - Section C – “Manufacturing”; - Section D,E – “Electricity, gas and steam, water supply, sewerage and waste management”; - Section F – “Construction”; - Section G – “Wholesale and retail trade; repair of motor vehicles and motorcycles”; - Section H – “Transportation and storage”; - Section I – “Accommodation and food service activities”; - Section J – “Information and communication”; - Section L – “Real estate activities”; - Division 69-74 – “Professional, scientific and technical activities”; - Section N – “Administrative and support activities”; - Group 95.1 – “Repair of computers”; Only for modules A to C, E, G and X (X1, X2, X5): - Classes/groups 64.19 + 64.92 + 65.1 + 65.2 + 66.12 + 66.19 – “Financial and insurance activities”. Enterprise size: Enterprises with 10 or more persons employed; Optional: enterprises with number of persons employed between 1 and 9. Geographic scope: Enterprises located in any part of the territory of the Country. Reference period: Year 2009 for the % of sales/orders data and where specified. January 2010 for the other data. Survey period: First quarter 2010. Questionnaire: The layout of the national questionnaire should be defined by the country. However, countries should follow the order of the list of variables enclosed, if possible. The background information (Module X) should be placed at the end of the questionnaire. This information can be obtained in 3 different ways: from national registers, from Structural Business Statistics or collected directly with the ICT usage survey.

Encryption of Data - Questionnaire

Council of the European Union Brussels, 20 September 2016 (OR. en) 12368/16 LIMITE CYBER 102 JAI 764 ENFOPOL 295 GENVAL 95 COSI 138 COPEN 269 NOTE From: Presidency To: Delegations Subject: Encryption of data - Questionnaire Over lunch during the informal meeting of the Justice Ministers (Bratislava, 8 July 2016) the issue of encryption was discussed in the context of the fight against crime. Apart from an exchange on the national approaches, and the possible benefits of an EU or even global approach, the challenges which encryption poses to criminal proceedings were also debated. The Member States' positions varied mostly between those which have recently suffered terrorist attacks and those which have not. In general, the existence of problems stemming from data/device encryption was recognised as well as the need for further discussion. To prepare the follow-up in line with the Justice Ministers' discussion, the Presidency has prepared a questionnaire to map the situation and identify the obstacles faced by law enforcement authorities when gathering or securing encrypted e-evidence for the purposes of criminal proceedings. On the basis of the information to be gathered from Member States' replies, the Presidency will prepare the discussion that will take place in the Friends of the Presidency Group on Cyber Issues and consequently in CATS in preparation for the JHA Council in December 2016. Delegations are kindly invited to fill in the questionnaire as set out in the Annex and return it by October 3, 2016 to the following e-mail address: [email protected].

How Technology Enhances the Right to Privacy – a Case Study on the Right to Hide Project of the Hungarian Civil Liberties Union

How Technology Enhances the Right to Privacy – A Case Study on the Right to Hide Project of the Hungarian Civil Liberties Union. Fanny Hidvégi* & Rita Zágoni** INTRODUCTION The Right to Hide project of the Hungarian Civil Liberties Union (HCLU) is a website dedicated to the promotion of privacy-enhancing technologies. The HCLU developed the www.righttohide.com (and in Hungarian the www.nopara.org) websites to offer tips and tools for every Internet user on how to protect their online privacy. Part I presents the legal and political atmosphere in which the HCLU realized the urgency to develop the www.righttohide.com website and discusses the current shortcomings of the Hungarian privacy, surveillance, and whistle-blower protection laws. In Part II, we cover some of the theoretical and practical answers the website offers to these problems. We share these solutions through the HCLU’s Right to Hide website as a way of surmounting the legal and political hurdles that limit the fundamental right to privacy in Hungary. The HCLU is a non-profit human rights watchdog NGO that was established in Budapest, Hungary in 1994. The HCLU works independently of political parties, the state or any of its institutions. The HCLU’s aim is to promote the cause of fundamental rights. Generally, it has the goal of building and strengthening civil society and the rule of law in Hungary and in the Central and Eastern European (CEE) region. Since the HCLU is an independent non-profit organization, it relies mostly on foundations and private donations for financial support.

Mamba Ransomware Weaponizing DiskCryptor

TLP:WHITE. 23 March 2021. Alert Number: CU-000143-MW. The following information is being provided by the FBI, with no guarantees or warranties, for potential use at the sole discretion of recipients in order to protect against cyber threats. This data is provided to help cyber security professionals and system administrators guard against the persistent malicious actions of cyber actors. This FLASH was coordinated with DHS-CISA. This FLASH has been released TLP:WHITE. Subject to standard copyright rules, TLP:WHITE information may be distributed without restriction. For comments or questions related to the content or dissemination of this product, contact CyWatch. Mamba Ransomware Weaponizing DiskCryptor. Summary: Mamba ransomware has been deployed against local governments, public transportation agencies, legal services, technology services, industrial, commercial, manufacturing, and construction businesses. Mamba ransomware weaponizes DiskCryptor, an open source full disk encryption software, to restrict victim access by encrypting an entire drive, including the operating system. DiskCryptor is not inherently malicious but has been weaponized. Once encrypted, the system displays a ransom note including the actor’s email address, ransomware file name, the host system name, and a place to enter the decryption key. Victims are instructed to contact the actor’s email address to pay the ransom in exchange for the decryption key. WE NEED YOUR HELP! If you find any of these indicators on your networks, or have related information, please contact FBI CYWATCH immediately. Email: [email protected] Phone: 1-855-292-3937 *Note: By reporting any related information to FBI CyWatch, you are assisting in sharing information that allows the FBI

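Global Systems of Interconnected Computer Networks and Criminal Law (Globální systémy propojených počítačových sítí a trestní právo)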

Faculty of Law, Masaryk University, Department of Criminal Law. Dissertation: Global Systems of Interconnected Computer Networks and Criminal Law. JUDr. Andrej Lobotka, 2015. "I declare that I wrote the dissertation on the topic Global Systems of Interconnected Computer Networks and Criminal Law on my own. All sources of information that I used in writing this work have been cited in the footnotes and are listed in the list of sources and literature used." Brno, 31 August 2015, JUDr. Andrej Lobotka. Here I would like to thank my supervisor, doc. JUDr. Josef Kuchta, CSc., as well as the other members of the Department of Criminal Law of the Faculty of Law of Masaryk University, namely doc. JUDr. Věra Kalvodová, Dr., doc. JUDr. Marek Fryšták, Ph.D., prof. JUDr. Jaroslav Fenyk, PhD., DSc., Univ. Priv. Prof., prof. JUDr. Vladimír Kratochvíl, CSc., JUDr. Eva Žatecká, Ph.D., and JUDr. Milana Hrušáková, Ph.D., for their accommodating and helpful approach, their expert guidance, and the valuable methodological advice they gave me both in consultations on this dissertation and throughout my doctoral studies. The dissertation reflects the state of the law and case law as of 31 August 2015. ANNOTATION The dissertation Global Systems of Interconnected Computer Networks and Criminal Law deals with Internet crime and the fight against it. Immediately after an introductory part explaining the origin of the Internet and its basic functioning, the work defines Internet crime and describes its particular features in more detail (specific forms of conduct, changes in how traditional crimes are committed, the jurisdiction of individual states, the local jurisdiction of courts, and changes in the characteristics of the "typical perpetrator" of a given crime). These features in turn give rise to distinctive requirements for investigating and proving Internet crime, for punishing its perpetrators, and for prevention strategies, whether national, school, corporate, or individual.