DIGITAL ELECTRONICS and DESIGN with VHDL

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

Proposal to Add U+2B95 Rightwards Black Arrow to Unicode Emoji

Proposal to add U+2B95 Rightwards Black Arrow to Unicode Emoji
J. S. Choi, 2015‐12‐12

Abstract
In the Unicode Standard 7.0 from 2014, ⮕ U+2B95 was added with the intent to complete the family of black arrows encoded by ⬅⬆⬇ U+2B05–U+2B07. However, due to historical timing, ⮕ U+2B95 was not yet encoded when the Unicode Emoji were first encoded in 2009–2010, and thus the family of four emoji black arrows were mapped not only to ⬅⬆⬇ U+2B05–U+2B07 but also to ➡ U+27A1 (a compatibility character for ITC Zapf Dingbats) instead of ⮕ U+2B95. It is thus proposed that ⮕ U+2B95 be added to the set of Unicode emoji characters and be given emoji‐ and text‐style standardized variants, in order to match the properties of its siblings ⬅⬆⬇ U+2B05–U+2B07, with which it is explicitly unified.

1 Introduction
This document primarily discusses five encoded characters, already in Unicode as of 2015:
⮕ U+2B95 Rightwards Black Arrow: The main encoded character being discussed. Located in the Miscellaneous Symbols and Arrows block.
⬅⬆⬇ U+2B05–U+2B07 Leftwards, Upwards, and Downwards Black Arrow: The three black arrows that ⮕ U+2B95 completes. Also located in the Miscellaneous Symbols and Arrows block.
➡ U+27A1 Black Rightwards Arrow: A compatibility character for ITC Zapf Dingbats. Located in the Dingbats block.
This document proposes the addition of ⮕ U+2B95 to the set of emoji characters as defined by Unicode Technical Report (UTR) #51: “Unicode Emoji”. In other words, it proposes: 1. A property change: ⮕ U+2B95 should be given the Emoji property defined in UTR #51.

ISO Basic Latin Alphabet

ISO basic Latin alphabet
The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2]

ISO basic Latin alphabet
Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z
Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z

Contents: History; Terminology (Name for Unicode block that contains all letters; Names for the two subsets; Names for the letters); Timeline for encoding standards; Timeline for widely used computer codes supporting the alphabet; Representation; Usage (Alphabets containing the same set of letters; Column numbering); See also; References

History
By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1]

Terminology
Name for Unicode block that contains all letters
The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin".

IGP® / VGL Emulation Code V™ Graphics Language Programmer's Reference Manual Line Matrix Series Printers

IGP® / VGL Emulation Code V™ Graphics Language Programmer’s Reference Manual Line Matrix Series Printers Trademark Acknowledgements IBM and IBM PC are registered trademarks of the International Business Machines Corp. HP and PCL are registered trademarks of Hewlett-Packard Company. IGP, LinePrinter Plus, PSA, and Printronix are registered trademarks of Printronix, LLC. QMS is a registered trademark and Code V is a trademark of Quality Micro Systems, Inc. CSA is a registered certification mark of the Canadian Standards Association. TUV is a registered certification mark of TUV Rheinland of North America, Inc. UL is a registered certification mark of Underwriters Laboratories, Inc. This product uses Intellifont Scalable typefaces and Intellifont technology. Intellifont is a registered trademark of Agfa Division, Miles Incorporated (Agfa). CG Triumvirate are trademarks of Agfa Division, Miles Incorporated (Agfa). CG Times, based on Times New Roman under license from The Monotype Corporation Plc is a product of Agfa. Printronix, LLC. makes no representations or warranties of any kind regarding this material, including, but not limited to, implied warranties of merchantability and fitness for a particular purpose. Printronix, LLC. shall not be held responsible for errors contained herein or any omissions from this material or for any damages, whether direct, indirect, incidental or consequential, in connection with the furnishing, distribution, performance or use of this material. The information in this manual is subject to change without notice. This document contains proprietary information protected by copyright. No part of this document may be reproduced, copied, translated or incorporated in any other material in any form or by any means, whether manual, graphic, electronic, mechanical or otherwise, without the prior written consent of Printronix, LLC.

Release Notes for XFree86® 4.8.0 (The XFree86 Project, Inc, December 2008)

Release Notes for XFree86® 4.8.0
The XFree86 Project, Inc
December 2008

Abstract
This document contains information about the various features and their current status in the XFree86 4.8.0 release.

1. Introduction to the 4.x Release Series
XFree86 4.0 was the first official release of the XFree86 4 series. The current release (4.8.0) is the latest in that series. The XFree86 4.x series represents a significant redesign of the XFree86 X server, with a strong focus on modularity and configurability.

2. Configuration: a Quick Synopsis
Automatic configuration was introduced with XFree86 4.4.0 which makes it possible to start XFree86 without first creating a configuration file. This has been further improved in subsequent releases. If you experienced any problems with automatic configuration in a previous release, it is worth trying it again with this release. While the initial automatic configuration support was originally targeted just for Linux and the FreeBSD variants, as of 4.5.0 it also includes Solaris, NetBSD and OpenBSD support. Full support for automatic configuration is planned for other platforms in future releases. If you are running Linux, FreeBSD, NetBSD, OpenBSD, or Solaris, try Auto Configuration by running:

XFree86 -autoconfig

If you want to customise some things afterwards, you can cut and paste the automatically generated configuration from the /var/log/XFree86.0.log file into an XF86Config file and make your customisations there. If you need to customise some parts of the configuration while leaving others to be automatically detected, you can combine a partial static configuration with the automatically detected one by running:

XFree86 -appendauto

If you are using a platform that is not currently supported, then you must try one of the older methods for getting started like "xf86cfg", which is our graphical configuration tool.

CEN Workshop Agreement: Final Draft for CWA/MES:1998

CEN WORKSHOP AGREEMENT
Final draft for CWA/MES:1998
1998-11-18
English version

Information technology – Multilingual European Subsets in ISO/IEC 10646-1
Technologies de l’information – Jeux partiels européens multilingues dans l’ISO/CEI 10646-1
Informationstechnologie – Mehrsprachige europäische Untermengen in ISO/IEC 10646-1

This CEN Workshop Agreement has been drafted and approved by a Workshop of representatives of interested parties, whose names and affiliations can be obtained from the CEN/ISSS Secretariat. The formal process followed by the Workshop in the development of this Workshop Agreement has been endorsed by the National Members of CEN, but neither the National Members of CEN nor the CEN Central Secretariat can be held accountable for the technical content of this CEN Workshop Agreement or for possible conflicts with standards or legislation. This CEN Workshop Agreement can in no way be held as being an official standard developed by CEN and its Members. This CEN Workshop Agreement is publicly available, as a reference document, from the CEN Members National Standard Bodies. CEN Members are the National Standards Bodies of Austria, Belgium, the Czech Republic, Denmark, Finland, France, Germany, Greece, Iceland, Ireland, Italy, Luxembourg, the Netherlands, Norway, Portugal, Spain, Sweden, Switzerland and the United Kingdom.

CEN
EUROPEAN COMMITTEE FOR STANDARDIZATION
COMITÉ EUROPÉEN DE NORMALISATION
EUROPÄISCHES KOMITEE FÜR NORMUNG
Central Secretariat: rue de Stassart 36, B-1050 Brussels
© CEN 1998 All rights of exploitation in any form and by any means reserved worldwide for CEN national Members
Ref.No. CWA/MES:1998 E

Contents: Foreword; Introduction; 1.

Arphic Font Library Catalog Contents

Arphic Font Library Catalog

Contents
Global Font
Traditional Chinese: Big-5 Character Set; Unicode 3.0 & 4.1 Character Set; HKSCS Character Set
Simplified Chinese: GB 2312 Character Set; GBK Character Set; GB 18030 Character Set
Japanese: JIS X 0208 Character Set; Adobe Japan 1-3 Character Set; ARIB Gaiji Font
Korean: KS X 1001 Character Set; KS X 1005 Character Set
Latin
Complex Scripts: Thai; Bengali / Assamese; Devanagari (Hindi / Marathi / Nepali); Sinhala; Tamil; Myanmar; Arabic / Farsi / Urdu; Lao / Hebrew / Khmer
Design Collection
Supported Languages / Scripts

Legend: TrueType; TrueType Collection; OpenType; CESI-certificated; Arphic Mobile Font®

* Ryobi Traditional Font Series (Initial with RA) is jointly developed by RYOBI, Ryobi Imagix, and Arphic Technology for digital type. Ryobi and Ryobi Imagix are responsible for overall editorial supervision, while Arphic is responsible for the development of Kanji characters. All rights to the Ryobi Traditional Font Series are reserved to TypeBank Co., Ltd.

Global Font
About Global Font
In this age of globalization, the utilization of the written word is no longer limited to a specific region or language. A product that transcends nationalities, languages, and regions is in demand. Since 2012, Arphic Technology has begun the integration process for regional language starting in the Greater China cultural circle with characters in Traditional Chinese, Simplified Chinese, Japanese, and Korean.

Fonts & Encodings

Fonts & Encodings
Yannis Haralambous

To cite this version: Yannis Haralambous. Fonts & Encodings. O’Reilly, 2007, 978-0-596-10242-5. hal-02112942
HAL Id: hal-02112942
https://hal.archives-ouvertes.fr/hal-02112942
Submitted on 27 Apr 2019

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Fonts & Encodings
Yannis Haralambous
Translated by P. Scott Horne
Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo

Fonts & Encodings by Yannis Haralambous
Copyright © 2007 O’Reilly Media, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (safari.oreilly.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected].
Printing History: September 2007: First Edition.
Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Fonts & Encodings, the image of an axis deer, and related trade dress are trademarks of O’Reilly Media, Inc.

Font Collection

z/OS Version 2 Release 3 Font Collection
IBM GA32-1048-30

Note: Before using this information and the product it supports, read the information in “Notices” on page 137.
This edition applies to Version 2 Release 3 of z/OS (5650-ZOS) and to all subsequent releases and modifications until otherwise indicated in new editions.
Last updated: 2019-02-16
© Copyright International Business Machines Corporation 2002, 2017. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents
List of Figures
List of Tables
About this publication
  Who needs to read this publication
  How this publication is organized
  Related information
How to send your comments to IBM
  If you have a technical problem

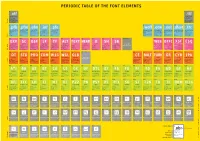

Periodic Table of the Font Elements

Periodic Table of the Font Elements (poster; only element numbers, symbols, and names are recoverable from the extracted layout)

Flavour: 1 .otf (OpenType Font File); 2 .ttf (TrueType Font File)

Files: 3 .pfb (PostScript Font Binary); 4 .pfm (PostScript Font Metric); 5 .afm (Adobe Font Metric); 6 .inf (Information); 7 .pfa (Printer Font ASCII); 8 .woff (Web Open Font Format); 9 .otw (OpenType Webfont); 10 .eot (Embedded OpenType); 11 .dfont (Data Fork Suitcase Format); 12 .ttc (TrueType Collection)

Typographic features: 13 Exp (Expert (Set)); 14 sc (Small Caps); 15 osF (Old Style Figures); 16 lF (Lining Figures); 17 tF (Tabular Figures); 18 alt (Alternates); 19 tExt (Text); 20 head (Headline); 21 d (Display); 22 sH (Supertype); 23 sB (Bodytype); 24–59 (see OpenType); 60 web (Webfont); 61 offc (Office); 62 xsF (Excellent Screen Font); 63 Esq (Enhanced Screen Font)

InfoPrint Font Collection: Font Summary

InfoPrint Font Collection
Font Summary
Version 3.7

Chapters: 1 Overview; 2 Font concepts; 3 AFP Fonts; 4 AFP Outline Fonts; 5 AFP Classic OpenType Fonts; 6 AFP Asian Classic OpenType Fonts; 7 WorldType Fonts; 8 AFP Raster Fonts; 9 Code pages and extended code pages

For information not in this manual, refer to the Help System in your product. Read this manual carefully and keep it handy for future reference.

TABLE OF CONTENTS
Introduction
  Important
  Cautions regarding this guide
  Publications for this product
  How to read the documentation
  Before using InfoPrint Font Collection
  Related publications
  Symbols
  Abbreviations

Electronic edition (2019)

Electronic edition (2021)

Filip Marić, Predrag Janičić
PROGRAMIRANJE 1 (Programming 1)
Fundamentals of programming through the C programming language
Belgrade, 2021

Authors: Dr. Filip Marić, associate professor at the Faculty of Mathematics in Belgrade; Dr. Predrag Janičić, full professor at the Faculty of Mathematics in Belgrade
Publisher: Faculty of Mathematics, University of Belgrade, Studentski trg 16, 11000 Belgrade
For the publisher: Prof. Dr. Zoran Rakić, dean
Reviewers: Dr. Gordana Pavlović-Lažetić, full professor at the Faculty of Mathematics in Belgrade; Dr. Miodrag Živković, full professor at the Faculty of Mathematics in Belgrade; Dr. Dragan Urošević, principal research fellow at the Mathematical Institute SANU
Text processing, drawings, and cover: the authors
ISBN 978-86-7589-100-0
© 2015 Filip Marić and Predrag Janičić

This work is licensed under Creative Commons CC BY-NC-ND 4.0 (Attribution-NonCommercial-NoDerivatives 4.0 International License). License details are available at http://creativecommons.org/licenses/by-nc-nd/4.0/. Reproduction, distribution, and public communication of the work are permitted, provided that the authors' names are credited. Commercial use of the work is not permitted. Adaptation, transformation, and use of the work as part of another work are not permitted.

Contents
I Basic concepts of computing and programming
1 Computing and computer systems
1.1 Early history of computer systems
1.2 Computers of the von Neumann architecture

RegexMagic Manual in PDF Format

Version 2.11.0, 20 May 2021
Published by Just Great Software Co. Ltd.
Copyright © 2009–2021 Jan Goyvaerts. All rights reserved.
“RegexMagic” and “Just Great Software” are trademarks of Jan Goyvaerts

Table of Contents
How to Use RegexMagic
  1. Introducing RegexMagic
  2. Getting Started with RegexMagic
Creating Regular Expressions with RegexMagic
  3. How to Add Sample Text
  4. How to Create Regular Expressions
  5. How to Create Capturing Groups and Replacement Text
  6. How to Use Your Regular Expressions
RegexMagic Examples