Synthesis Techniques for Semi-Custom Dynamically Reconfigurable Superscalar Processors

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

LatticeMico32 Development Kit User's Guide for LatticeECP

LatticeMico32 Development Kit User’s Guide for LatticeECP Lattice Semiconductor Corporation 5555 NE Moore Court Hillsboro, OR 97124 (503) 268-8000 May 2007 Copyright Copyright © 2007 Lattice Semiconductor Corporation. This document may not, in whole or part, be copied, photocopied, reproduced, translated, or reduced to any electronic medium or machine-readable form without prior written consent from Lattice Semiconductor Corporation. Trademarks Lattice Semiconductor Corporation, L Lattice Semiconductor Corporation (logo), L (stylized), L (design), Lattice (design), LSC, E2CMOS, Extreme Performance, FlashBAK, flexiFlash, flexiMAC, flexiPCS, FreedomChip, GAL, GDX, Generic Array Logic, HDL Explorer, IPexpress, ISP, ispATE, ispClock, ispDOWNLOAD, ispGAL, ispGDS, ispGDX, ispGDXV, ispGDX2, ispGENERATOR, ispJTAG, ispLEVER, ispLeverCORE, ispLSI, ispMACH, ispPAC, ispTRACY, ispTURBO, ispVIRTUAL MACHINE, ispVM, ispXP, ispXPGA, ispXPLD, LatticeEC, LatticeECP, LatticeECP-DSP, LatticeECP2, LatticeECP2M, LatticeMico8, LatticeMico32, LatticeSC, LatticeSCM, LatticeXP, LatticeXP2, MACH, MachXO, MACO, ORCA, PAC, PAC-Designer, PAL, Performance Analyst, PURESPEED, Reveal, Silicon Forest, Speedlocked, Speed Locking, SuperBIG, SuperCOOL, SuperFAST, SuperWIDE, sysCLOCK, sysCONFIG, sysDSP, sysHSI, sysI/O, sysMEM, The Simple Machine for Complex Design, TransFR, UltraMOS, and specific product designations are either registered trademarks or trademarks of Lattice Semiconductor Corporation or its subsidiaries in the United States and/or other countries. ISP, Bringing the Best Together, and More of the Best are service marks of Lattice Semiconductor Corporation. Other product names used in this publication are for identification purposes only and may be trademarks of their respective companies.
Disclaimers NO WARRANTIES: THE INFORMATION PROVIDED IN THIS DOCUMENT IS “AS IS” WITHOUT ANY EXPRESS OR IMPLIED WARRANTY OF ANY KIND INCLUDING WARRANTIES OF ACCURACY, COMPLETENESS, MERCHANTABILITY, NONINFRINGEMENT OF INTELLECTUAL PROPERTY, OR FITNESS FOR ANY PARTICULAR PURPOSE. -

LatticeMico32 Software Developer User Guide

LatticeMico32 Software Developer User Guide May 2014 Copyright Copyright © 2014 Lattice Semiconductor Corporation. This document may not, in whole or part, be copied, photocopied, reproduced, translated, or reduced to any electronic medium or machine-readable form without prior written consent from Lattice Semiconductor Corporation. Trademarks Lattice Semiconductor Corporation, L Lattice Semiconductor Corporation (logo), L (stylized), L (design), Lattice (design), LSC, CleanClock, Custom Mobile Device, DiePlus, E2CMOS, ECP5, Extreme Performance, FlashBAK, FlexiClock, flexiFLASH, flexiMAC, flexiPCS, FreedomChip, GAL, GDX, Generic Array Logic, HDL Explorer, iCE Dice, iCE40, iCE65, iCEblink, iCEcable, iCEchip, iCEcube, iCEcube2, iCEman, iCEprog, iCEsab, iCEsocket, IPexpress, ISP, ispATE, ispClock, ispDOWNLOAD, ispGAL, ispGDS, ispGDX, ispGDX2, ispGDXV, ispGENERATOR, ispJTAG, ispLEVER, ispLeverCORE, ispLSI, ispMACH, ispPAC, ispTRACY, ispTURBO, ispVIRTUAL MACHINE, ispVM, ispXP, ispXPGA, ispXPLD, Lattice Diamond, LatticeCORE, LatticeEC, LatticeECP, LatticeECP-DSP, LatticeECP2, LatticeECP2M, LatticeECP3, LatticeECP4, LatticeMico, LatticeMico8, LatticeMico32, LatticeSC, LatticeSCM, LatticeXP, LatticeXP2, MACH, MachXO, MachXO2, MachXO3, MACO, mobileFPGA, ORCA, PAC, PAC-Designer, PAL, Performance Analyst, Platform Manager, ProcessorPM, PURESPEED, Reveal, SensorExtender, SiliconBlue, Silicon Forest, Speedlocked, Speed Locking, SuperBIG, SuperCOOL, SuperFAST, SuperWIDE, sysCLOCK, sysCONFIG, sysDSP, sysHSI, sysI/O, sysMEM, The Simple Machine for Complex -

Technical Competencies and Professional Skills

TECHNICAL COMPETENCIES AND PROFESSIONAL SKILLS Applied Mathematics Technical Skills: data visualization and analysis, SQL, finance and accounting Programming Languages: R, MATLAB, C++, Java, Python Markup Languages: LaTeX, HTML, CSS Software: Microsoft Office (Excel, Office, Word), XCode, Visual Studio Note: Skills, software, and languages are dependent on elective taken, computer selection, and class year. Applied Sciences Instrumentation: UV/Vis spectroscopy, NMR spectroscopy, IR spectroscopy, GC-MS, LC-MS, thermocycler, nucleic acid sequencer, microplate reader, cryocooler Lab Techniques: Gel electrophoresis and SDS-PAGE, Western blotting, primer design and Polymerase Chain Reaction (PCR), QPCR, sterile lab technique, cell culture, nucleic acid isolation and purification, protein isolation and purification (chromatography, centrifugation, dialysis), enzyme thermodynamics and kinetics, transfection and transformation, chemical synthesis and purification (distillation, extraction, chromatography, rotary evaporator, crystallization), inert atmosphere and Schlenk line techniques, titration, dissection of preserved specimens, four-probe electrical measurement, Bragg diffraction Competencies: Application of the scientific method, Scientific writing and oral communication Software and computational skills: Microsoft Office (Word, Excel, Powerpoint), ChemDraw, PyMol, TopSpin, Java, Protein DataBank (PDB), NCBI suite (including BLAST, GenBank), Orca Quantum Chemistry software, LoggerPro, LabView Architecture Design Skills: Multi-scale civic -

Cray XD1™ Supercomputer Release 1.3

CRAY XD1 DATASHEET Cray XD1™ Supercomputer Release 1.3 The Cray XD1 supercomputer combines breakthrough interconnect, management and reconfigurable computing technologies to meet users’ demands for exceptional performance, reliability and usability. Designed to meet the requirements of highly demanding HPC applications in fields ranging from product design to weather prediction to scientific research, the Cray XD1 system is an indispensable tool for engineers and scientists to simulate and analyze faster, solve more complex problems, and bring solutions to market sooner. ■ Purpose-built for HPC: delivers exceptional application performance ■ Affordable power: designed for a broad range of HPC workloads and budgets ■ Linux, 32 and 64-bit x86 compatible: runs a wide variety of ISV applications and open source codes ■ Simplified system administration: automates configuration and management functions ■ Highly reliable: monitors and maintains system health ■ Scalable to hundreds of compute nodes: high bandwidth and low latency let applications scale Direct Connected Processor Architecture The Cray XD1 system is based on the Direct Connected Processor (DCP) architecture, harnessing many processors into a single, unified system to deliver new levels of application performance. Cray’s implementation of the DCP architecture optimizes message-passing applications by directly linking processors to each other through a high performance interconnect fabric, eliminating shared memory contention and PCI bus bottlenecks. Cray XD1 System Highlights Compute Processors: 12 AMD Opteron™ 64-bit single or dual core processors run Linux and are organized as six nodes of 2 or 4-way SMPs to deliver up to 106 GFLOPS* per chassis. Matching memory and I/O performance removes bottlenecks and maximizes processor performance. -

Merrimac – High-Performance and Highly-Efficient Scientific Computing with Streams

MERRIMAC – HIGH-PERFORMANCE AND HIGHLY-EFFICIENT SCIENTIFIC COMPUTING WITH STREAMS A DISSERTATION SUBMITTED TO THE DEPARTMENT OF ELECTRICAL ENGINEERING AND THE COMMITTEE ON GRADUATE STUDIES OF STANFORD UNIVERSITY IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY Mattan Erez May 2007 © Copyright by Mattan Erez 2007 All Rights Reserved I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. (William J. Dally) Principal Adviser I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. (Patrick M. Hanrahan) I certify that I have read this dissertation and that, in my opinion, it is fully adequate in scope and quality as a dissertation for the degree of Doctor of Philosophy. (Mendel Rosenblum) Approved for the University Committee on Graduate Studies. Abstract Advances in VLSI technology have made the raw ingredients for computation plentiful. Large numbers of fast functional units and large amounts of memory and bandwidth can be made efficient in terms of chip area, cost, and energy, however, high-performance computers realize only a small fraction of VLSI’s potential. This dissertation describes the Merrimac streaming supercomputer architecture and system. Merrimac has an integrated view of the applications, software system, compiler, and architecture. We will show how this approach leads to over an order of magnitude gains in performance per unit cost, unit power, and unit floor-space for scientific applications when compared to common scientific computers designed around clusters of commodity general-purpose processors. -

OpenPiton: An Open Source Manycore Research Framework

OpenPiton: An Open Source Manycore Research Framework Jonathan Balkind Michael McKeown Yaosheng Fu Tri Nguyen Yanqi Zhou Alexey Lavrov Mohammad Shahrad Adi Fuchs Samuel Payne ∗ Xiaohua Liang Matthew Matl David Wentzlaff Princeton University Abstract Industry is building larger, more complex, manycore processors on the back of strong institutional knowledge, but academic projects face difficulties in replicating that scale. To alleviate these difficulties and to develop and share knowledge, the community needs open architecture frameworks for simulation, synthesis, and software exploration which support extensibility, scalability, and configurability, alongside an established base of verification tools and supported software. In this paper we present OpenPiton, an open source framework for building scalable architecture research prototypes from 1 core to 500 million cores. OpenPiton is the world’s first open source, general-purpose, multithreaded manycore processor and framework. OpenPiton leverages the industry hardened OpenSPARC T1 core with modifications and builds upon it with a scratch-built, scalable uncore creating a flexible, modern manycore design. In addition, OpenPiton provides synthesis and backend scripts for ASIC and FPGA to enable other researchers to bring their designs to implementation. OpenPiton provides a complete verification infrastructure of over 8000 tests, is supported by mature software tools, runs full-stack multiuser Debian Linux, and is written in industry standard Verilog. Figure 1: OpenPiton Architecture. Multiple manycore chips are connected together with chipset logic and networks to build large scalable manycore systems. OpenPiton’s cache coherence protocol extends off chip. -

Method and Apparatus for Application-Specific Programmable ... (US 7,185,309 B1, Kulkarni et al.)

(12) United States Patent, Kulkarni et al. (10) Patent No.: US 7,185,309 B1 (45) Date of Patent: Feb. 27, 2007 (54) METHOD AND APPARATUS FOR APPLICATION-SPECIFIC PROGRAMMABLE ... (75) Inventors: Chidamber R. Kulkarni, San Jose, CA (US); Gordon J. Brebner, Monte Sereno, CA (US); Eric R. Keller, Boulder, CO (US); Philip B. James-Roxby, Longmont, CO (US) (73) Assignee: Xilinx, Inc., San Jose, CA (US) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 ... (21) Appl. No.: 10/769,591 (22) Filed: Jan. 30, 2004 (51) Int. Cl.: G06F 7/50 (2006.01) (52) U.S. Cl.: 716/18; 718/2 (58) Field of Classification Search: 716/18, 716/2, 3; 709/217; 719/313. See application file for complete search history. (56) References Cited, U.S. PATENT DOCUMENTS: 5,867,180 A * 2/1999 Katayama et al.; 2004/0006584 A1 1/2004 Vandeweerd; 2004/0128120 A1 7/2004 Coburn et al.; 2005/0034090 A1 2/2005 Sato et al.; 2005/0114593 A1 5/2005 Cassell et al.; 2005/0172085 A1, 2005/0172087 A1, 2005/0172088 A1, 2005/0172089 A1, 2005/0172090 A1, 2005/0172289 A1, 2005/0172290 A1 8/2005 Klingman. OTHER PUBLICATIONS: U.S. Appl. No. 10/769,330, filed Jan. 30, 2004, James-Roxby et al. (Continued) Primary Examiner: Thuan Do. Assistant Examiner: Binh Tat. (74) Attorney, Agent, or Firm: Robert Brush. (57) ABSTRACT Programmable architecture for implementing a message -

Standby Power Management Architecture for Deep-Submicron Systems

Standby Power Management Architecture for Deep-Submicron Systems Michael Alan Sheets Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2006-70 http://www.eecs.berkeley.edu/Pubs/TechRpts/2006/EECS-2006-70.html May 19, 2006 Copyright © 2006, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Standby Power Management Architecture for Deep-Submicron Systems by Michael Alan Sheets B.S.C.E. (Georgia Institute of Technology) 1999 M.S. (University of California, Berkeley) 2003 A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Engineering-Electrical Engineering and Computer Sciences in the GRADUATE DIVISION of the UNIVERSITY OF CALIFORNIA, BERKELEY Committee in charge: Professor Jan Rabaey, Chair Professor Robert Brodersen Professor Paul Wright Spring 2006 The dissertation of Michael Alan Sheets is approved: Chair Date Date Date University of California, Berkeley Spring 2006 Standby Power Management Architecture for Deep-Submicron Systems Copyright 2006 by Michael Alan Sheets Abstract Standby Power Management Architecture for Deep-Submicron Systems by Michael Alan Sheets Doctor of Philosophy in Engineering-Electrical Engineering and Computer Sciences University of California, Berkeley Professor Jan Rabaey, Chair In deep-submicron processes a significant portion of the power budget is lost in standby power due to increasing leakage effects. -

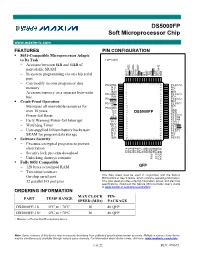

DS5000FP Soft Microprocessor Chip

DS5000FP Soft Microprocessor Chip www.maxim-ic.com FEATURES 8051-Compatible Microprocessor Adapts to Its Task − Accesses between 8kB and 64kB of nonvolatile SRAM − In-system programming via on-chip serial port − Can modify its own program or data memory − Accesses memory on a separate byte-wide bus Crash-Proof Operation − Maintains all nonvolatile resources for over 10 years − Power-fail Reset − Early Warning Power-fail Interrupt − Watchdog Timer − User-supplied lithium battery backs user SRAM for program/data storage PIN CONFIGURATION [Figure: DS5000FP pin diagram, top view] -

Pubtex Output 2006.05.15:1001

Cray XD1™ Release Description Private S–2453–14 © 2006 Cray Inc. All Rights Reserved. Unpublished Private Information. This unpublished work is protected to trade secret, copyright and other laws. Except as permitted by contract or express written permission of Cray Inc., no part of this work or its content may be used, reproduced or disclosed in any form. U.S. GOVERNMENT RESTRICTED RIGHTS NOTICE The Computer Software is delivered as "Commercial Computer Software" as defined in DFARS 48 CFR 252.227-7014. All Computer Software and Computer Software Documentation acquired by or for the U.S. Government is provided with Restricted Rights. Use, duplication or disclosure by the U.S. Government is subject to the restrictions described in FAR 48 CFR 52.227-14 or DFARS 48 CFR 252.227-7014, as applicable. Technical Data acquired by or for the U.S. Government, if any, is provided with Limited Rights. Use, duplication or disclosure by the U.S. Government is subject to the restrictions described in FAR 48 CFR 52.227-14 or DFARS 48 CFR 252.227-7013, as applicable. Autotasking, Cray, Cray Channels, Cray Y-MP, GigaRing, LibSci, UNICOS and UNICOS/mk are federally registered trademarks and Active Manager, CCI, CCMT, CF77, CF90, CFT, CFT2, CFT77, ConCurrent Maintenance Tools, COS, Cray Ada, Cray Animation Theater, Cray APP, Cray Apprentice2, Cray C++ Compiling System, Cray C90, Cray C90D, Cray CF90, Cray EL, Cray Fortran Compiler, Cray J90, Cray J90se, Cray J916, Cray J932, Cray MTA, Cray MTA-2, Cray MTX, Cray NQS, Cray Research, Cray SeaStar, Cray S-MP, -

FPGA Design Guide

FPGA Design Guide Lattice Semiconductor Corporation 5555 NE Moore Court Hillsboro, OR 97124 (503) 268-8000 September 16, 2008 Copyright Copyright © 2008 Lattice Semiconductor Corporation. This document may not, in whole or part, be copied, photocopied, reproduced, translated, or reduced to any electronic medium or machine-readable form without prior written consent from Lattice Semiconductor Corporation. Trademarks Lattice Semiconductor Corporation, L Lattice Semiconductor Corporation (logo), L (stylized), L (design), Lattice (design), LSC, E2CMOS, Extreme Performance, FlashBAK, flexiFlash, flexiMAC, flexiPCS, FreedomChip, GAL, GDX, Generic Array Logic, HDL Explorer, IPexpress, ISP, ispATE, ispClock, ispDOWNLOAD, ispGAL, ispGDS, ispGDX, ispGDXV, ispGDX2, ispGENERATOR, ispJTAG, ispLEVER, ispLeverCORE, ispLSI, ispMACH, ispPAC, ispTRACY, ispTURBO, ispVIRTUAL MACHINE, ispVM, ispXP, ispXPGA, ispXPLD, LatticeEC, LatticeECP, LatticeECP-DSP, LatticeECP2, LatticeECP2M, LatticeMico8, LatticeMico32, LatticeSC, LatticeSCM, LatticeXP, LatticeXP2, MACH, MachXO, MACO, ORCA, PAC, PAC-Designer, PAL, Performance Analyst, PURESPEED, Reveal, Silicon Forest, Speedlocked, Speed Locking, SuperBIG, SuperCOOL, SuperFAST, SuperWIDE, sysCLOCK, sysCONFIG, sysDSP, sysHSI, sysI/O, sysMEM, The Simple Machine for Complex Design, TransFR, UltraMOS, and specific product designations are either registered trademarks or trademarks of Lattice Semiconductor Corporation or its subsidiaries in the United States and/or other countries. ISP, Bringing the Best Together, and More -

ESC-470: ARM 9 Instruction Set Architecture with Performance

ARM 9 Instruction Set Architecture with Performance Perspective Joe-Ming Cheng, Ph.D. Introduction ARM-family processors are positioned among the leaders in key embedded applications. Many presentations and short lectures have already addressed the ARM’s applications and capabilities. In this introduction, we discuss what is unique about the ARM instruction set from a performance perspective. This introduction also tries to follow the approaches established by two outstanding textbooks by David Patterson and John Hennessy [PetHen00] [HenPet02]. 1.0 ARM Instruction Set Architecture Processor instruction set architecture (ISA) choices have evolved from accumulator, stack, and register-to-memory to register-register (load-store) organization. ARM 9 ISA is a load-store machine. ARM 9 ISA takes advantage of its smaller set of registers (16, versus 32 in many other processors) to incorporate more direct controls and achieve high encoding density. ARM’s load or store multiple register instruction, for example, allows enlisting of all possible registers and conditional execution in one instruction. The Thumb-mode instruction set is another example of how ARM ISA facilitates higher encoding density. Rather than compressing the code, Thumb-mode instructions are two 16-bit instructions packed in a 32-bit ARM-mode instruction space. The Thumb-mode instructions are a subset of ARM instructions. When executing in Thumb mode, a single 32-bit instruction fetch cycle effectively brings in two instructions. Thumb code reduces access bandwidth and code size, and improves instruction cache hit rate. Another way ARM achieves cycle time reduction is by using Harvard architecture. The architecture facilitates independent data and instruction buses.
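
The fetch arithmetic in the excerpt above (two 16-bit Thumb instructions delivered by one 32-bit fetch) can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical and do not come from the excerpt, the halfword at the lower address is assumed to sit in the low 16 bits of the fetched word (little-endian ordering), and the fetch model is idealized (aligned code, no branches).

```python
def split_fetch_word(word: int) -> tuple[int, int]:
    """Split one 32-bit fetched word into two 16-bit halfwords.

    Assumption (not from the excerpt): little-endian ordering, so the
    halfword at the lower address occupies the low 16 bits.
    """
    if not 0 <= word <= 0xFFFFFFFF:
        raise ValueError("word must fit in 32 bits")
    first = word & 0xFFFF            # halfword at the lower address
    second = (word >> 16) & 0xFFFF   # halfword at the next address
    return first, second


def fetches_needed(num_instructions: int, bits_per_instruction: int,
                   fetch_width: int = 32) -> int:
    """Count idealized fetch cycles for a straight-line instruction run."""
    per_fetch = fetch_width // bits_per_instruction
    if per_fetch < 1:
        raise ValueError("instruction is wider than the fetch width")
    # Ceiling division: a partially filled final fetch still costs a cycle.
    return -(-num_instructions // per_fetch)


if __name__ == "__main__":
    # Arbitrary bit patterns, not real instruction encodings.
    print([hex(h) for h in split_fetch_word(0xBBBBAAAA)])
    print(fetches_needed(10, 32), fetches_needed(10, 16))
```

Under these assumptions, ten 16-bit instructions need half as many 32-bit fetches as ten 32-bit instructions, which is the bandwidth effect the excerpt attributes to Thumb code.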