Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Recommended publications

Microprocessor

MICROPROCESSOR REPORT: The Insider’s Guide to Microprocessor Hardware (www.MPRonline.com). EEMBC’s MultiBench Arrives. CPU Benchmarks: Not Just For ‘Benchmarketing’ Any More. By Tom R. Halfhill {7/28/08-01}. Imagine a world without measurements or statistical comparisons. Baseball fans wouldn’t fail to notice that a .300 hitter is better than a .100 hitter. But would they welcome a trade that sends the .300 hitter to Cleveland for three .100 hitters? System designers and software developers face similar quandaries when making trade-offs with multicore processors. Even if a dual-core processor appears to be better than a single-core processor, how much better is it? Twice as good? Would a quad-core processor be four times better? Are more cores worth the additional cost, design complexity, power consumption, and programming difficulty? The Embedded Microprocessor Benchmark Consortium (EEMBC) wants to help answer those questions. EEMBC’s MultiBench 1.0 is a new benchmark suite for measuring the throughput of multiprocessor systems, including those built with multicore processors. EEMBC’s role has evolved over the years, and MultiBench is another step. Originally, EEMBC was conceived as an independent entity that would create benchmark suites and certify the scores for accuracy, allowing vendors and customers to make valid comparisons among embedded microprocessors. (See MPR 5/1/00-02, “EEMBC Releases First Benchmarks.”) EEMBC still serves that role. But, as it turns out, most EEMBC members don’t openly publish their scores. Instead, they disclose scores to prospective customers under an NDA or use the benchmarks for internal testing and analysis. Partly for this reason, MPR rarely cites EEMBC scores -

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation. ICPE ’19, April 7–11, 2019, Mumbai, India. Memory Centric Characterization and Analysis of SPEC CPU2017 Suite. Sarabjeet Singh and Manu Awasthi, Ashoka University. ABSTRACT: In this paper, we provide a comprehensive, memory-centric characterization of the SPEC CPU2017 benchmark suite, using a number of mechanisms including dynamic binary instrumentation, measurements on native hardware using hardware performance counters and operating system based tools. We present a number of results including working set sizes, memory capacity consumption and memory bandwidth utilization of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 workloads execute a significant number of memory related instructions, with approximately 50% of all dynamic instructions requiring memory accesses. We also show that there is a large variation in the memory footprint and bandwidth utilization [...] These benchmarks have become the standard for any researcher or commercial entity wishing to benchmark their architecture or for exploring new designs. The latest offering of SPEC CPU suite, SPEC CPU2017, was released in June 2017 [8]. SPEC CPU2017 retains a number of benchmarks from previous iterations but has also added many new ones to reflect the changing nature of applications. Some recent studies [21, 24] have already started characterizing the behavior of SPEC CPU2017 applications, looking for potential optimizations to system architectures. In recent years the memory hierarchy, from the caches, all the way to main memory, has become a first class citizen of computer system design. -

Load Testing, Benchmarking, and Application Performance Management for the Web

Published in the 2002 Computer Measurement Group (CMG) Conference, Reno, NV, Dec. 2002. Load Testing, Benchmarking, and Application Performance Management for the Web. Daniel A. Menascé, Department of Computer Science and E-center of E-Business, George Mason University, Fairfax, VA 22030-4444. Web-based applications are becoming mission-critical for many organizations and their performance has to be closely watched. This paper discusses three important activities in this context: load testing, benchmarking, and application performance management. Best practices for each of these activities are identified. The paper also explains how basic performance results can be used to increase the efficiency of load testing procedures. 1. Introduction. Web-based applications are becoming mission-critical to most private and governmental organizations. The ever-increasing number of computers connected to the Internet and the fast growing number of Web-enabled wireless devices create incentives for companies to invest in Web-based infrastructures and the associated personnel to maintain them. By establishing a Web presence, a [...] infrastructure depends on the traffic it expects to see at its site. One needs to spend enough but no more than is required in the IT infrastructure. Besides, resources need to be spent where they will generate the most benefit. For example, one should not upgrade the Web servers if most of the delay is in the database server. So, in order to maximize the ROI, one needs to know when and how to upgrade the IT infrastructure. In other words, not spending at the right time and spending at the wrong place will reduce the cost-benefit of the investment. -

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks. Kaivalya M. Dixit, IBM Corporation. “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard, MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 9.1 Historical Perspective. Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks. Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

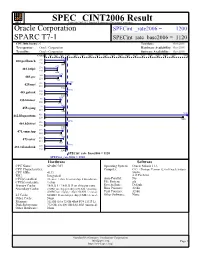

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result. Copyright 2006-2015 Standard Performance Evaluation Corporation. Oracle Corporation, SPARC T7-1. SPECint_rate2006 = 1200; SPECint_rate_base2006 = 1120. CPU2006 license: 6. Test date: Oct-2015. Test sponsor: Oracle Corporation. Tested by: Oracle Corporation. Hardware Availability: Oct-2015. Software Availability: Oct-2015.

| Benchmark | Copies (peak) | Copies (base) | Peak | Base |
|---|---|---|---|---|
| 400.perlbench | 192 | 224 | 1100 | 1040 |
| 401.bzip2 | 256 | 224 | 675 | 666 |
| 403.gcc | 160 | 224 | 875 | 720 |
| 429.mcf | 128 | 224 | 1380 | 1160 |
| 445.gobmk | 256 | 224 | 1190 | 1120 |
| 456.hmmer | 96 | 224 | 854 | 813 |
| 458.sjeng | 192 | 224 | 1020 | 988 |
| 462.libquantum | 416 | 224 | 7550 | 7330 |
| 464.h264ref | 256 | 224 | 1190 | 1150 |
| 471.omnetpp | 255 | 224 | 956 | 885 |
| 473.astar | 416 | 224 | 986 | 862 |
| 483.xalancbmk | 256 | 224 | 1180 | 1140 |

Hardware: CPU Name: SPARC M7; CPU Characteristics: (none listed); CPU MHz: 4133; FPU: Integrated; CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core; CPU(s) orderable: 1 chip; Primary Cache: 16 KB I + 16 KB D on chip per core; Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores), 4 MB D on chip per chip (256 KB / 2 cores); L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores); Other Cache: None; Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L); Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD.

Software: Operating System: Oracle Solaris 11.3; Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris Studio, 4/15 Patch Set; Auto Parallel: No; File System: zfs; System State: Default; Base Pointers: 32-bit; Peak Pointers: 32-bit; Other Software: None.
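SPEC's composite CPU2006 metrics are geometric means of the per-benchmark results, so the headline figure can be cross-checked against the individual rows. The sketch below does that for the base composite, assuming the final value listed for each benchmark in the SPARC T7-1 excerpt is its base result; `base_results`, `geometric_mean`, and `round_sig` are illustrative names, not part of any SPEC tool.

```python
from math import exp, floor, log, log10

# Base results copied from the SPARC T7-1 excerpt above
# (assumption: the final value listed for each benchmark is the base result).
base_results = {
    "400.perlbench": 1040,
    "401.bzip2": 666,
    "403.gcc": 720,
    "429.mcf": 1160,
    "445.gobmk": 1120,
    "456.hmmer": 813,
    "458.sjeng": 988,
    "462.libquantum": 7330,
    "464.h264ref": 1150,
    "471.omnetpp": 885,
    "473.astar": 862,
    "483.xalancbmk": 1140,
}


def geometric_mean(values):
    """Return the geometric mean of positive numbers, computed via logarithms."""
    values = list(values)
    return exp(sum(log(v) for v in values) / len(values))


def round_sig(x, digits=3):
    """Round x to the given number of significant digits."""
    return round(x, digits - 1 - floor(log10(abs(x))))


composite = geometric_mean(base_results.values())
print(f"geometric mean of base results: {composite:.1f}")
print(f"rounded to 3 significant digits: {round_sig(composite):.0f}")
```

The geometric mean comes out near 1118, which matches the reported SPECint_rate_base2006 of 1120 if the published figure is rounded to three significant digits. Note how little the outlier 462.libquantum (7330) moves the composite compared with what an arithmetic mean would give.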

Opportunities and Open Problems for Static and Dynamic Program Analysis Mark Harman∗, Peter O’Hearn∗ ∗Facebook London and University College London, UK

From Start-ups to Scale-ups: Opportunities and Open Problems for Static and Dynamic Program Analysis. Mark Harman∗, Peter O’Hearn∗ (∗Facebook London and University College London, UK). Abstract: This paper describes some of the challenges and opportunities when deploying static and dynamic analysis at scale, drawing on the authors’ experience with the Infer and Sapienz Technologies at Facebook, each of which started life as a research-led start-up that was subsequently deployed at scale, impacting billions of people worldwide. The paper identifies open problems that have yet to receive significant attention from the scientific community, yet which have potential for profound real world impact, formulating these as research questions that, we believe, are ripe for exploration and that would make excellent topics for research projects. I. INTRODUCTION. How do we transition research on static and dynamic analysis techniques from the testing and verification research communities to industrial practice? Many have asked this question, and others related to it. [...] research questions that target the most productive intersection we have yet witnessed: that between exciting, intellectually challenging science, and real-world deployment impact. Many industrialists have perhaps tended to regard it unlikely that much academic work will prove relevant to their most pressing industrial concerns. On the other hand, it is not uncommon for academic and scientific researchers to believe that most of the problems faced by industrialists are either boring, tedious or scientifically uninteresting. This sociological phenomenon has led to a great deal of miscommunication between the academic and industrial sectors. We hope that we can make a small contribution by focusing on the intersection of challenging and interesting scientific problems with pressing industrial deployment needs. Our aim is to move the debate beyond relatively unhelpful observations we have typically encountered in, for example, conference -

Poweredge R940 (Intel Xeon Gold 5122, 3.60 Ghz) Specint Rate Base2006 = 1080 CPU2006 License: 55 Test Date: Jun-2017 Test Sponsor: Dell Inc

SPEC CINT2006 Result. Copyright 2006-2017 Standard Performance Evaluation Corporation. Dell Inc., PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz). SPECint_rate2006 = 1150; SPECint_rate_base2006 = 1080. CPU2006 license: 55. Test date: Jun-2017. Test sponsor: Dell Inc. Tested by: Dell Inc. Hardware Availability: Jul-2017. Software Availability: Nov-2016.

| Benchmark | Copies | Peak | Base |
|---|---|---|---|
| 400.perlbench | 32 | 917 | 762 |
| 401.bzip2 | 32 | 521 | 487 |
| 403.gcc | 32 | 808 | 802 |
| 429.mcf | 32 | (single value listed) | 1480 |
| 445.gobmk | 32 | (single value listed) | 624 |
| 456.hmmer | 32 | 2010 | 1530 |
| 458.sjeng | 32 | 710 | 658 |
| 462.libquantum | 32 | (single value listed) | 17700 |
| 464.h264ref | 32 | 1170 | 1130 |
| 471.omnetpp | 32 | 554 | 509 |
| 473.astar | 32 | (single value listed) | 614 |
| 483.xalancbmk | 32 | (single value listed) | 1430 |

Hardware: CPU Name: Intel Xeon Gold 5122; CPU Characteristics: Intel Turbo Boost Technology up to 3.70 GHz; CPU MHz: 3600; FPU: Integrated; CPU(s) enabled: 16 cores, 4 chips, 4 cores/chip, 2 threads/core; CPU(s) orderable: 2,4 chip; Primary Cache: 32 KB I + 32 KB D on chip per core; Secondary Cache: 1 MB I+D on chip per core; L3 Cache: 16.5 MB I+D on chip per chip; Other Cache: None; Memory: 768 GB (48 x 16 GB 2Rx8 PC4-2666V-R); Disk Subsystem: 1 x 960 GB SATA SSD; Other Hardware: None.

Software: Operating System: SUSE Linux Enterprise Server 12 SP2, 4.4.21-69-default; Compiler: C/C++: Version 17.0.3.191 of Intel C/C++ Compiler for Linux; Auto Parallel: Yes; File System: xfs; System State: Run level 3 (multi-user); Base Pointers: 32-bit; Peak Pointers: 32/64-bit; Other Software: Microquill SmartHeap V10.2.

Evaluation of AMD EPYC

Evaluation of AMD EPYC. Chris Hollowell, HEPiX Fall 2018, PIC Spain.

- What is EPYC? EPYC is a new line of x86_64 server CPUs from AMD based on their Zen microarchitecture; same microarchitecture used in their Ryzen desktop processors; released June 2017. First new high performance series of server CPUs offered by AMD since 2012; last were Piledriver-based Opterons; Steamroller Opteron products cancelled. AMD had focused on low power server CPUs instead: x86_64 Jaguar APUs and ARM-based Opteron A CPUs. Many vendors are now offering EPYC-based servers, including Dell, HP and Supermicro.
- How Does EPYC Differ From Skylake-SP? Intel’s Skylake-SP Xeon x86_64 server CPU line also released in 2017. Both Skylake-SP and EPYC CPU dies manufactured using 14 nm process. Skylake-SP introduced AVX512 vector instruction support in Xeon; AVX512 not available in EPYC; HS06 official GCC compilation options exclude autovectorization; stock SL6/7 GCC doesn’t support AVX512 (support added in GCC 4.9+); not heavily used (yet) in HEP/NP offline computing. Both have models supporting 2666 MHz DDR4 memory: Skylake-SP has 6 memory channels per processor and 3 TB (2-socket system, extended memory models); EPYC has 8 memory channels per processor and 4 TB (2-socket system).
- How Does EPYC Differ From Skylake (Cont)? Some Skylake-SP processors include built in Omnipath networking, or FPGA coprocessors; not available in EPYC. Both Skylake-SP and EPYC have SMT (HT) support: 2 logical cores per physical core (absent in some Xeon Bronze models). Maximum core count (per socket): Skylake-SP 28 physical / 56 logical (Xeon Platinum 8180M); EPYC 32 physical / 64 logical (EPYC 7601). Maximum socket count: Skylake-SP 8 (Xeon Platinum); EPYC 2. Processor interconnect: Skylake-SP UltraPath Interconnect (UPI); EPYC Infinity Fabric (IF). PCIe lanes (2-socket system): Skylake-SP 96; EPYC 128 (some used by SoC functionality), same number available in single socket configuration.
- EPYC: MCM/SoC Design. EPYC utilizes an SoC design: many functions normally found in motherboard chipset on the CPU (SATA controllers, USB controllers, etc.) -

3 — Arithmetic for Computers 2 MIPS Arithmetic Logic Unit (ALU) Zero Ovf

Chapter 3: Arithmetic for Computers (Rechnerstrukturen 182.092 3 — Arithmetic for Computers). § 3.1 Introduction. Arithmetic for computers covers operations on integers (addition and subtraction, multiplication and division, dealing with overflow) and floating-point real numbers (representation and operations). MIPS Arithmetic Logic Unit (ALU): must support the arithmetic/logic operations of the ISA: add, addi, addiu, addu; sub, subu; mult, multu, div, divu; sqrt; and, andi, nor, or, ori, xor, xori; beq, bne, slt, slti, sltiu, sltu. With special handling for sign extend (addi, addiu, slti, sltiu), zero extend (andi, ori, xori), and overflow detection (add, addi, sub). [Figure: ALU block diagram with 32-bit inputs A and B, a 4-bit operation select m, a 32-bit result, and 1-bit zero and ovf outputs.] (Simplified) 1-bit MIPS ALU: AND, OR, ADD, SLT. Final 32-bit ALU. Performance issues: the critical path of an n-bit ripple-carry adder is n*CP. [Figure: four 1-bit ALUs chained so that each CarryOut feeds the next CarryIn, from CarryIn0 to CarryOut3.] Design trick: throw hardware at it (carry lookahead). Carry Lookahead Logic (4-bit adder): LS 283. § 3.2 Addition and Subtraction. Integer addition, example: 7 + 6. Overflow if result out of range. Adding +ve and –ve operands, no overflow. Adding two +ve operands -
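The ripple-carry structure and the overflow rule from the slides above can be sketched in a few lines of Python. This is an illustrative model rather than code from the slides: `full_adder`, `ripple_carry_add`, and `to_signed` are hypothetical names, and overflow is detected by comparing the carry into and out of the most significant bit, a standard technique that the excerpt itself does not spell out.

```python
def full_adder(a, b, carry_in):
    """Add three 1-bit values; return (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out


def ripple_carry_add(x, y, width=32):
    """Add x and y bit by bit, passing each carry on to the next stage.

    Returns (result_bits, carry_out, signed_overflow). The loop mirrors the
    chain of 1-bit adders, which is why the critical path grows with width.
    """
    carry = 0
    result = 0
    carry_into_msb = 0
    for i in range(width):
        if i == width - 1:
            carry_into_msb = carry  # carry entering the sign-bit stage
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    # Signed overflow occurs exactly when the carries into and out of the
    # most significant bit differ.
    overflow = bool(carry_into_msb ^ carry)
    return result, carry, overflow


def to_signed(value, width):
    """Interpret a width-bit pattern as a two's-complement integer."""
    if value & (1 << (width - 1)):
        return value - (1 << width)
    return value


if __name__ == "__main__":
    for width in (32, 4):
        bits, carry, overflow = ripple_carry_add(7, 6, width)
        print(width, format(bits, f"0{width}b"), to_signed(bits, width), overflow)
```

With a 4-bit width, 7 + 6 produces the bit pattern 1101, which reads as -3 in two's complement, so the overflow flag is set. With the default 32-bit width the same sum fits and no overflow is reported. Adding operands of opposite sign, such as -3 + 5, never sets the flag.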

IBM Power Systems Performance Report Apr 13, 2021

IBM Power Performance Report, Power7 to Power10. September 8, 2021. Table of Contents:

- Introduction to Performance of IBM UNIX, IBM i, and Linux Operating System Servers
- Section 1 – SPEC® CPU Benchmark Performance
  - Section 1a – Linux Multi-user SPEC® CPU2017 Performance (Power10)
  - Section 1b – Linux Multi-user SPEC® CPU2017 Performance (Power9)
  - Section 1c – AIX Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8)
  - Section 1d – Linux Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8)
- Section 2 – AIX Multi-user Performance (rPerf)
  - Section 2a – AIX Multi-user Performance (Power8, Power9 and Power10)
  - Section 2b – AIX Multi-user Performance (Power9) in Non-default Processor Power Mode Setting
  - Section 2c – AIX Multi-user Performance (Power7 and Power7+)
  - Section 2d – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power8)
  - Section 2e – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power7 and Power7+)
- Section 3 – CPW Benchmark Performance
  - Section 3a – CPW Benchmark Performance (Power8, Power9 and Power10)
  - Section 3b – CPW Benchmark Performance (Power7 and Power7+)
- Section 4 – SPECjbb®2015 Benchmark Performance
  - Section 4a – SPECjbb®2015 Benchmark Performance (Power9)
  - Section 4b – SPECjbb®2015 Benchmark Performance (Power8)
- Section 5 – AIX SAP® Standard Application Benchmark Performance
  - Section 5a – SAP® Sales and Distribution (SD) 2-Tier – AIX (Power7 to Power8)
  - Section 5b – SAP® Sales and Distribution (SD) 2-Tier – Linux on Power (Power7 to Power7+)

Asustek Computer Inc.: Asus P6T

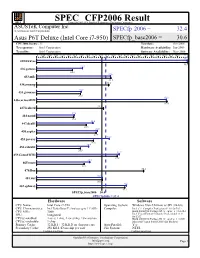

SPEC CFP2006 Result. Copyright 2006-2014 Standard Performance Evaluation Corporation. ASUSTeK Computer Inc. (Test Sponsor: Intel Corporation), Asus P6T Deluxe (Intel Core i7-950). SPECfp2006 = 32.4; SPECfp_base2006 = 30.6. CPU2006 license: 13. Test date: Oct-2008. Test sponsor: Intel Corporation. Tested by: Intel Corporation. Hardware Availability: Jun-2009. Software Availability: Nov-2008.

| Benchmark | Peak | Base |
|---|---|---|
| 410.bwaves | 70.7 | 70.8 |
| 416.gamess | 21.5 | 16.8 |
| 433.milc | 34.6 | 34.8 |
| 434.zeusmp | (single value listed) | 33.8 |
| 435.gromacs | 20.9 | 20.6 |
| 436.cactusADM | 60.8 | 61.0 |
| 437.leslie3d | (single value listed) | 31.3 |
| 444.namd | 17.6 | 17.4 |
| 447.dealII | 26.4 | 23.3 |
| 450.soplex | 28.5 | 27.9 |
| 453.povray | 34.0 | 26.6 |
| 454.calculix | 24.4 | 20.5 |
| 459.GemsFDTD | 38.7 | 37.8 |
| 465.tonto | 24.9 | 22.3 |
| 470.lbm | (single value listed) | 50.2 |
| 481.wrf | 30.9 | 30.9 |
| 482.sphinx3 | 42.1 | 41.3 |

Hardware: CPU Name: Intel Core i7-950; CPU Characteristics: Intel Turbo Boost Technology up to 3.33 GHz; CPU MHz: 3066; FPU: Integrated; CPU(s) enabled: 4 cores, 1 chip, 4 cores/chip, 2 threads/core; CPU(s) orderable: 1 chip; Primary Cache: 32 KB I + 32 KB D on chip per core; Secondary Cache: 256 KB I+D on chip per core. (Continued on next page.)

Software: Operating System: Windows Vista Ultimate w/ SP1 (64-bit); Compiler: Intel C++ Compiler Professional 11.0 for IA32, Build 20080930, Package ID: w_cproc_p_11.0.054; Intel Visual Fortran Compiler Professional 11.0 for IA32, Build 20080930, Package ID: w_cprof_p_11.0.054; Microsoft Visual Studio 2008 (for libraries); Auto Parallel: Yes; File System: NTFS. (Continued on next page.)

An Effective Dynamic Analysis for Detecting Generalized Deadlocks

An Effective Dynamic Analysis for Detecting Generalized Deadlocks. Pallavi Joshi (EECS, UC Berkeley, CA, USA), Mayur Naik (Intel Labs Berkeley, CA, USA), Koushik Sen (EECS, UC Berkeley, CA, USA), David Gay (Intel Labs Berkeley, CA, USA). ABSTRACT: We present an effective dynamic analysis for finding a broad class of deadlocks, including the well-studied lock-only deadlocks as well as the less-studied, but no less widespread or insidious, deadlocks involving condition variables. Our analysis consists of two stages. In the first stage, our analysis observes a multi-threaded program execution and generates a simple multi-threaded program, called a trace program, that only records operations observed during the execution that are deemed relevant to finding deadlocks. Such operations include lock acquire and release, wait and notify, thread start and join, and change of values of user-identified synchronization predicates associated with condition variables [...] for a synchronization event that will never happen. Deadlocks are a common problem in real-world multi-threaded programs. For instance, 6,500/198,000 (∼3%) of the bug reports in the bug database at http://bugs.sun.com for Sun's Java products involve the keyword "deadlock" [11]. Moreover, deadlocks often occur non-deterministically, under very specific thread schedules, making them harder to detect and reproduce using conventional testing approaches. Finally, extending existing multi-threaded programs or fixing other concurrency bugs like races often involves introducing new synchronization, which, in turn, can introduce new deadlocks. Therefore, deadlock detection tools are important for developing and testing multi-threaded programs.