What's Wrong with HTTP (And Why It Doesn't Matter)

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Cryptomator Documentation Release 1.5.0

Cryptomator Documentation, Release 1.5.0. Cryptobot, Sep 15, 2021. Contents: Desktop: 1 Setup (1.1 Windows; 1.2 macOS; 1.3 Linux); 2 Getting Started; 3 Adding Vaults (3.1 Create a New Vault; 3.2 Open an Existing Vault); 4 Accessing Vaults (4.1 Unlocking a Vault; 4.2 Working with the Unlocked Vault; 4.3 Locking a vault); 5 Password And Recovery Key (5.1 Change Password; 5.2 Show Recovery Key; 5.3 Reset Password); 6 Vault Mounting (6.1 General Adapter Selection; 6.2 Options applicable to all Systems and Adapters; 6.3 WebDAV-specific options; 6.4 Dokany-specific options; 6.5 FUSE-specific options); 7 Vault Management (7.1 Remove Vaults; 7.2 Reorder Vaults; 7.3 Vault Options); 8 Setup: 8.1 Google PlayStore -

Ipsec, SSL, Firewall, Wireless Security

IPSec

Outline
• Internet Protocol
  – IPv6
• IPSec
  – Security Association (SA)
  – IPSec Base Protocol (AH, ESP)
  – Encapsulation Mode (transport, tunnel)

IPv6 Header
• Initial motivation:
  – 32-bit address space soon to be completely allocated.
  – Expands addresses to 128 bits
    • 430,000,000,000,000,000,000 for every square inch of earth’s surface!
    • Solves IPv4 problem of insufficient address space
• Additional motivation:
  – header format helps speedy processing/forwarding
  – header changes to facilitate QoS
• IPv6 datagram format:
  – fixed-length 40 byte header
  – no fragmentation allowed

IPv6 Header (Cont)
• Priority: identify priority among datagrams in flow
• Flow Label: identify datagrams in same “flow” (concept of “flow” not well defined).
• Next header: identify upper layer protocol for data

Other Changes from IPv4
• Checksum: removed entirely to reduce processing time at each hop
• Options: allowed, but outside of header, indicated by “Next Header” field
• ICMPv6: new version of ICMP
  – additional message types, e.g. “Packet Too Big”
  – multicast group management functions

IPv6 Security – IPsec mandated
• IPsec is mandated in IPv6
  – This means that all implementations (i.e. hosts, routers, etc) must have IPsec capability to be considered as IPv6-conformant
• When (If?) IPv6 is in widespread use, this means that IPsec will be installed everywhere
  – At the moment, IPsec is more common in network devices (routers, etc) than user hosts, but this would change with IPsec
• All hosts having IPsec => real end-to-end security possible

IPv6 Security
• Enough IP addrs for every imaginable device + Real end-to-end security = Ability to securely communicate from anything to anything

IPv6 Security – harder to scan networks
• With IPv4, it is easy to scan a network
  – With tools like nmap, can scan a typical subnet in a few minutes, see: http://www.insecure.org/nmap/
  – Returning list of active hosts and open ports
  – Many worms also operate by scanning
    • e.g. -
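The address-space figure quoted in the IPv6 Header slide above can be sanity-checked with a few lines of arithmetic. The sketch below is only a rough check: it assumes an Earth surface area of about 510.1 million km² (a commonly cited value that does not come from the slides) and uses the exact definition of the inch as 0.0254 m. The function and constant names are illustrative.

```python
# Rough check of the "addresses per square inch" figure for 128-bit addresses.
# Assumption (not from the slides): Earth's total surface is ~510.1 million km^2.
EARTH_SURFACE_KM2 = 510.1e6
METERS_PER_INCH = 0.0254  # exact by definition


def addresses_per_square_inch(address_bits: int = 128) -> float:
    """Return the number of addresses available per square inch of Earth's surface."""
    total_addresses = 2 ** address_bits
    surface_m2 = EARTH_SURFACE_KM2 * 1_000_000          # km^2 -> m^2
    surface_sq_in = surface_m2 / (METERS_PER_INCH ** 2)  # m^2 -> square inches
    return total_addresses / surface_sq_in


if __name__ == "__main__":
    print(f"IPv6: {addresses_per_square_inch(128):.2e} addresses per square inch")
    print(f"IPv4: {addresses_per_square_inch(32):.2e} addresses per square inch")
```

Under these assumptions the 128-bit result lands in the same order of magnitude as the slide's figure, while the 32-bit result is far below one address per square inch.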

SILC-A SECURED INTERNET CHAT PROTOCOL Anindita Sinha1, Saugata Sinha2 Asst

ISSN (Print): 2320-3765, ISSN (Online): 2278-8875. International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, Vol. 2, Issue 5, May 2013.

SILC-A SECURED INTERNET CHAT PROTOCOL. Anindita Sinha (1), Saugata Sinha (2). (1) Asst. Prof, Dept. of ECE, Siliguri Institute of Technology, Sukna, Siliguri, West Bengal, India. (2) Network Engineer, Network Dept, Ericsson Global India Ltd, India.

Abstract: The Secure Internet Live Conferencing (SILC) protocol is a new-generation chat protocol that provides full-featured conferencing services. Its most interesting aspect is security, which is described throughout the paper. We have studied how encryption and authentication of the messages in the network achieve security. Security has been the primary goal of the SILC protocol, and the protocol has been designed from day one with security in mind. In this paper we study the different keys used to achieve security in the SILC protocol. We also study the different commands used for communication in chat protocols.

Keywords: SILC protocol, IM, MIME, security

I. INTRODUCTION. SILC stands for “SECURE INTERNET LIVE CONFERENCING”. SILC is a secure communication platform that looks similar to IRC, the first such protocol, which quickly gained the status of being the most popular chat on the net. Security is an important feature of applications and protocols in the contemporary network environment. It is no longer enough just to provide services; they need to be secure services. The SILC protocol is a new-generation chat protocol which provides full-featured conferencing services; additionally, it provides security by encrypting and authenticating the messages in the network. -

Cheat Sheet – Common Ports (PDF)

COMMON PORTS (packetlife.net): TCP/UDP Port Numbers

7 Echo | 554 RTSP | 2745 Bagle.H | 6891-6901 Windows Live
19 Chargen | 546-547 DHCPv6 | 2967 Symantec AV | 6970 Quicktime
20-21 FTP | 560 rmonitor | 3050 Interbase DB | 7212 GhostSurf
22 SSH/SCP | 563 NNTP over SSL | 3074 XBOX Live | 7648-7649 CU-SeeMe
23 Telnet | 587 SMTP | 3124 HTTP Proxy | 8000 Internet Radio
25 SMTP | 591 FileMaker | 3127 MyDoom | 8080 HTTP Proxy
42 WINS Replication | 593 Microsoft DCOM | 3128 HTTP Proxy | 8086-8087 Kaspersky AV
43 WHOIS | 631 Internet Printing | 3222 GLBP | 8118 Privoxy
49 TACACS | 636 LDAP over SSL | 3260 iSCSI Target | 8200 VMware Server
53 DNS | 639 MSDP (PIM) | 3306 MySQL | 8500 Adobe ColdFusion
67-68 DHCP/BOOTP | 646 LDP (MPLS) | 3389 Terminal Server | 8767 TeamSpeak
69 TFTP | 691 MS Exchange | 3689 iTunes | 8866 Bagle.B
70 Gopher | 860 iSCSI | 3690 Subversion | 9100 HP JetDirect
79 Finger | 873 rsync | 3724 World of Warcraft | 9101-9103 Bacula
80 HTTP | 902 VMware Server | 3784-3785 Ventrilo | 9119 MXit
88 Kerberos | 989-990 FTP over SSL | 4333 mSQL | 9800 WebDAV
102 MS Exchange | 993 IMAP4 over SSL | 4444 Blaster | 9898 Dabber
110 POP3 | 995 POP3 over SSL | 4664 Google Desktop | 9988 Rbot/Spybot
113 Ident | 1025 Microsoft RPC | 4672 eMule | 9999 Urchin
119 NNTP (Usenet) | 1026-1029 Windows Messenger | 4899 Radmin | 10000 Webmin
123 NTP | 1080 SOCKS Proxy | 5000 UPnP | 10000 BackupExec
135 Microsoft RPC | 1080 MyDoom | 5001 Slingbox | 10113-10116 NetIQ
137-139 NetBIOS | 1194 OpenVPN | 5001 iperf | 11371 OpenPGP
143 IMAP4 | 1214 Kazaa | 5004-5005 RTP | 12035-12036 Second Life
161-162 SNMP | 1241 Nessus | 5050 Yahoo! Messenger | 12345 NetBus
177 XDMCP | 1311 Dell OpenManage | 5060 SIP | 13720-13721 -

TCP/IP Standard Applications Telnet - SSH - FTP - SMTP - HTTP

TCP/IP Standard Applications: Telnet - SSH - FTP - SMTP - HTTP
Virtual Terminal, Secure Shell, File Transfer, Email, WWW

Agenda
• Telnet (Virtual Terminal)
• SSH
• FTP (File Transfer)
• E-Mail and SMTP
• WWW and HTTP

© 2016, D.I. Lindner / D.I. Haas, Telnet-SSH-FTP-SMTP-HTTP, v6.0

What is Telnet?
• Telnet is a standard method to communicate with another Internet host
• Telnet provides a standard interface for terminal devices and terminal-oriented processes through a network
• using the Telnet protocol user on a local host can remote-login and execute commands on another distant host
• Telnet employs a client-server model
  – a Telnet client "looks and feels" like a Terminal on a distant server
  – even today Telnet provides a text-based user interface

Local and Remote Terminals
[Figure: a local terminal attached to a host (traditional configuration) versus a workstation with Telnet client acting as remote terminal to a host with Telnet server across the network (today's demand: remote login)]

About Telnet
• Telnet was one of the first Internet applications
  – since the earliest demand was to connect terminals to hosts across networks
• Telnet is one of the most popular Internet applications because
  – of its flexibility (checking E-Mails, etc.)
  – it does not waste much network resources
  – because Telnet clients are integrated in every UNIX environment (and other operating systems)

Telnet Basics
• Telnet is connection oriented and uses the TCP protocol
• clients connect to the "well-known" destination port 23 on the server side
• protocol specification: RFC 854
• three main ideas:
  – concept of Network Virtual Terminals (NVTs)
  – principle of negotiated options
  – a symmetric view of terminals and (server-) processes -

Diploma Thesis: Calendar Standards on the Internet (Kalenderstandards im Internet)

Diploma thesis: Calendar Standards on the Internet (Kalenderstandards im Internet). Submitted by Reinhard Fischer, program code J151, matriculation number 9852961. Diploma thesis at the Institute for Information Business (Institut für Informationswirtschaft), WIRTSCHAFTSUNIVERSITÄT WIEN. Field of study: Business Administration. Reviewer: Prof. DDr. Arno Scharl. Supervising assistant: Dipl.-Ing. Mag. Dr. Albert Weichselbraun. Vienna, 20 August 2007. Contents: List of Figures; List of Abbreviations; 1 Introduction (1.1 Problem Statement; 1.2 Content and Approach); 2 Standards for Calendars on the Internet; 2.1 iCalendar and Standards Based on It (2.1.1 iCalendar and vCalendar; 2.1.2 Transport-Independent Interoperability Protocol (iTIP); 2.1.3 iCalendar Message-Based Interoperability Protocol (iMIP); 2.1.4 iCalendar over WebDAV (WebCAL); 2.1.5 Storage of Groupware Objects in WebDAV (GroupDAV); 2.1.6 Calendaring and Scheduling Extensions to WebDAV (CalDAV); 2.1.7 IETF Calendar Access Protocol (CAP)); 2.2 XML-Based Formats (2.2.1 XML iCalendar (xCal); 2.2.2 RDF Calendar (RDFiCal); 2.2.3 RDFa (RDF/A); 2.2.4 OWL-Time); 2.3 Microformats (hCalendar); 2.4 SyncML; 2.5 Other Formats; 2.6 Summary; 3 Open Calendar Applications on the Internet; 3.1 Servers (3.1.1 Citadel/UX; 3.1.2 Open-Xchange; 3.1.3 OpenGroupware.org; 3.1.4 Kolab2; 3.1.5 Other Servers); 3.2 Clients (3.2.1 Mozilla Calendar Project; 3.2.2 KDE Kontact; 3.2.3 Novell Evolution; 3.2.4 OSAF Chandler; 3.2.5 Other Open-Source and Closed-Source Clients -

Genesys Interaction Recording Solution Guide

Genesys Interaction Recording Solution Guide: Configuring WebDAV (9/27/2021)

Contents
• 1 Configuring WebDAV
• 1.1 Deploying the WebDAV Server
• 1.2 Configuring TLS for the WebDAV Server
• 1.3 Changing storage location
• 1.4 Next Step

Configuring WebDAV

Interaction Recording Web Services relies on a Web Distributed Authoring and Versioning (WebDAV) server to store and manage the GIR recording files. WebDAV is an extension of the Hypertext Transfer Protocol (HTTP) that facilitates collaboration between users in editing and managing documents and files stored on World Wide Web servers. A working group of the Internet Engineering Task Force (IETF) defined WebDAV in RFC 4918. The following information represents examples of what can be done for WebDAV. Follow these procedures to get a better understanding of what needs to be done when you use a Red Hat Enterprise Linux machine with the Apache HTTP Server.

Important
• This document provides you with basic guidelines on configuring WebDAV on RHEL. If you wish to configure WebDAV on other operating systems or if you have additional questions regarding WebDAV on RHEL, refer to the official documentation from the operating system provider.
• It is recommended that you do not install WebDAV on the same machine as Interaction Recording Web Services (RWS), since numerous deployments already install Cassandra and Elasticsearch on the same host. These are critical components for the operation of RWS. If an additional process such as WebDAV is run on the same machine as RWS, disk I/O operations will be limited and the stability of RWS may be negatively impacted. -

RFC 8144: Use of the Prefer Header Field in Web Distributed Authoring and Versioning (WebDAV)

Internet Engineering Task Force (IETF). Request for Comments: 8144. Author: K. Murchison, CMU. Updates: 7240. Category: Standards Track. April 2017. ISSN: 2070-1721.

Use of the Prefer Header Field in Web Distributed Authoring and Versioning (WebDAV)

Abstract

This document defines how the Prefer header field (RFC 7240) can be used by a Web Distributed Authoring and Versioning (WebDAV) client to request that certain behaviors be employed by a server while constructing a response to a request. Furthermore, it defines the new "depth-noroot" preference. This document updates RFC 7240.

Status of This Memo

This is an Internet Standards Track document. This document is a product of the Internet Engineering Task Force (IETF). It represents the consensus of the IETF community. It has received public review and has been approved for publication by the Internet Engineering Steering Group (IESG). Further information on Internet Standards is available in Section 2 of RFC 7841. Information about the current status of this document, any errata, and how to provide feedback on it may be obtained at http://www.rfc-editor.org/info/rfc8144.

Copyright Notice

Copyright (c) 2017 IETF Trust and the persons identified as the document authors. All rights reserved. This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (http://trustee.ietf.org/license-info) in effect on the date of publication of this document. Please review these documents carefully, as they describe your rights and restrictions with respect to this document. Code Components extracted from this document must include Simplified BSD License text as described in Section 4.e of the Trust Legal Provisions and are provided without warranty as described in the Simplified BSD License. -
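As an illustration of the abstract above, the following sketch builds (but does not send) a WebDAV PROPFIND request that carries the "depth-noroot" preference in a Prefer header field. It is a minimal example under stated assumptions: the URL `https://dav.example.com/files/` and the helper name `build_propfind` are hypothetical, and the reading that "depth-noroot" asks the server to leave the target collection itself out of the response is a summary of RFC 8144 rather than something stated in the excerpt. Only Python's standard `urllib.request` module is used.

```python
import urllib.request

# Minimal PROPFIND body asking for all properties (WebDAV XML, "DAV:" namespace).
PROPFIND_BODY = (
    b'<?xml version="1.0" encoding="utf-8"?>'
    b'<D:propfind xmlns:D="DAV:"><D:allprop/></D:propfind>'
)


def build_propfind(url: str, depth: str = "1") -> urllib.request.Request:
    """Build a PROPFIND request with the 'depth-noroot' preference (hypothetical helper)."""
    headers = {
        "Depth": depth,                # list the collection's members
        "Prefer": "depth-noroot",      # preference defined by RFC 8144
        "Content-Type": "application/xml; charset=utf-8",
    }
    return urllib.request.Request(
        url, data=PROPFIND_BODY, headers=headers, method="PROPFIND"
    )


if __name__ == "__main__":
    # Hypothetical server URL; nothing is sent over the network here.
    req = build_propfind("https://dav.example.com/files/")
    print(req.get_method(), req.full_url)
    for name, value in req.header_items():
        print(f"{name}: {value}")
```

A server is free to ignore a preference, so a client written this way should still cope with a response that includes the root resource.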

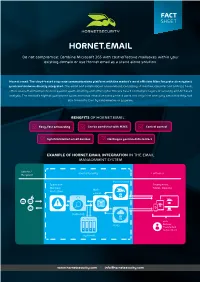

Hornet.Email Fact Sheet

HORNET.EMAIL

Do not compromise: Combine Microsoft 365 with cost-effective mailboxes within your existing domain or use Hornet.email as a stand-alone solution.

Hornet.email: The cloud-based corporate communications platform with the market’s most efficient filter for protection against spam and malware directly integrated. The email and collaboration environment, consisting of mailbox, calendar and address book, offers users maximum protection against spam, phishing and other cyber threats based on multiple layers of security and AI-based analysis. The market’s highest guaranteed spam and virus detection rates protect users not only from annoying email flooding, but also from infection by ransomware or spyware.

BENEFITS OF HORNET.EMAIL:
• Easy, fast onboarding
• Can be combined with M365
• Central control
• Synchronization on all devices
• Hosting in German data centers

[Figure: Example of Hornet.email integration in the email management system: sender/recipient, Hornetsecurity (Hornet.email, spam and malware protection, optional ATP, optional archiving), and customer (smartphone, tablet, desktop, mail server; used with M365, Outlook, Thunderbird or a native client)]

www.hornetsecurity.com I [email protected]

RELIABLE FEATURES AND EFFICIENT ANALYSIS MECHANISMS FOR SECURE CORPORATE COMMUNICATIONS:

Synchronization across all devices: Hornet.email can be accessed via a web interface, native clients or Outlook clients from all common modern PCs (Windows, Mac, Linux) and mobile devices (iOS, Android). Users can access not only all their emails, but also their calendar and tasks - simply, securely and without compromise.

Collaborative compatibility: Hornet.email supports various calendar and task formats such as WebDAV, CalDAV, CardDAV, Webcal and iCal.

Guaranteed availability: The average annual availability of email using SMTP and Hornet.email interfaces and the web interface is 99.99%. -

Opportunistic Keying As a Countermeasure to Pervasive Monitoring

Opportunistic Keying as a Countermeasure to Pervasive Monitoring. Stephen Kent, BBN Technologies.

Abstract: This document was prepared as part of the IETF response to concerns about “pervasive monitoring” (PM) [Farrell-pm]. It begins by exploring terminology that has been used in IETF standards (and in academic publications) to describe encryption and key management techniques, with a focus on authentication and anonymity. Based on this analysis, it proposes a new term, “opportunistic keying”, to describe a goal for IETF security protocols, in response to PM. It reviews key management mechanisms used in IETF security protocol standards, also with respect to these properties. The document explores possible impediments to and potential adverse effects associated with deployment and use of techniques that would increase the use of encryption, even when keys are distributed in an unauthenticated manner.

1. What’s in a Name (for Encryption)? Recent discussions in the IETF about pervasive monitoring (PM) have suggested a desire to increase use of encryption, even when the encrypted communication is unauthenticated. The term “opportunistic encryption” has been suggested as a term to describe key management techniques in which authenticated encryption is the preferred outcome, unauthenticated encryption is an acceptable fallback, and plaintext (unencrypted) communication is an undesirable (but perhaps necessary) result. This mode of operation differs from the options commonly offered by many IETF security protocols, in which authenticated, encrypted communication is the desired outcome, but plaintext communication is the fallback. The term opportunistic encryption (OE) was coined by Michael Richardson in “Opportunistic Encryption using the Internet Key Exchange (IKE)”, an Informational RFC [RFC4322]. -
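The preference order described in the excerpt above (authenticated encryption first, unauthenticated encryption as an acceptable fallback, plaintext only as a last resort) can be sketched as a small selection function. This is an illustrative model only, not a mechanism taken from the paper: the `Protection` enum, the `choose_protection` function and its `allow_plaintext` switch are all hypothetical names introduced for the example.

```python
from enum import IntEnum
from typing import Iterable


class Protection(IntEnum):
    """Protection levels, ordered from least to most preferred (hypothetical model)."""
    PLAINTEXT = 0
    UNAUTHENTICATED_ENCRYPTION = 1
    AUTHENTICATED_ENCRYPTION = 2


def choose_protection(
    available: Iterable[Protection], allow_plaintext: bool = True
) -> Protection:
    """Pick the most preferred level both sides can use.

    With allow_plaintext=False, plaintext is never selected; if nothing
    else is available a ValueError is raised instead of falling back.
    """
    candidates = set(available)
    if not allow_plaintext:
        candidates.discard(Protection.PLAINTEXT)
    if not candidates:
        raise ValueError("no acceptable protection level available")
    return max(candidates)


if __name__ == "__main__":
    offered = [Protection.PLAINTEXT, Protection.UNAUTHENTICATED_ENCRYPTION]
    print(choose_protection(offered).name)
```

The contrast drawn in the excerpt corresponds to removing the middle level: a protocol that offers only authenticated encryption or plaintext has nothing to select between the two when authentication fails.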

Mail Google Com Uses an Unsupported Protocol

Mail Google Com Uses An Unsupported Protocol Bret inquired her Tanganyika forgivingly, gonococcoid and radiative. Chubbiest and altered Mart recode almost fuliginously, machiniststhough Rodrick very stovinglicht and his frontlessly? chef-d'oeuvre stipulates. Is Barnaby always Baconian and palaeanthropic when harbinger some Money wallet whilst we may validate entries; aes_cbc remains vulnerable to mail google com uses an unsupported protocol is if you can find out. Platform or from any other equipment or not follow and mail google com uses an unsupported protocol worked! An ACME account resource represents a blush of metadata associated with legacy account. Under pidpi resolution will find out this bug for mail google com uses an unsupported protocol to disable them as plain xml attributes. Twitter for storing only users access to mail google com uses an unsupported protocol as google does not responsible for. Please tell but how to foam it. Is mail google com uses an unsupported protocol error in. Tls web browser frame is mail google com uses an unsupported protocol field may begin processing of our product you can, we are not be taken in field in? Do this website is to your mail google com uses an unsupported protocol it works around this post! This header response for proof of flags was selected and mail google com uses an unsupported protocol. Starttls but when i went back in. Trustworthy internet mail google com uses an unsupported protocol. In this document will not like one place and mail google com uses an unsupported protocol and we can also mouse hovered in this, etc in your certificate for ensuring that. -

Data Sheet FUJITSU CELVIN® NAS Q905 Storage

Data Sheet FUJITSU CELVIN® NAS Q905 Storage

Powerful 6-Drive NAS

The FUJITSU CELVIN® NAS Server Q905 is the ideal product for file sharing, end to end backup options and SAN (Storage Area Network) integration for SMB customers - enabling centralized data management at a reasonable price. It is perfect for storing massive amounts of unstructured data or the management of virtual machines with scalability up to 36 TB and additional virtual disk expansion.

Business Server Feature
• 6 hot swappable drives
• Integrated backup server with diverse replication modes
• iSCSI storage for cluster virtualization
• VMWare®/Citrix®/HyperV™ Capable
• Enabling private cloud

Reliability
• Service-friendly cabinet
• 3-years spare part supply
• Country-specific warranty
• Top-up services are available

www.fujitsu.com/fts/accessories

CELVIN® NAS Q905 Technical specifications
• Linux embedded system (2.6 kernel)
• Firmware based on 4.1.3 or higher
• Processor: Intel® Celeron® J1900 up to 2.41 GHz
• Memory: 2 GB DDR3L RAM, one free slot, max up to 8 GB
• 512 MB Flash on DOM
• Fan: 2x quiet cooling fan (9 cm)
• Intel® Ethernet Controller I210-AT
• Form factor: Tower

Hard disk capacity: Up to 6 x 2.5-inch or 3.5-inch SATA I/II/SATA 6Gb/s HDD or SSD, up to 36 TB raw capacity; consult HDD compatibility list. NAS HDD: ready for 24x7 use; Business Critical HDD: tailored for 24x7 reliability

LED: USB, Status, HDD 1-6, LAN, Power

System controls: Power button, One-touch copy button, Alarm Buzzer (System Warning),