Web Enabled Data Management with DPM &

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Cryptomator Documentation Release 1.5.0

Cryptomator Documentation, Release 1.5.0. Cryptobot, Sep 15, 2021.

Contents. Desktop: 1 Setup (1.1 Windows; 1.2 macOS; 1.3 Linux). 2 Getting Started. 3 Adding Vaults (3.1 Create a New Vault; 3.2 Open an Existing Vault). 4 Accessing Vaults (4.1 Unlocking a Vault; 4.2 Working with the Unlocked Vault; 4.3 Locking a vault). 5 Password And Recovery Key (5.1 Change Password; 5.2 Show Recovery Key; 5.3 Reset Password). 6 Vault Mounting (6.1 General Adapter Selection; 6.2 Options applicable to all Systems and Adapters; 6.3 WebDAV-specific options; 6.4 Dokany-specific options; 6.5 FUSE-specific options). 7 Vault Management (7.1 Remove Vaults; 7.2 Reorder Vaults; 7.3 Vault Options). 8 Setup (8.1 Google PlayStore ...

Cheat Sheet – Common Ports (PDF)

COMMON PORTS (packetlife.net): TCP/UDP Port Numbers

| Port | Service | Port | Service | Port | Service | Port | Service |
|---|---|---|---|---|---|---|---|
| 7 | Echo | 554 | RTSP | 2745 | Bagle.H | 6891-6901 | Windows Live |
| 19 | Chargen | 546-547 | DHCPv6 | 2967 | Symantec AV | 6970 | Quicktime |
| 20-21 | FTP | 560 | rmonitor | 3050 | Interbase DB | 7212 | GhostSurf |
| 22 | SSH/SCP | 563 | NNTP over SSL | 3074 | XBOX Live | 7648-7649 | CU-SeeMe |
| 23 | Telnet | 587 | SMTP | 3124 | HTTP Proxy | 8000 | Internet Radio |
| 25 | SMTP | 591 | FileMaker | 3127 | MyDoom | 8080 | HTTP Proxy |
| 42 | WINS Replication | 593 | Microsoft DCOM | 3128 | HTTP Proxy | 8086-8087 | Kaspersky AV |
| 43 | WHOIS | 631 | Internet Printing | 3222 | GLBP | 8118 | Privoxy |
| 49 | TACACS | 636 | LDAP over SSL | 3260 | iSCSI Target | 8200 | VMware Server |
| 53 | DNS | 639 | MSDP (PIM) | 3306 | MySQL | 8500 | Adobe ColdFusion |
| 67-68 | DHCP/BOOTP | 646 | LDP (MPLS) | 3389 | Terminal Server | 8767 | TeamSpeak |
| 69 | TFTP | 691 | MS Exchange | 3689 | iTunes | 8866 | Bagle.B |
| 70 | Gopher | 860 | iSCSI | 3690 | Subversion | 9100 | HP JetDirect |
| 79 | Finger | 873 | rsync | 3724 | World of Warcraft | 9101-9103 | Bacula |
| 80 | HTTP | 902 | VMware Server | 3784-3785 | Ventrilo | 9119 | MXit |
| 88 | Kerberos | 989-990 | FTP over SSL | 4333 | mSQL | 9800 | WebDAV |
| 102 | MS Exchange | 993 | IMAP4 over SSL | 4444 | Blaster | 9898 | Dabber |
| 110 | POP3 | 995 | POP3 over SSL | 4664 | Google Desktop | 9988 | Rbot/Spybot |
| 113 | Ident | 1025 | Microsoft RPC | 4672 | eMule | 9999 | Urchin |
| 119 | NNTP (Usenet) | 1026-1029 | Windows Messenger | 4899 | Radmin | 10000 | Webmin |
| 123 | NTP | 1080 | SOCKS Proxy | 5000 | UPnP | 10000 | BackupExec |
| 135 | Microsoft RPC | 1080 | MyDoom | 5001 | Slingbox | 10113-10116 | NetIQ |
| 137-139 | NetBIOS | 1194 | OpenVPN | 5001 | iperf | 11371 | OpenPGP |
| 143 | IMAP4 | 1214 | Kazaa | 5004-5005 | RTP | 12035-12036 | Second Life |
| 161-162 | SNMP | 1241 | Nessus | 5050 | Yahoo! Messenger | 12345 | NetBus |
| 177 | XDMCP | 1311 | Dell OpenManage | 5060 | SIP | 13720-13721 | ... |

Diplomarbeit Kalenderstandards im Internet (Diploma Thesis: Calendar Standards on the Internet)

Diploma thesis "Kalenderstandards im Internet". Submitted by Reinhard Fischer, degree programme code J151, student ID 9852961. Diploma thesis at the Institut für Informationswirtschaft (Institute for Information Business), WIRTSCHAFTSUNIVERSITÄT WIEN. Field of study: Business Administration. Reviewer: Prof. DDr. Arno Scharl. Supervising assistant: Dipl.-Ing. Mag. Dr. Albert Weichselbraun. Vienna, 20 August 2007.

Contents. List of Figures. List of Abbreviations. 1 Introduction (1.1 Problem Statement; 1.2 Content and Approach). 2 Standards for Calendars on the Internet: 2.1 iCalendar and Standards Based on It (2.1.1 iCalendar and vCalendar; 2.1.2 Transport-Independent Interoperability Protocol (iTIP); 2.1.3 iCalendar Message-Based Interoperability Protocol (iMIP); 2.1.4 iCalendar over WebDAV (WebCAL); 2.1.5 Storage of Groupware Objects in WebDAV (GroupDAV); 2.1.6 Calendaring and Scheduling Extensions to WebDAV (CalDAV); 2.1.7 IETF Calendar Access Protocol (CAP)); 2.2 XML-Based Formats (2.2.1 XML iCalendar (xCal); 2.2.2 RDF Calendar (RDFiCal); 2.2.3 RDFa (RDF/A); 2.2.4 OWL-Time); 2.3 Microformats (hCalendar); 2.4 SyncML; 2.5 Other Formats; 2.6 Summary. 3 Open Calendar Applications on the Internet: 3.1 Servers (3.1.1 Citadel/UX; 3.1.2 Open-Xchange; 3.1.3 OpenGroupware.org; 3.1.4 Kolab2; 3.1.5 Other Servers); 3.2 Clients (3.2.1 Mozilla Calendar Project; 3.2.2 KDE Kontact; 3.2.3 Novell Evolution; 3.2.4 OSAF Chandler; 3.2.5 Other Open-Source and Closed-Source Clients ...

Genesys Interaction Recording Solution Guide

Genesys Interaction Recording Solution Guide: Configuring WebDAV (9/27/2021)

Contents: 1 Configuring WebDAV; 1.1 Deploying the WebDAV Server; 1.2 Configuring TLS for the WebDAV Server; 1.3 Changing storage location; 1.4 Next Step

Configuring WebDAV

Interaction Recording Web Services relies on a Web Distributed Authoring and Versioning (WebDAV) server to store and manage the GIR recording files. WebDAV is an extension of the Hypertext Transfer Protocol (HTTP) that facilitates collaboration between users in editing and managing documents and files stored on World Wide Web servers. A working group of the Internet Engineering Task Force (IETF) defined WebDAV in RFC 4918. The following procedures are examples of how WebDAV can be set up on a Red Hat Enterprise Linux (RHEL) machine running the Apache HTTP Server.

Important

• This document provides you with basic guidelines on configuring WebDAV on RHEL. If you wish to configure WebDAV on other operating systems or if you have additional questions regarding WebDAV on RHEL, refer to the official documentation from the operating system provider.

• It is recommended that you do not install WebDAV on the same machine as Interaction Recording Web Services (RWS), since numerous deployments already install Cassandra and Elasticsearch on the same host. These are critical components for the operation of RWS. If an additional process such as WebDAV is run on the same machine as RWS, disk I/O operations will be limited and the stability of RWS may be negatively impacted.
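Because WebDAV is described here as an extension of HTTP, a quick way to see that relationship is to ask a server which WebDAV compliance classes it advertises. The sketch below is only an illustration and is not taken from the Genesys guide: it assumes the server answers an `OPTIONS` request with a `DAV` response header (as RFC 4918 describes), and the function names `parse_dav_header` and `supports_webdav` are hypothetical.

```python
import http.client
from urllib.parse import urlsplit


def parse_dav_header(value):
    """Split a DAV response header such as '1, 2' into its tokens."""
    if not value:
        return []
    return [token.strip() for token in value.split(",") if token.strip()]


def supports_webdav(url, timeout=10.0):
    """Send OPTIONS to `url`; return True if the reply carries a DAV header.

    Assumption: the server exposes WebDAV at this URL without requiring
    authentication for OPTIONS. Real deployments may differ.
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        conn.request("OPTIONS", parts.path or "/")
        response = conn.getresponse()
        response.read()  # drain the body so the connection closes cleanly
        return bool(parse_dav_header(response.getheader("DAV")))
    finally:
        conn.close()
```

A call such as `supports_webdav("https://webdav.example.com/recordings/")` (a placeholder host) would then report whether that endpoint identifies itself as WebDAV-capable.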

RFC 8144: Use of the Prefer Header Field in Web Distributed Authoring and Versioning (WebDAV)

Internet Engineering Task Force (IETF). Request for Comments: 8144. Author: K. Murchison (CMU). Updates: 7240. Category: Standards Track. April 2017. ISSN: 2070-1721.

Abstract: This document defines how the Prefer header field (RFC 7240) can be used by a Web Distributed Authoring and Versioning (WebDAV) client to request that certain behaviors be employed by a server while constructing a response to a request. Furthermore, it defines the new "depth-noroot" preference. This document updates RFC 7240.

Status of This Memo: This is an Internet Standards Track document. This document is a product of the Internet Engineering Task Force (IETF). It represents the consensus of the IETF community. It has received public review and has been approved for publication by the Internet Engineering Steering Group (IESG). Further information on Internet Standards is available in Section 2 of RFC 7841. Information about the current status of this document, any errata, and how to provide feedback on it may be obtained at http://www.rfc-editor.org/info/rfc8144.

Copyright Notice: Copyright (c) 2017 IETF Trust and the persons identified as the document authors. All rights reserved. This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (http://trustee.ietf.org/license-info) in effect on the date of publication of this document. Please review these documents carefully, as they describe your rights and restrictions with respect to this document. Code Components extracted from this document must include Simplified BSD License text as described in Section 4.e of the Trust Legal Provisions and are provided without warranty as described in the Simplified BSD License.
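To make the abstract more concrete, the following sketch builds the headers and body a WebDAV client might send with a `PROPFIND` request that uses the Prefer header field. It is an illustration under stated assumptions, not text from the RFC: the `depth-noroot` preference is the one named in the abstract, `return=minimal` is a preference defined in RFC 7240, and the helper names `build_propfind_body` and `build_propfind_request` are hypothetical. A server is free to ignore any preference.

```python
import xml.etree.ElementTree as ET

DAV_NS = "DAV:"  # XML namespace used by WebDAV elements


def build_propfind_body(prop_names):
    """Return a PROPFIND request body (bytes) asking for the given properties."""
    ET.register_namespace("D", DAV_NS)
    root = ET.Element(f"{{{DAV_NS}}}propfind")
    prop = ET.SubElement(root, f"{{{DAV_NS}}}prop")
    for name in prop_names:
        ET.SubElement(prop, f"{{{DAV_NS}}}{name}")
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


def build_propfind_request(prop_names=("displayname",), depth="1",
                           minimal=True, skip_root=True):
    """Build (headers, body) for a PROPFIND request that states preferences.

    minimal   -> adds "return=minimal" (RFC 7240)
    skip_root -> adds "depth-noroot" (the preference RFC 8144 introduces)
    """
    preferences = []
    if minimal:
        preferences.append("return=minimal")
    if skip_root:
        preferences.append("depth-noroot")

    headers = {
        "Depth": depth,
        "Content-Type": "application/xml; charset=utf-8",
    }
    if preferences:
        # Several preferences may share one comma-separated Prefer header.
        headers["Prefer"] = ", ".join(preferences)
    return headers, build_propfind_body(prop_names)
```

The returned pair can be handed to any HTTP client that allows custom methods, for example `http.client.HTTPConnection.request("PROPFIND", path, body, headers)`.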

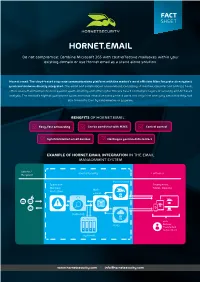

Hornet.Email Fact Sheet

HORNET.EMAIL

Do not compromise: Combine Microsoft 365 with cost-effective mailboxes within your existing domain or use Hornet.email as a stand-alone solution.

Hornet.email: The cloud-based corporate communications platform with the market’s most efficient filter for protection against spam and malware directly integrated. The email and collaboration environment, consisting of mailbox, calendar and address book, offers users maximum protection against spam, phishing and other cyber threats based on multiple layers of security and AI-based analysis. The market’s highest guaranteed spam and virus detection rates protect users not only from annoying email flooding, but also from infection by ransomware or spyware.

BENEFITS OF HORNET.EMAIL:

• Easy, fast onboarding
• Can be combined with M365
• Central control
• Synchronization on all devices
• Hosting in German data centers

[Figure: Example of Hornet.email integration in the email management system. Labels: Sender; Hornetsecurity Spam and Malware Protection; ATP (optional); Archiving (optional); Customer mail server; Hornet.email; Recipient on smartphone, tablet or desktop with M365, Outlook, Thunderbird or native client.]

www.hornetsecurity.com

RELIABLE FEATURES AND EFFICIENT ANALYSIS MECHANISMS FOR SECURE CORPORATE COMMUNICATIONS:

Synchronization across all devices: Hornet.email can be accessed via a web interface, native clients or Outlook clients from all common modern PCs (Windows, Mac, Linux) and mobile devices (iOS, Android). Users can access not only all their emails, but also their calendar and tasks, simply, securely and without compromise.

Collaborative compatibility: Hornet.email supports various calendar and task formats such as WebDAV, CalDAV, CardDAV, Webcal and ical.

Guaranteed availability: The average annual availability of email using SMTP and Hornet.email interfaces and the web interface is 99.99%.

Data Sheet FUJITSU CELVIN® NAS Q905 Storage

Data Sheet FUJITSU CELVIN® NAS Q905 Storage (www.fujitsu.com/fts/accessories)

Powerful 6-Drive NAS

The FUJITSU CELVIN® NAS Server Q905 is the ideal product for file sharing, end to end backup options and SAN (Storage Area Network) integration for SMB customers, enabling centralized data management at a reasonable price. It is perfect for storing massive amounts of unstructured data or the management of virtual machines with scalability up to 36 TB and additional virtual disk expansion.

Business Server Feature: 6 hot swappable drives; integrated backup server with diverse replication modes; iSCSI storage for cluster virtualization; VMWare®/Citrix®/HyperV™ capable; enabling private cloud.

Reliability: Service-friendly cabinet; 3-years spare part supply; country-specific warranty; top-up services are available.

CELVIN® NAS Q905 Technical specifications

System: Linux embedded system (2.6 kernel); firmware based on 4.1.3 or higher.
Processor: Intel® Celeron® J1900, up to 2.41 GHz.
Memory: 2 GB DDR3L RAM, one free slot, max up to 8 GB; 512 MB Flash on DOM.
Fan: 2x quiet cooling fan (9 cm).
Network: Intel® Ethernet Controller I210-AT.
Form factor: Tower.
Hard disk capacity: Up to 6 x 2.5-inch or 3.5-inch SATA I/II/SATA 6Gb/s HDD or SSD, up to 36 TB raw capacity; consult HDD compatibility list. NAS HDD: ready for 24x7 use; Business Critical HDD: tailored for 24x7 reliability.
LED: USB, Status, HDD 1-6, LAN, Power.
System controls: Power button, One-touch copy button, Alarm Buzzer (System Warning), ...

ASUSTOR AS7110T Datasheet

Full Xeon Speed Ahead! AS7110T

At a glance: 1x 10 Gigabit Ethernet; 3x 2.5 Gigabit Ethernet; 8GB ECC DDR4; Intel Xeon Coffee Lake; 3x USB 3.2; 2x M.2 Slot.

• Intel Xeon 9th Generation Quad-Core Processor
• Server-grade ECC memory: 8GB ECC DDR4-2666 (Max 32GB, 16GB x2)
• Dual M.2 NVMe SSD ports for fast caching
• Complete iSCSI/IP-SAN and NFS support. VMware, Citrix and Hyper-V ready.
• Supports Virtualization and Docker apps
• Brings the smoothest work experience with 10-Gigabit Ethernet and triple 2.5-Gigabit ports and USB 3.2 Gen 2 ports.
• Supports Wake on LAN (2.5-Gigabit ports)

Performance King. Boundless Functionality. Intuitive UI. Incredible Value. 5-year Warranty.

10-Gigabit and Triple 2.5-Gigabit ports. Extreme speeds

The all new Lockerstor 10 Pro NAS features a powerful Aquantia 10-Gigabit Ethernet port as well as triple Aquantia 2.5-Gigabit Ethernet ports. With a managed switch that supports multiple speeds, take advantage of up to 10 Gbps of speed on the 10-Gigabit port or up to 7.5 Gbps on all 2.5-Gigabit ports.

Performance (MB/s): 2.5 Gigabit x3: read 848, write 847. Single 10GbE LAN: read 1,181, write 1,178.

*M.2 SSD Caching internal performance testing. *1x Cat 6a and 3x Cat 5e cables included. *Single client read/write performance can reach the 10GbE full speed through the single Intel 10GbE port.

Data Protection Starts with Storage

ASUSTOR understands that your data is priceless, therefore ASUSTOR NAS provides different RAID Volume options, giving your data various levels of protection from the moment it is first stored on the NAS.

Webdav and BB.Pdf

WebDav and BB-CE6

WebDav allows you to use a remote Web server as though it were a file server. Here's how you get set up:

1. Go to one of your BB-CE6 courses.
2. Click on the Build tab, then on File Manager in the Designer Tools menu.
3. Look for the Action Menu next to the File Manager heading, then click on it; this will display a View WebDav Info button.
4. Click on the View WebDav Info button and look for the URL or folder path to pop up.
5. Copy this URL (you will need it to configure your network places on your desktop).
6. Once you've copied the URL, go to the Start Menu in Windows and look for your My Network Places option.
7. Select My Network Places and then select Add a Network Place (select "Choose Another Network Location").
8. In the Internet or Network address field, paste the WebDav URL you copied from the Info popup.
9. Click Next and enter your BB-CE6 username and password in the login form that appears.
10. After you click Finish you may need to authenticate again, but then you should see your files on the BB-CE6 server.

While you can work with your course directly via WebDav, it is a best practice to copy the content to a folder that allows you to work "offline." When you're ready to publish those changes to your course, just drag and drop the revised file from the "offline" folder into the WebDav directory.

KKirkland 05/12

Multi-National Information Sharing Cross Domain Collaborative

UNCLASSIFIED

Multi-National Information Sharing Cross Domain Collaborative Information Environment (CDCIE) Solution

United States Joint Forces Command, Joint Futures Lab. Mr. Boyd Fletcher, Mr. Dana Hare. 12 April 2005, Revision 4.

ABSTRACT

In February 2004, the Joint Forces Command (JFCOM) began its efforts to provide a near term Multinational Information Sharing (MNIS) solution to support Warfighters operating in a coalition environment. The JFCOM Joint Futures Laboratory (JFL) solution to MNIS is the Cross Domain Collaborative Information Environment (CDCIE). The CDCIE is a suite of standards-based and largely open source code applications that provide the capability to collaborate, share and manage documents, and use web portals, from one classification domain to another. The applications will also work within a single classification domain. While the CDCIE is designed to run in today’s environment, it is also a building block for the future information infrastructure: the CDCIE is adhering to the same basic standards that are guiding Net-Centric Enterprise Services development. Currently portal, document management, and cross domain text chat capabilities have been developed for the CDCIE solution. The cross domain text chat capability will be submitted for Certification and Accreditation (C&A) this year. CDCIE development will continue to be expanded to include audio chat, application casting and whiteboarding. As the CDCIE is enhanced, the suite will return to C&A. The CDCIE project has been designed to function at the operational command and control level.

INTRODUCTION

In the future, United States military operations will include coalition partners.

Secure Public Instant Messaging

Secure Public Instant Messaging. By Mohammad Abdul Mannan. A thesis submitted to the Faculty of Graduate Studies and Research in partial fulfilment of the requirements for the degree of Master of Computer Science. Ottawa-Carleton Institute for Computer Science, School of Computer Science, Carleton University, Ottawa, Ontario, Canada. August 2005. © Copyright Mohammad Abdul Mannan, 2005.

Reproduced with permission of the copyright owner. Further reproduction prohibited without permission.

Library and Archives Canada, Published Heritage Branch, 395 Wellington Street, Ottawa ON K1A 0N4, Canada. ISBN: 0-494-10118-0.

NOTICE: The author has granted a non-exclusive license allowing Library and Archives Canada to reproduce, publish, archive, preserve, conserve, communicate to the public by telecommunication or on the Internet, loan, distribute and sell theses worldwide, for commercial or non-commercial purposes, in microform, paper, electronic and/or any other formats. The author retains copyright ownership and moral rights in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission.

Tsung Documentation Release 1.7.0

Tsung Documentation, Release 1.7.0. Nicolas Niclausse, Oct 08, 2017.

Contents. 1 Introduction (1.1 What is Tsung?; 1.2 What is Erlang and why is it important for Tsung?; 1.3 Tsung background). 2 Features (2.1 Tsung main features; 2.2 HTTP related features; 2.3 WEBDAV related features; 2.4 Jabber/XMPP related features; 2.5 PostgreSQL related features; 2.6 MySQL related features; 2.7 Websocket related features; 2.8 AMQP related features; 2.9 MQTT related features; 2.10 LDAP related features; 2.11 Raw plugin related features; 2.12 Complete reports set; 2.13 Highlights). 3 Installation (3.1 Dependencies; 3.2 Compilation; 3.3 Configuration; 3.4 Running; 3.5 Feedback ...