What Is Computational Thinking? In Short, Computational Thinking Is a Systematic Way for Students to Learn Complex Problems

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Jet Development in Leading Log QCD

CALT-68-740 DoE RESEARCH AND DEVELOPMENT REPORT Jet Development in Leading Log QCD * by STEPHEN WOLFRAM, California Institute of Technology, Pasadena, California 91125. ABSTRACT A simple picture of jet development in QCD is described. Various applications are treated, including transverse spreading of jets, hadroproduced γ* p_T distributions, lepton energy spectra from heavy quark decays, soft parton multiplicities and hadron cluster formation. *Work supported in part by the U.S. Department of Energy under Contract No. DE-AC-03-79ER0068 and by a Feynman Fellowship. According to QCD, high-energy e+e- annihilation into hadrons is initiated by the production from the decaying virtual photon of a quark and an antiquark, each with invariant masses up to the c.m. energy √s in the original e+e- collision. The q and q̄ then travel outwards radiating gluons which serve to spread their energy and color into a jet of finite angle. After a time ~ 1/√s, the rate of gluon emissions presumably decreases roughly inversely with time, except for the logarithmic rise associated with the effective coupling constant (α_s(t) ~ 1/log(t/Λ²), where √t is the invariant mass of the radiating quark). Finally, when emissions have degraded the energies of the partons produced until their invariant masses fall below some critical μ_c (probably a few times Λ), the system of quarks and gluons begins to condense into the observed hadrons. The probability for a gluon to be emitted at times of O(1/√s) is small and may be estimated from the leading terms of a perturbation series in α_s(s). -

Edsger Dijkstra: the Man Who Carried Computer Science on His Shoulders

INFERENCE / Vol. 5, No. 3. Edsger Dijkstra: The Man Who Carried Computer Science on His Shoulders. Krzysztof Apt.

As it turned out, the train I had taken from Nijmegen to Eindhoven arrived late. To make matters worse, I was then unable to find the right office in the university building. When I eventually arrived for my appointment, I was more than half an hour behind schedule. The professor completely ignored my profuse apologies and proceeded to take a full hour for the meeting. It was the first time I met Edsger Wybe Dijkstra. At the time of our meeting in 1975, Dijkstra was 45 years old. The most prestigious award in computer science, the ACM Turing Award, had been conferred on him three years earlier. Almost twenty years his junior, I knew very little about the field; I had only learned what a flowchart was a couple of weeks earlier.

Edsger Dijkstra was born in Rotterdam in 1930. He described his father, at one time the president of the Dutch Chemical Society, as “an excellent chemist,” and his mother as “a brilliant mathematician who had no job.”1 In 1948, Dijkstra achieved remarkable results when he completed secondary school at the famous Erasmiaans Gymnasium in Rotterdam. His school diploma shows that he earned the highest possible grade in no less than six out of thirteen subjects. He then enrolled at the University of Leiden to study physics. In September 1951, Dijkstra’s father suggested he attend a three-week course on programming in Cambridge. It turned out to be an idea with far-reaching consequences. -

Reinventing Education Based on Data and What Works • Since 1955

Reinventing Education Based on Data and What Works • Since 1955

Carnegie Mellon is reinventing education and the way we think about leveraging technology through its study of the science of learning, an interdisciplinary effort that we’ve been tackling for more than 50 years with both computer scientists and psychologists. CMU's educational technology innovations have inspired numerous startup companies, which are helping students to learn more effectively and efficiently.

1955: Allen Newell (TPR ’57) joins Prof. Herbert Simon’s research team as a Ph.D. student.

1956: CMU creates one of the world’s first university computation centers. With Prof. Alan Perlis (MCS ’42) as its head, it is a joint undertaking of faculty from the business, psychology, electrical engineering and mathematics departments, and the precursor to computer science.

1956: Simon creates a “thinking machine”, enacting a mental process by breaking it down into its simplest steps. Later that year, the term “artificial intelligence” is coined by a small group including Newell and Simon.

1995: Prof. Kenneth R. Koedinger (HSS ’88,’90) and Anderson develop Practical Algebra Tutor. The program pioneers a new form of computer-aided instruction for high school students based on cognitive tutors.

1995: Prof. Jack Mostow (SCS ’81) develops Project LISTEN, an intelligent tutor that helps children learn to read. The National Science Foundation included Project LISTEN’s speech recognition system as one of its top 50 innovations from 1950-2000.

1995: The Center for Automated Learning and Discovery is formed, led by Prof. Thomas M. Mitchell.

1998: Spinoff company Carnegie Learning is founded by CMU scientists to expand

1956: Simon, Newell and J. -

The Advent of Recursion & Logic in Computer Science

The Advent of Recursion & Logic in Computer Science. MSc Thesis (Afstudeerscriptie) written by Karel Van Oudheusden, alias Edgar G. Daylight (born October 21st, 1977 in Antwerpen, Belgium), under the supervision of Dr Gerard Alberts, and submitted to the Board of Examiners in partial fulfillment of the requirements for the degree of MSc in Logic at the Universiteit van Amsterdam. Date of the public defense: November 17, 2009. Members of the Thesis Committee: Dr Gerard Alberts, Prof Dr Krzysztof Apt, Prof Dr Dick de Jongh, Prof Dr Benedikt Löwe, Dr Elizabeth de Mol, Dr Leen Torenvliet.

“We are reaching the stage of development where each new generation of participants is unaware both of their overall technological ancestry and the history of the development of their speciality, and have no past to build upon.” J.A.N. Lee in 1996 [73, p.54]

“To many of our colleagues, history is only the study of an irrelevant past, with no redeeming modern value – a subject without useful scholarship.” J.A.N. Lee [73, p.55]

“[E]ven when we can't know the answers, it is important to see the questions. They too form part of our understanding. If you cannot answer them now, you can alert future historians to them.” M.S. Mahoney [76, p.832]

“Only do what only you can do.” E.W. Dijkstra [103, p.9]

Abstract. The history of computer science can be viewed from a number of disciplinary perspectives, ranging from electrical engineering to linguistics. As stressed by the historian Michael Mahoney, different ‘communities of computing’ had their own views towards what could be accomplished with a programmable computing machine. -

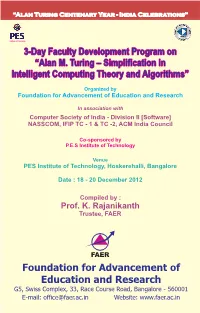

Alan M. Turing – Simplification in Intelligent Computing Theory and Algorithms

“Alan Turing Centenary Year - India Celebrations” 3-Day Faculty Development Program on “Alan M. Turing – Simplification in Intelligent Computing Theory and Algorithms” Organized by Foundation for Advancement of Education and Research In association with Computer Society of India - Division II [Software], NASSCOM, IFIP TC - 1 & TC -2, ACM India Council Co-sponsored by P.E.S Institute of Technology Venue PES Institute of Technology, Hoskerehalli, Bangalore Date : 18 - 20 December 2012 Compiled by : Prof. K. Rajanikanth Trustee, FAER Foundation for Advancement of Education and Research G5, Swiss Complex, 33, Race Course Road, Bangalore - 560001 E-mail: [email protected] Website: www.faer.ac.in PREFACE Alan Mathison Turing was born on June 23rd 1912 in Paddington, London. Alan Turing was a brilliant original thinker. He made original and lasting contributions to several fields, from theoretical computer science to artificial intelligence, cryptography, biology, philosophy etc. He is generally considered as the father of theoretical computer science and artificial intelligence. His brilliant career came to a tragic and untimely end in June 1954. In 1945 Turing was awarded the O.B.E. for his vital contribution to the war effort. In 1951 Turing was elected a Fellow of the Royal Society. -

Parton and Hadron Production in e+e- Annihilation. Stephen Wolfram, California Institute of Technology, Pasadena, California 91125

PARTON AND HADRON PRODUCTION IN e+e- ANNIHILATION * Stephen Wolfram, California Institute of Technology, Pasadena, California 91125. Abstract: The production of showers of partons in e+e- annihilation final states is described according to QCD, and the formation of hadrons is discussed. Résumé (translated from French): The production of parton showers forming the final state of e+e- annihilation is described within QCD, along with their transformation into hadrons. *Work supported in part by the U.S. Department of Energy under contract no. DE-AC-03-79ER0068. Introduction. In these notes, I discuss some attempts to describe the development of hadron final states in e+e- annihilation events using QCD. A few features (barely visible at available energies) of this development are amenable to a precise and formal analysis in QCD by means of perturbation theory. For the most part, however, existing theoretical methods are quite inadequate; one must therefore simply try to identify the dominant physical phenomena to be expected from QCD, and make estimates of their effects, with the hope that results so obtained will provide a good approximation to eventual exact calculations. In so far as such estimates are necessary, precise quantitative tests of QCD are precluded. On the other hand, if QCD is assumed correct, then existing experimental data may be used to investigate its behavior in regions not yet explored by theoretical means. -

The Problem of Distributed Consensus: a Survey Stephen Wolfram*

The Problem of Distributed Consensus: A Survey. Stephen Wolfram* A survey is given of approaches to the problem of distributed consensus, focusing particularly on methods based on cellular automata and related systems. A variety of new results are given, as well as a history of the field and an extensive bibliography. Distributed consensus is of current relevance in a new generation of blockchain-related systems. In preparation for a conference entitled “Distributed Consensus with Cellular Automata and Related Systems” that we’re organizing with NKN (= “New Kind of Network”) I decided to explore the problem of distributed consensus using methods from A New Kind of Science (yes, NKN “rhymes” with NKS) as well as from the Wolfram Physics Project. A Simple Example. Consider a collection of “nodes”, each one of two possible colors. We want to determine the majority or “consensus” color of the nodes, i.e. which color is the more common among the nodes. A version of this document with immediately executable code is available at writings.stephenwolfram.com/2021/05/the-problem-of-distributed-consensus. Originally published May 17, 2021. *Email: [email protected] One obvious method to find this “majority” color is just sequentially to visit each node, and tally up all the colors. But it’s potentially much more efficient if we can use a distributed algorithm, where we’re running computations in parallel across the various nodes. One possible algorithm works as follows. First connect each node to some number of neighbors. For now, we’ll just pick the neighbors according to the spatial layout of the nodes. The algorithm works in a sequence of steps, at each step updating the color of each node to be whatever the “majority color” of its neighbors is. -
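The neighbor-majority update described in this excerpt can be sketched in a few lines of Python. This is only an illustrative sketch, not the survey's own code: the ring layout, the `radius` parameter, the choice to count each node in its own neighborhood (so the vote is never tied), and the function names `majority_step` and `run_until_stable` are all assumptions made here.

```python
from typing import List


def majority_step(colors: List[int], radius: int = 1) -> List[int]:
    """One synchronous update: every node takes the majority color (0 or 1)
    of the window made of itself and `radius` neighbors on each side.
    Assumption: nodes sit on a ring, so indices wrap around."""
    n = len(colors)
    updated = []
    for i in range(n):
        window = [colors[(i + d) % n] for d in range(-radius, radius + 1)]
        # The window has odd size 2*radius + 1, so there is never a tie.
        updated.append(1 if 2 * sum(window) > len(window) else 0)
    return updated


def run_until_stable(colors: List[int], radius: int = 1, max_steps: int = 100) -> List[int]:
    """Repeat the update until nothing changes or max_steps is reached."""
    for _ in range(max_steps):
        nxt = majority_step(colors, radius)
        if nxt == colors:
            break
        colors = nxt
    return colors


if __name__ == "__main__":
    print(run_until_stable([1, 1, 0, 1, 1]))        # isolated minority node is absorbed
    print(run_until_stable([1, 1, 1, 0, 0, 0, 0]))  # blocks of equal color can freeze
```

Under these assumptions an isolated minority node is absorbed in one step, but contiguous blocks of each color can freeze in place, so a purely local majority rule does not by itself guarantee that every node ends up with the global majority color.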

Purchase a Nicer, Printable PDF of This Issue. Or Nicest of All, Subscribe To

Mel says, “This is swell! But it’s not ideal—it’s a free, grainy PDF.” Attain your ideals! Purchase a nicer, printable PDF of this issue. Or nicest of all, subscribe to the paper version of the Annals of Improbable Research (six issues per year, delivered to your doorstep!). To purchase pretty PDFs, or to subscribe to splendid paper, go to http://www.improbable.com/magazine/ ANNALS OF Special Issue THE 2009 IG® NOBEL PRIZES Panda poo spinoff, Tequila-based diamonds, 11> Chernobyl-inspired bra/mask… NOVEMBER|DECEMBER 2009 (volume 15, number 6) $6.50 US|$9.50 CAN 027447088921 The journal of record for inflated research and personalities Annals of © 2009 Annals of Improbable Research Improbable Research ISSN 1079-5146 print / 1935-6862 online AIR, P.O. Box 380853, Cambridge, MA 02238, USA “Improbable Research” and “Ig” and the tumbled thinker logo are all reg. U.S. Pat. & Tm. Off. 617-491-4437 FAX: 617-661-0927 www.improbable.com [email protected] EDITORIAL: [email protected] The journal of record for inflated research and personalities Co-founders Commutative Editor VP, Human Resources Circulation (Counter-clockwise) Marc Abrahams Stanley Eigen Robin Abrahams James Mahoney Alexander Kohn Northeastern U. 
Research Researchers Webmaster Editor Associative Editor Kristine Danowski, Julia Lunetta Marc Abrahams Mark Dionne Martin Gardiner, Tom Gill, [email protected] Mary Kroner, Wendy Mattson, General Factotum (web) [email protected] Dissociative Editor Katherine Meusey, Srinivasan Jesse Eppers Rose Fox Rajagopalan, Tom Roberts, Admin Tom Ulrich Technical Eminence Grise Lisa Birk Psychology Editor Dave Feldman Robin Abrahams Design and Art European Bureau Geri Sullivan Art Director emerita Kees Moeliker, Bureau Chief Contributing Editors PROmote Communications Peaco Todd Rotterdam Otto Didact, Stephen Drew, Ernest Lois Malone Webmaster emerita [email protected] Ersatz, Emil Filterbag, Karen Rich & Famous Graphics Steve Farrar, Edinburgh Desk Chief Hopkin, Alice Kaswell, Nick Kim, Amy Gorin Erwin J.O. -

Mount Litera Zee School Grade-VIII G.K Computer and Technology Read and Write in Notebook: 1

Mount Litera Zee School Grade-VIII G.K Computer and Technology. Read and write in notebook:
1. Who is called the ‘Father of Computer’? a. Charles Babbage b. Bill Gates c. Blaise Pascal d. Mark Zuckerberg
2. Full form of virus is? a. Visual Information Resource Under Seize b. Virtual Information Resource Under Size c. Vital Information Resource Under Seize d. Virtue Information Resource Under Seize
3. How many MB (Mega Byte) make one GB (Giga Byte)? a. 1025 MB b. 1030 MB c. 1020 MB d. 1024 MB
4. Internet was developed in _______. a. 1973 b. 1993 c. 1983 d. 1963
5. Which was the first virus detected on ARPANET, the forerunner of the internet, in the early 1970s? a. Exe File b. Creeper Virus c. Peeper Virus d. Trojan horse
6. Who is known as the Human Computer of India? a. Shakunthala Devi b. Nandan Nilekani c. Ajith Balakrishnan d. Manish Agarwal
7. When was the first smart phone launched? a. 1992 b. 1990 c. 1998 d. 2000
8. Which one of the following was the first search engine used? a. Google b. Archie c. AltaVista d. WAIS
9. Who is known as father of Internet? a. Alan Perlis b. Jean E. Sammet c. Vint Cerf d. Steve Lawrence
10. What is the full form of GOOGLE? a. Global Orient of Oriented Group Language of Earth b. Global Organization of Oriented Group Language of Earth c. Global Orient of Oriented Group Language of Earth d. Global Oriented of Organization Group Language of Earth
11. Who developed Java Programming Language? a. -

Cellular Automaton

Cellular automaton. A cellular automaton is a discrete model studied in computability theory, mathematics, physics, complexity science, theoretical biology and microstructure modeling. Cellular automata are also called cellular spaces, tessellation automata, homogeneous structures, cellular structures, tessellation structures, and iterative arrays.[2] A cellular automaton consists of a regular grid of cells, each in one of a finite number of states, such as on and off (in contrast to a coupled map lattice). The grid can be in any finite number of dimensions. For each cell, a set of cells called its neighborhood is defined relative to the specified cell. An initial state (time t = 0) is selected by assigning a state for each cell. A new generation is created (advancing t by 1), according to some fixed rule (generally, a mathematical function) that determines the new state of each cell in terms of the current state of the cell and the states of the cells in its neighborhood. Typically, the rule for updating the state of cells is the same for each cell and does not change over time, and is applied to the whole grid simultaneously, though exceptions are known, such as the stochastic cellular automaton and asynchronous cellular automaton. The concept was originally discovered in the 1940s by Stanislaw Ulam and John von Neumann while they were contemporaries at Los Alamos National Laboratory. While studied by some throughout the 1950s and 1960s, it was not until the 1970s and Conway's Game of Life, a two-dimensional cellular automaton, that interest in the subject expanded beyond academia. -
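As a small illustration of the definition in this excerpt (a grid of cells, a neighborhood, and one fixed rule applied to every cell simultaneously), here is a minimal Python sketch of a one-dimensional, two-state automaton. The excerpt does not prescribe any of these choices: the radius-1 neighborhood, the wrap-around boundary, the use of Wolfram's 0-255 rule numbering, and the function names `step` and `evolve` are assumptions made for the example.

```python
from typing import List


def step(cells: List[int], rule: int) -> List[int]:
    """Advance a 1D binary cellular automaton by one generation.
    Each cell's new state depends on (left, center, right); `rule` is the
    Wolfram rule number whose bit k gives the output for neighborhood k.
    Assumption: periodic boundary, so the grid wraps around."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right  # neighborhood as a number 0..7
        new_cells.append((rule >> index) & 1)
    return new_cells


def evolve(cells: List[int], rule: int, generations: int) -> List[List[int]]:
    """Return the list of generations, starting with the initial state (t = 0)."""
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    start = [0, 0, 0, 1, 0, 0, 0]
    for row in evolve(start, rule=90, generations=3):
        print("".join("#" if c else "." for c in row))
```

All new states are computed from the old list before any cell is overwritten, which is what makes the update simultaneous; an asynchronous variant would instead update cells in place.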

A New Kind of Science

Wolfram|Alpha, A New Kind of Science, by Bruce Walters. April 18, 2011. Research Paper for Spring 2012, INFSY 556 Data Warehousing, Professor Rhoda Joseph, Ph.D., Penn State University at Harrisburg. Abstract: The core mission of Wolfram|Alpha is “to take expert-level knowledge, and create a system that can apply it automatically whenever and wherever it’s needed,” says Stephen Wolfram, the technology's inventor (Wolfram, 2009-02). This paper examines Wolfram|Alpha in its present form. Introduction: As the internet became available to the world's mass population, British computer scientist Tim Berners-Lee provided “hypertext” as a means for its general consumption, and coined the phrase World Wide Web. The World Wide Web is often referred to simply as the Web, and Web 1.0 transformed how we communicate. Now, with Web 2.0 firmly entrenched in our being and going with us wherever we go, can 3.0 be far behind? Web 3.0, the semantic web, is a web that endeavors to understand meaning rather than syntactically precise commands (Andersen, 2010). Enter Wolfram|Alpha. Wolfram|Alpha, officially launched in May 2009, is a rapidly evolving “computational search engine,” but rather than searching pre-existing documents, it actually computes the answer, every time (Andersen, 2010). Wolfram|Alpha relies on a knowledgebase of data in order to perform these computations, which, despite efforts to date, is still only a fraction of the world’s knowledge. Scientist, author, and inventor Stephen Wolfram refers to the world’s knowledge this way: “It’s a sad but true fact that most data that’s generated or collected, even with considerable effort, never gets any kind of serious analysis” (Wolfram, 2009-02). -

Halting Problems: a Historical Reading of a Paradigmatic Computer Science Problem

PROGRAMme Autumn workshop 2018, Bertinoro. Halting problems: a historical reading of a paradigmatic computer science problem. Liesbeth De Mol (1) and Edgar G. Daylight (2). (1) CNRS, UMR 8163 Savoirs, Textes, Langage, Université de Lille, [email protected] (2) Siegen University, [email protected]

Introduction (1)
“The improbable symbolism of Peano, Russell, and Whitehead, the analysis of proofs by flowcharts spearheaded by Gentzen, the definition of computability by Church and Turing, all inventions motivated by the purest of mathematics, mark the beginning of the computer revolution. Once more, we find a confirmation of the sentence Leonardo jotted despondently on one of those rambling sheets where he confided his innermost thoughts: ‘Theory is the captain, and application the soldier.’” (Metropolis, Howlett and Rota, 1980)

Introduction (2)
Why is this ‘improbable’ symbolism considered relevant in computing? ⇒ Different non-excluding answers...
1. (the socio-historical answers) studying social and institutional developments in computing to understand why logic, or, theory, was/is considered to be the captain (or not), e.g. need for logic framed in CS's struggle for disciplinary identity and independence (cf. (Tedre 2015))
2. (the philosophico-historical answers) studying history of computing on a more technical level to understand why and how logic (or theory) are introduced in the computing practices – question: is there something to computing which makes logic epistemologically relevant to it?
⇒ significance of combining the different answers (and the respective approaches they result in)
⇒ In this talk: focus on paradigmatic “problem” of (theoretical) computer science – the halting problem